The Unspoken Reality: Why AI Co-pilots Aren't Always 'Plug-and-Play' for Indie Makers

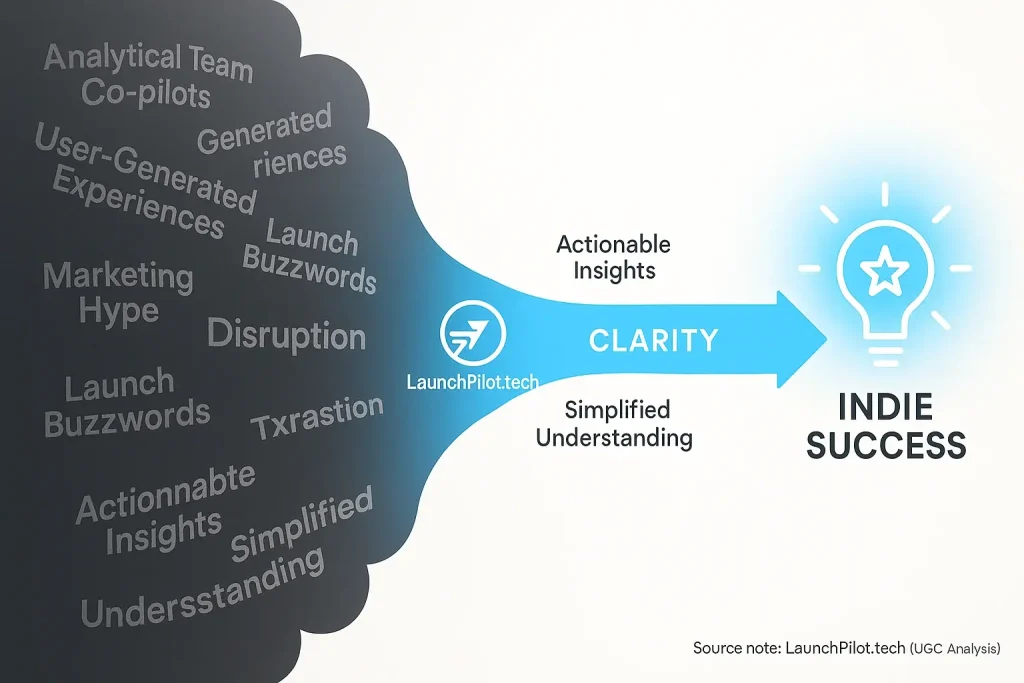

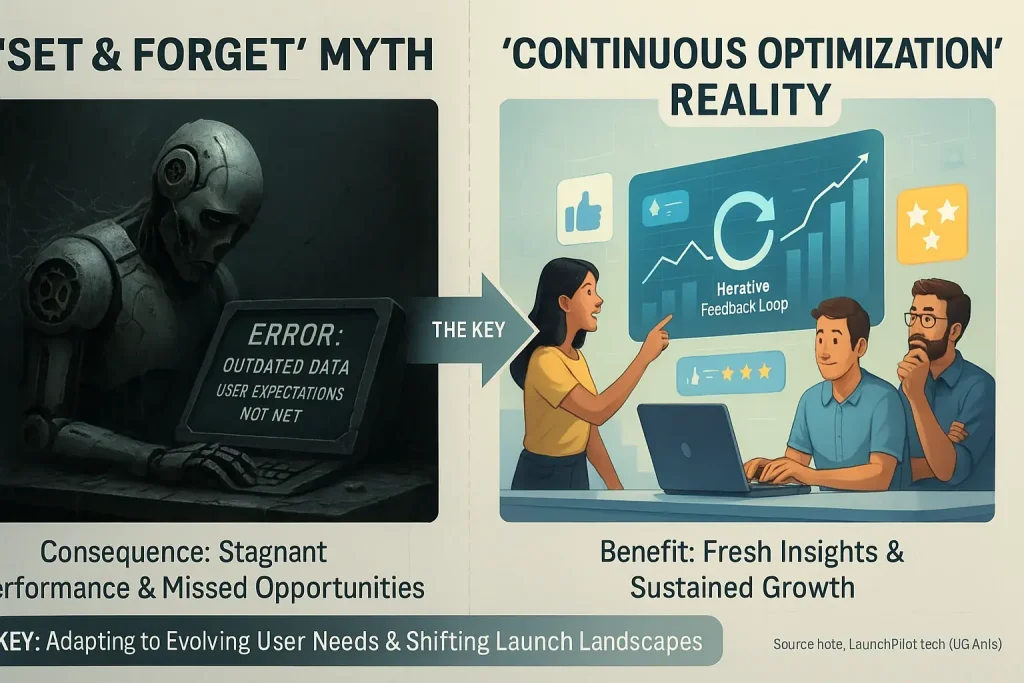

Ever felt your new AI co-pilot promised the moon? It delivered dust instead. You are not alone. AI tools offer strong capabilities. Yet their 'plug-and-play' promise frequently clashes with real-world setup for indie makers, a frustration widely shared.

The unspoken truth? Implementation challenges run deeper than advertised. It is not merely about listed features. Indie makers constantly navigate unexpected quirks and hidden complexities. These small frustrations quickly compound, creating significant roadblocks observed across many user discussions.

Imagine spending hours setting up a co-pilot’s vital automation. The tool then sends confusing messages to your first users. Or it fails to connect with your essential email list. This experience, often reported by indie makers, highlights typical 'day-one' turbulence. It is not about individual failure.

LaunchPilot.tech has sifted through immense user-generated content. We examined countless forum posts and detailed indie maker stories. This guide emerges from that synthesis, a practical field manual. It empowers you with the community's hard-won wisdom. Our analysis helps fix common issues, keeping your launch on track.

AI Co-pilot Problem Solver: What's Your Biggest Headache?

Select the main issue you're facing with your AI Launch Co-pilot, and we'll offer some quick, community-sourced tips.

You pinpointed your primary frustration above. Great. You will see every problem has a community-tested workaround. This tool offers more than diagnosis. It immediately arms you with actionable first steps.

The tool provides quick fixes. Diving into detailed sections below gives you full context. You will find more advanced solutions. These sections also share 'unspoken truths' from the indie community. Consider them your expanded field guide for each specific challenge.

When AI Goes Off-Script: Fixing Poor Output Quality (UGC-Proven Prompt Hacks)

You asked your AI co-pilot for compelling launch copy. It produced something generic. Bland. Or just plain wrong. Sound familiar? This frustration echoes through many indie maker forums. The old saying, "garbage in, garbage out," applies perfectly to AI content generation tools. Your output quality directly reflects your input quality.

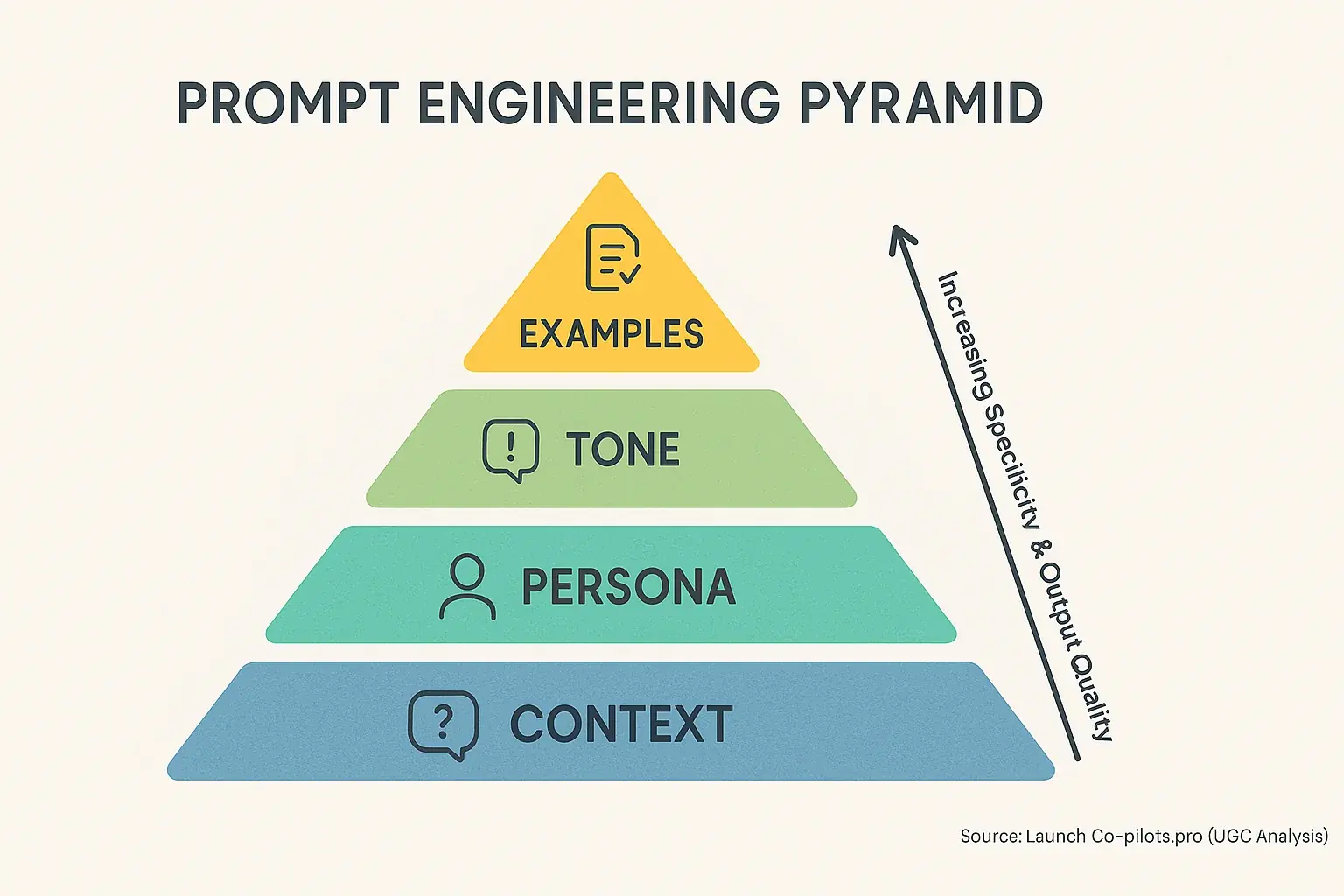

Many indie makers discover a crucial insight about prompts. They are not just simple instructions; they are ongoing conversations with your AI. The collective wisdom from the indie launch community consistently shows the secret is not just what you ask. How you ask dramatically changes AI output. Users often report that providing specific examples of desired output transforms their results. Defining a clear persona for the AI to adopt enhances relevance. Even giving negative prompts, like "do NOT include marketing fluff," sharpens the AI's focus. One indie dev, struggling with bland email drafts from his AI co-pilot, started feeding it examples of his own quirky, direct brand voice. The difference? Night and day, according to his posts in the community forums.
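The three prompt ingredients described above (a persona, examples of the desired voice, and negative instructions) can be combined in a small helper. This is only a minimal sketch: `build_prompt` and its parameter names are hypothetical, the sample task and example sentence are invented for illustration, and the resulting text would still need to be pasted or sent into whichever co-pilot you use.

```python
def build_prompt(task, persona, examples, avoid):
    """Assemble one prompt string from a persona, sample outputs, and negative instructions."""
    lines = [f"You are {persona}.", "", f"Task: {task}", ""]
    if examples:
        # Showing the tool real samples of your voice, as the community tip suggests.
        lines.append("Match the voice of these examples:")
        for i, example in enumerate(examples, start=1):
            lines.append(f"Example {i}: {example}")
        lines.append("")
    for rule in avoid:
        # Negative prompts: state plainly what must be left out.
        lines.append(f"Do NOT include {rule}.")
    return "\n".join(lines).strip()


# Illustrative values only; replace them with your own product and voice.
prompt = build_prompt(
    task="Write a three-sentence launch email for a note-taking app.",
    persona="a solo founder with a quirky, direct brand voice",
    examples=["We built this because sticky notes kept falling off our monitor."],
    avoid=["marketing fluff"],
)
print(prompt)
```

Keeping the pieces as separate arguments makes it easy to change one ingredient at a time and see which one actually improves the output.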

Beyond initial prompt refinement, iterative feedback offers another powerful strategy to fix poor output quality. Many successful indies share this tip, a gem from aggregated user experiences. Instead of just hitting 'regenerate' when AI output misses the mark, edit the AI's first attempt. Then, tell the AI precisely what you changed and why. This 'human-in-the-loop' approach, a term popular in user discussions, turns a generic first draft into a polished, launch-ready asset. It is a proven method for better content generation.
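One way to tell the tool "what you fixed and why" is to generate the list of changes automatically and attach your reasons. The sketch below uses Python's standard `difflib` module for the comparison; the function name `revision_feedback`, the message wording, and the sample drafts are assumptions made for illustration, not a format any particular co-pilot requires.

```python
import difflib


def revision_feedback(draft, edited, reasons):
    """Build a follow-up message listing what changed in the draft and why."""
    diff = list(
        difflib.unified_diff(draft.splitlines(), edited.splitlines(), lineterm="", n=0)
    )
    # The first two diff lines are file headers; keep only removed/added lines.
    changes = [line for line in diff[2:] if line.startswith(("+", "-"))]
    parts = ["I edited your draft. Lines marked - were removed, lines marked + were added:"]
    parts.extend(changes)
    parts.append("Why I made these changes:")
    parts.extend(f"* {reason}" for reason in reasons)
    parts.append("Apply the same preferences to future drafts.")
    return "\n".join(parts)


# Illustrative drafts only.
ai_draft = "Our revolutionary app changes everything.\nTry it today."
my_edit = "Our app keeps your notes in one place.\nTry it today."
message = revision_feedback(ai_draft, my_edit, ["Hype words do not fit my brand voice."])
print(message)
```

Unchanged lines are left out of the message, so the feedback stays focused on the edits you actually made.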

The Integration Maze: Navigating AI Co-pilot Connectivity Issues (UGC Workarounds That Actually Work)

Your new AI co-pilot hums along. But it stubbornly refuses to connect with your email provider. Or your CRM. This integration maze? It's a common roadblock frustrating many indie makers. Companies often promise seamless connections. The reality, as user feedback highlights, can be a tangled mess of API keys and permissions.

Here's an unspoken truth, one we've pieced together from countless indie discussions. The simplest solution often isn't a direct tool-to-tool link. Many indie makers turn to dedicated automation tools instead. These tools become unsung heroes. Think Zapier. Or Make.com. These platforms act as crucial translators. They bridge gaps where native co-pilot integrations fall short. One user shared how they used Zapier to connect a niche AI content generator to their Webflow site. This move saved hours of manual copy-pasting.

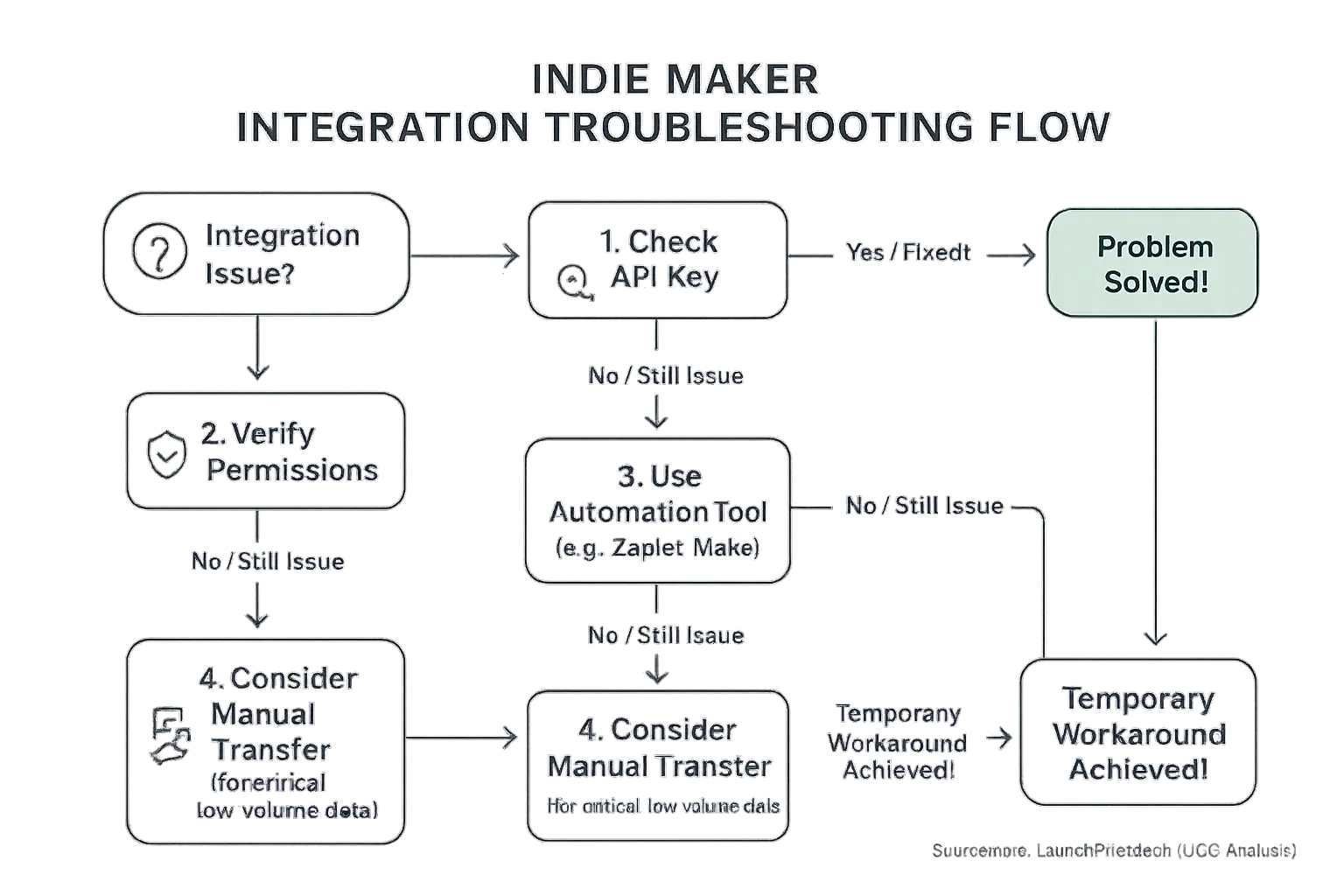

Before you pull out your hair, try this first. Double-check those API keys. Systematically verify all permission settings too. It sounds almost too basic. Yet, community forums reveal a misplaced character or an unchecked box frequently causes these integration failures. For critical, low-volume data, a temporary manual transfer might even be faster. Seriously. This workaround beats debugging a complex integration for hours, a sentiment echoed by many busy indies.
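Because a misplaced character is such a common culprit, a quick sanity check on a pasted key can rule out the obvious mistakes before deeper debugging. The following is a minimal sketch under stated assumptions: `check_api_key` is a hypothetical helper, the sample keys are made up, and the checks cover generic copy-paste errors only. Real key formats differ by provider, so passing these checks does not prove a key is valid.

```python
def check_api_key(raw_key):
    """Return a list of likely copy-paste problems found in an API key string."""
    problems = []
    if raw_key != raw_key.strip():
        problems.append("leading or trailing whitespace")
    core = raw_key.strip()
    if not core:
        problems.append("key is empty")
        return problems
    if core[0] in "\"'" or core[-1] in "\"'":
        problems.append("surrounding quote characters")
    if any(ch.isspace() for ch in core):
        problems.append("whitespace inside the key")
    if not core.isascii():
        # Often a sign of smart quotes or characters mangled during copying.
        problems.append("non-ASCII characters")
    return problems


# Made-up keys for illustration.
print(check_api_key("sk_test_12345"))
print(check_api_key(" sk_test_12345\n"))
```

An empty list means none of these specific problems were found; permission settings still need to be verified in the provider's own dashboard.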

The Hidden Drain: Unmasking Unexpected AI Co-pilot Costs (Indie Maker Warnings from UGC)

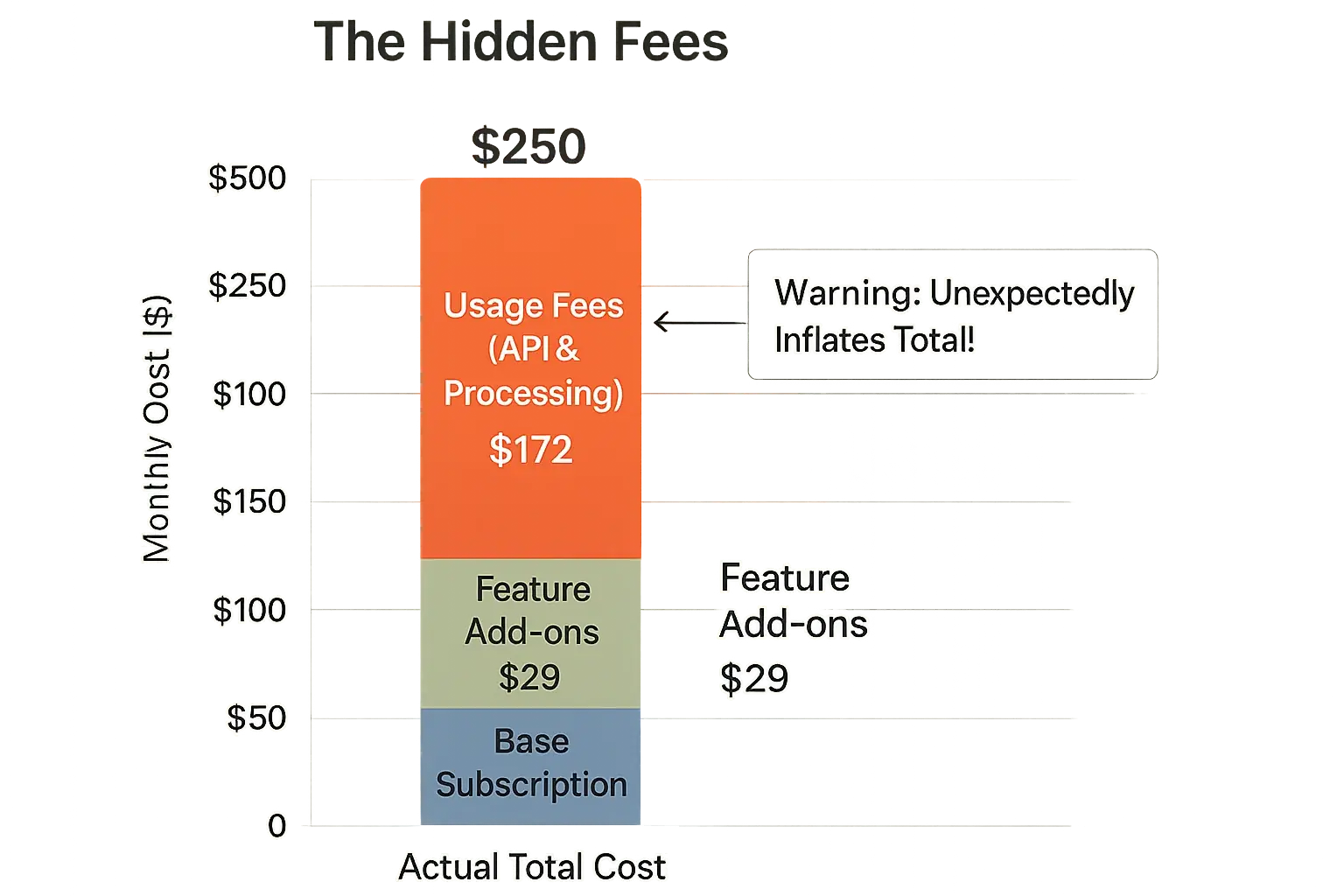

You signed up for a seemingly affordable AI co-pilot. Then BAM! Your bill is double what you expected. Unexpected costs are a silent killer for indie budgets. Our synthesis of indie maker feedback shows pricing models can be very complex. They often hide usage-based fees or sudden add-on charges that inflate your expenses.

Many indie makers discover a harsh truth about 'free' tiers. 'Freemium' frequently translates to 'pay-much-more-later'. This happens once you hit a restrictive usage limit. Or you suddenly need a critical feature locked behind a paywall. Users consistently warn about the steep jump from a free plan to a surprisingly costly paid tier. Community discussions highlight how seemingly small per-use charges can accumulate rapidly. One founder shared their painful experience: the bill for their 'cheap' AI tool skyrocketed after a successful, but data-intensive, viral social media campaign.

So, how can you protect your wallet? Monitor your usage dashboards like a hawk. This is a common tip from seasoned users. Set spending alerts if the co-pilot tool offers them. Prompt optimization is not just for output quality; it is a budget hack. Shorter, more direct prompts often consume fewer resources and therefore cost less, a pattern observed across many platforms. Always leverage free trials strategically. The community advises testing your actual, real-world usage patterns thoroughly before committing to any paid subscription.
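The effect of prompt length on a usage-based bill is simple arithmetic, and it is worth running with your own numbers before committing to a plan. The sketch below is illustrative only: the function names, the "units" (which might be tokens, credits, or words depending on the tool), the price, and the budget are all hypothetical placeholders. Take real figures from your tool's pricing page and usage dashboard.

```python
def estimate_monthly_cost(runs_per_day, units_per_run, price_per_1k_units, days=30):
    """Estimate a monthly bill for usage-based pricing."""
    return runs_per_day * days * units_per_run / 1000 * price_per_1k_units


def over_budget(estimated_cost, budget, warn_ratio=0.8):
    """Return True once the estimate reaches the warning share of the budget."""
    return estimated_cost >= budget * warn_ratio


# Hypothetical numbers: 50 runs a day at 0.02 per 1,000 units, 40 monthly budget.
long_prompt_cost = estimate_monthly_cost(50, 1200, 0.02)
short_prompt_cost = estimate_monthly_cost(50, 400, 0.02)

print(f"Long prompts:  {long_prompt_cost:.2f} per month")
print(f"Short prompts: {short_prompt_cost:.2f} per month")
print("Alert on long prompts:", over_budget(long_prompt_cost, budget=40))
print("Alert on short prompts:", over_budget(short_prompt_cost, budget=40))
```

The warning ratio plays the role of a spending alert: it fires before the budget is fully used, leaving time to trim prompts or reduce run frequency.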

Climbing the AI Mountain: Taming the Steep Learning Curve (UGC Tips for Non-Techie Indies)

You have your new AI co-pilot. Excitement builds. Then, reality hits hard. This tool feels like it requires a PhD for simple tasks. That steep learning curve? It is a common wall for many indie makers. The wall feels even higher if you are not a tech wizard. Complex interfaces often frustrate users. Technical jargon frequently confuses them. We understand.

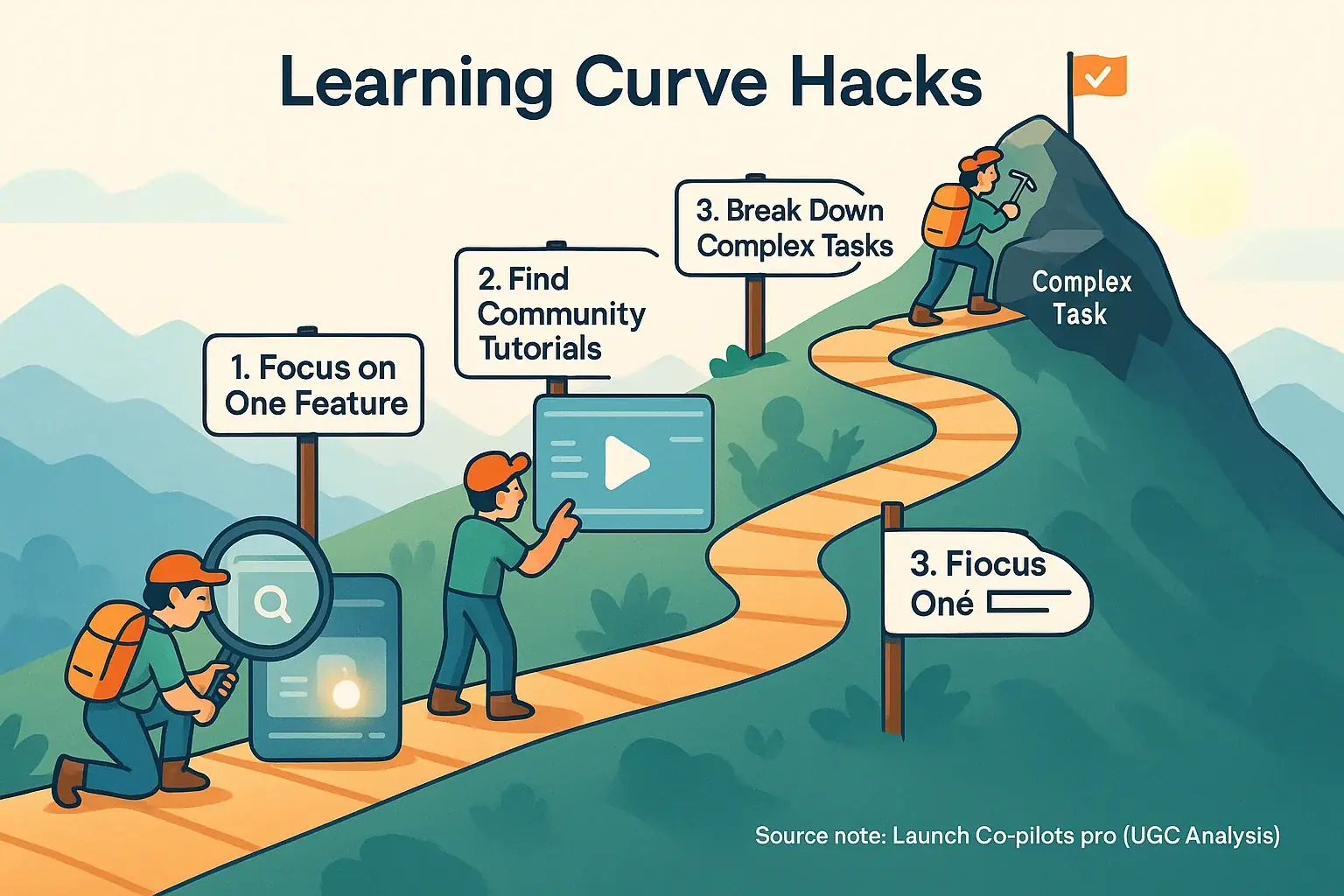

What is the unspoken truth about mastering these platforms? It is rarely about memorizing dense manuals. Many indie makers discover a smarter path. Focusing on one core workflow first delivers results. Master that single process. Then, you can expand. This method is far more effective than attempting to learn everything simultaneously. Community-created tutorials often show you exactly what to click. These practical guides, frequently from fellow indies, slice through the official jargon. Imagine this: one founder felt completely lost in his new tool's dashboard. He then found a short YouTube video by another solopreneur. That video perfectly demystified his specific, urgent use case. His perspective shifted instantly.

So, how can you simplify this journey? Break down daunting features. Turn them into small, achievable steps. Definitely celebrate those small wins. Each successful automated email builds crucial momentum. Every generated social post reinforces your growing skill. Remember the ultimate objective here. You need this tool to serve your specific launch goals. Becoming a platform guru is not the mission.

Ghost in the Machine: Taming AI 'Hallucinations' (Real Indie Maker Fixes for Factual Errors)

Your AI co-pilot crafts a perfect press release. One problem. It invented a quote from a non-existent CEO. Welcome to the world of AI 'hallucinations'. Here, facts can become alarmingly fuzzy. Our examination of aggregated user experiences reveals these AI inaccuracies pose a critical risk. Your launch credibility hangs in the balance.

Many indie makers have learned a hard truth. The AI's confidence does not equal accuracy. Users frequently report that AI tools, when lacking specific data, will fill gaps. They invent plausible-sounding but entirely fabricated details. One founder, according to community reports, found his AI-generated product description included features his product did not even possess yet. This is a common pitfall.

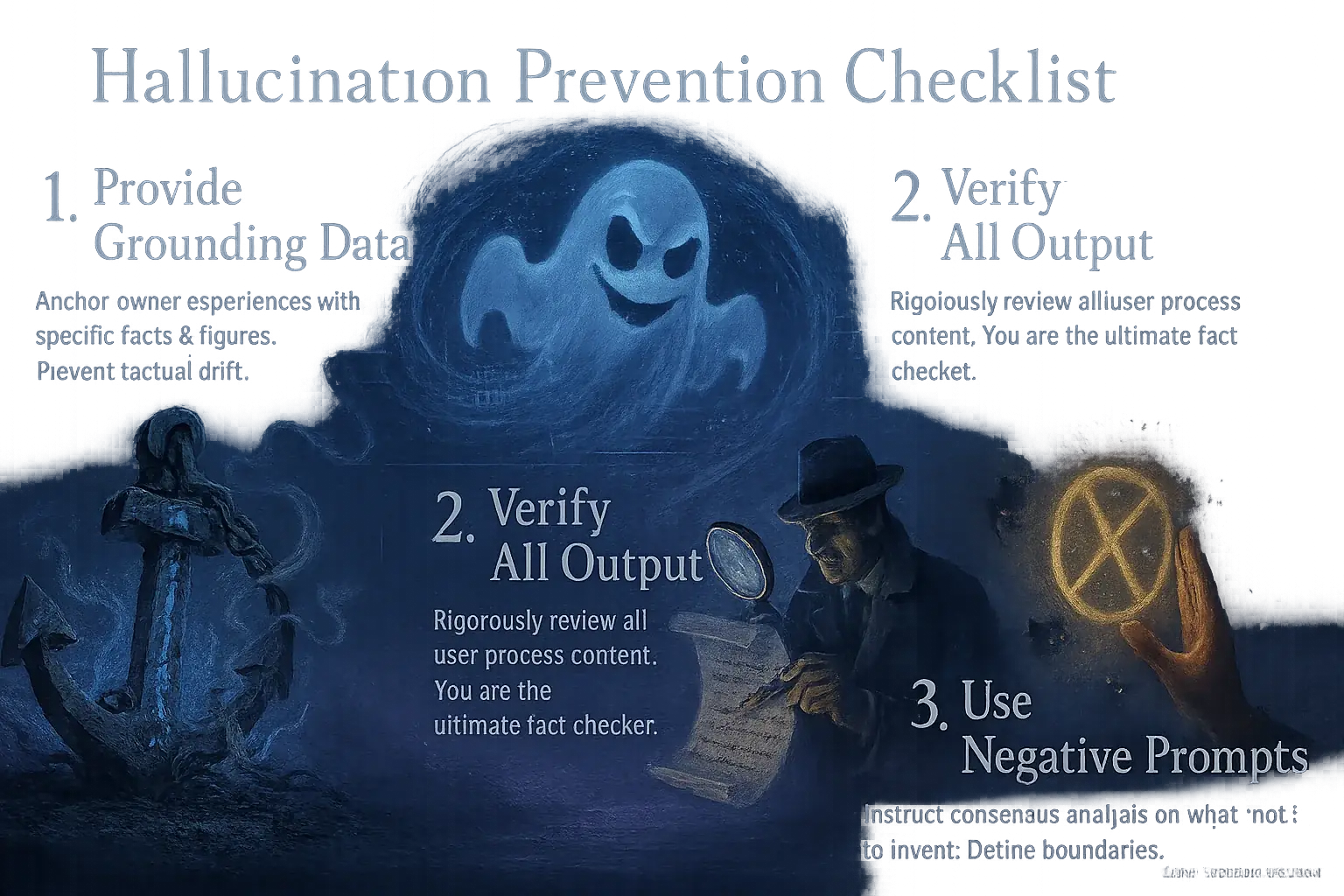

So, how do you tame these AI 'hallucinations'? Always provide the AI with grounding data. Specific facts, figures, and crucial context form this foundation. Instruct the AI to adhere to these inputs. Critically, never publish AI-generated content without rigorous human review. You are the ultimate fact-checker. Consider negative prompting. Explicitly tell the AI what not to invent, such as, "Do not include fictional user testimonials." This helps reduce factual errors.
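Grounding and review can be partly supported with a small script: one function that places your facts and the negative instruction into the prompt, and another that flags quoted passages in the output that do not appear in those facts. This is a rough sketch, not a fact-checker. The function names, the sample facts, and the sample output are all invented for illustration, the check only looks at text inside straight double quotes, and it does not replace reading the draft yourself.

```python
import re


def build_grounded_prompt(task, facts):
    """Combine a task with the only facts the tool is allowed to use."""
    fact_lines = "\n".join(f"- {fact}" for fact in facts)
    return (
        f"{task}\n\n"
        "Use ONLY the facts listed below. If a detail is not listed, leave it out.\n"
        "Do not include fictional user testimonials.\n\n"
        f"Facts:\n{fact_lines}"
    )


def unverified_quotes(output, facts):
    """Return quoted passages from the output that are absent from the supplied facts."""
    source = " ".join(facts).lower()
    quotes = re.findall(r'"([^"]+)"', output)
    return [quote for quote in quotes if quote.lower() not in source]


# Invented facts and output for illustration.
facts = [
    'Founder Dana Lee said: "We built it in six weeks."',
    "Launch date is 3 March.",
]
draft = 'Dana Lee said, "We built it in six weeks." The CEO added, "This changes everything."'

print(build_grounded_prompt("Write a short press release.", facts))
print(unverified_quotes(draft, facts))
```

Any quote the second function returns is a candidate fabrication and should be traced to a real source or deleted before publishing.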

Your AI Co-pilot: A Powerful Ally, Not a Perfect Robot (Embrace the Troubleshooting Journey)

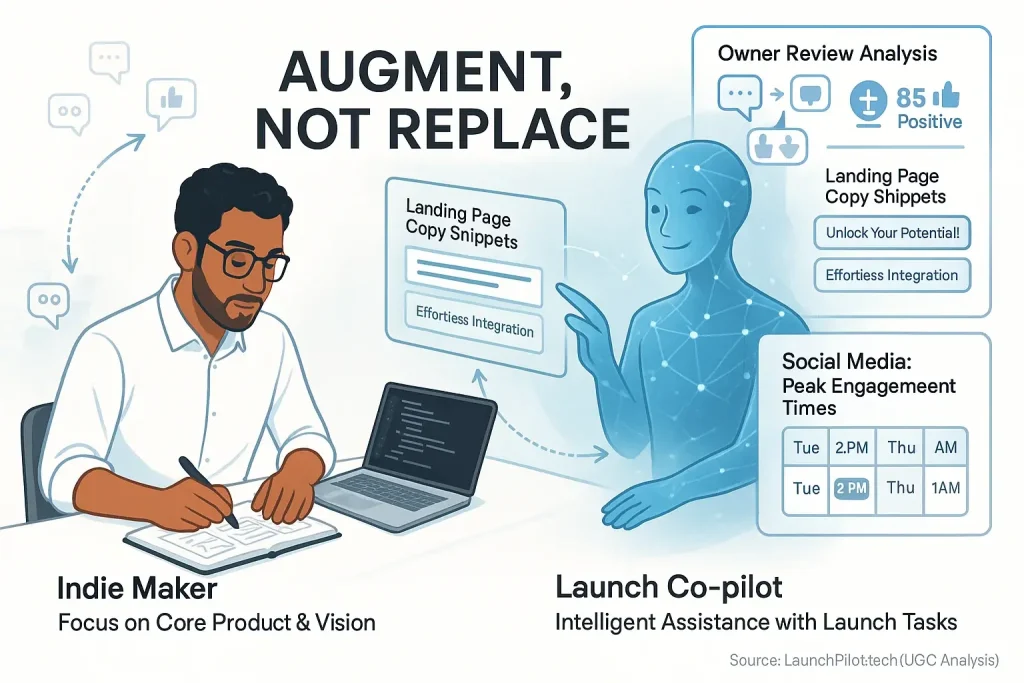

AI co-pilots are game-changers for many indie makers. Our aggregated user experiences clearly show this. But they are not magic wands. These powerful allies demand human oversight. They sometimes require troubleshooting. View every glitch as a learning opportunity. It is not a system failure.

The collective wisdom from the indie launch community reveals a common thread. Successful makers actively collaborate with their AI co-pilots. They monitor outputs. They adapt strategies. They troubleshoot issues. This proactive human-AI collaboration turns potential roadblocks into crucial launchpad lessons. Your unique insight remains the ultimate guide for your launch. It is truly irreplaceable.