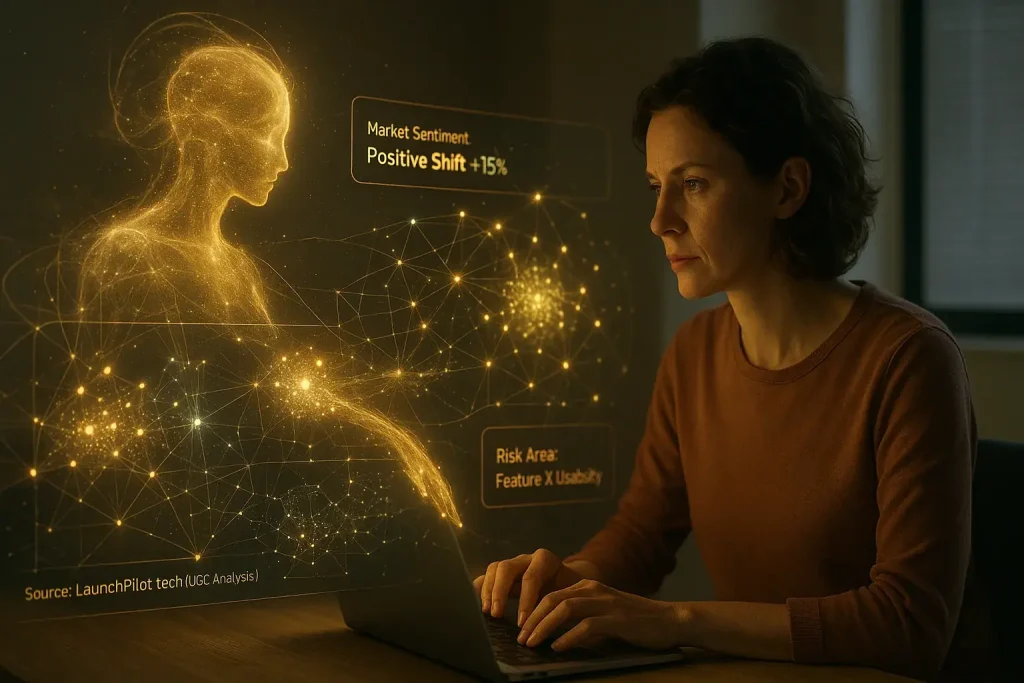

Why Measuring AI Co-pilot Impact Matters for Every Indie Maker (Beyond Just "Feeling Productive")

Ever felt busy with your AI co-pilot? Wondered if it's actually moving the needle for your launch? For indie makers, merely feeling productive isn't enough. Real success demands real numbers. Our analysis of indie maker discussions points to a stark reality: without clear metrics for your co-pilot's efforts, you are essentially operating blind and risking precious resources.

Community-reported experiences reveal that many indie creators don't effectively track their AI co-pilot's impact. This is a common pitfall, and it often leads to wasted time or significant missed opportunities. We've seen numerous accounts from makers who poured hours into creating content with their co-pilot, only to find their strategies didn't convert as hoped. Why? Often, no clear success metrics were established from the beginning. This section cuts through that confusion.

Tracking indie-friendly Key Performance Indicators (KPIs) offers a solution. It empowers data-driven decisions. These decisions, as reported by successful solopreneurs, directly save time, money, and considerable stress. Our deep dive into user-generated content guides our focus here. We concentrate on practical, easily trackable metrics relevant to indie launches, not overwhelming enterprise-level analytics. You need to know what truly works.

The Ultimate Indie KPI: Truly Measuring Time Saved by Your AI Co-pilot (Beyond the Hype)

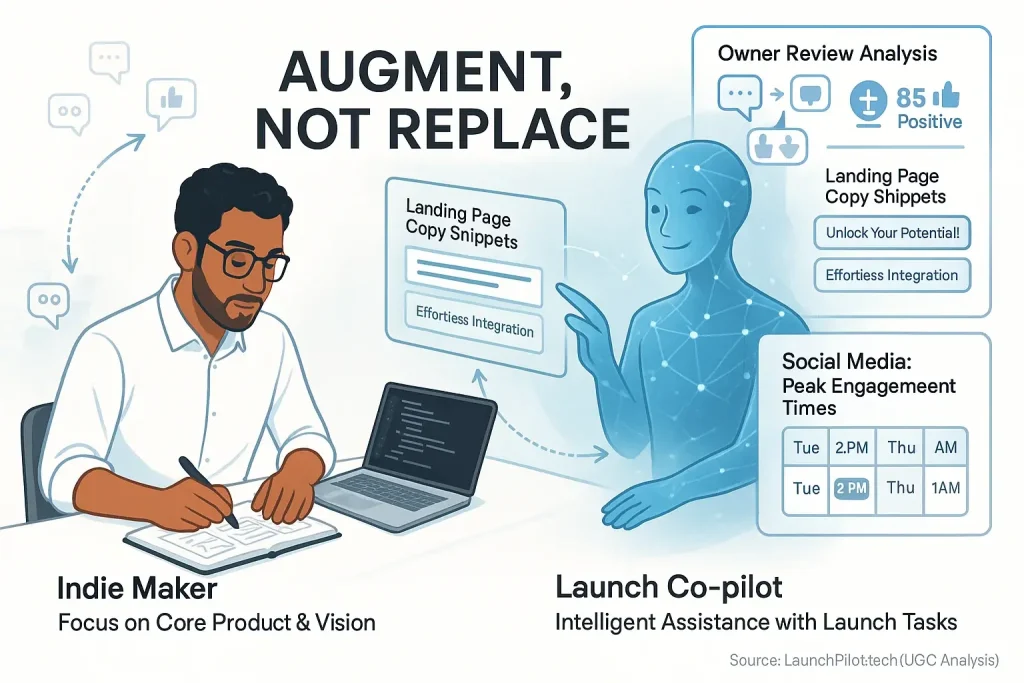

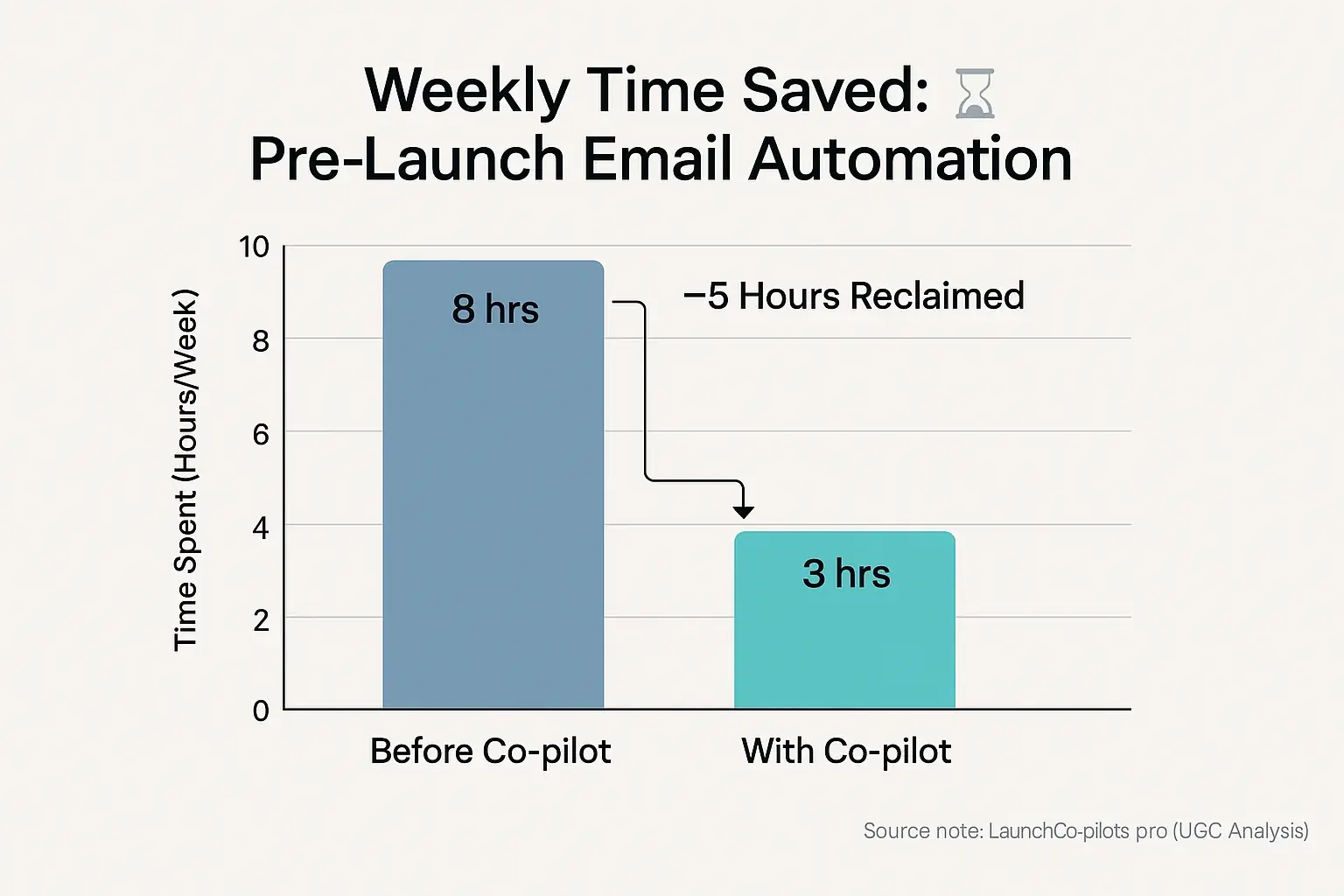

AI co-pilots frequently promise huge time savings. Indie maker discussions keep raising a crucial question: are you really saving time, or just shifting tasks around? True time saved frees up hours for core product development and for more strategic thinking about your indie launch.

So, how do you measure this? Indie makers who track time savings effectively use a simple method. Start by logging the time a task takes before using any AI tool. Then track the same task with the co-pilot. The difference? Your real time savings. Many users report that simple time-tracking apps work perfectly for this. One indie maker shared a powerful story: automating pre-launch email sequences with an AI co-pilot freed up five hours weekly. That time now directly fuels coding new features for their product.
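The before/after method above is simple arithmetic. Here is a minimal Python sketch of it. It assumes you have exported per-run task durations in minutes from your time tracker into plain lists. The function name, the task, and the numbers are hypothetical illustrations, not data from any indie maker. The "with co-pilot" log should include the time spent reviewing and editing the output. If it doesn't, the saving will be overstated.

```python
from statistics import mean


def weekly_minutes_saved(before_minutes, after_minutes, runs_per_week):
    """Estimate weekly minutes saved on one recurring task (hypothetical helper).

    before_minutes: logged durations of the task done without the co-pilot
    after_minutes:  logged durations with the co-pilot, INCLUDING review/editing time
    runs_per_week:  how many times the task recurs per week
    """
    if not before_minutes or not after_minutes:
        raise ValueError("log at least one run before and after adopting the co-pilot")
    # Average per-run difference; a negative result means the co-pilot costs you time
    saved_per_run = mean(before_minutes) - mean(after_minutes)
    return saved_per_run * runs_per_week


# Hypothetical logs for a recurring email-drafting task (minutes per run)
before = [100, 110, 90]
after = [40, 35, 45]
saved = weekly_minutes_saved(before, after, runs_per_week=5)
print(f"Estimated time saved: {saved / 60:.1f} hours per week")
```

With these invented logs, the task averages 100 minutes by hand and 40 minutes with the co-pilot. Over five runs a week, that works out to 5.0 hours saved. A negative result is just as informative. It tells you the co-pilot is adding work to this task rather than removing it.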

Here's an unexpected finding from extensive user discussions: AI tools, if mishandled, can sometimes add time. Surprising, right? Over-editing AI-suggested text is a common pitfall noted in forums. Debugging complex AI-driven workflows also eats precious hours. The practical advice from seasoned indies? Focus your co-pilot on automating repetitive, low-value tasks first. According to community feedback, that strategy consistently delivers the biggest, most reliable time gains.

Quantifying Your AI Co-pilot's ROI: How to Measure Real Marketing Spend Reduction (Indie Budget Focus)

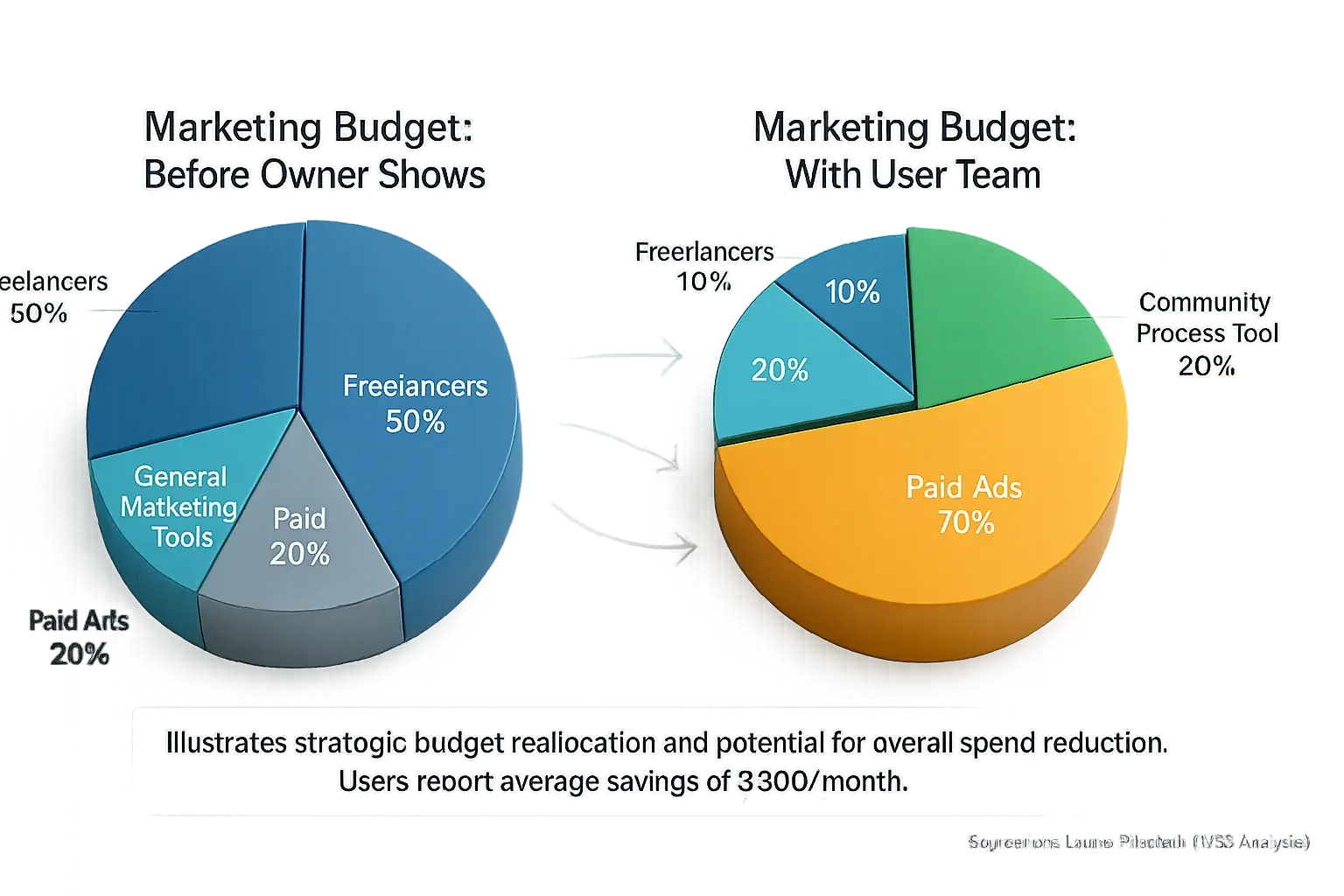

For indie makers, return on investment means smart savings. Big numbers are not always the goal; every single dollar saved is crucial. Is your AI co-pilot truly reducing your marketing spend? Or is it merely shifting budget allocations? Our examination of aggregated user experiences reveals a clear pattern: wisely saved funds directly fuel product development, a vital area for indie success.

You can track actual cost reduction with direct comparisons. First, note your monthly spend on content creators or ad tools before using an AI co-pilot. Then add up your AI tool subscription plus whatever you still spend on external services. The difference? That is your direct saving. For instance, one indie maker in community discussions reported saving $300 each month by using their AI co-pilot for social media content. This change eliminated the need for a previously hired freelance writer.
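Here is a minimal sketch of that comparison. It assumes all figures are monthly amounts in the same currency. The function name and the dollar amounts are hypothetical and chosen only to illustrate the calculation.

```python
def direct_monthly_saving(spend_before, ai_subscription, spend_after):
    """Cash saved per month after moving marketing work to an AI co-pilot (hypothetical helper).

    spend_before:    monthly spend on freelancers/ad tools before the co-pilot
    ai_subscription: monthly price of the co-pilot tool
    spend_after:     external spend that remains after adopting it
    """
    cost_after = ai_subscription + spend_after
    return spend_before - cost_after


# Hypothetical month: $400 on a freelancer and ad tools before; afterwards a
# $30 subscription plus $120 of ad tools you still pay for.
saving = direct_monthly_saving(spend_before=400, ai_subscription=30, spend_after=120)
print(f"Direct monthly saving: ${saving}")
```

A negative result means the subscription costs more than the external spend it replaced.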

Let's discuss an unspoken truth: hidden costs can erode your savings. Our investigation into user-generated content shows that some AI tools charge usage-based fees, and these fees can escalate unexpectedly. You might also spend considerable time editing the co-pilot's output. Sometimes this editing takes longer than creating the content from scratch, which negates any perceived financial benefit. Always factor your own time into any cost analysis, because your time has value. If an AI co-pilot genuinely saves you hours, that represents a real, often overlooked, saving.
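One way to fold hidden costs and your own time into a single number is to value your hours at a rate you choose. This Python sketch assumes a hypothetical hourly rate. It treats editing time as eating into the hours the co-pilot saves. All inputs are illustrative.

```python
def net_monthly_value(direct_saving, usage_fees, hours_saved, editing_hours, hourly_rate):
    """Net monthly value of a co-pilot once hidden costs and your time are counted (hypothetical helper).

    direct_saving: cash saved per month from the before/after spend comparison
    usage_fees:    usage-based charges not included in that comparison
    hours_saved:   hours per month the co-pilot saves you before any editing
    editing_hours: hours per month spent fixing or rewriting its output
    hourly_rate:   the value you put on one hour of your own time
    """
    net_hours = hours_saved - editing_hours  # negative if editing outweighs the time saved
    return direct_saving - usage_fees + net_hours * hourly_rate


# Hypothetical scenarios, valuing your time at $30/hour
light_editing = net_monthly_value(250, 40, hours_saved=20, editing_hours=6, hourly_rate=30)
heavy_editing = net_monthly_value(250, 40, hours_saved=4, editing_hours=10, hourly_rate=30)
print(light_editing, heavy_editing)
```

In the second scenario, heavy editing wipes out most of the cash saving. That is the hidden cost described above, made visible as a single number.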

From Clicks to Customers: Measuring AI's Impact on Lead Generation & Conversion Rates (Indie-Focused Analysis)

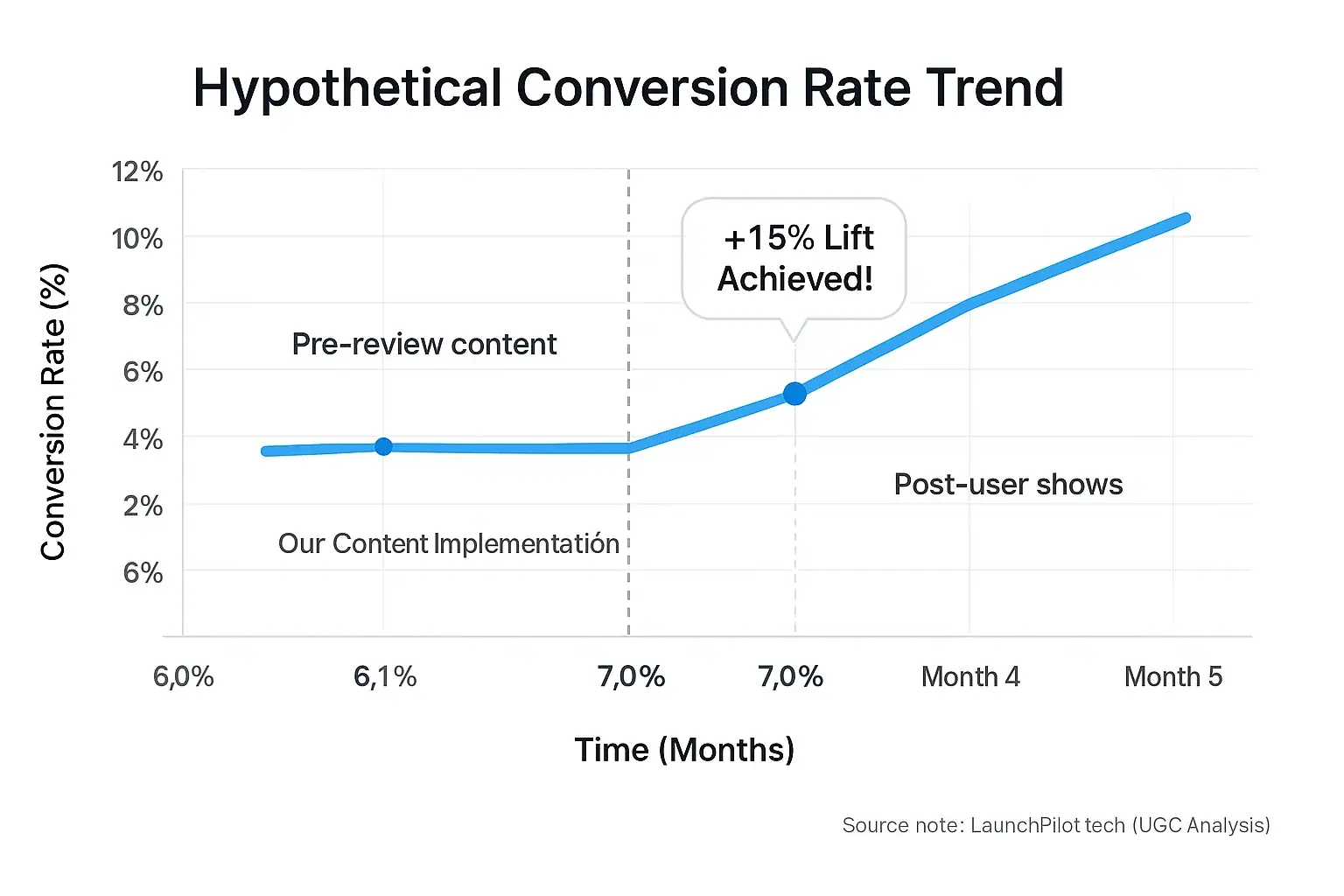

Launch marketing aims for customer acquisition. That's the core. Is your landing page copy, refined by co-pilot insights, converting visitors? Are ads targeted with co-pilot data bringing in genuinely high-quality leads? Community-reported experiences highlight a key point: AI tools must directly fuel growth. Mere activity is not enough.

Indie makers can measure this impact. Track conversion rates from assets refined by your co-pilot. For instance, count sign-ups from a landing page optimized with co-pilot suggestions, or note leads from an ad campaign managed with AI insights. Compare these numbers to your other efforts. Even small improvements show progress, as many users report. One indie maker, our analysis shows, boosted newsletter sign-ups by 15% after refining their lead magnet copy with a co-pilot's guidance. A direct win.
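Conversion rate and lift are worth computing the same way every time. Here is a minimal sketch, assuming you can count visitors and sign-ups per asset from your analytics tool. It reports lift as a relative change. Going from 2.5% to 3.0% is therefore a 20% lift, not 20 percentage points. The counts are invented.

```python
def conversion_rate(conversions, visitors):
    """Fraction of visitors who converted (e.g. signed up)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(new_rate, baseline_rate):
    """Relative improvement of new_rate over baseline_rate (0.20 means +20%)."""
    if baseline_rate == 0:
        raise ValueError("baseline rate is zero; relative lift is undefined")
    return (new_rate - baseline_rate) / baseline_rate


# Hypothetical counts: original landing page vs. co-pilot-refined version
baseline = conversion_rate(50, 2000)  # 2.5%
refined = conversion_rate(60, 2000)   # 3.0%
print(f"{baseline:.1%} -> {refined:.1%}, lift {relative_lift(refined, baseline):.0%}")
```

With small counts like these, a difference of a few sign-ups can be pure chance. Treat a single comparison as a signal to keep testing, not as proof.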

User discussions reveal an unexpected practical benefit. Sometimes, AI-assisted content doesn't directly convert leads. Instead, the time it saves empowers manual outreach, and that outreach converts. Or AI analysis helps identify a better audience segment, which leads to higher-quality leads overall. Community wisdom offers a tip: don't just track lead quantity. Focus intensely on lead quality. Good AI-assisted content should attract users who genuinely fit your product.

Beyond Word Count: Measuring AI Co-pilot's True Content Quality & Impact (Engagement, SEO, & Authenticity for Indies)

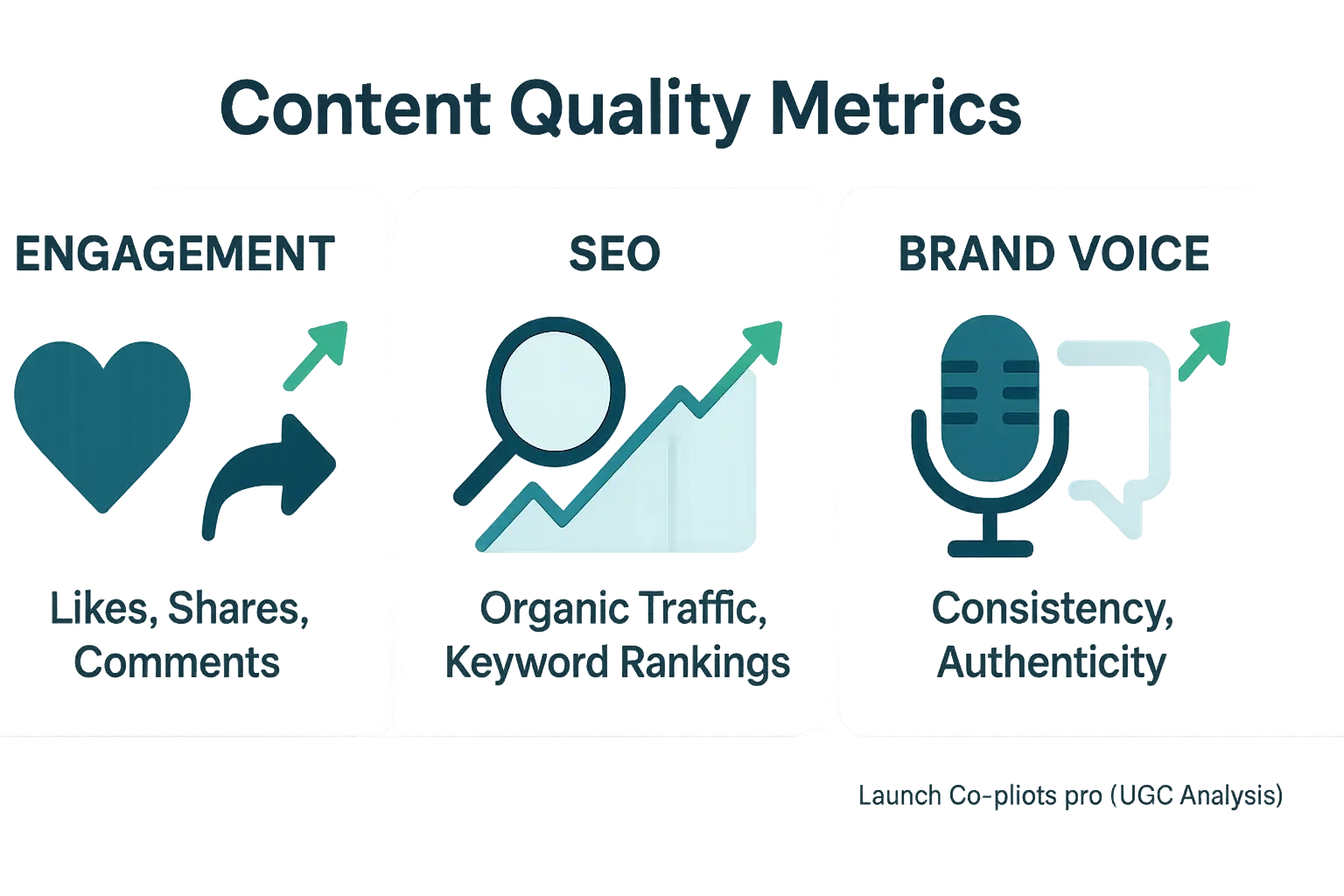

More content alone rarely guarantees launch success; our deep analysis of indie maker experiences shows this. Is content from AI co-pilots truly connecting with your audience? Does it rank effectively? Does it even sound like you? For indie makers, content quality means genuine audience engagement. Sheer volume, community discussions reveal, often misses this vital point.

Tracking specific metrics reveals true content quality. Indie makers report success when they monitor engagement rates. Likes, shares, and comments on AI-generated social posts offer clear signals. Organic traffic and keyword rankings for AI-assisted blog content also matter significantly. Crucially, assess your brand voice consistency: does the generated content genuinely reflect your unique brand? This is a frequent concern voiced in indie forums. One indie maker, according to community-reported data, saw high email open rates from co-pilot-assisted drafts, but click-throughs remained disappointingly low. The reported fix involved injecting more human personality. That's a powerful lesson directly from user-generated content analysis.
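The open-rate-versus-click-through pattern above is easier to spot with a click-to-open rate. This metric divides clicks by opens rather than by emails delivered. Here is a minimal sketch with hypothetical numbers. It also computes a social engagement rate over impressions. That is one common definition; some platforms divide by reach or followers instead.

```python
from dataclasses import dataclass


@dataclass
class EmailStats:
    delivered: int
    opens: int
    clicks: int

    @property
    def open_rate(self) -> float:
        # How well the subject line works
        return self.opens / self.delivered if self.delivered else 0.0

    @property
    def click_to_open_rate(self) -> float:
        # Of the people who opened, how many clicked: a check on the body copy
        return self.clicks / self.opens if self.opens else 0.0


def social_engagement_rate(likes: int, shares: int, comments: int, impressions: int) -> float:
    """Interactions per impression for a single post."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return (likes + shares + comments) / impressions


# Hypothetical campaign: strong subject line, weak body copy
campaign = EmailStats(delivered=1000, opens=550, clicks=11)
print(f"open rate {campaign.open_rate:.0%}, click-to-open {campaign.click_to_open_rate:.0%}")
print(f"post engagement {social_engagement_rate(30, 5, 15, 2000):.1%}")
```

Here a high open rate paired with a very low click-to-open rate points at the email body rather than the subject line. That is where the human personality fix described above would apply.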

Let's address an unspoken truth from our investigations: AI-generated content can sometimes feel generic, as many users report. The real win, as successful indies consistently highlight in their discussions, comes from using AI for initial drafts. Then, meticulous humanization builds genuine authenticity. Don't just publish; refine. Here's a practical tip echoed in countless user experiences: run AI-drafted content through a plagiarism checker, and always read it aloud. This simple step, many find, helps catch robotic phrasing.

Beyond the Numbers: Measuring User Engagement with Your AI-Crafted Launch Assets (The Human Connection)

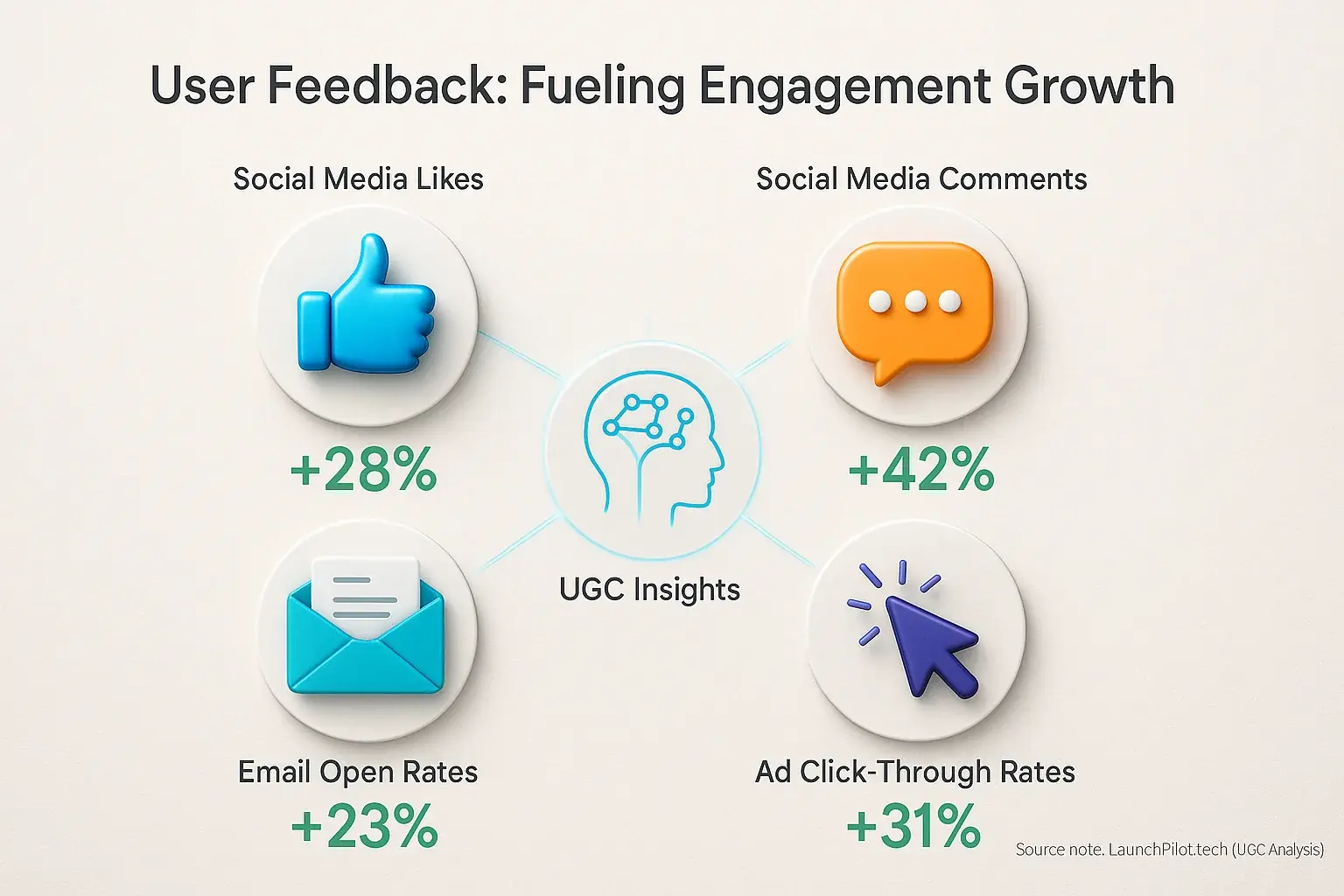

The ultimate value of your AI-crafted launch assets lies in genuine human connection. Are your AI-generated social posts sparking actual conversations? Is that AI-written email subject line getting the email opened and truly read? Engagement metrics directly reveal this crucial human link.

Indie makers track specific user engagement signals. Community-reported data highlights social media likes, comments, shares, and saves for your AI-generated posts. For email, monitor open rates, click-throughs, and replies. For landing pages, time on page and scroll depth tell important stories. One indie maker, our analysis of user-generated content showed, tested several AI-generated ad creatives. Some ads drove high clicks. Others? Zero comments. This indicated a clear tone mismatch, a common finding in user discussions.

A/B testing different AI-generated variations often uncovers surprising practical insights, as many indie makers report. Testing various AI-generated headlines or calls-to-action reveals what truly resonates with an audience. You essentially let your audience "vote" on the AI's output. So, iterate. AI provides endless options; your audience provides the hard data on what works best.
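Letting the audience vote works best when you check that the gap between variants is larger than random noise. One standard way to do that is a two-proportion z-test. This is a swapped-in technique, not something the source prescribes. The sketch uses only the Python standard library and assumes three things:

- each visitor sees only one variant;
- the sample size was fixed before you looked at results;
- counts are large enough for the normal approximation.

The headline counts are hypothetical.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided two-proportion z-test. Returns (z, p_value).

    conv_a, n_a: conversions and visitors for variant A
    conv_b, n_b: conversions and visitors for variant B
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all-or-nothing outcomes; the test is undefined")
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - phi)


# Hypothetical headline test: 1,000 visitors per variant
z, p = two_proportion_z_test(conv_a=40, n_a=1000, conv_b=65, n_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A positive z means variant B converted better. A p-value below a threshold you pick in advance (0.05 is a common convention) suggests the difference is unlikely to be chance alone.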

Your AI Co-pilot's Dashboard: Making Data-Driven Decisions for a Smarter Indie Launch

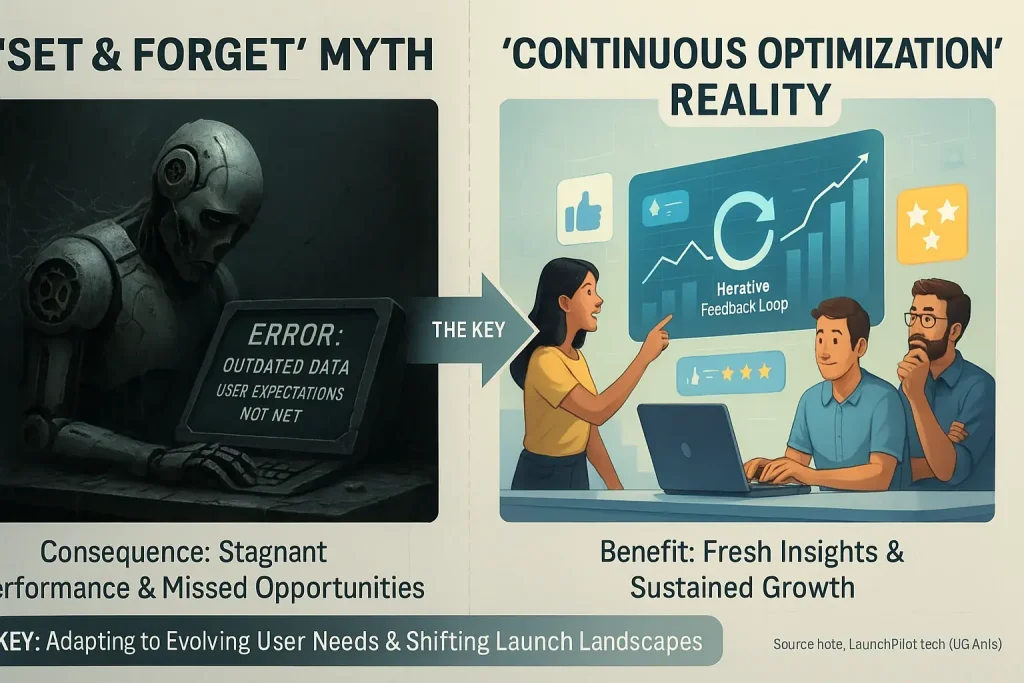

True AI co-pilot success delivers measurable results, as indie maker feedback consistently underscores. Automation alone is not the goal for resourceful creators; real impact on your time, budget, leads, and engagement is what truly matters. These key performance indicators form your "AI co-pilot dashboard," actively guiding your indie launch strategy.

Don't just set up your AI tools and then forget them. The indie launch community consistently emphasizes active management. Regularly check crucial dashboard metrics. Adjust your AI prompts. Refine those workflows. Watch your launch fly higher. This data empowers smarter, faster decisions as an indie maker.
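A co-pilot dashboard can start as nothing more than two snapshots of the KPIs above, compared period over period. This minimal sketch assumes you record each KPI as a number in one dictionary per week or month. The KPI names and values are hypothetical.

```python
def kpi_changes(previous, current):
    """Relative change for every KPI present in both snapshots (hypothetical helper).

    previous, current: dicts mapping KPI name -> numeric value for a period.
    Returns a dict of KPI name -> relative change, or None when the previous
    value was zero (relative change undefined).
    """
    changes = {}
    # Sorted so the report prints in a stable order
    for name in sorted(previous.keys() & current.keys()):
        old, new = previous[name], current[name]
        changes[name] = None if old == 0 else (new - old) / old
    return changes


# Hypothetical weekly snapshots
last_week = {"hours_saved": 4.0, "net_saving_usd": 200, "signup_rate": 0.025, "email_ctor": 0.02}
this_week = {"hours_saved": 5.0, "net_saving_usd": 180, "signup_rate": 0.030, "email_ctor": 0.02}

for kpi, change in kpi_changes(last_week, this_week).items():
    label = "n/a" if change is None else f"{change:+.0%}"
    print(f"{kpi:15} {label}")
```

KPIs that appear in only one snapshot are skipped rather than guessed at. That keeps a newly added metric from showing a misleading change in its first period.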

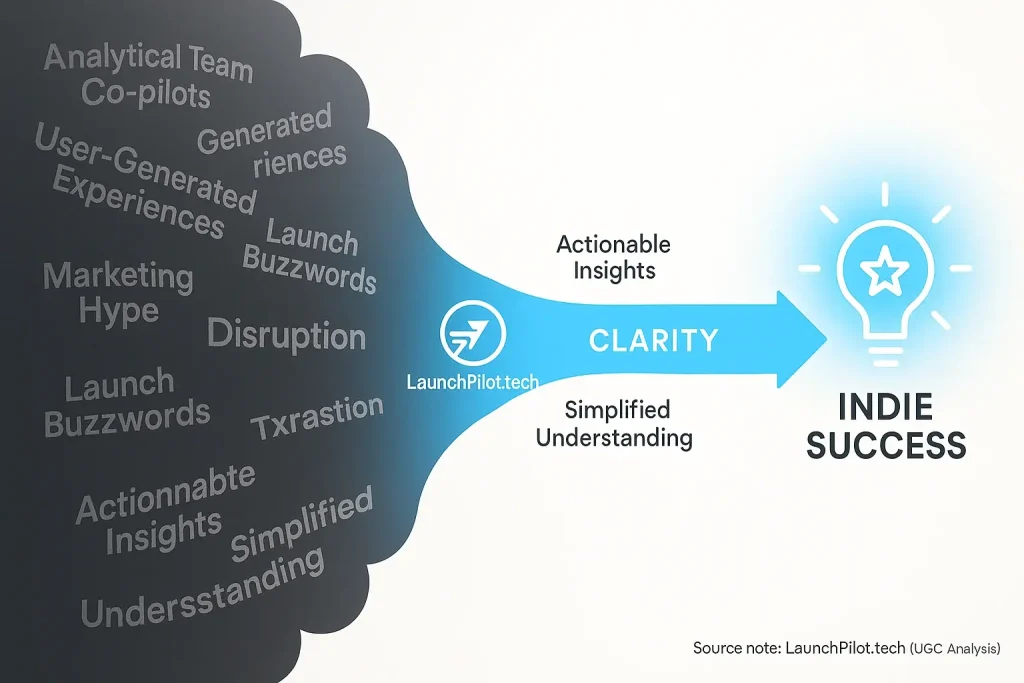

Related Insight: Attribute Deep Dive: AI-Driven Analytics in Co-pilots (From Vanity Metrics to Actionable Insights for Indies - UGC Case Studies)

Co-pilot analytics dashboards often present a flood of data. Indie makers frequently ask a critical question. Are these numbers just vanity metrics, or can they truly drive actionable insights for a solopreneur? Community-reported experiences highlight common frustrations here.

Many creators seek genuine understanding. Our analysis of user-generated content goes deeper. It explores how co-pilot analytics can provide true value, far beyond simple vanity metrics. This is particularly crucial for indie makers. You might find our full investigation insightful for actionable strategies.