Beyond Raw Data: Why Synthesizing UGC is Your Indie Maker Superpower

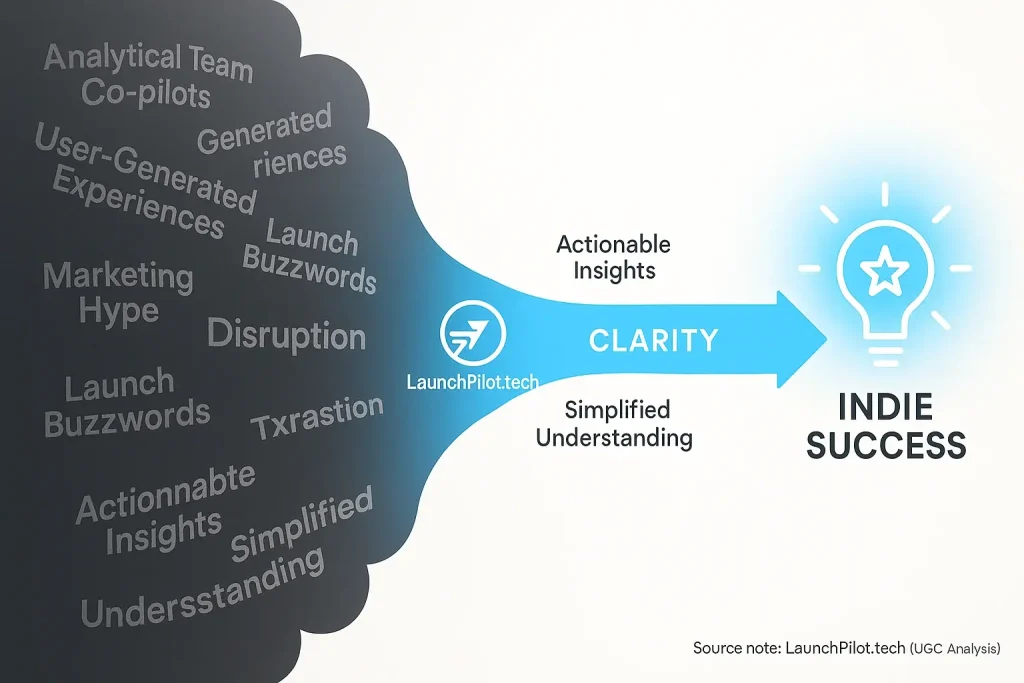

Ever waded through endless user reviews? Come out more confused? You're not alone. Raw user-generated content (UGC) often presents a genuine challenge for indie makers. Individual comments offer anecdotes, not clear, reliable direction. The real power emerges when you synthesize this vast body of feedback.

LaunchPilot.tech built its UGC Insights Engine for exactly this task. The engine cuts through the noise by systematically analyzing thousands of indie maker voices and extracting the community's collective wisdom. It's about deep understanding, not just data collection.

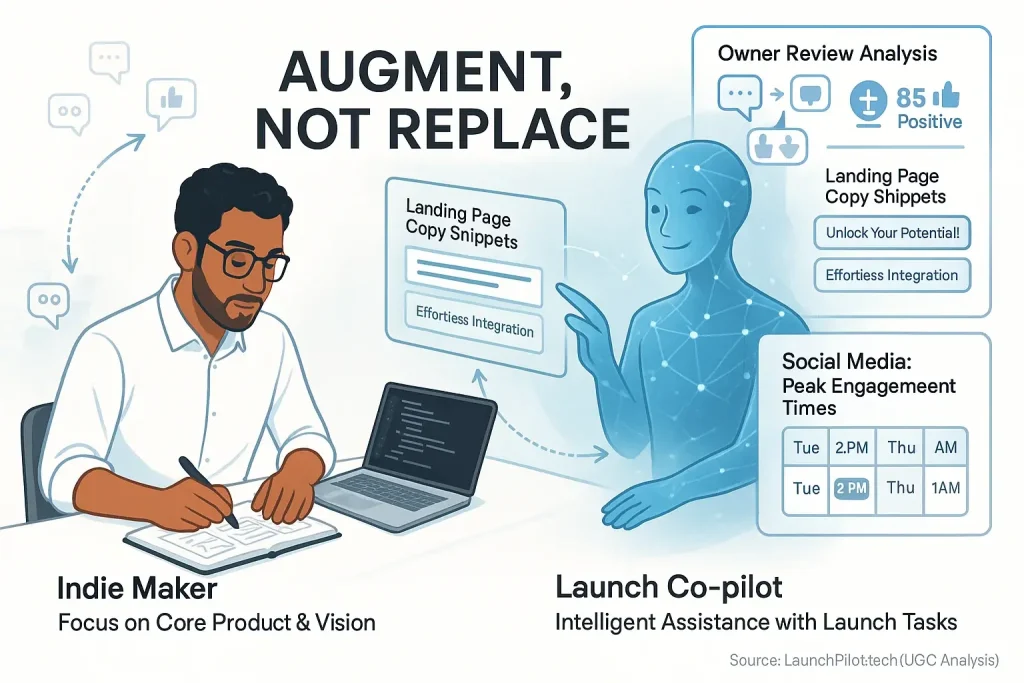

This synthesis is your indie maker superpower. Why? It uncovers patterns that individual pieces of feedback hide. Unspoken truths emerge from deep analysis of the data, and actionable insights come into focus for your critical project decisions. Consider a co-pilot tool that some users praise for its 'intuitive interface.' Our synthesis of broader user experiences might reveal a steep learning curve for one key feature. That detail, often missed in single reviews, empowers better, faster decisions.

Unearthing Common Threads: Thematic Clustering in Action (What Users REALLY Talk About)

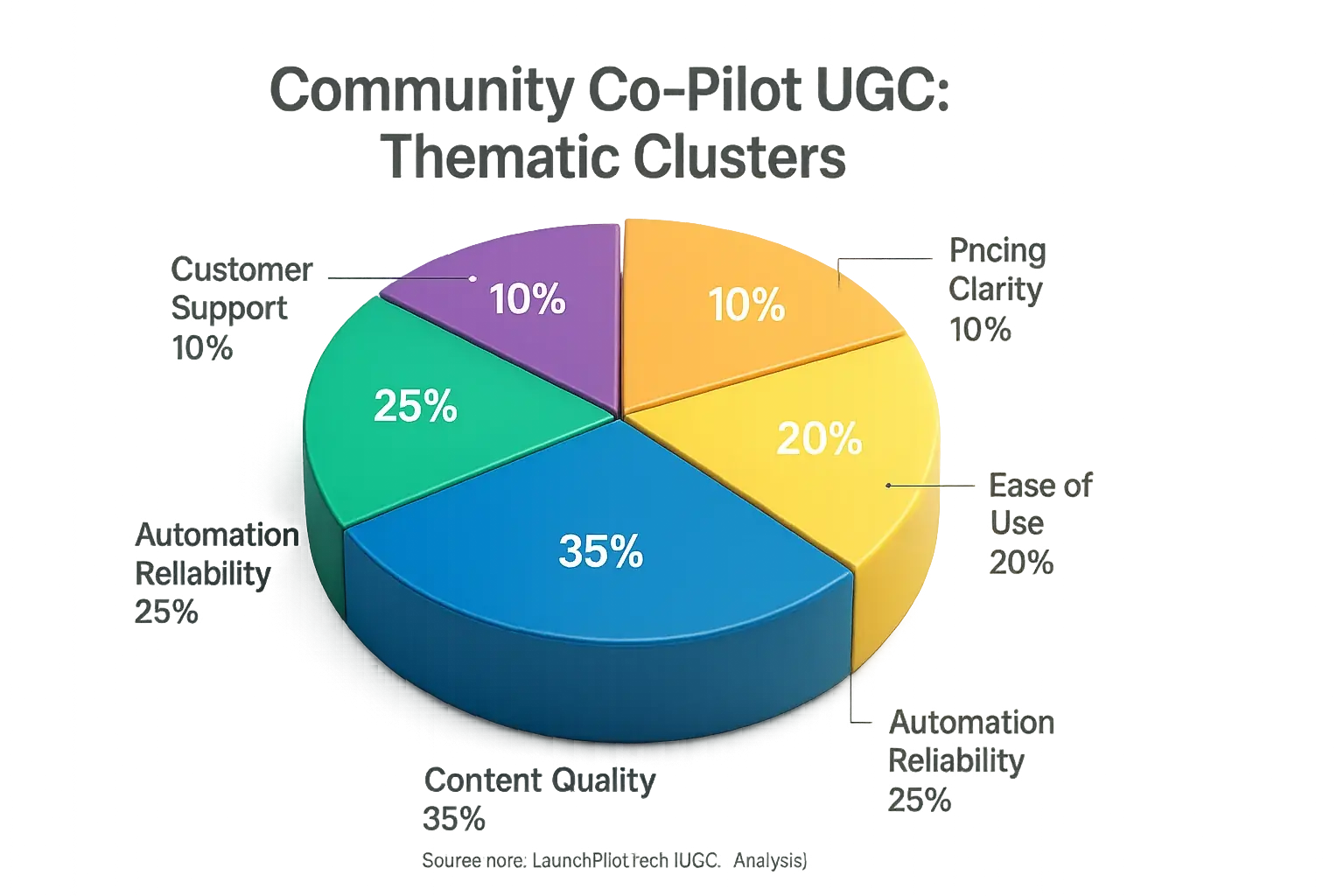

Thematic clustering groups similar user feedback into understandable categories. This process sifts through mountains of community discussions. Think of it like sorting a giant pile of LEGOs. You separate by color. You sort by shape. Suddenly, clear patterns emerge from the initial jumble. This method reveals the dominant concerns and genuine praise indie makers consistently voice.

Our analysis of community feedback on co-pilot tools shows this clearly. When we examine discussions of 'content generation' features, we see beyond simple 'good' or 'bad' labels. Specific themes quickly surface. For instance, user experiences often cluster around 'generic output concerns' or 'brand voice mismatch problems.' Other distinct groups highlight 'plagiarism worries' or, conversely, the significant 'time saved on initial drafts.' Each cluster pinpoints a specific challenge or valued benefit that users report.
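
To make the LEGO-sorting idea concrete, here is a minimal Python sketch of one simple clustering technique: single-pass "leader" clustering over word overlap (Jaccard similarity). It illustrates the concept only and is not how LaunchPilot.tech's engine works. The comments, the stop-word list, and the 0.2 similarity threshold are all hypothetical, and real-world pipelines often cluster on richer text representations such as embeddings.

```python
import re

# Hypothetical stop-word list; real pipelines use far larger ones.
STOP_WORDS = {"the", "a", "an", "is", "it", "to", "of", "and", "for",
              "my", "our", "on", "in", "at", "was", "so"}


def tokens(text: str) -> set[str]:
    """Lowercase the text, keep words (letters and apostrophes), drop stop words."""
    return {w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS}


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two comments have in common, from 0.0 to 1.0."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def leader_cluster(comments: list[str], threshold: float = 0.2) -> list[list[str]]:
    """Single-pass 'leader' clustering: each comment joins the first cluster whose
    leader (first member) is similar enough; otherwise it starts a new cluster."""
    clusters: list[tuple[set[str], list[str]]] = []
    for text in comments:
        words = tokens(text)
        for leader_words, members in clusters:
            if jaccard(words, leader_words) >= threshold:
                members.append(text)
                break
        else:  # no existing cluster was close enough
            clusters.append((words, [text]))
    return [members for _, members in clusters]


# Invented example comments about a content-generation feature.
comments = [
    "Output feels generic and bland",
    "Generic output with no personality",
    "Wrong brand voice for our startup",
    "Brand voice is off in every post",
    "Saved hours on first drafts",
    "Huge time saved on initial drafts",
]

for group in leader_cluster(comments):
    print(group)
```

Each comment joins the first group whose leader shares enough words with it, so the six comments fall into three themes: generic output, brand voice, and time saved on drafts. Lowering the threshold merges themes; raising it splits them apart.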

Here is a practical insight our thematic clustering frequently uncovers: the most common user complaints sometimes do not target a core product feature at all. Instead, a large volume of feedback can cluster around the absence of clear instructions. This 'documentation difficulty' or 'poor onboarding' theme often emerges as a cluster of its own, and it can overshadow actual feature performance in user sentiment. Recognizing this helps indie makers focus their resources on widespread, systemic issues instead of chasing isolated comments.

Reading Between the Lines: How Sentiment Analysis Uncovers the 'Feel' of User Feedback

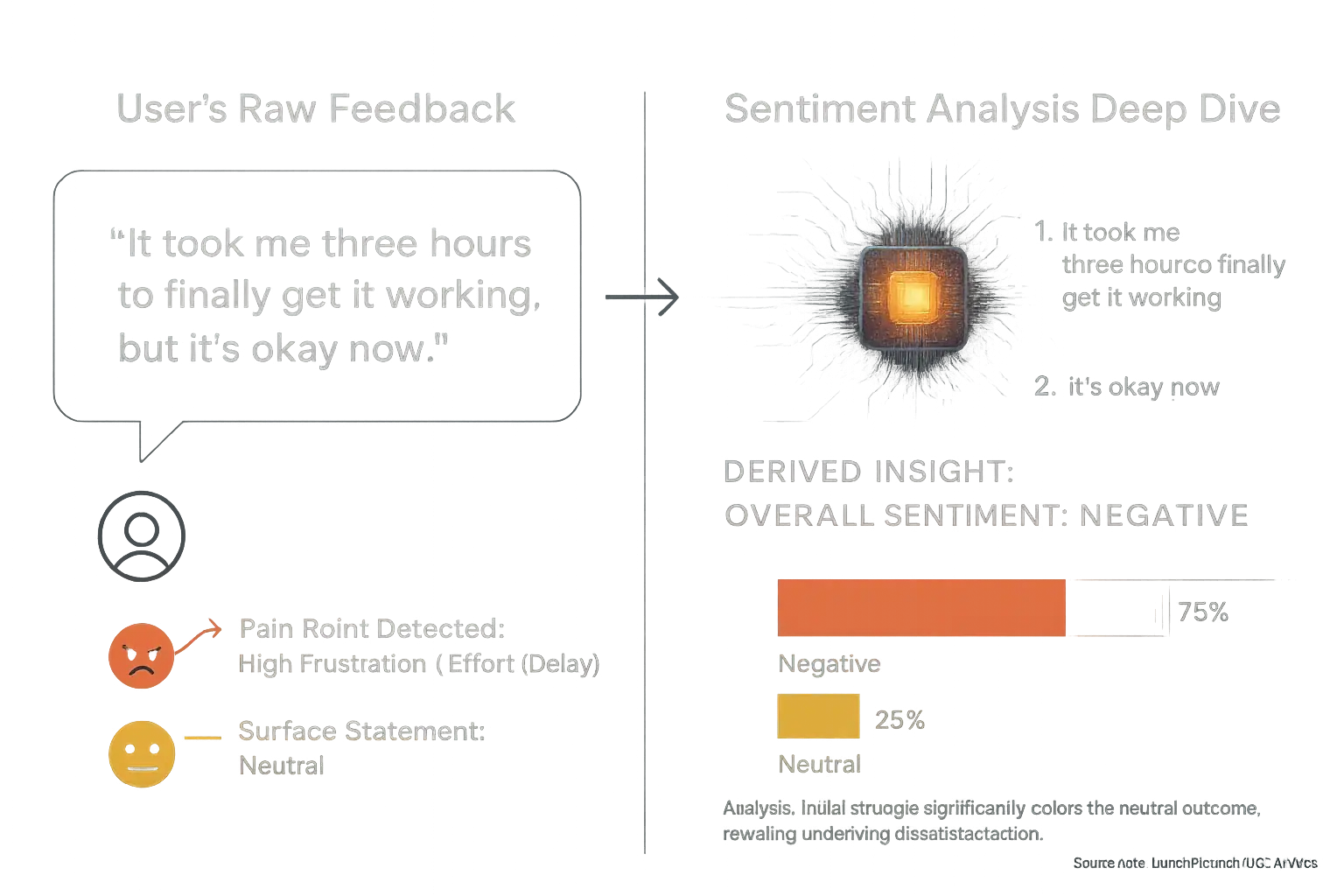

Sentiment analysis explores the emotional core of user feedback. It's not just what indie makers say; it's how they say it. This process gauges the emotional tone across countless reviews. Think of it as a super-sensitive emotional radar. Our system deciphers this emotional landscape for you, revealing the genuine feeling behind user comments.

A user might state, "This launch co-pilot is okay." Simple words. But what’s the real story? Is "okay" a sigh of resignation? Or perhaps a grudging acceptance? Our analysis probes deeper. We look for subtle linguistic cues. Consider this feedback from community discussions: "Setup took three painful hours, but the tool is okay now." The word "okay" appears neutral. The surrounding frustration, however, paints a different picture. A clearly negative underlying sentiment emerges.
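
A lexicon-based scorer is one of the simplest ways to see why "okay" alone reads as neutral while the full setup complaint reads as negative. This sketch is hypothetical throughout: the word list and its scores are invented for illustration, and real sentiment systems rely on far larger lexicons or trained models.

```python
import re

# Hypothetical mini-lexicon: word -> score from -3 (very negative) to +3 (very positive).
# The words and values are invented for illustration only.
LEXICON = {
    "okay": 0, "fine": 1, "intuitive": 2, "great": 3, "love": 3,
    "slow": -1, "confusing": -2, "painful": -3, "frustrating": -3, "broken": -3,
}


def sentiment(text: str) -> int:
    """Sum the lexicon scores of every known word; unknown words count as 0."""
    words = re.findall(r"[a-z]+", text.lower())
    return sum(LEXICON.get(w, 0) for w in words)


def label(score: int) -> str:
    """Map a summed score to a coarse sentiment label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


short = "This launch co-pilot is okay."
full = "Setup took three painful hours, but the tool is okay now."
print(short, "->", label(sentiment(short)))  # neutral
print(full, "->", label(sentiment(full)))    # negative
```

The neutral "okay" contributes nothing, so the frustration in "painful" decides the label. A plain sum like this misses negation ("not great" would still score positive) and sarcasm, which is one reason real tools go further.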

Sometimes the surface of user feedback misleads. A maker might praise one specific feature, yet the intensity of their complaints about another aspect speaks volumes. It can reveal a deep, unspoken dissatisfaction. Our system detects these subtle emotional shifts and flags such critical nuances within the mass of user opinions. This understanding is vital: indie makers can then interpret true user perception accurately, prioritize fixes, and refine their messaging with confidence.
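
Catching praise for one feature alongside intense complaints about another is usually called aspect-based sentiment analysis. The minimal sketch below uses a hypothetical lexicon, hypothetical aspect keywords, and a hypothetical flag threshold. It splits a review into clauses, assigns each clause to the feature it mentions (or to the previous feature if it names none), and scores each feature separately.

```python
import re

# Hypothetical lexicon (word -> score) and aspect keywords (word -> feature),
# invented for illustration only.
LEXICON = {"love": 3, "great": 2, "nice": 1,
           "slow": -1, "buggy": -2, "painful": -3, "useless": -3}
ASPECTS = {"editor": "editor", "export": "export", "exports": "export",
           "setup": "setup", "onboarding": "setup", "pricing": "pricing"}


def aspect_scores(text: str) -> dict[str, int]:
    """Split a review into clauses at punctuation or 'but', attach each clause to
    the feature it names (or the previous feature if it names none), and sum
    lexicon scores per feature."""
    scores: dict[str, int] = {}
    current = None
    for clause in re.split(r"[,.;]|\bbut\b", text.lower()):
        words = re.findall(r"[a-z]+", clause)
        if not words:
            continue
        current = next((ASPECTS[w] for w in words if w in ASPECTS), current)
        if current is None:
            continue  # sentiment with no identifiable feature is skipped
        scores[current] = scores.get(current, 0) + sum(LEXICON.get(w, 0) for w in words)
    return scores


def flag_aspects(scores: dict[str, int], threshold: int = -4) -> list[str]:
    """Features whose complaints are intense enough to flag, whatever the overall tone."""
    return [aspect for aspect, score in scores.items() if score <= threshold]


review = "I love the editor, but exports are buggy, slow and honestly useless."
scores = aspect_scores(review)
print(scores)                # {'editor': 3, 'export': -6}
print(flag_aspects(scores))  # ['export']
```

Summed over the whole review, the score is only -3, which looks mildly negative. Scored per aspect, the editor comes out at +3 while exports sit at -6, past the flag threshold.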

Finding the 'Sweet Spot' & the 'Red Flags': Identifying Consensus and Dissent in User Feedback

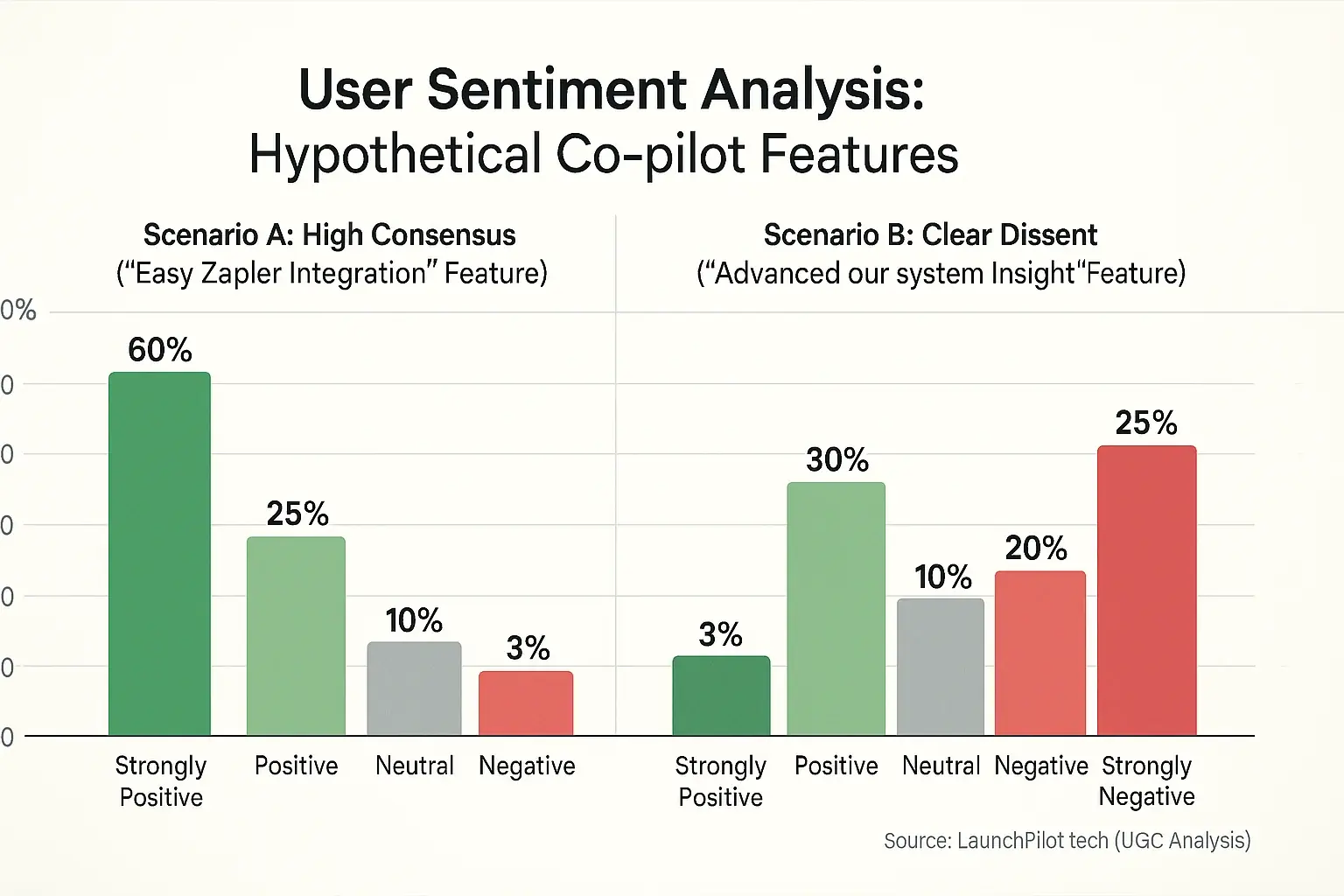

Our synthesis of indie maker feedback goes deep. It's about finding universal truths. It's also about the surprising disagreements within the community. This approach uncovers a tool's full impact. A simple average score often hides these critical details. Understanding both consensus and dissent is key for indie makers.

When countless indie creators praise an insights co-pilot for the same thing, that is consensus. Imagine them consistently reporting that a feature, like 'seamless Zapier integration,' truly works. That collective voice tells us the feature delivers genuine value. Consensus works in both directions: it highlights universally praised aspects, and it quickly flags widespread problems.

Sometimes opinions split sharply. Our analysis reveals clear consensus on some features but notable splits on others. One group might find a tool's 'innovative feedback process strategy' groundbreaking, while another deems it 'too generic for my specific niche.' This dissent is not a failure; it is a signal. The tool might excel for certain users and be a mismatch for others, or a steep learning curve might divide experiences. Analyzing these conflicting views uncovers nuanced performance and helps you understand a tool's true fit for different user segments. That knowledge sets realistic expectations for your launch.
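
One simple way to separate consensus from dissent is to look at the spread of ratings, not just their average. This sketch uses Python's `statistics` module; the feature names, the star ratings, the 1.0 spread cut-off, and the 3.5/2.5 average bands are hypothetical choices for illustration.

```python
from statistics import mean, pstdev

# Hypothetical 1-5 star ratings per feature, invented for illustration.
ratings = {
    "zapier_integration": [5, 5, 4, 5, 5, 4, 5],
    "feedback_strategy": [5, 5, 1, 5, 1, 1, 5],
    "onboarding_docs": [1, 2, 1, 1, 2, 1, 2],
}


def classify(scores: list[int], split_at: float = 1.0) -> str:
    """Label a feature by the spread of its ratings first, then by their average."""
    if pstdev(scores) >= split_at:
        return "split"  # users disagree sharply: a signal worth investigating
    avg = mean(scores)
    if avg >= 3.5:
        return "consensus: praised"
    if avg <= 2.5:
        return "consensus: criticized"
    return "consensus: lukewarm"


for feature, scores in ratings.items():
    print(f"{feature}: mean={mean(scores):.2f} spread={pstdev(scores):.2f} -> {classify(scores)}")
```

The `feedback_strategy` ratings average about 3.3, which on its own looks like a lukewarm middle. Their spread of roughly 2 stars shows what the average hides: two camps, one delighted and one disappointed.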

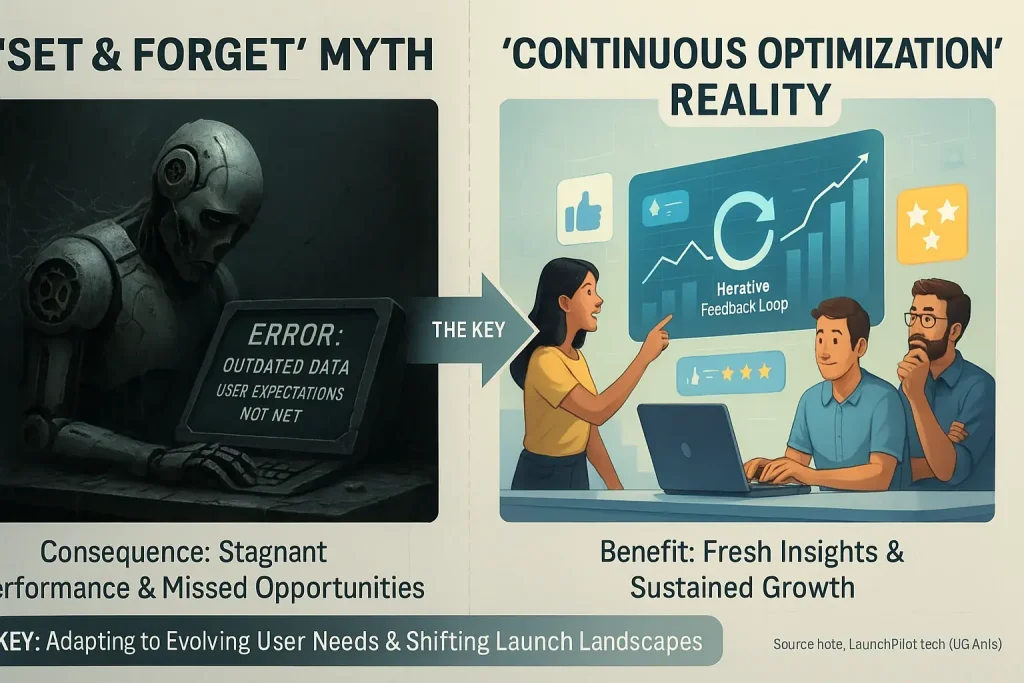

Beyond Anecdotes: Quantifying User Patterns for Data-Driven Indie Decisions

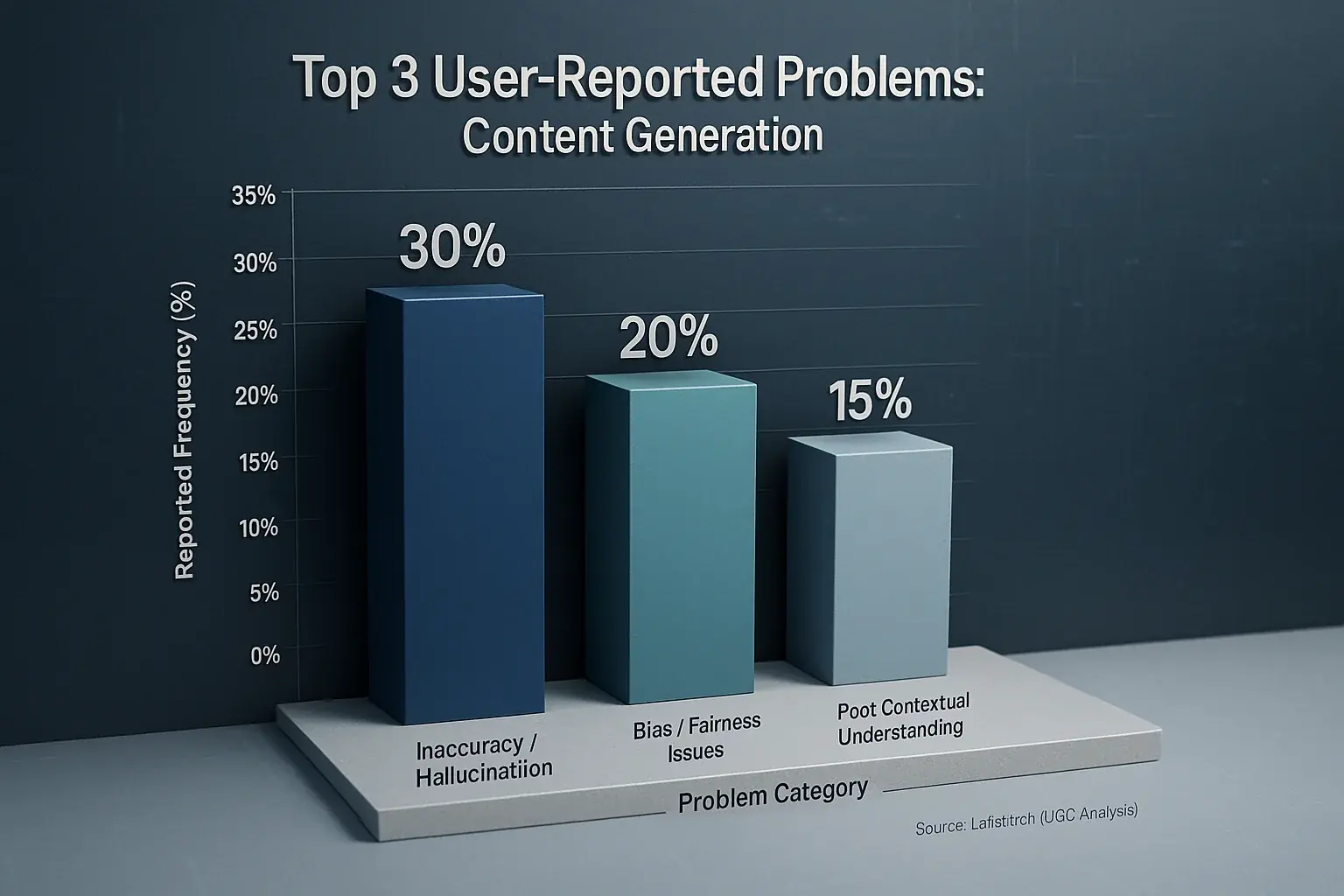

Indie makers frequently encounter vague user feedback. Users might report that a launch co-pilot is 'sometimes inaccurate.' That is a qualitative insight. LaunchPilot.tech transforms such observations into measurable data by systematically examining aggregated user experiences. Our conceptual models might, for example, reveal 'inaccuracy or hallucination' as a recurring theme in approximately 30% of critical user discussions about certain co-pilot features. We track mention frequency and assess reported impact, converting widespread anecdotes into concrete data points for your decision-making.

Consider what community consensus says about content quality from launch co-pilots. Many users express vague dissatisfaction, so we delve deeper, tracking the specific complaints voiced across indie communities. How often do creators mention outputs 'sounding robotic'? Or 'lacking genuine originality'? Or 'requiring heavy, time-consuming edits'? This detailed tracking builds a clearer picture. For instance, collective wisdom might indicate that while 15% of users praise enhanced creativity, a separate 20% consistently flag outputs as needing substantial refinement, with 'sounding robotic' being a dominant complaint within that segment. This specificity pinpoints exactly which areas need attention.
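
Turning anecdotes into percentages can be as simple as counting how many discussions touch each theme. In this hypothetical sketch, hand-written trigger phrases stand in for real theme detection and the five posts are invented; the point is the arithmetic, where a theme's share is the number of posts mentioning it divided by the total number of posts.

```python
# Hypothetical theme -> trigger phrases. A real pipeline would derive themes from
# clustering rather than from hand-written keyword lists.
THEMES = {
    "sounds robotic": ("robotic", "stiff", "sounds like ai"),
    "lacks originality": ("generic", "unoriginal", "bland"),
    "needs heavy edits": ("rewrite", "heavy edits", "editing"),
    "boosts creativity": ("creative", "new ideas", "inspiring"),
}


def theme_shares(posts: list[str]) -> dict[str, float]:
    """Percentage of posts that mention each theme at least once.
    One post can hit several themes, so the shares need not sum to 100."""
    if not posts:
        return {theme: 0.0 for theme in THEMES}
    counts = {theme: 0 for theme in THEMES}
    for post in posts:
        text = post.lower()
        for theme, phrases in THEMES.items():
            if any(phrase in text for phrase in phrases):
                counts[theme] += 1
    return {theme: 100 * n / len(posts) for theme, n in counts.items()}


# Five invented posts about a launch co-pilot's writing quality.
posts = [
    "Drafts sound robotic, I rewrite most of them",
    "Super creative headlines, lots of new ideas",
    "Output is generic and bland",
    "Needs heavy edits before I can publish",
    "Fine for a first pass",
]

for theme, share in theme_shares(posts).items():
    print(f"{theme}: {share:.0f}%")
```

Because one post can raise several themes (the first post is both robotic and heavily rewritten), the shares need not add up to 100%.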

This quantification uncovers important patterns. Some problems appear minor in isolated reviews and receive little individual attention. Aggregated data, however, can paint a very different picture. These are the 'silent killer' issues for user satisfaction: they surface with surprising frequency across many user experiences, even though no individual raises them as an urgent alarm. Because they are so widespread, they quietly erode overall tool adoption and user retention. Identifying these trends through data helps indie makers prioritize effectively. Focus your resources on the issues affecting the most users, not just the loudest voices. This is data-driven decision-making in action.
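
The "loudest voices versus most users" trade-off shows up clearly when you rank the same issues two ways. This sketch assumes a simple, hypothetical impact score of users affected multiplied by average complaint intensity; the issues and numbers are invented.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    users_affected: int    # reach: distinct users who mention the issue
    avg_intensity: float   # loudness: mean complaint severity, 0.0 (mild) to 1.0 (furious)

    @property
    def impact(self) -> float:
        """Hypothetical impact score: reach weighted by intensity."""
        return self.users_affected * self.avg_intensity


# Invented issues and numbers, for illustration only.
issues = [
    Issue("export crashes", users_affected=12, avg_intensity=0.9),
    Issue("slow dashboard", users_affected=140, avg_intensity=0.3),
    Issue("confusing onboarding", users_affected=95, avg_intensity=0.4),
]

by_loudness = sorted(issues, key=lambda i: i.avg_intensity, reverse=True)
by_impact = sorted(issues, key=lambda i: i.impact, reverse=True)

print("Loudest first:", [i.name for i in by_loudness])
print("Widest impact first:", [i.name for i in by_impact])
```

Ranked by intensity, the rare export crash tops the list. Ranked by reach-weighted impact, the slow dashboard and confusing onboarding, quieter but widespread, move to the front.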

From Raw Feedback to Your Next Big Indie Launch Move: The Power of Synthesized UGC

So what starts as a mountain of raw, often conflicting user reviews becomes a crystal-clear roadmap for your next launch move. This deep synthesis is the bedrock of every recommendation LaunchPilot.tech makes, turning raw feedback into distinct, valuable guidance for indie makers. You gain clarity. You see the path forward.

Forget guessing. Ignore the usual marketing noise. You receive actionable, data-backed insights. These come straight from the collective wisdom of fellow indie makers. This is how you sidestep common launch traps. This is how your product finds its audience. Real launch success can follow.