The Invisible Hand: Why AI Co-pilot Recommendations Aren't Always Impartial (A Blindspot for Indie Makers)

Ever wonder about your AI co-pilot's advice? Is its 'brilliant' marketing guidance really meant for a mega-corporation rather than your bootstrapped indie project? Many in the community have quietly observed a recurring issue: AI co-pilots, despite their power, are not always impartial. A 'bias blindspot' can subtly skew their recommendations, often steering indie makers away from what genuinely works for them.

This hidden skew matters, profoundly. Imagine an indie maker who meticulously follows the ad copy their co-pilot generates. The copy would resonate perfectly with large enterprise clients, but it completely misses their niche, budget-conscious audience, and the maker sees poor results. This isn't a flaw in the maker. It's often a hidden bias: the AI's training data frequently reflects enterprise scale, not indie realities. This page exposes these unspoken truths. We investigate these challenges to arm you, the indie maker, with crucial awareness.

So, what's the real story here? We will dive deep. You will see how bias can infiltrate these AI tools and explore its real-world impact on indie launches. Community-reported experiences help show how to spot these subtle slants. You will also discover practical strategies for mitigating recommendation bias, keeping your launch strategy truly yours.

Where Does the Bias Come From? (The AI's 'Training Diet' & Algorithmic Blindspots)

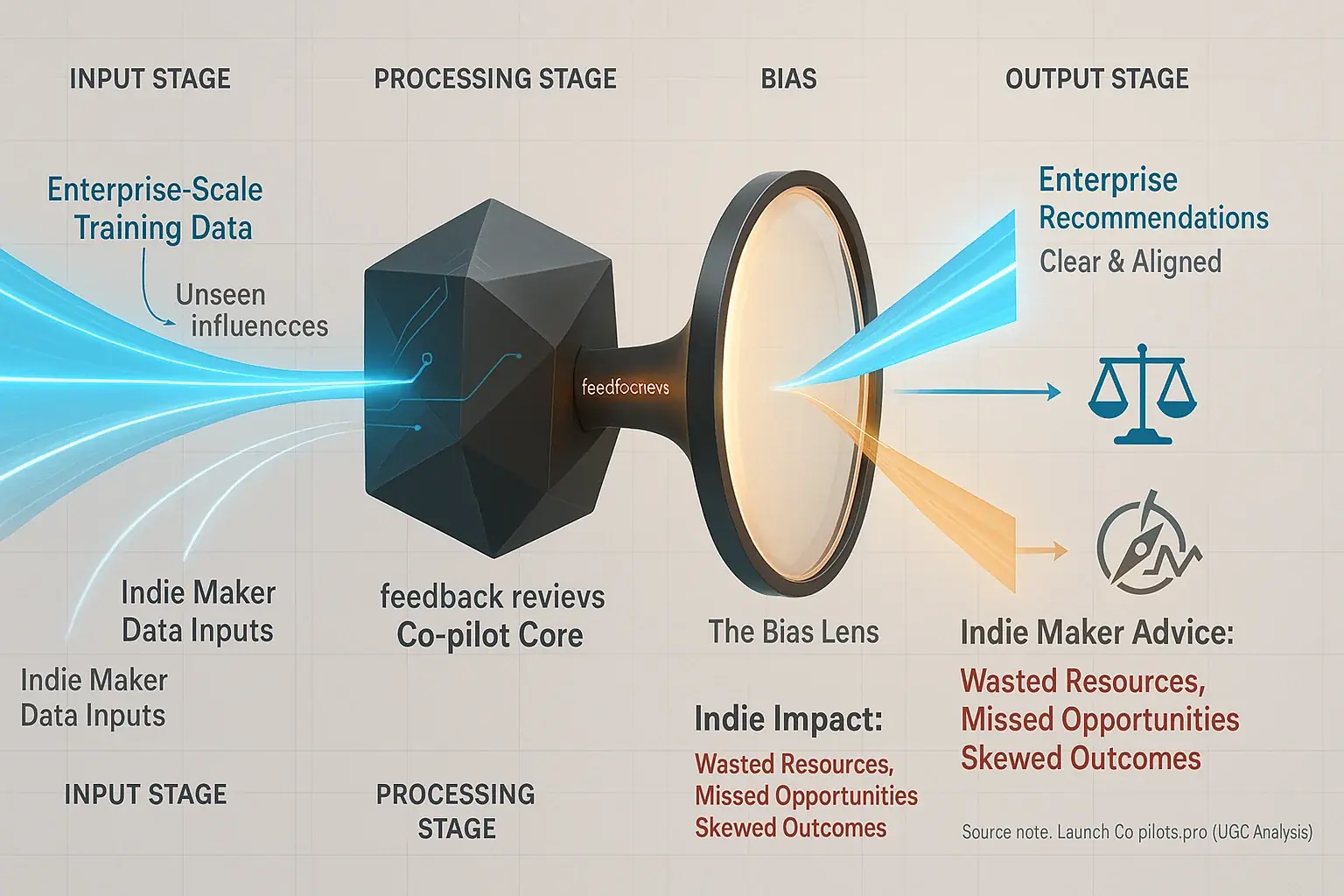

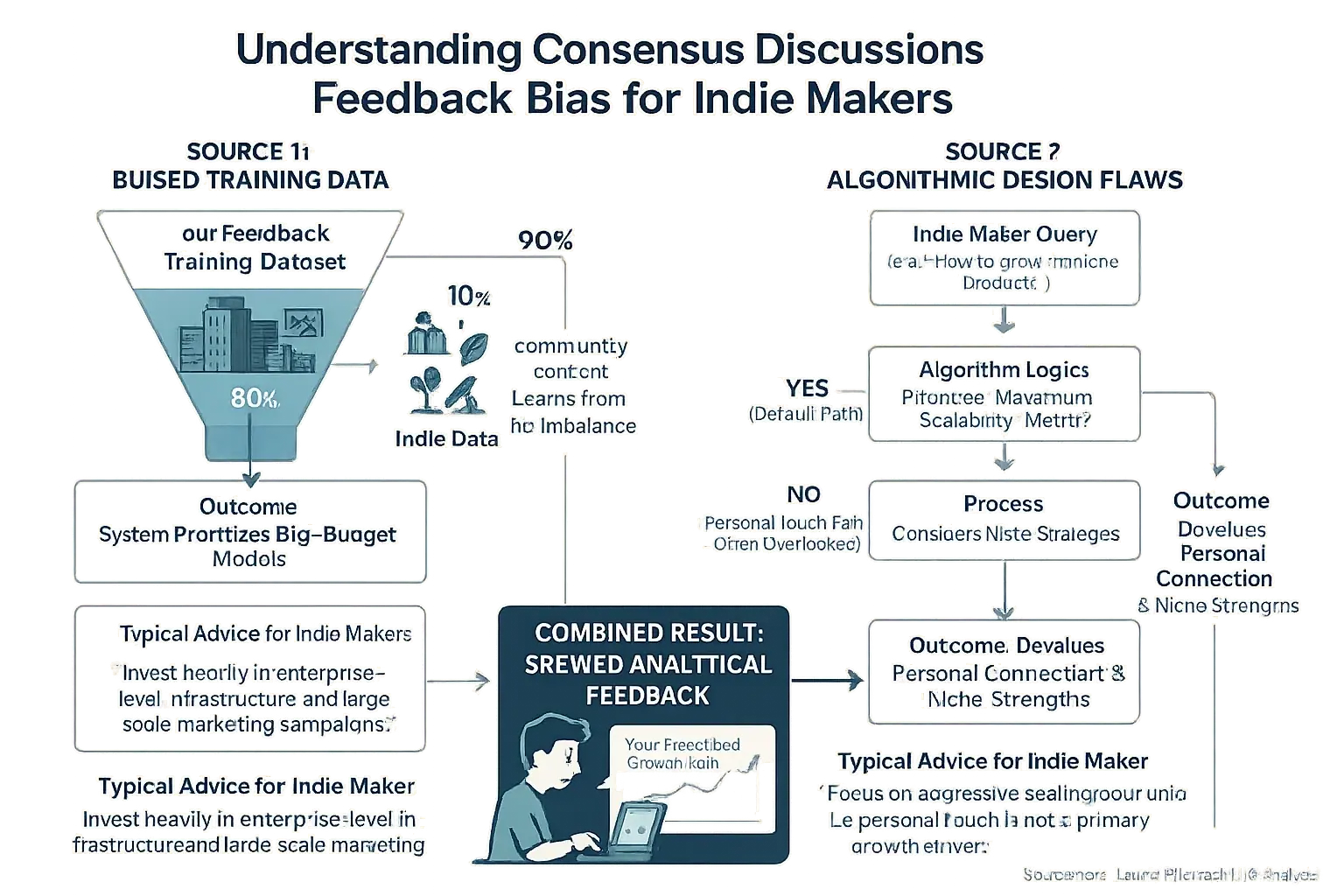

AI bias is not born from malice. It often mirrors the system's 'training diet': the data it consumes. Our investigation into how these systems work points to two main culprits. The first is the vast pool of information the AI learns from. The second is the way its core algorithms are constructed.

Biased training data is a significant source of this skew. Many AI co-pilots are trained on enormous datasets. Imagine those datasets full of enterprise-level launch examples, while bootstrapped indie successes are underrepresented. The system consequently 'learns' that big-budget strategies are the default path. Its recommendations may then steer indie makers towards resource-heavy tactics unsuited to their operations.

Algorithmic design flaws also contribute to biased outputs. We've observed that these designs can introduce new skews or amplify biases already present in the data. Even carefully crafted algorithms operate on certain assumptions. An algorithm that values 'scale', for instance, might ignore 'personal touch', and that personal connection is often vital for indie success.

Together, these factors create significant 'blindspots'. The system's understanding often lacks rich, indie-specific operational data, which directly limits how relevant its advice is for solopreneurs. Community-reported experiences repeatedly describe these systems recommending enterprise solutions, leaving indie makers facing a mismatch. That is a big problem.

The Real-World Fallout: How Biased AI Recommendations Hit Indie Makers Where It Hurts (UGC Examples)

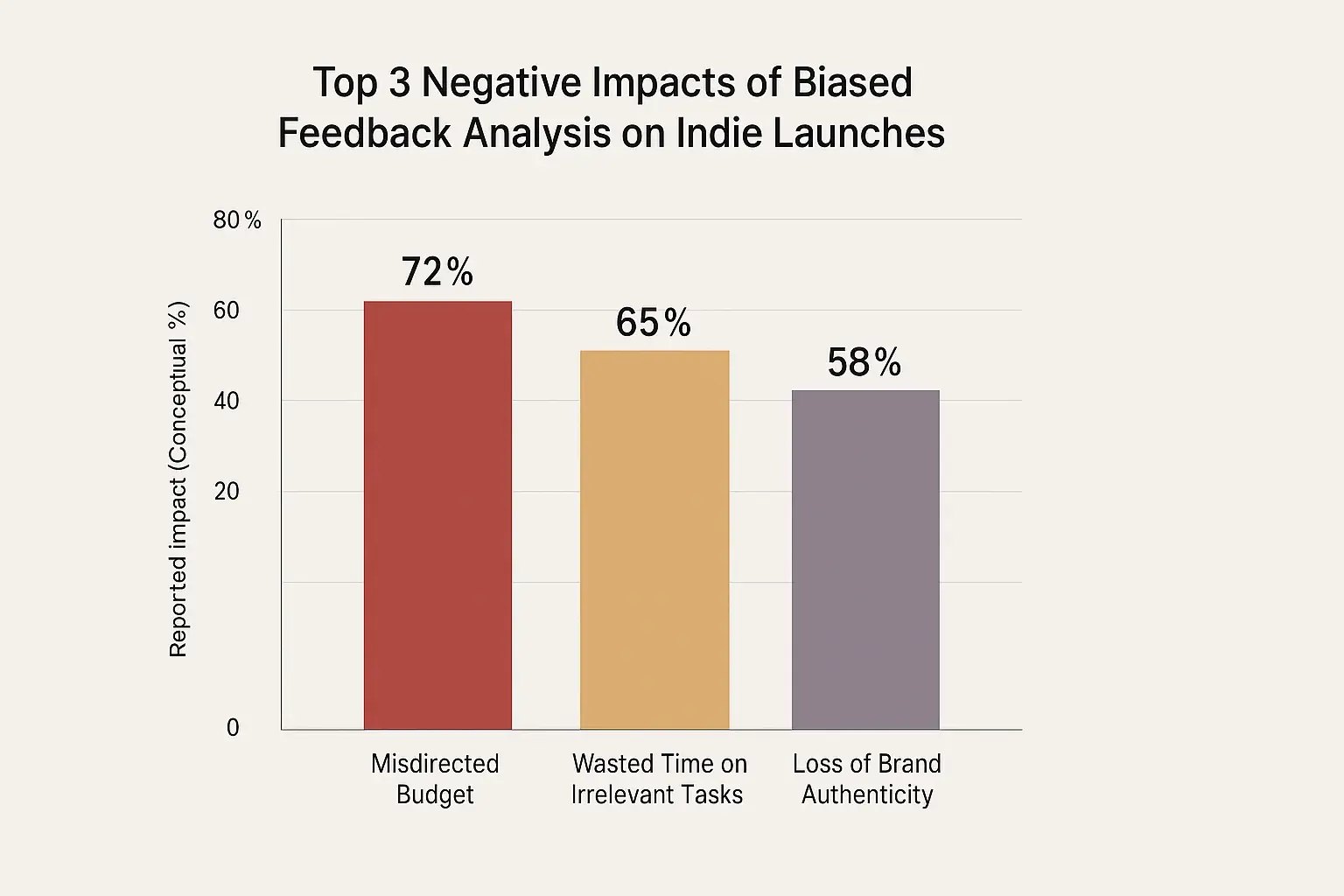

So, what happens when your launch co-pilot, unknowingly, offers advice suited for a giant corporation? For indie makers, the fallout is brutal. This isn't merely 'bad advice'. It's a misdirection of truly scarce resources. Your precious time vanishes. Your lean budget bleeds.

Many indie creators report following platform recommendations and spending weeks crafting intricate marketing, only to find it fails to connect with their specific niche audience. The frustration is palpable in user discussions. It is also a massive drain on time that would have been better spent refining the product or engaging directly with their true supporters. Community-reported experiences show that this wasted effort is a common, painful theme for resource-strapped founders.

Our analysis of user-generated content reveals another harsh reality, this time about money. Some AI-suggested advertising platforms and complex strategies require substantial spending. Indie makers who follow them burn through their modest ad budgets and see almost no return. As numerous indie forums highlight, the platform's 'big company' dataset led to a painful financial hit, and this misdirection appears to affect many small teams who trusted generalized advice.

Your unique brand voice suffers too. Countless user stories describe generic content suggestions emerging from these tools. This AI-generated copy often sounds corporate and impersonal, and it can alienate the vital, personal connection an indie has with their audience. Authenticity erodes. This particular loss is difficult to measure precisely, but its impact, as shared by many creators, is deeply felt and can damage long-term trust.

Spotting the Skew: How to Detect Bias in Your AI Co-pilot's Recommendations (A Practical Indie Guide)

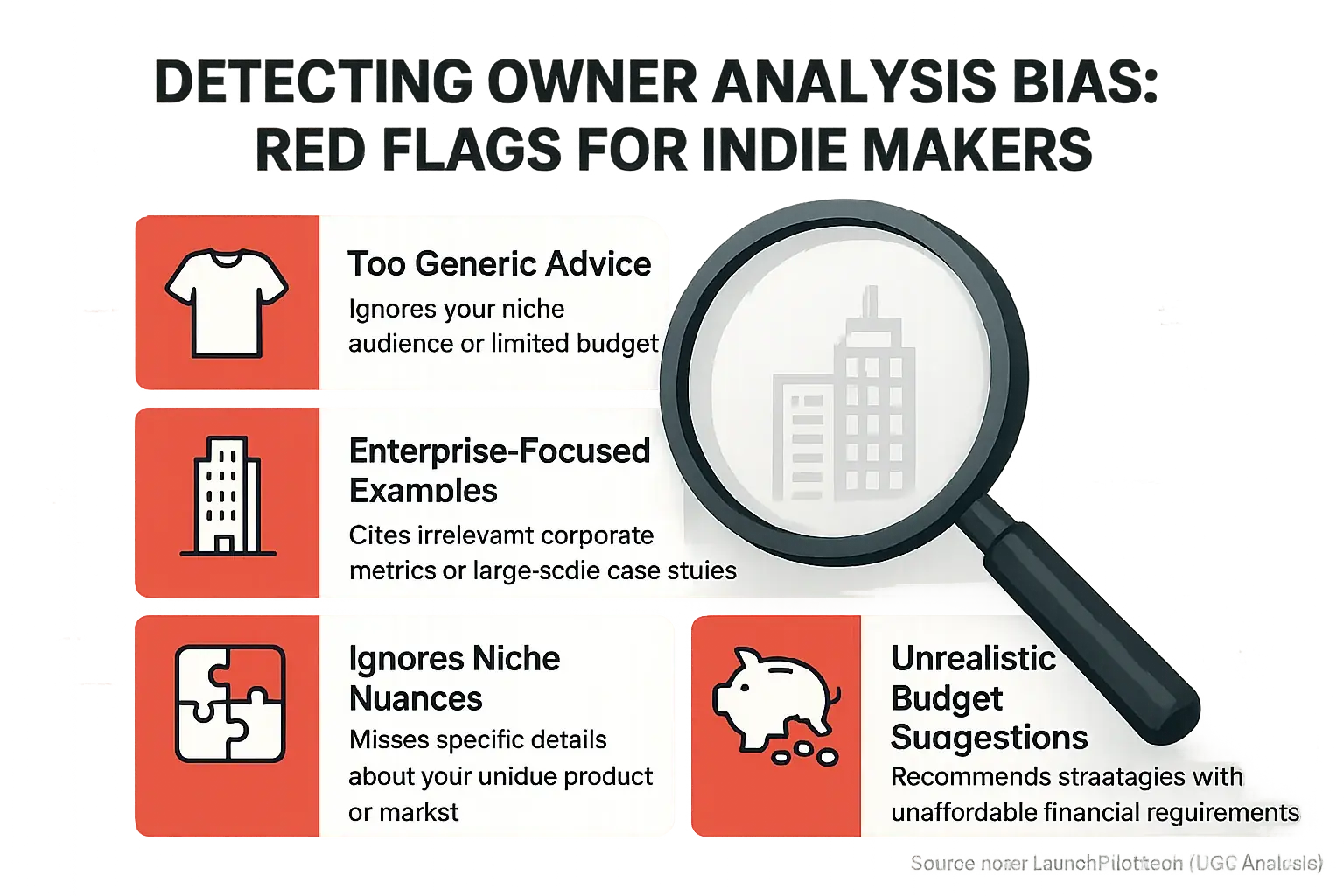

Okay, how do you, as an indie maker, become your own 'bias detector' for your co-pilot? It comes down to sharp critical thinking; you do not need to be an AI expert. What countless indie makers have learned is that knowing what to look for is key. That skill protects your project.

Watch for generic, one-size-fits-all advice. This is a classic, community-vetted warning sign. Your co-pilot might suggest a 'viral marketing campaign' that sounds perfect for a giant tech company but completely ignores your niche audience or tiny budget. That's a red flag. Indie makers frequently report AI co-pilots struggling to offer truly tailored guidance.

Another major red flag? Enterprise-focused examples or metrics. Your co-pilot might cite case studies from huge corporations or discuss key performance indicators irrelevant to your lean operation. When your AI tool mentions 'enterprise-level scalability' for your solo SaaS launch, question it. If it emphasizes 'millions in ad spend ROI', it is likely drawing on a dataset misaligned with indie realities, a pattern that shows up repeatedly in user-generated content.

Does the co-pilot's advice consistently miss your specific niche details, or disregard your clear budget constraints? Community-reported experiences flag this as a sign of potential bias. If the insights feel 'off' for your unique product, your audience, or your financial reality, that's significant. For indie makers, that gut feeling is often a valid indicator that the recommendations might not truly serve you.
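If you want to make these red-flag checks a little more systematic, a short script can scan co-pilot output for enterprise-flavored phrases and compare suggested spend against what you actually have. The sketch below is a minimal illustration, not a validated bias detector: the phrase list `ENTERPRISE_RED_FLAGS` and the helpers `find_red_flags` and `exceeds_budget` are hypothetical names invented for this example, and the patterns are assumptions you would tune to your own niche. It assumes Python 3.9 or later.

```python
import re

# Hypothetical, illustrative phrase list: patterns that often signal
# enterprise-scale assumptions. Tune it to your own niche and audience.
ENTERPRISE_RED_FLAGS = (
    r"enterprise[- ]level",
    r"scalability",
    r"millions? in ad spend",
    r"dedicated (?:sales|marketing) team",
    r"global rollout",
    r"viral marketing campaign",
)


def find_red_flags(recommendation: str, patterns: tuple[str, ...] = ENTERPRISE_RED_FLAGS) -> list[str]:
    """Return the phrases in a co-pilot recommendation that match a red-flag pattern."""
    hits = []
    for pattern in patterns:
        match = re.search(pattern, recommendation, flags=re.IGNORECASE)
        if match:
            hits.append(match.group(0))  # keep the exact wording the co-pilot used
    return hits


def exceeds_budget(suggested_spend: float, available_budget: float) -> bool:
    """Flag a suggestion whose cost is larger than the budget you actually have."""
    return suggested_spend > available_budget


advice = "Focus on enterprise-level scalability and plan for millions in ad spend."
print(find_red_flags(advice))  # ['enterprise-level', 'scalability', 'millions in ad spend']
print(exceeds_budget(suggested_spend=5000, available_budget=300))  # True
```

A match does not prove bias on its own; it simply tells you which suggestions deserve a closer look through your own indie lens before you spend time or money on them.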

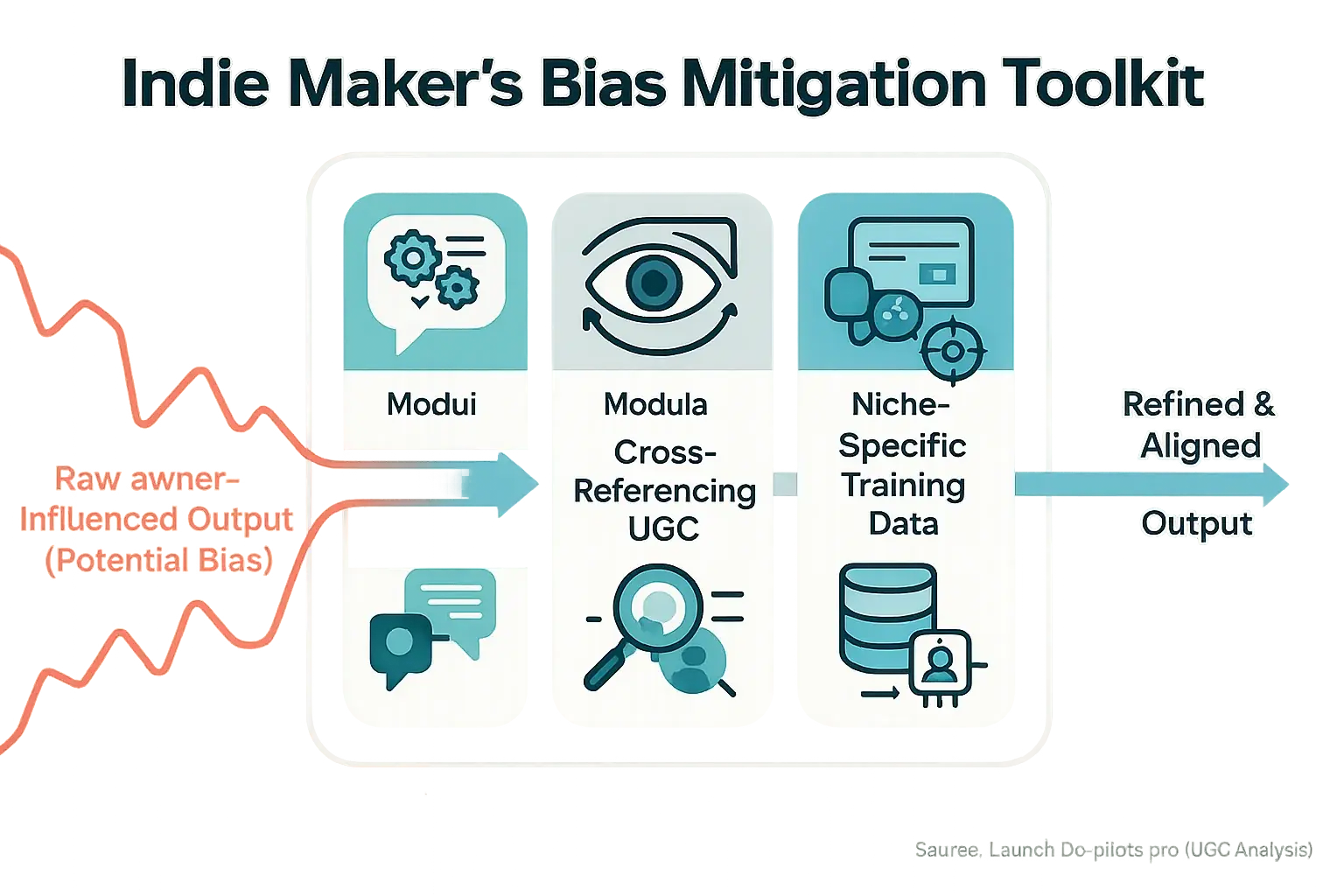

Fighting the Skew: Practical Strategies to Mitigate AI Co-pilot Bias (Indie Maker's Toolkit)

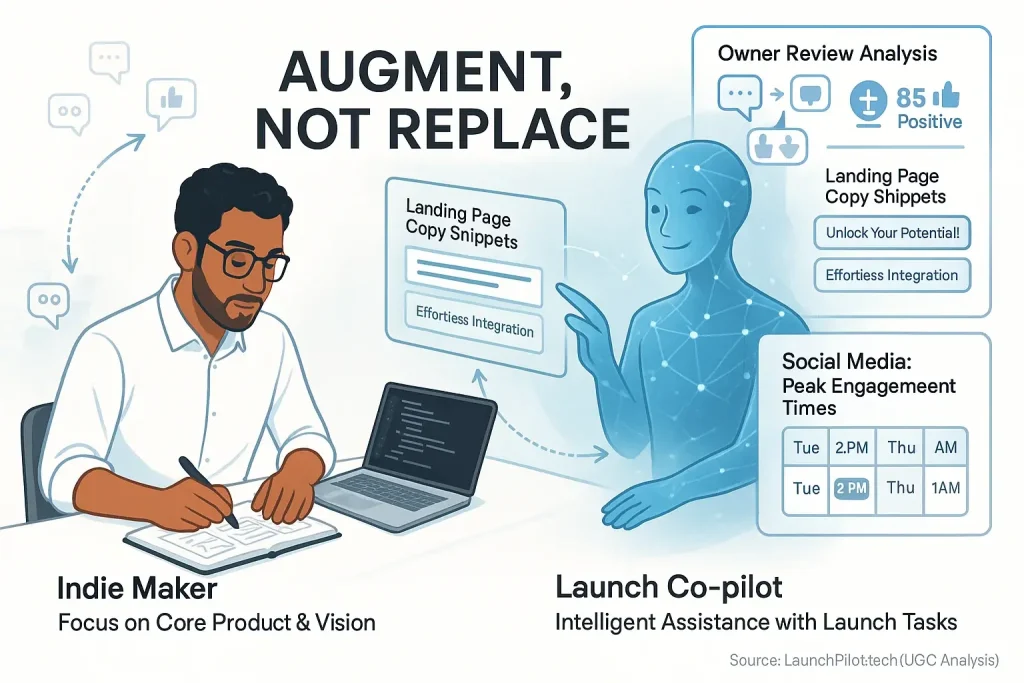

You spotted the bias. Now what? The good news: you are not powerless. Indie makers have developed clever methods to 're-align' their AI co-pilots, and they all center on smart human input from you. Mastering them makes you a better AI whisperer.

Hyper-specific prompting works wonders; our analysis of effective AI use cases reveals this pattern. Do not just ask: 'Write ad copy.' Instead, detail your request. For instance: 'Write ad copy for a bootstrapped SaaS targeting solo developers who struggle with burnout, for a Product Hunt launch, keeping it empathetic and concise, under 50 words.' The more context you feed in, the less room the tool has to default to generic, biased assumptions. Specificity is your friend here.
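One way to make hyper-specific prompting a habit is to refuse to send a prompt until every piece of context is filled in. The following Python sketch is a hypothetical helper, not part of any co-pilot's API: the `LaunchContext` fields and the `build_prompt` function are illustrative assumptions that simply reassemble the example prompt above from its parts.

```python
from dataclasses import dataclass


@dataclass
class LaunchContext:
    """Hypothetical container for the context an indie launch prompt should carry."""
    product: str     # e.g. "a bootstrapped SaaS"
    audience: str    # who you are actually selling to
    pain_point: str  # the problem they feel
    channel: str     # where the copy will run
    tone: str        # how it should sound
    max_words: int   # hard length limit


def build_prompt(task: str, ctx: LaunchContext) -> str:
    """Refuse vague prompts: every context field must be filled before one is built."""
    missing = [name for name, value in vars(ctx).items() if value in ("", None)]
    if missing:
        raise ValueError(f"Add context before prompting: {', '.join(missing)}")
    return (
        f"{task} for {ctx.product} targeting {ctx.audience} "
        f"who struggle with {ctx.pain_point}, for a {ctx.channel}, "
        f"keeping it {ctx.tone}, under {ctx.max_words} words."
    )


ctx = LaunchContext(
    product="a bootstrapped SaaS",
    audience="solo developers",
    pain_point="burnout",
    channel="Product Hunt launch",
    tone="empathetic and concise",
    max_words=50,
)
print(build_prompt("Write ad copy", ctx))
```

Printing the result reproduces the example prompt word for word. The design choice is deliberately strict: an empty field raises an error instead of silently producing a generic prompt, which is exactly the gap a co-pilot would otherwise fill with its default, enterprise-leaning assumptions.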

Then there is the Human Oversight Loop, a critical step to remember. Your AI co-pilot is an assistant, not a replacement for your judgment. Always review, edit, and filter co-pilot output through your unique indie lens. Does it truly resonate with your specific audience? Does it align with your actual budget and resources? That human touch becomes your ultimate bias filter. Your intuition matters.

Cross-referencing with community wisdom helps immensely, and synthesized indie maker feedback strongly supports this. If a co-pilot recommendation seems off, investigate. Check what real indie makers discuss on forums and in dedicated communities. Does their lived experience match the co-pilot's suggestion? This collective insight frequently provides a potent reality check, guarding against AI bias and grounding suggestions in real-world application.

Some indie makers also explore a more advanced approach: feeding the AI niche-specific data. If your chosen AI system allows custom training or fine-tuning with your own datasets, this technique can sometimes refine outputs further, tailoring responses more closely to your unique corner of the market. However, this path often requires more technical skill and dedicated resources.

The Call for Clarity: Why Indie Makers Demand More Explainable AI Co-pilots (The Future of Trust)

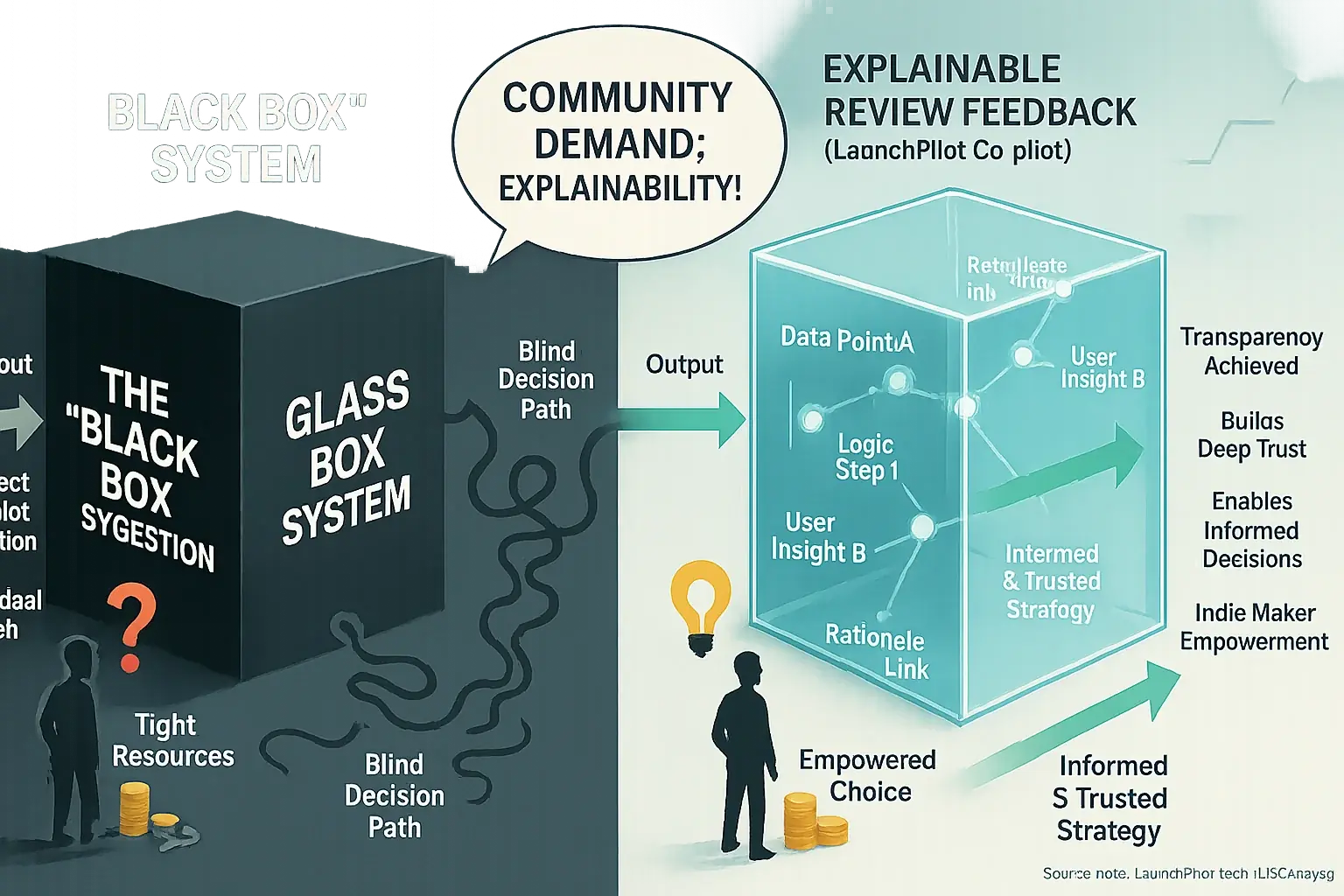

As AI launch co-pilots become deeply embedded in indie workflows, a powerful community demand is rising: explainable AI. This is what we hear consistently from indie makers. They seek real understanding. Makers want to know why a co-pilot suggests a specific path, not just the destination itself.

Why is this demand so intense among indies? Imagine a common scenario, echoed in countless user discussions. An AI co-pilot suggests a radical pivot in launch strategy. An indie maker with tight resources simply cannot afford to follow that suggestion blindly. Understanding the co-pilot's underlying logic, including the specific data points behind its conclusion, becomes essential. This kind of transparency builds deep user trust and enables truly informed decisions rather than mere hope.

This collective push for explainability is not a fleeting tech trend. Our analysis of aggregated user experiences indicates that it signals a fundamental shift towards more responsible AI development, a movement that is gaining momentum. Indie makers are at the forefront, actively shaping this future. They champion co-pilot technology that is not just powerful, but also transparent and genuinely trustworthy. Through their collective voice, indie creators are pushing to turn opaque 'black boxes' into transparent 'glass boxes' for their critical launch decisions.

Navigating the AI Bias Blindspot: Your Indie Advantage

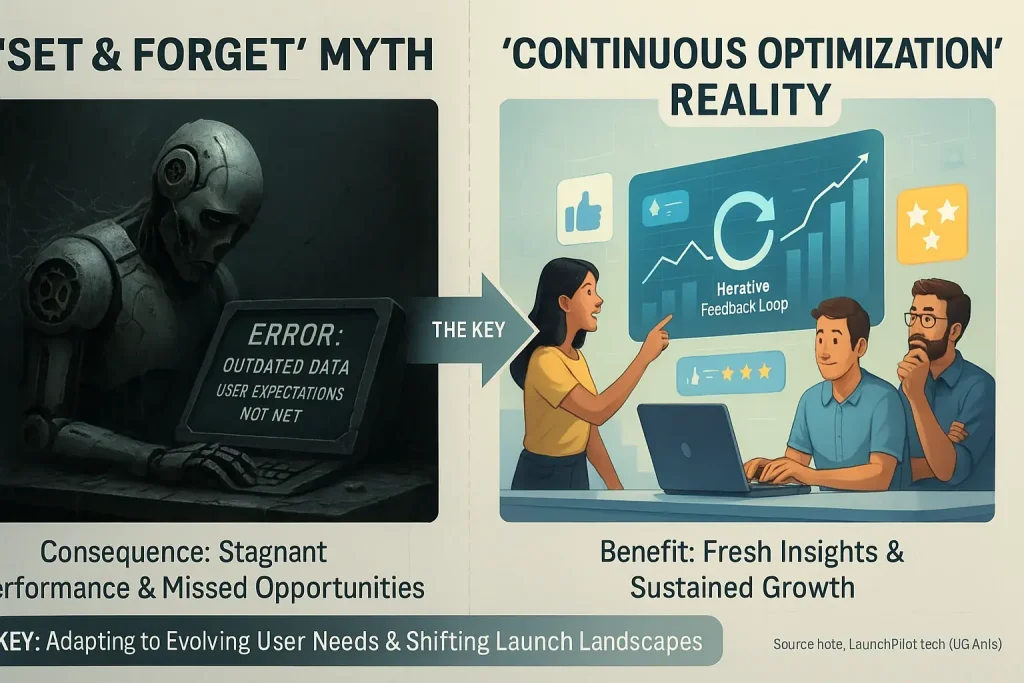

Launch co-pilots offer powerful AI assistance, but their recommendations can carry hidden biases. These biases often emerge from training data or specific algorithmic choices. Recognizing these potential skews is your first, vital step towards indie success.

Your indie advantage here is significant: critical thinking and deep niche understanding empower your choices. Always cross-reference co-pilot suggestions with the real-world wisdom found in extensive user discussions. Stay the pilot of your AI, and you can transform a potential blindspot into your strongest strategic asset.