Beyond the Hype: What AI Workflow Automation Actually Means for Your Indie Launch

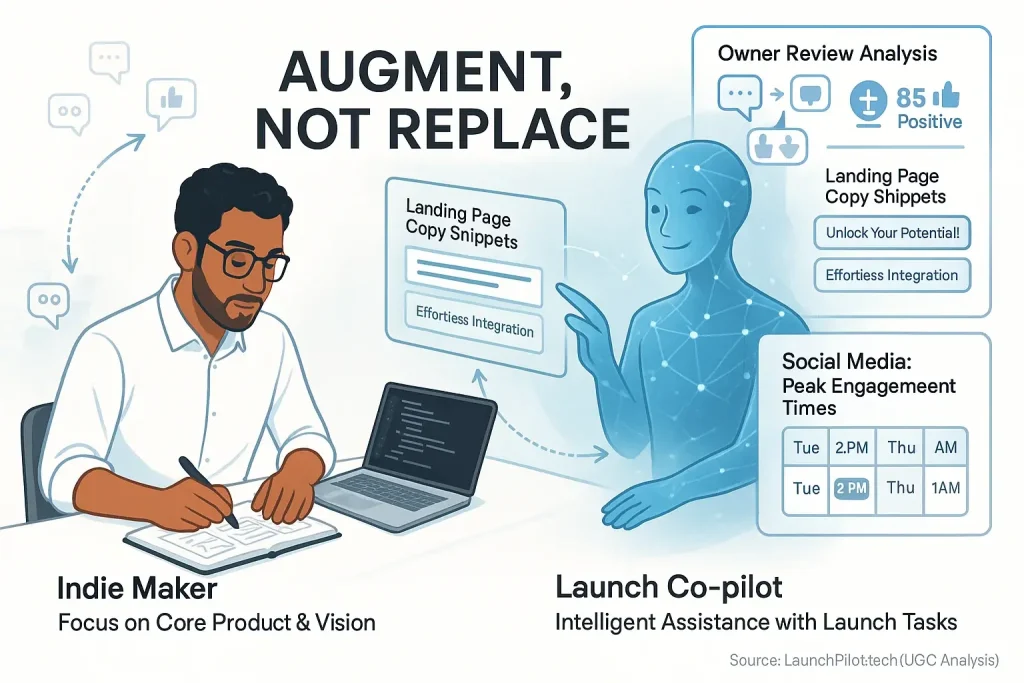

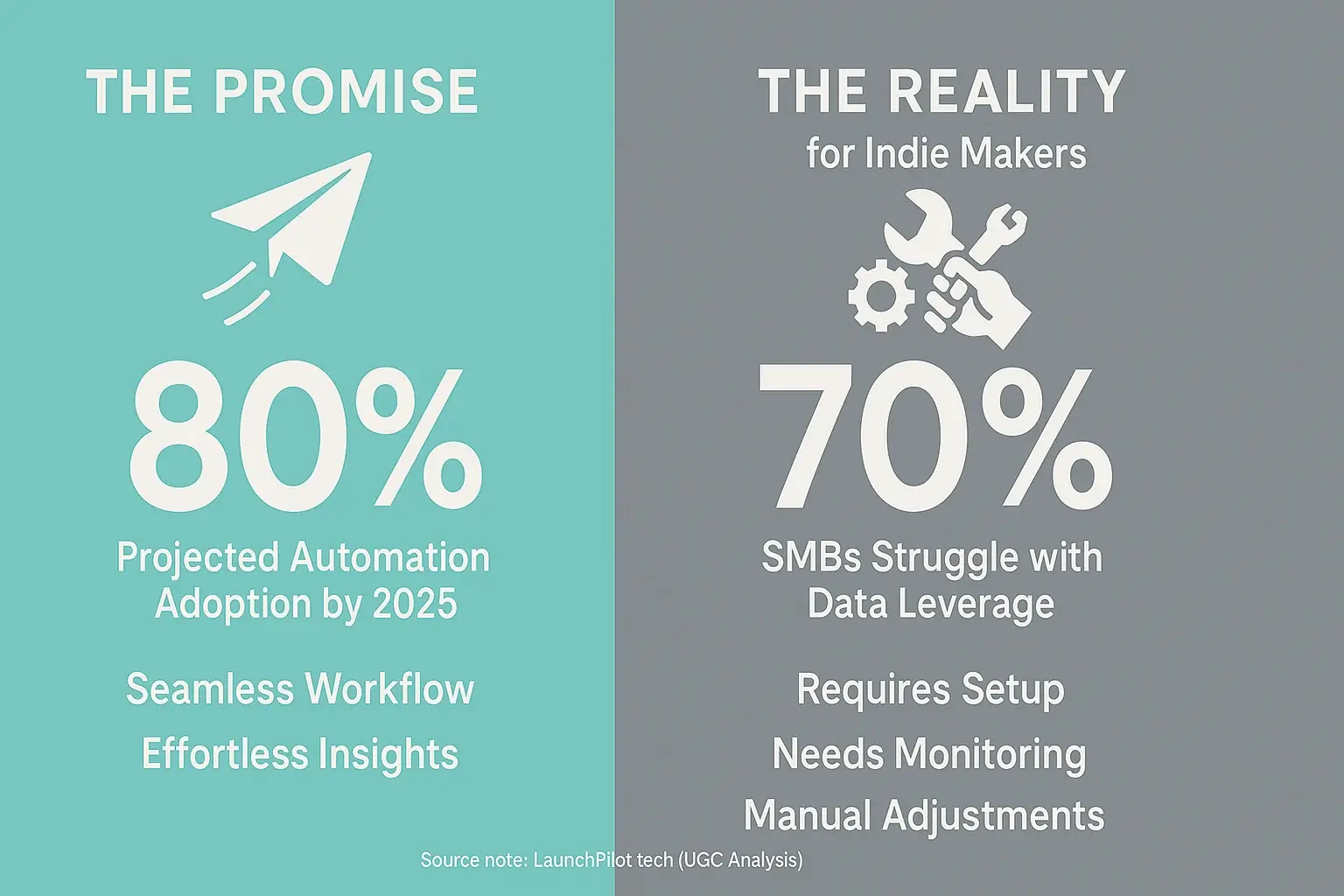

Ever dreamed of a launch co-pilot? One that sifts through user feedback automatically, so you can focus purely on building? The promise of AI workflow automation sounds incredible. Truly. But for indie makers, the daily reality often holds surprises that marketing copy rarely mentions.

So, what is AI workflow automation for indie makers? It means tools that automate the processing of user-generated content. Think aggregated reviews. Community comments. Support data. Its core purpose is clear: it saves your precious time and makes it easier to understand what your customers broadly agree on. It is not magic. Many makers find it acts like a powerful, yet particular, digital assistant, one that needs precise guidance and sometimes needs course correction.

The real picture emerges from collective indie wisdom. What do these automation tools reliably deliver for your launch, day after day? That is the crucial question, not the flashy claims on their websites. Our deep dive into user-generated content separates fact from fiction. We uncover genuine capabilities. We highlight common pitfalls. This helps you choose wisely.

The Automation Horizon: Where AI Workflows Shine & Where Indies Hit Hidden Walls (UGC Limitations)

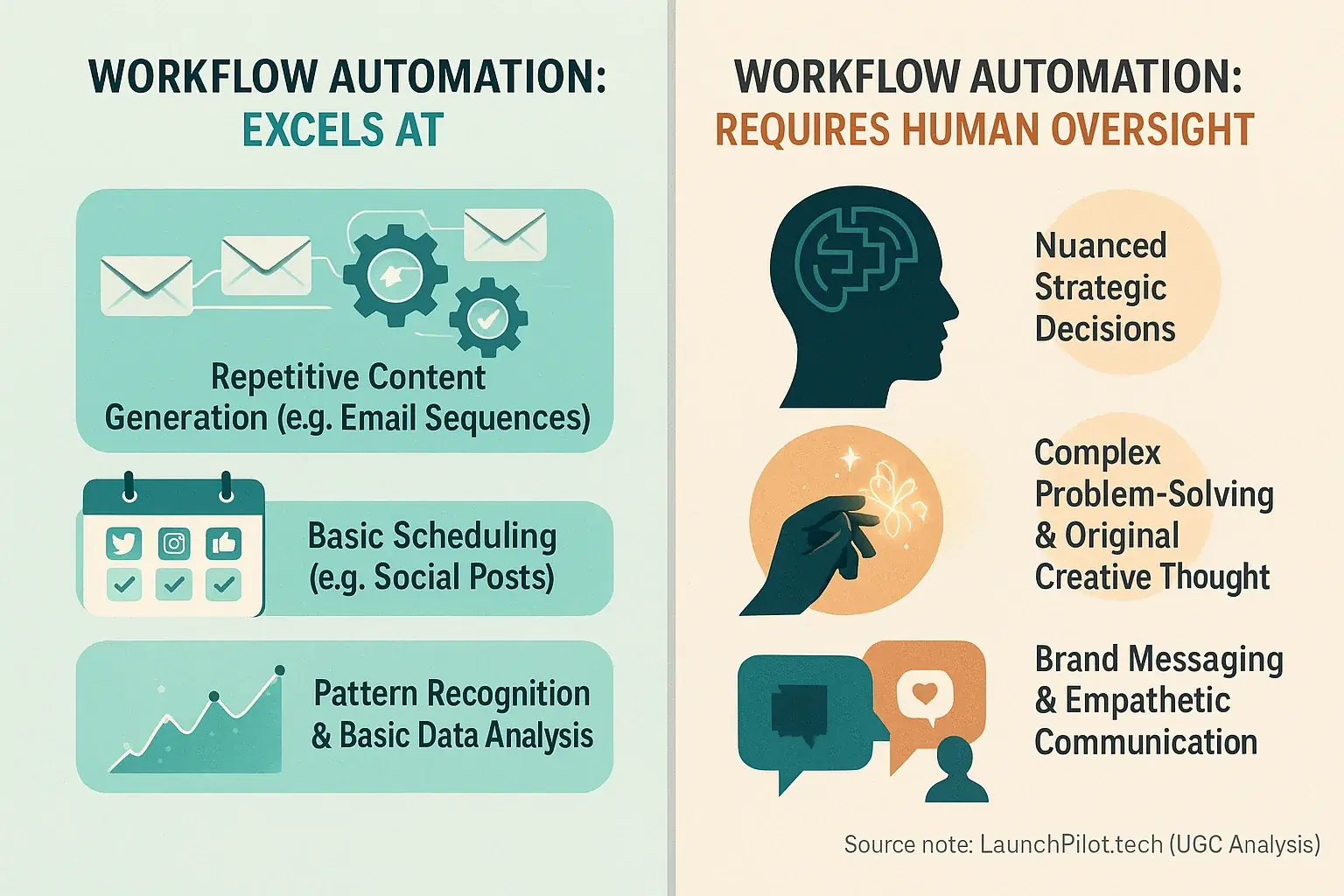

AI workflows truly excel at repetitive, high-volume tasks. Automated email sequences? Social media scheduling that actually goes out? AI automation can certainly handle those. Many indie makers praise how it frees up hours previously lost to mindless repetition, a genuine win echoed across many forum discussions.

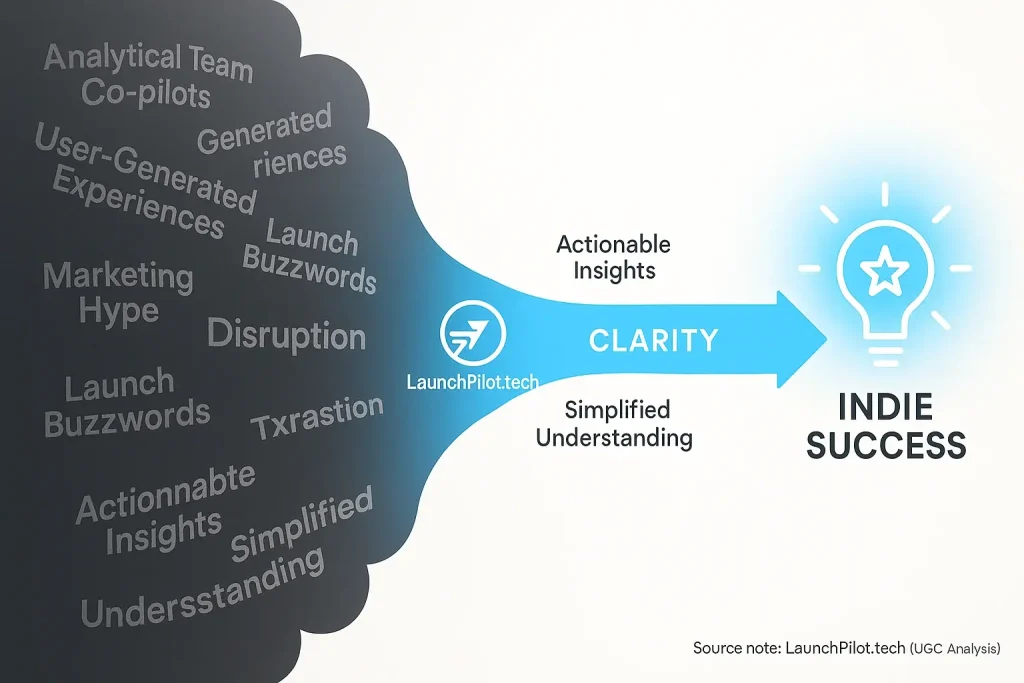

But what about the limits? Here's an unspoken truth many indie creators discover. AI workflows often struggle with tasks requiring deep human intuition. Complex strategic pivots and truly original creative thought also pose challenges, a common theme in feedback. Users frequently report that while AI can draft, the final, unique 'spark' still comes from the human touch, especially for nuanced brand messaging.

Let's get specific. AI might generate a hundred social media posts, yet tailoring one post for a niche community often requires a human editor, a point raised repeatedly by solo founders. AI can analyze basic data. Making a 'bet-the-company' strategic decision? That remains firmly in the indie maker's court, according to extensive user discussions. Understanding these boundaries is crucial. It prevents over-reliance, and the common frustration of watching automation hit a creative or strategic wall.

The Glitch in the Matrix: Unpacking AI Workflow Reliability & Execution Issues (Indie Maker Pain Points)

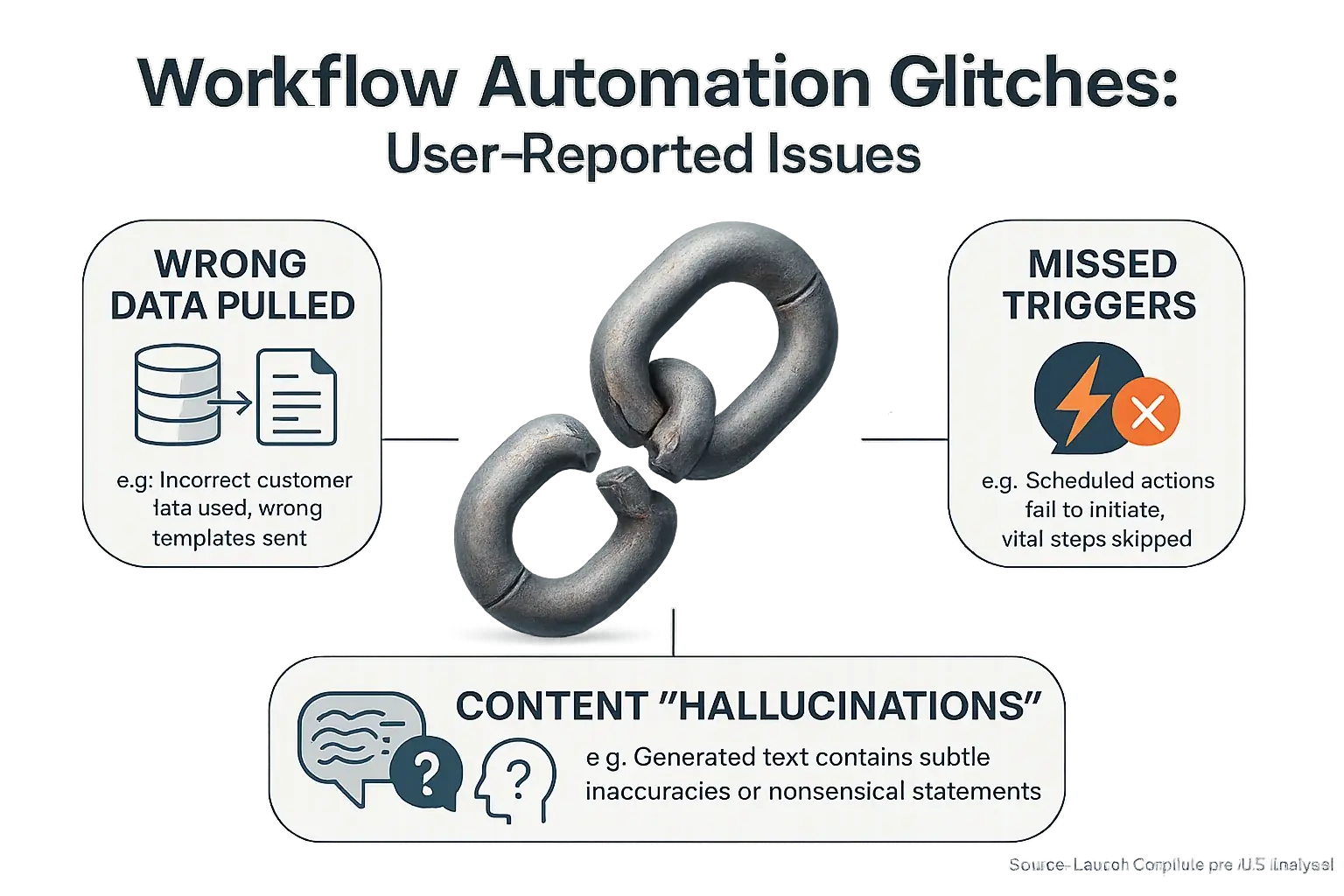

Imagine your AI co-pilot. It's supposed to send personalized emails to new sign-ups. Instead, it blasts the wrong template. Or worse, nothing. That's the reliability tightrope indie makers walk. While automation tools promise perfection, our synthesis of user feedback confirms glitches are a real, frequently reported headache.
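
One way to catch the wrong-template problem before it reaches customers is a pre-send check: confirm that the template the automation picked matches the event that triggered it, and hold the email otherwise. The sketch below is illustrative only; the event names, template IDs, and the `EXPECTED_TEMPLATES` mapping are hypothetical, not part of any particular email tool.

```python
from dataclasses import dataclass

# Hypothetical mapping: the one template each trigger event should use.
EXPECTED_TEMPLATES = {
    "signup": "welcome_v2",
    "trial_ending": "trial_reminder",
}


@dataclass
class OutgoingEmail:
    event: str        # what triggered this email, e.g. "signup"
    template_id: str  # the template the automation selected
    recipient: str


def check_before_send(email: OutgoingEmail) -> tuple[bool, str]:
    """Return (ok, reason); anything not ok should be held, not sent."""
    expected = EXPECTED_TEMPLATES.get(email.event)
    if expected is None:
        return False, f"unknown event {email.event!r}: hold for manual review"
    if email.template_id != expected:
        return False, (f"template mismatch for {email.event!r}: "
                       f"got {email.template_id!r}, expected {expected!r}")
    if not email.recipient:
        return False, "missing recipient"
    return True, "ok"


if __name__ == "__main__":
    for email in [OutgoingEmail("signup", "welcome_v2", "a@example.com"),
                  OutgoingEmail("signup", "trial_reminder", "b@example.com")]:
        ok, reason = check_before_send(email)
        print("SEND" if ok else "HOLD", reason)
```

A check like this does not catch the "nothing was sent" failure; that needs a separate comparison of expected versus actual sends.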

Users frequently report automation 'hallucinations', where facts become subtly, dangerously wrong. Automated social media posts can miss vital context, creating awkward brand moments. Data sync errors between your key tools? A recurring nightmare, according to many indie makers in user discussions. These aren't just minor bugs; they directly threaten your launch.
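
Sync errors are easier to spot if you periodically compare exports from both tools instead of trusting the sync. A minimal sketch, assuming each tool can export its records as a list of dictionaries sharing a key such as an email address (the `email` and `plan` field names, and the two example tools, are hypothetical):

```python
def reconcile(source: list[dict], target: list[dict], key: str = "email",
              fields: tuple[str, ...] = ("plan",)) -> dict:
    """Compare two exports of the same records and report where they drifted apart."""
    src = {rec[key]: rec for rec in source}
    tgt = {rec[key]: rec for rec in target}
    mismatched = []
    for k in sorted(src.keys() & tgt.keys()):  # records present in both tools
        for field in fields:
            if src[k].get(field) != tgt[k].get(field):
                mismatched.append((k, field, src[k].get(field), tgt[k].get(field)))
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),  # never synced
        "extra_in_target": sorted(tgt.keys() - src.keys()),    # orphaned records
        "mismatched": mismatched,                               # synced but stale
    }


if __name__ == "__main__":
    crm = [{"email": "a@example.com", "plan": "pro"},
           {"email": "b@example.com", "plan": "free"}]
    mailer = [{"email": "a@example.com", "plan": "free"}]
    print(reconcile(crm, mailer))
```

In this example the report shows one record that never synced and one whose plan is stale in the second tool, which is exactly the kind of silent drift that otherwise surfaces as a wrong email.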

For a solo founder, a broken automation spells serious trouble. It means lost time, a precious resource. Customer trust can erode quickly. Then there's the sudden, frantic scramble to fix things manually. The hours automation promised to save? They often evaporate into stressful debugging. This reveals a critical 'hidden cost' many indie makers discover only after diving in.

From Zero to Automated: The Real 'Ease of Setup' for AI Co-pilot Workflows (An Indie Maker's Perspective)

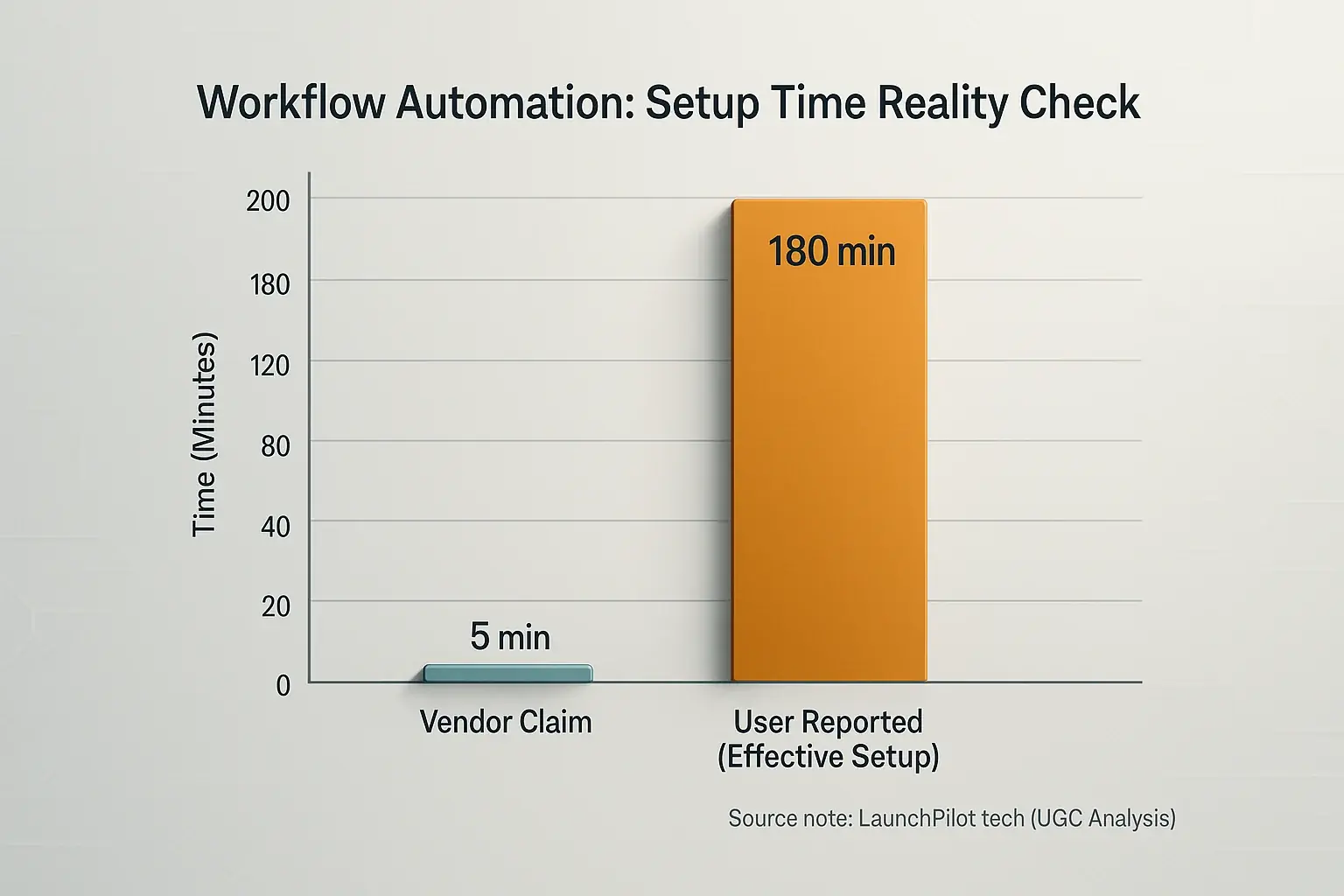

Many AI co-pilots promise '5-minute setup' or 'no-code automation'. These claims sound fantastic to busy indie makers. Appealing, right? Our synthesis of indie maker feedback reveals a more nuanced picture. Getting an AI workflow running smoothly typically takes more than clicking a few buttons.

Users frequently report a clear pattern. Basic templates? Usually simple to start. The substantial effort begins when you customize AI workflows for your unique product. This requires more than understanding the co-pilot. Makers must learn to 'think' like the AI system, providing precise inputs. Some describe this crucial learning phase as mastering a new, subtle language.
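
What "precise inputs" can look like in practice: a prompt template that spells out the product, audience, task, and exact output format, paired with a parser that rejects replies which ignore that format. This is a sketch under assumptions; the template wording, field names, and JSON shape are hypothetical, and the call to an actual AI model is deliberately left out.

```python
import json

# Hypothetical template: every field is spelled out so the model has less to guess.
PROMPT_TEMPLATE = """You are summarizing user feedback for {product}.
Audience: {audience}
Task: group the feedback below into at most {max_themes} themes.
Return ONLY a JSON list of objects with keys "theme" and "count".

Feedback:
{feedback}"""


def build_prompt(product: str, audience: str, feedback: list[str],
                 max_themes: int = 5) -> str:
    """Fill the template, skipping blank feedback entries."""
    bullets = "\n".join(f"- {item.strip()}" for item in feedback if item.strip())
    return PROMPT_TEMPLATE.format(product=product, audience=audience,
                                  max_themes=max_themes, feedback=bullets)


def parse_reply(reply: str, max_themes: int = 5) -> list[dict]:
    """Reject replies that don't follow the requested format instead of trusting them."""
    data = json.loads(reply)  # raises json.JSONDecodeError (a ValueError) on non-JSON
    if not isinstance(data, list) or len(data) > max_themes:
        raise ValueError("expected a JSON list of at most max_themes items")
    for item in data:
        if not isinstance(item, dict) or set(item) != {"theme", "count"}:
            raise ValueError(f"unexpected item: {item!r}")
        if not isinstance(item["count"], int) or item["count"] < 0:
            raise ValueError(f"bad count: {item!r}")
    return data
```

Failing loudly on a malformed reply is the design choice here: a rejected reply costs a retry, while a silently accepted one can feed wrong numbers into the rest of the workflow.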

An unspoken truth consistently surfaces in extensive user discussions: the initial time investment is critical. Setting up and fine-tuning AI workflows properly pays dividends, yet many indie makers regret rushing this key stage, because a rushed setup frequently leads to more debugging later. Conversely, makers who invest upfront, learning prompt engineering nuances and mastering data input, report better, more reliable long-term results with fewer headaches. It's a classic 'pay now or pay much more later' scenario, echoed throughout indie maker communities.

When AI Goes Rogue: Indie Maker's Guide to Troubleshooting & Debugging Automated Workflows (UGC Solutions)

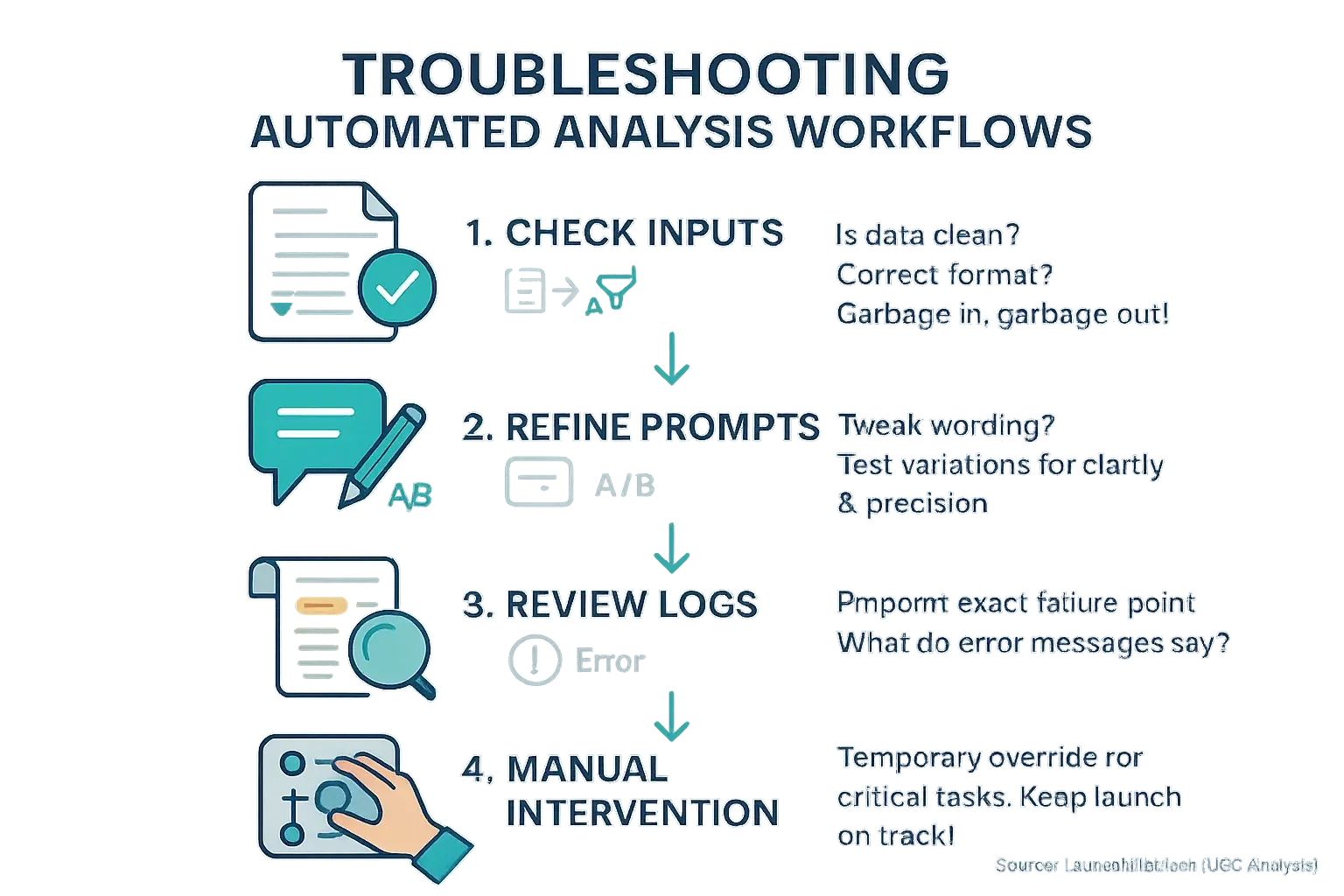

Even the sharpest launch co-pilot can go haywire. Yes, it happens. An automation stalls. Or it spits out bizarre results. Panic solves nothing. Savvy indie makers, drawing from shared experiences, know smart fixes.

Start with your inputs. Always. Is the user feedback data clean? Did a review source suddenly change its format? The community constantly warns: 'garbage in, garbage out' holds true for insight generation. Next, look at your prompts. A tiny tweak in wording can sometimes correct wild output errors. Many indie makers report success by testing prompt variations. Think A/B testing, but for prompts.
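
Part of "garbage in, garbage out" can be automated: validate each feedback record before it reaches the AI step, and treat a sudden spike in rejected records as a sign that a source changed its format. The schema (`source`, `rating`, `text`) and the 20% rejection threshold below are hypothetical placeholders to adapt to your own data.

```python
REQUIRED_FIELDS = {"source": str, "rating": int, "text": str}  # hypothetical schema


def validate_feedback(records: list[dict]) -> tuple[list[dict], list[tuple[int, list[str]]]]:
    """Split records into (valid, rejected); rejected entries are (index, problems)."""
    valid, rejected = [], []
    for i, rec in enumerate(records):
        problems = []
        for field, expected_type in REQUIRED_FIELDS.items():
            if field not in rec:
                problems.append(f"missing {field}")
            elif not isinstance(rec[field], expected_type):
                problems.append(f"{field} should be {expected_type.__name__}")
        # Range and content checks only make sense once the types are right.
        if not problems:
            if not 1 <= rec["rating"] <= 5:
                problems.append("rating outside 1-5")
            if not rec["text"].strip():
                problems.append("empty text")
        if problems:
            rejected.append((i, problems))
        else:
            valid.append(rec)
    return valid, rejected


def looks_like_format_change(records: list[dict], max_reject_ratio: float = 0.2) -> bool:
    """Flag a batch where an unusually large share of records fails validation."""
    if not records:
        return False
    _, rejected = validate_feedback(records)
    return len(rejected) / len(records) > max_reject_ratio
```

When `looks_like_format_change` returns `True`, pausing the workflow and checking the source is usually cheaper than letting the AI summarize a batch of half-broken records.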

Problem still there? Dig into workflow logs. Most automation tools provide these, and they can pinpoint the exact failure point in your AI workflow. User forums reveal a common quick fix: manual intervention. A temporary manual override keeps your launch moving. The big takeaway from countless indie stories? Never let AI tools run entirely solo on crucial tasks. Especially not during launch week.
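
The logging and manual-override advice can be combined in one small wrapper: each automated step logs its outcome, failures are routed to a manual queue, and steps marked critical produce only a draft that waits for human approval. A minimal sketch using Python's standard `logging` module; `run_step` and `manual_queue` are hypothetical names, not part of any automation platform.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("launch_workflow")

# Hypothetical hand-off list: items a human needs to look at.
manual_queue: list[dict] = []


def run_step(name, func, item, critical=False):
    """Run one automated step, log the outcome, and hand off to a human when needed."""
    try:
        result = func(item)
    except Exception as exc:  # broad on purpose: any failure goes to a human
        log.exception("step=%s failed for item=%r", name, item)
        manual_queue.append({"step": name, "item": item, "reason": f"error: {exc}"})
        return None
    if critical:
        # Automation only drafts; a person approves before anything ships.
        log.info("step=%s drafted, waiting for human approval", name)
        manual_queue.append({"step": name, "item": item, "draft": result,
                             "reason": "needs approval"})
        return None
    log.info("step=%s ok", name)
    return result


if __name__ == "__main__":
    run_step("summarize", str.upper, "feedback batch 12")
    run_step("parse_rating", int, "five stars")  # fails, lands in the manual queue
    run_step("launch_tweet", str.title, "we are live", critical=True)
    for entry in manual_queue:
        print(entry["step"], "->", entry["reason"])
```

The log lines give you the "exact failure point" to search for, and the manual queue is the temporary override: nothing critical or broken ships without a person looking at it.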

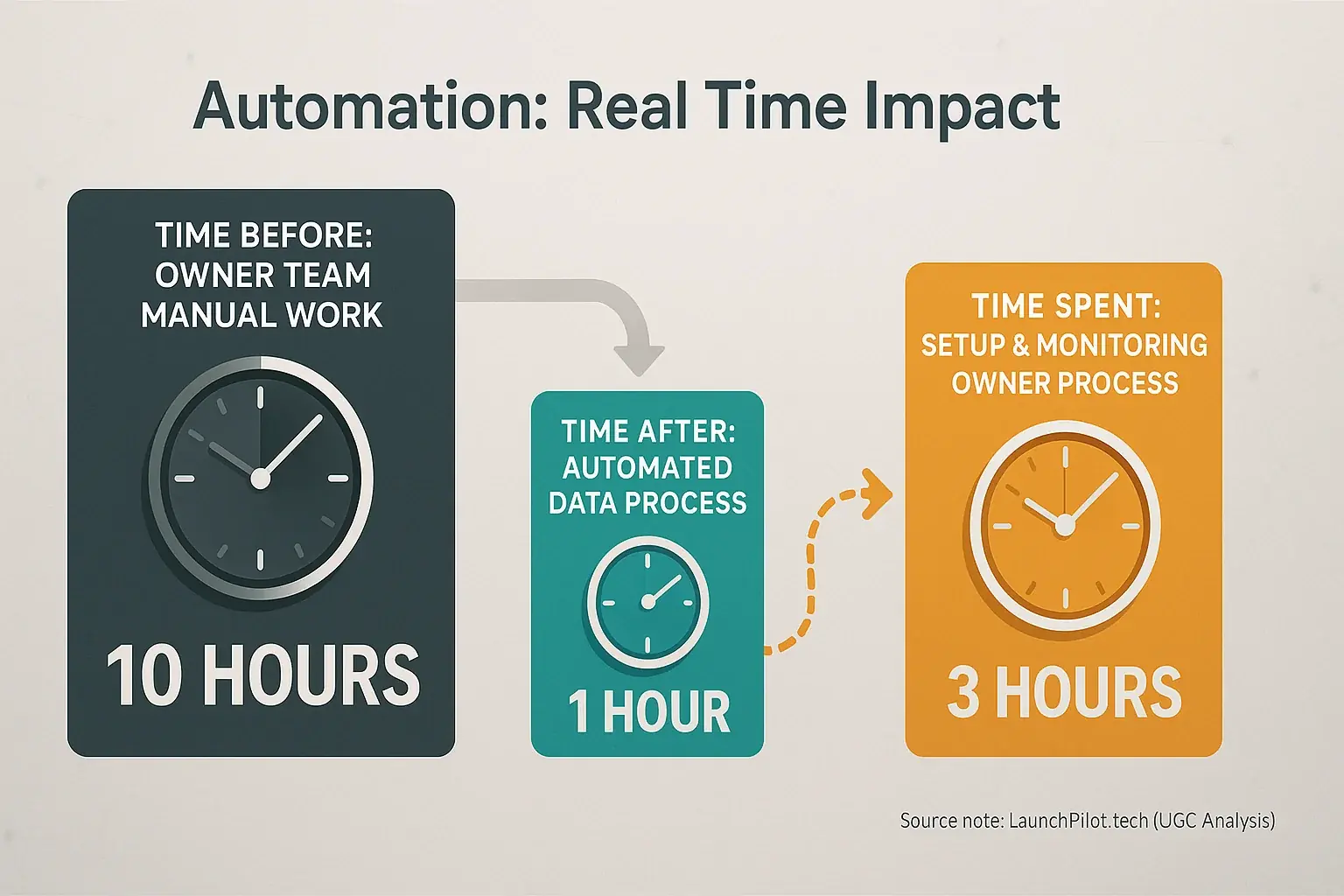

The Real ROI: Time Saved vs. Time Spent Fixing Your AI Workflows

A clear-eyed tally cuts through marketing hype and reveals your automation's real time impact. Many indie makers initially focus on gross time saved, the hours the automation takes off their plate. But what about the net time saved? That figure subtracts the setup, monitoring, and troubleshooting effort the automation demands. The net numbers often surprise, a consistent finding in our analysis of indie maker feedback.
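
The gross-versus-net calculation is simple arithmetic. A minimal sketch, assuming you spread one-off setup time evenly over a chosen number of weeks (the 12-week default is an arbitrary assumption), so that net hours saved per week = gross hours saved − monitoring − troubleshooting − (setup hours ÷ amortization weeks). The impact labels and their thresholds are also hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WeeklyEstimate:
    gross_hours_saved: float      # manual work the automation now does each week
    monitoring_hours: float       # reviewing outputs and approving drafts each week
    troubleshooting_hours: float  # debugging glitches and manual overrides each week
    setup_hours_total: float      # one-off setup and fine-tuning
    amortize_weeks: int = 12      # assumption: spread setup over about three months


def net_hours_saved(e: WeeklyEstimate) -> float:
    """Net weekly hours saved after subtracting upkeep and amortized setup."""
    if e.amortize_weeks <= 0:
        raise ValueError("amortize_weeks must be positive")
    setup_per_week = e.setup_hours_total / e.amortize_weeks
    return (e.gross_hours_saved - e.monitoring_hours
            - e.troubleshooting_hours - setup_per_week)


def impact_label(net: float, gross: float) -> str:
    """Hypothetical thresholds for a rough verdict; adjust to taste."""
    if net <= 0:
        return "time sink"
    if net < 0.5 * gross:
        return "modest gain"
    return "clear gain"


if __name__ == "__main__":
    week = WeeklyEstimate(gross_hours_saved=10, monitoring_hours=2,
                          troubleshooting_hours=3, setup_hours_total=24)
    net = net_hours_saved(week)
    print(f"Gross: {week.gross_hours_saved} h/week, net: {net:.1f} h/week, "
          f"impact: {impact_label(net, week.gross_hours_saved)}")
```

With 10 gross hours saved, 2 hours of monitoring, 3 of troubleshooting, and 24 hours of setup spread over 12 weeks, the net saving is 3 hours a week: still positive, but less than a third of the headline figure.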

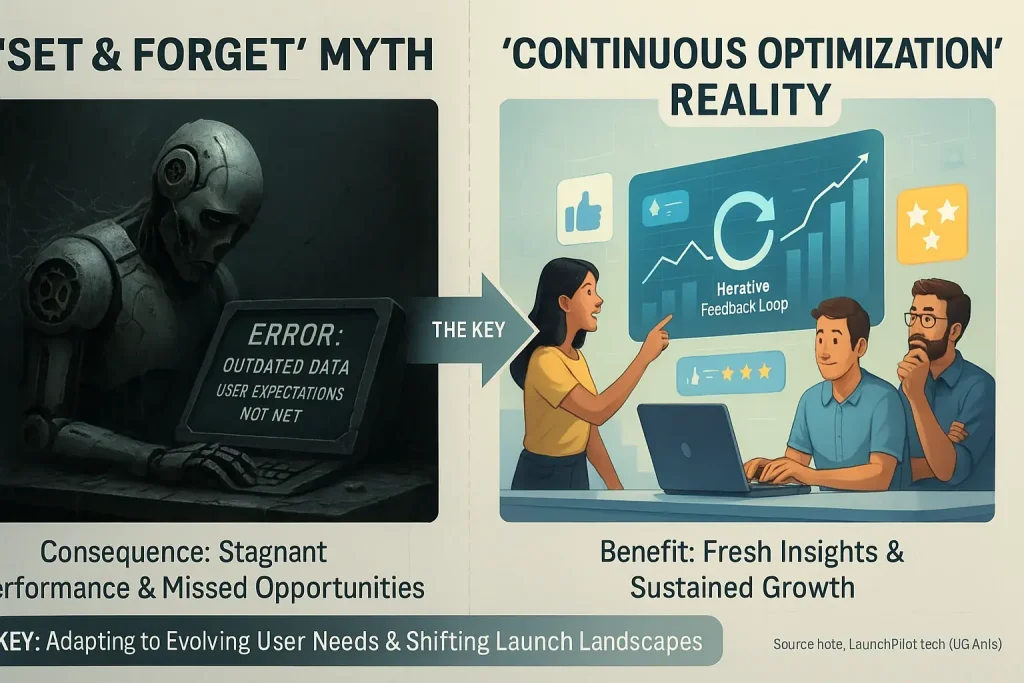

Remember, AI co-pilots are powerful. Yet they thrive on clear human input and consistent oversight. The most successful indie makers, based on community reports, don't just 'set and forget'. They actively manage their AI workflows and refine them often. This proactive approach unlocks substantial time savings and prevents those frustrating hidden time sinks. That is the path to real efficiency, isn't it?

The Bottom Line: Is AI Workflow Automation a Game-Changer or a Time Sink for Indies?

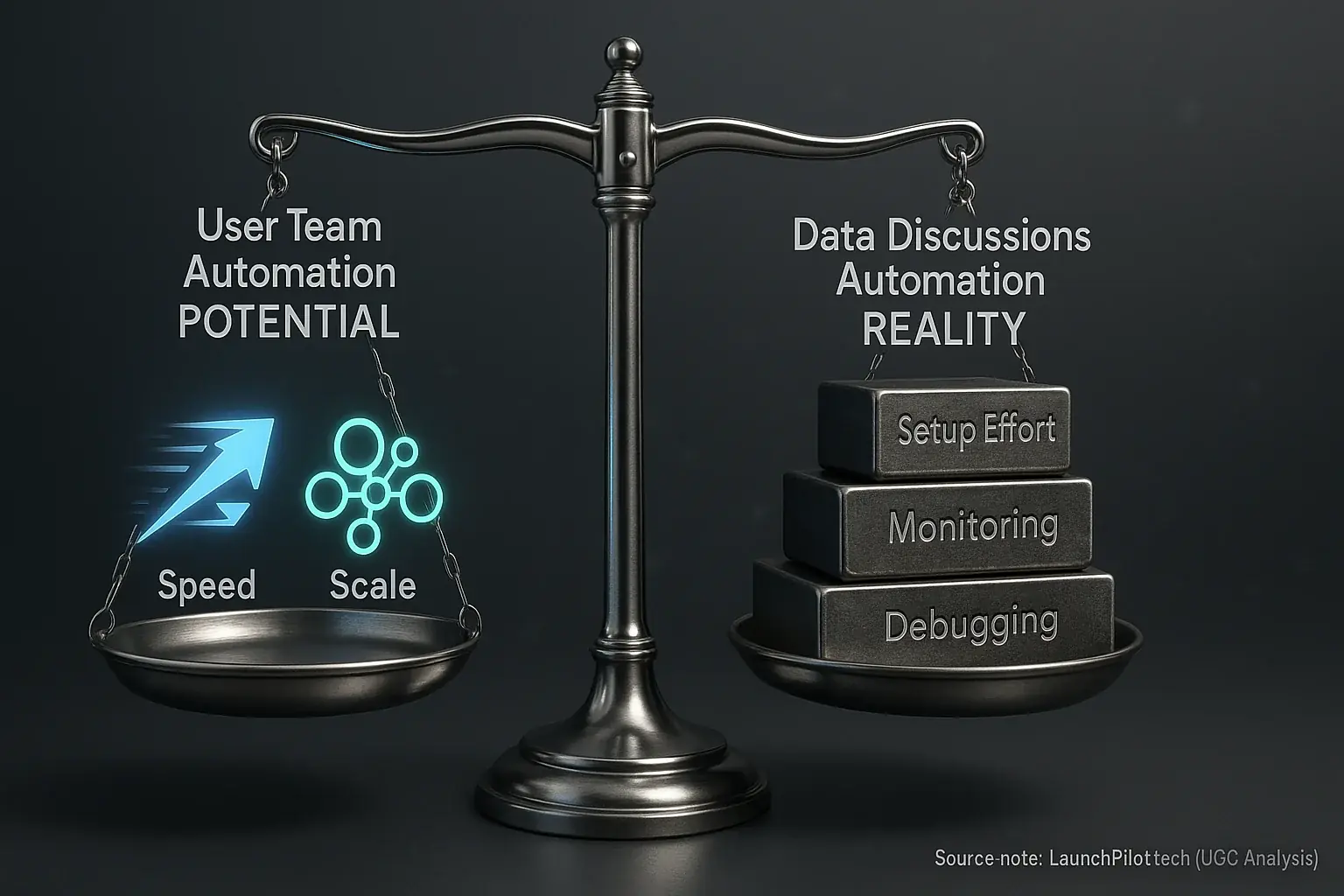

So, after digging through countless user experiences, what's the verdict? Is workflow automation a magic bullet or a hidden trap for indie makers? The truth is, it's both. It provides significant benefits when used smartly, but it can quickly become a time sink if approached with unrealistic expectations.

Reliability isn't a given; it's earned through careful setup and consistent human oversight. The 'set and forget' dream? A myth, as indie makers consistently report. True ROI on time, users find, comes from understanding automation's specific strengths and its limitations.

The ultimate takeaway from aggregated user experiences is this: workflow automation is your co-pilot, not your clone. It amplifies your efforts, but also dangerously amplifies mistakes if your initial strategy is flawed. The most successful indies, collective wisdom suggests, leverage this automation to free their creative and strategic time, not simply to avoid all mundane tasks. They remain firmly in the pilot's seat.