The Uncharted Legal Skies: Why Indie Makers Can't Ignore AI's Legal Landscape

An indie maker uses a new AI co-pilot. The team loves the generated launch plan. Then a legal question surfaces about data usage. These AI co-pilots introduce a complex legal frontier. Our analysis of user-generated content reveals that many indies feel unprepared for the risks these new tools carry. These hidden pitfalls can quickly become serious issues.

Indie makers cannot simply ignore these legal aspects. Limited resources often magnify the impact of legal mistakes. Many user reports highlight unexpected demands from platform terms or content ownership disputes. Ignorance here provides no defense. Costly problems frequently blindside unprepared teams.

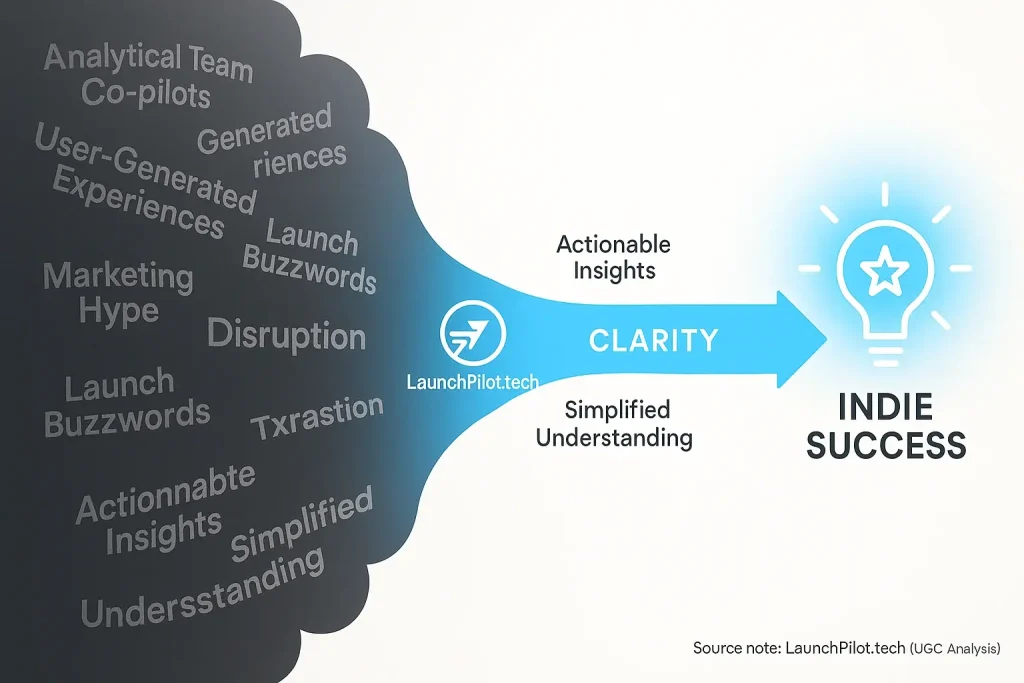

This guide serves as your initial map through these legal complexities. We explore common anxieties about co-pilot usage, drawn from extensive community discussions. Proactive understanding helps your team use these tools safely. LaunchPilot.tech distills collective indie experiences into clear, actionable insights. Navigate these uncharted legal skies with greater awareness.

Who Owns That AI-Generated Launch Copy? (Copyright Conundrums & Indie Maker Concerns from UGC)

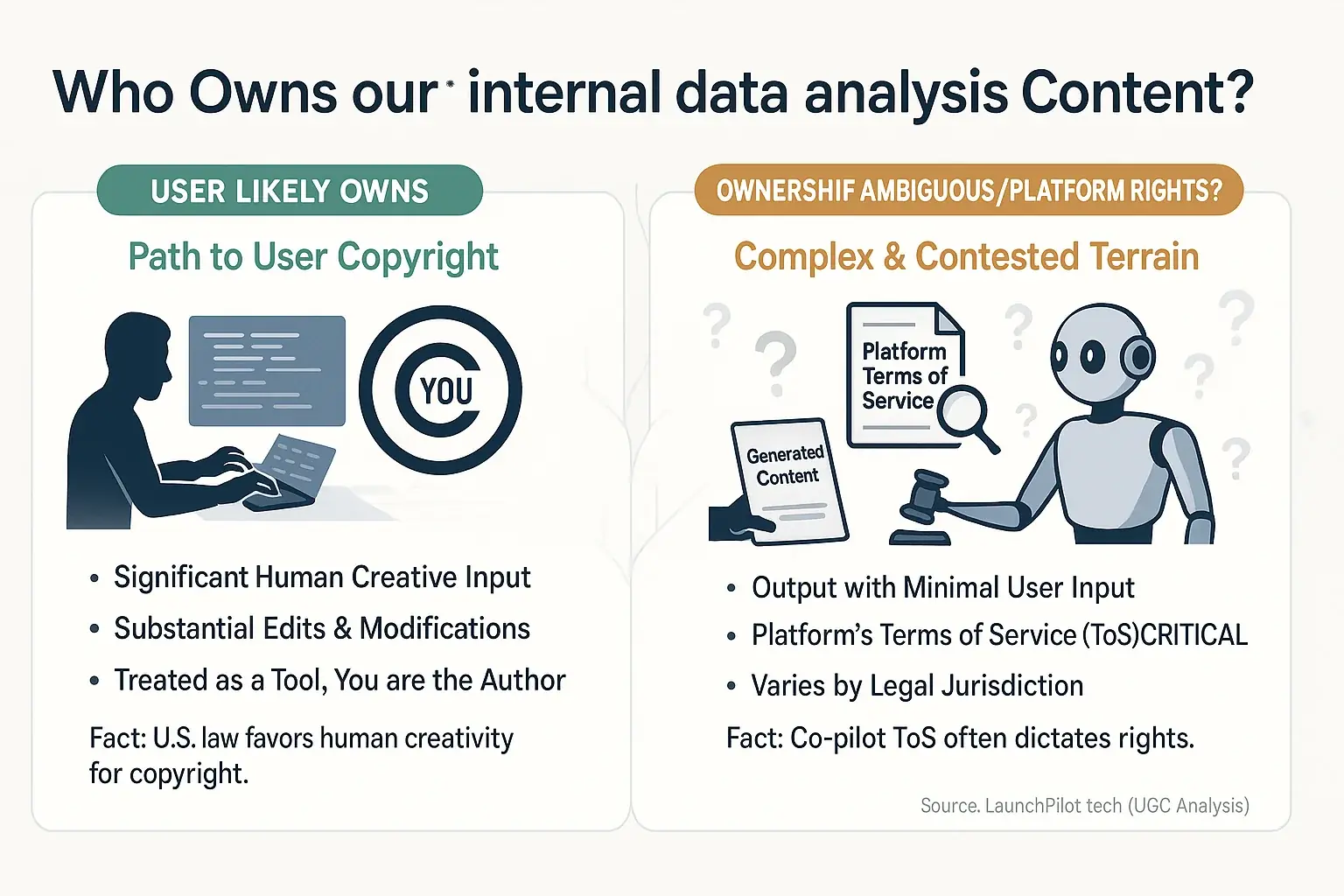

Indie makers frequently ask a critical question: "If my AI content co-pilot writes my landing page, do I actually own it?" Our analysis of user discussions reveals the answer is rarely simple. Ownership often hinges on your legal jurisdiction. The specific co-pilot's Terms of Service also dictate rights.

A crucial insight from the indie launch community concerns authorship. Current legal interpretations, particularly in the U.S., generally require human authorship for copyright protection. Your AI content co-pilot? It is often viewed as a sophisticated tool, not an independent author. Many indie creators mistakenly assume automatic ownership of generated text. Later, they discover that a platform's fine print or a regional legal stance presents a different reality. This is a common pitfall reported by users.

Using AI-generated content introduces potential plagiarism risks. What if the generated text too closely echoes existing copyrighted material? This is a significant concern frequently voiced in indie maker forums. Our investigation into community feedback suggests practical mitigation strategies. Edit all co-pilot output substantially. Treat generated material as a very raw first draft. Employ originality-checking tools diligently. Always.

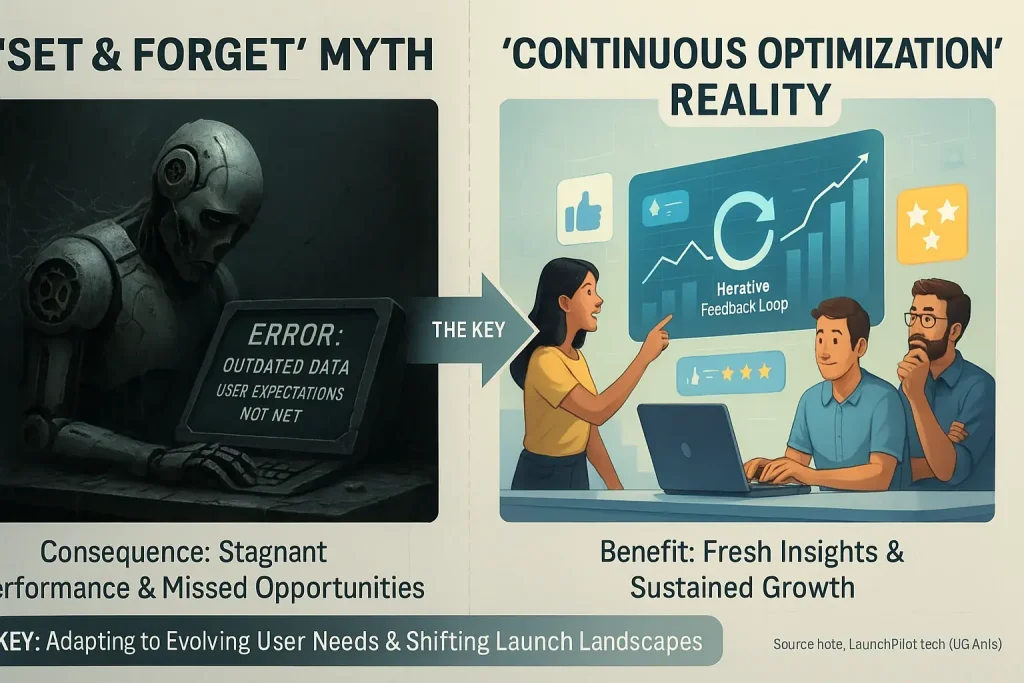

The legal landscape for AI-generated content is evolving rapidly. Indie makers must stay vigilant. Regularly review your AI content co-pilot's Terms of Service. These documents change. Collective wisdom from the indie launch community often highlights confusing or problematic clauses within these agreements before they become major issues.

Your Launch Data & AI Co-pilots: Who's Watching? (Data Privacy Concerns & Indie Maker Anxieties from UGC)

When you feed your secret-sauce product details into an AI co-pilot, where does that data truly go? This question echoes through indie maker forums. Your sensitive launch information, even customer data, enters a complex system. Understanding its journey is vital. Protecting it is paramount.

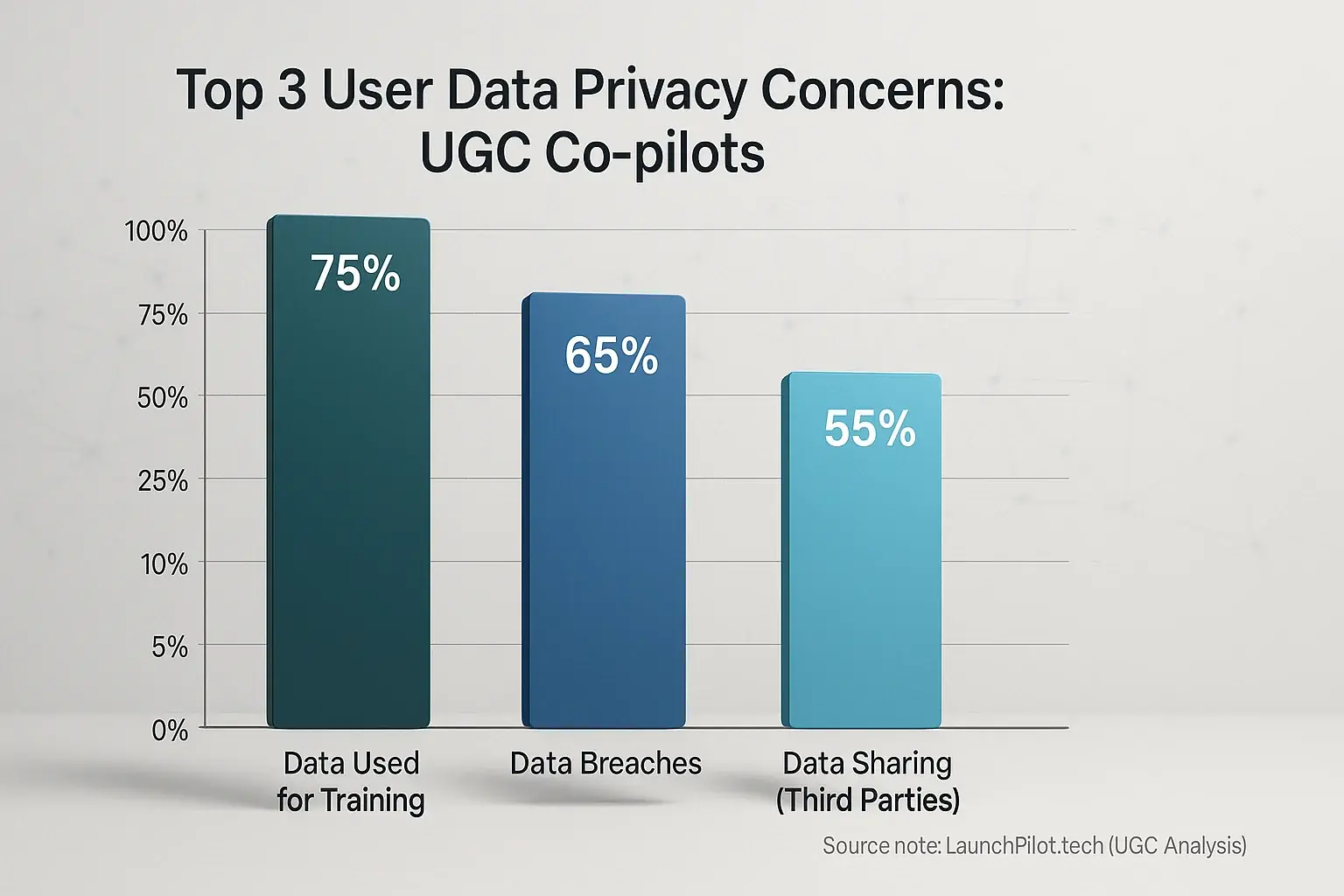

Our deep dive into community discussions reveals widespread anxieties. Many indie makers voice concerns about their proprietary product ideas. They worry customer lists might become part of a larger AI model training dataset. Worse, some fear potential exposure through breaches. The thought of private data fueling competitor insights is a common thread in user-generated content. This fear of data misuse is palpable.

So, how can you protect your data? Indie makers consistently advise reading privacy policies thoroughly. It sounds basic. Yet it is often overlooked. Consider using anonymized or generalized data for AI inputs whenever feasible. Choosing reputable AI tools with clear data handling practices is critical. A surprisingly practical point highlighted by creators? Even for AI platforms, strong, unique passwords remain a simple yet powerful defense. These small steps build resilience.
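As one illustration of the "anonymized or generalized data" advice, here is a minimal Python sketch that scrubs a prompt before it is pasted into a co-pilot. The function name `anonymize_prompt`, the placeholder format, and the simple email pattern are all assumptions made for this example. It is not a complete privacy solution, and it will not catch every kind of sensitive detail.

```python
import re

# Simple, illustrative email pattern (an assumption; real addresses can be more varied).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def anonymize_prompt(text, private_terms):
    """Replace email addresses and caller-supplied private terms with placeholders.

    `private_terms` is a hypothetical list you maintain yourself, e.g. product
    codenames or customer names you do not want to share with a third-party tool.
    """
    redacted = EMAIL_RE.sub("[EMAIL]", text)
    for index, term in enumerate(private_terms, start=1):
        # re.escape treats the term as literal text; matching ignores case.
        redacted = re.sub(re.escape(term), f"[TERM_{index}]", redacted, flags=re.IGNORECASE)
    return redacted


if __name__ == "__main__":
    prompt = "Email jane@example.com about Project Falcon pricing"
    print(anonymize_prompt(prompt, ["Project Falcon"]))
```

You would keep the mapping from placeholders back to real names on your own machine and restore them after reviewing the co-pilot's output.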

Compliance with regulations like GDPR or CCPA also needs attention, even for solo entrepreneurs. A basic understanding is key. User experiences, shared across countless discussions, frequently reveal gaps. Vendor privacy statements sometimes lack clarity. Indie makers must actively question how their data is stored, whether it is used for AI model training, and whether it is shared with third parties. Your diligence protects your creations.

When AI Goes Sideways: Liability for Errors & Bad Advice (Indie Maker Risk Assessment from UGC)

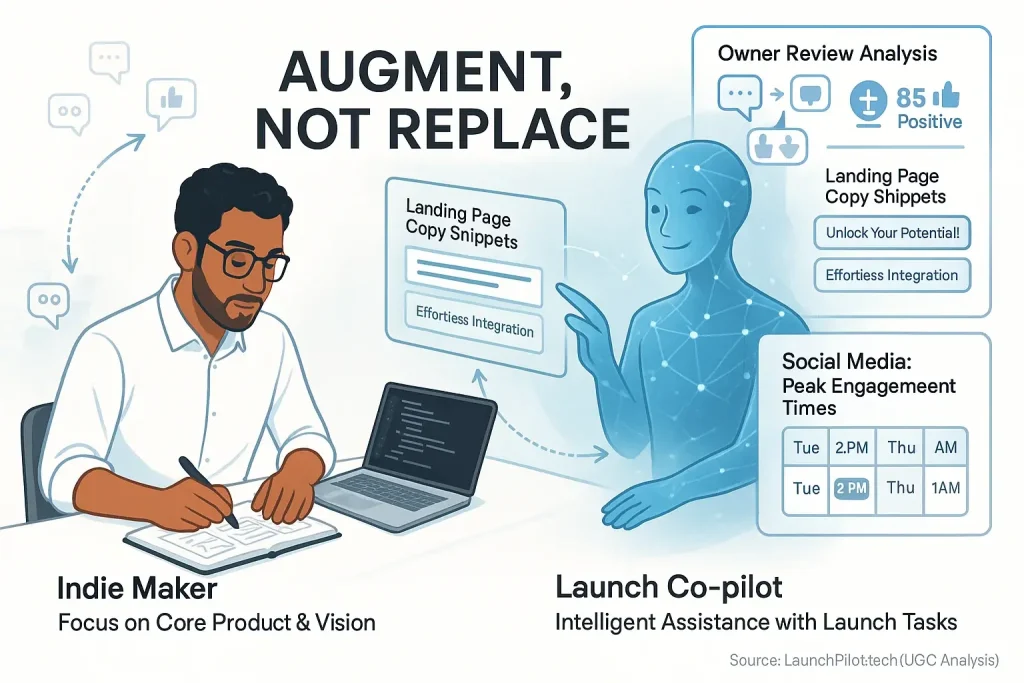

Imagine this. Your new AI co-pilot suggests a bold marketing claim. This claim, however, proves entirely false. Or its launch advice creates a public relations disaster. Who is responsible then? Our investigation into user experiences reveals a sobering truth. The indie maker, not the tool, often bears final accountability.

AI co-pilots can produce errors. Some cause major problems. User-generated content frequently highlights these "hallucinations." These system errors might create incorrect product data or misleading advice. Community reports detail outputs violating advertising standards. Indie makers share stories of trusting their co-pilot and pushing out flawed content. This reliance then led to customer complaints, or even legal threats.

So, what is your best defense? Human oversight. You must actively review all co-pilot outputs. Always cross-reference critical information from multiple sources. Establish a clear quality check process before any public release. Treat co-pilot suggestions as a starting point. Not the absolute final word. Your critical judgment remains your most essential asset.

Legal rules for AI liability are still evolving. Most co-pilot terms of service severely limit the provider's responsibility. These disclaimers often state that outputs are provided "as is," without warranty. They require users to verify all information independently. Providers typically deny liability for any resulting user damages. Our analysis of community discussions highlights this crucial point: assume full responsibility.

The Fine Print: Navigating AI Co-pilot Terms of Service (UGC Warnings About Hidden Clauses)

Nobody really reads Terms of Service. Right? It is a dense wall of legal text. But for indie makers using AI co-pilots, this common oversight is a dangerous game. Ignoring the fine print can create serious problems. Your data, your generated content, your fundamental rights: all hang in the balance.

Our deep dive into indie maker forums uncovers frequent 'gotchas'. Data ownership clauses often cause confusion. Who truly owns your input? More critically, how is your data used for the co-pilot's model training? Many users report that some AI co-pilot ToS grant providers surprisingly broad usage rights. This comes as a real shock. Indie makers frequently share stories of deep frustration. They discover clauses limiting their rights to the content they generated. Or their input data is used in unexpected ways, with some users suspecting third-party sharing.

So, what specific ToS sections demand your sharpest focus? Scrutinize data use and data storage policies meticulously. Understand content ownership terms precisely. How much actual control do you retain over your data? Liability limitations also need a very careful review. Community wisdom, synthesized from countless indie maker experiences, urges seeking clear, unambiguous language. Does the ToS address compliance with regulations like GDPR or CCPA if you operate in or serve those regions? Indie makers consistently advise avoiding tools whose terms rely on overly broad or vague clauses.

Remember this key fact. Terms of Service agreements change. Providers can update them, sometimes with little notice. Indie makers have repeatedly warned about such sudden, impactful shifts in ToS. Therefore, re-check these critical documents periodically. The vigilant indie community often spots problematic ToS updates first. Their collective alertness can be your best early warning system.
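The periodic re-check can be partly automated. Below is a minimal Python sketch, assuming you save a copy of each tool's ToS text yourself (for example by pasting it into a file). It fingerprints the text with SHA-256 and reports when the fingerprint differs from the last one recorded. The names `fingerprint`, `tos_changed`, and the `known_fingerprints` dictionary are hypothetical, chosen for this example. A changed fingerprint only tells you that something changed, not what or whether it matters, so you still need to read the update.

```python
import hashlib


def fingerprint(tos_text):
    """Return a SHA-256 hex digest of the text, ignoring whitespace-only differences."""
    normalized = " ".join(tos_text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def tos_changed(tos_text, known_fingerprints, tool_name):
    """Record the current fingerprint and report whether it differs from the last one.

    `known_fingerprints` is a plain dict mapping tool name -> last seen digest.
    The first check for a tool returns False because there is nothing to compare.
    """
    current = fingerprint(tos_text)
    previous = known_fingerprints.get(tool_name)
    known_fingerprints[tool_name] = current
    return previous is not None and previous != current


if __name__ == "__main__":
    seen = {}
    print(tos_changed("We may use inputs for support.", seen, "example-tool"))
    print(tos_changed("We may use inputs for model training.", seen, "example-tool"))
```

In practice you might persist `known_fingerprints` to a JSON file between runs and pair the check with the monthly review habit described later in this guide.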

Interactive Tool: Your AI Launch Legal Risk Quick Check (Identify Potential Pitfalls Based on Common User-Reported Errors)

Answer a few quick questions to identify potential legal pitfalls with your AI co-pilot use. This is a general guide, not legal advice!

Our Launch Legal Risk Quick Check tool helps you identify potential legal issues with AI content co-pilots. We synthesized common errors reported by indie makers using these new technologies. Consider it your rapid pre-assessment for navigating product launch compliance complexities. Early awareness here prevents significant future problems.

This check provides general awareness points. These points stem directly from widespread user-generated content and shared experiences. It is not a substitute for tailored professional legal advice. For your specific situation, a lawyer's guidance is always recommended.

Staying Ahead of the Curve: Proactive Legal Strategy for Indie Makers Using AI Co-pilots

Copyright. Data privacy. Liability. Terms of Service. These core legal areas need your keen focus. Our analysis of indie maker journeys highlights their serious impact. These issues challenge solopreneurs, not just large companies. Ignoring them invites avoidable legal headaches.

So, how can you manage these proactively? Our community's collective experience shows simple habits offer real protection. Consider an internal review checklist for co-pilot outputs. This small step helps spot compliance issues early. We've seen countless indie makers gain peace of mind from dedicating just 30 minutes monthly. Use this time. Check tool updates. Scan indie forums for new user-spotted concerns. Continuous learning is your best defense.
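An internal review checklist does not need special software. As a rough sketch, the Python snippet below encodes four checks drawn from the advice in this guide and lists whichever ones are still unmet for a given piece of co-pilot output. The item keys, their wording, and the `unmet_items` function are assumptions made for illustration. Adapt the list to your own process, and note that passing it is not a substitute for legal advice.

```python
# Hypothetical checklist items, paraphrased from the recommendations in this guide.
CHECKLIST = {
    "human_edited": "Output was substantially edited by a person",
    "claims_verified": "Critical claims were cross-referenced against other sources",
    "originality_checked": "Text was run through an originality-checking tool",
    "tos_reviewed": "The co-pilot's Terms of Service were re-checked recently",
}


def unmet_items(answers):
    """Return descriptions of checklist items not marked True in `answers`.

    `answers` maps checklist keys to booleans; missing keys count as unmet.
    """
    return [desc for key, desc in CHECKLIST.items() if not answers.get(key, False)]


if __name__ == "__main__":
    draft_review = {"human_edited": True, "claims_verified": False}
    for item in unmet_items(draft_review):
        print("TODO:", item)
```

Treating missing answers as unmet is a deliberate choice here: a check nobody recorded is a check nobody can rely on.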

LaunchPilot.tech helps you stay informed through aggregated user experiences. We distill this collective wisdom into actionable insights for your venture. Proactive legal strategy is not a burden. It is a strategic advantage. This thoughtful approach builds trust and sustainable success. Embrace informed co-pilot adoption. Build smarter.