Your Product's Next Evolution: Why AI Co-pilots Are Your Secret Weapon for Post-Launch Iteration

You launched. Congratulations. The confetti settles. Now the real product journey starts. For many indie makers, this post-launch phase is where lasting success is forged. Continuous product improvement, based on actual user experiences, is paramount. Stories shared by indie makers point to this stage again and again.

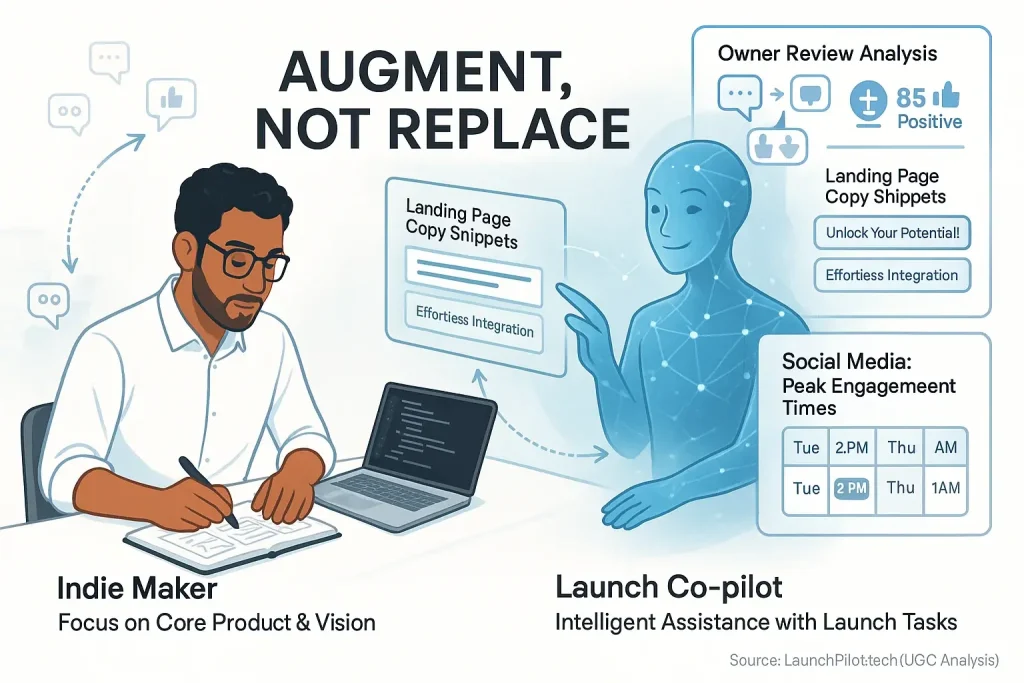

How do you manage this iteration? AI feedback co-pilots are an emerging advantage for indie makers, and they especially help resource-constrained founders. They turn an overwhelming volume of user feedback into clear, actionable development steps. Think of refining a complex recipe after guests take that first crucial taste. Community discussions suggest co-pilots make this refinement systematic and manageable.

This guide explores that advantage. We show how feedback co-pilots help you plan your product's next versions so the product evolves with genuine user needs. Smarter V2s. Better V3s. User insights ensure your product does not just launch; it thrives and adapts. Many in the indie community describe this iterative approach as a key path to sustainable, long-term growth.

Key Principles for AI-Assisted Iteration: Moving Beyond Guesswork

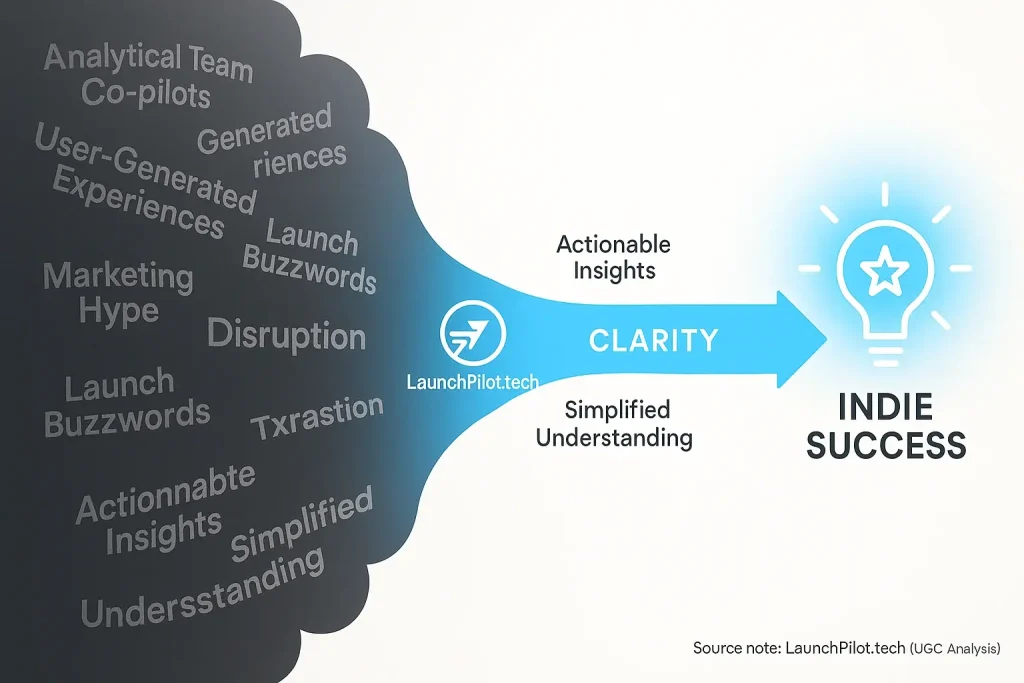

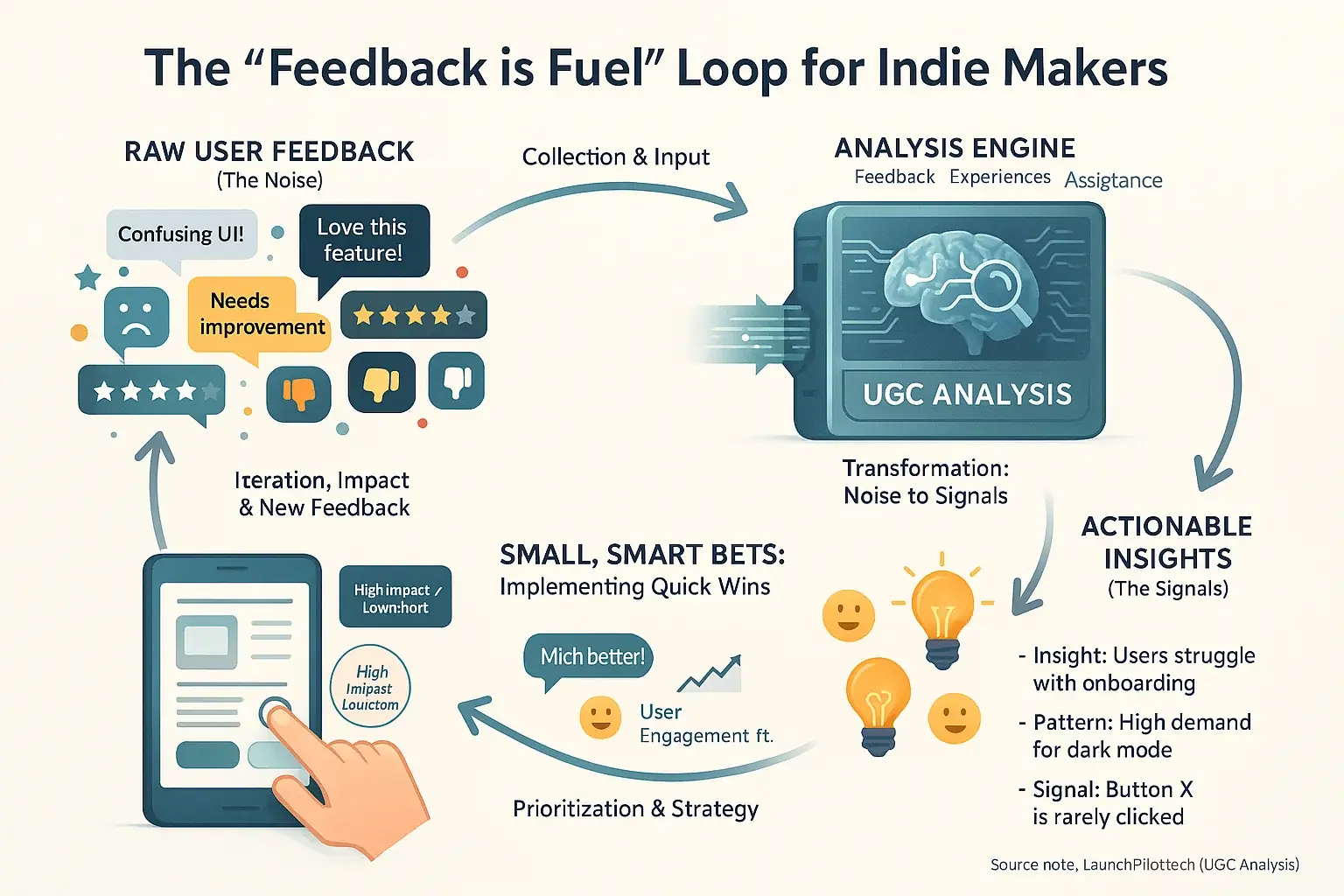

User feedback fuels product iteration. Absolutely essential. Yet, raw feedback often overwhelms indie makers. We see this constantly in community discussions. One developer, buried under negative reviews, felt paralyzed. The sheer volume was crushing. Then, a shift. Analyzing their user-generated content revealed clear patterns, not just random complaints. This analytical view transformed noise into actionable signals. It removed the emotional weight. Decisions became data-driven.

Many indie makers chase huge, complex features. A common misstep. Synthesized indie maker feedback points to a savvier approach. Focus on small, smart bets. These are the 'quick wins'. They deliver high impact with low development effort. Think of the maker who postponed a simple UI fix. They planned a massive redesign instead. Users just wanted the easy improvement. That quick win, missed for months, could have significantly boosted their engagement. Prioritizing these identified wins builds crucial momentum. It also keeps your user base satisfied.
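
One way to make the "quick win" idea concrete is to score each candidate by impact relative to effort. The sketch below is illustrative only: the `Candidate` class, the 1-to-5 scales, and the sample backlog are assumptions made up for this example, not the output of any particular co-pilot.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    impact: int  # 1 (low) to 5 (high), e.g. estimated from how often users ask for it
    effort: int  # 1 (low) to 5 (high), your own development estimate


def rank_quick_wins(candidates):
    """Sort candidates by impact-to-effort ratio, highest ratio first."""
    return sorted(candidates, key=lambda c: c.impact / c.effort, reverse=True)


# Hypothetical backlog, invented for illustration.
backlog = [
    Candidate("Full redesign", impact=4, effort=5),
    Candidate("Fix confusing button label", impact=3, effort=1),
    Candidate("Dark mode", impact=2, effort=3),
]

ranked = rank_quick_wins(backlog)
for c in ranked:
    print(f"{c.name}: {c.impact / c.effort:.2f}")
```

With these made-up scores, the small UI fix lands at the top of the list, ahead of the redesign. The ratio is a deliberately crude heuristic; the scores themselves still depend on your judgment.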

Tracking iteration success is vital. No surprise there. But what you measure? That changes everything. This insight echoes through countless user discussions. Too many indie developers fixate on superficial numbers. Downloads are a classic example. One founder celebrated ten thousand downloads. Their app's user retention, however, was abysmal. A leaky bucket. Truly relevant Key Performance Indicators tell the unvarnished truth. They validate your iteration's actual impact. Measure what reflects genuine user value. And sustainable growth.
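
To illustrate the gap between downloads and retention, here is a minimal sketch of a day-30 retention calculation. The function name, the choice of a 30-day window, and the sample user IDs are assumptions for this example; pick the window that matches how your product is actually used.

```python
def retention_rate(signup_ids, active_ids_day_30):
    """Share of signed-up users who were still active on day 30."""
    signups = set(signup_ids)
    if not signups:
        return 0.0
    # Only count active users who belong to the signup cohort.
    retained = signups & set(active_ids_day_30)
    return len(retained) / len(signups)


# Hypothetical cohort: ten signups, two of whom are still active on day 30.
# User 11 is active but was not part of this cohort, so it is ignored.
cohort = range(1, 11)
still_active = [2, 5, 11]
print(f"Day-30 retention: {retention_rate(cohort, still_active):.0%}")
```

A large download count paired with a low number here is the leaky bucket described above.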

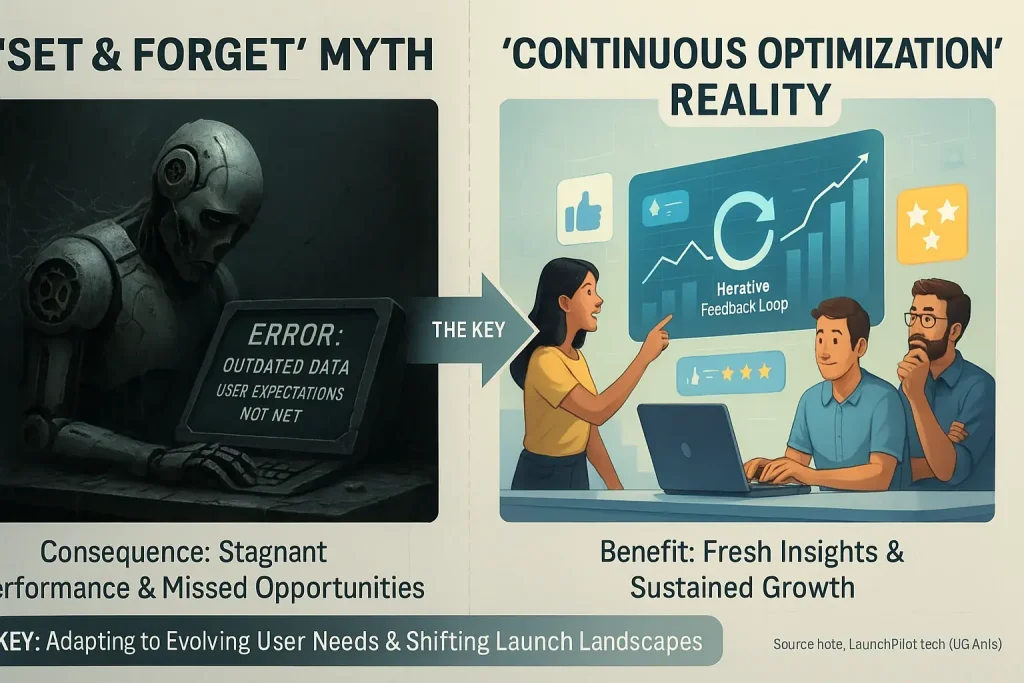

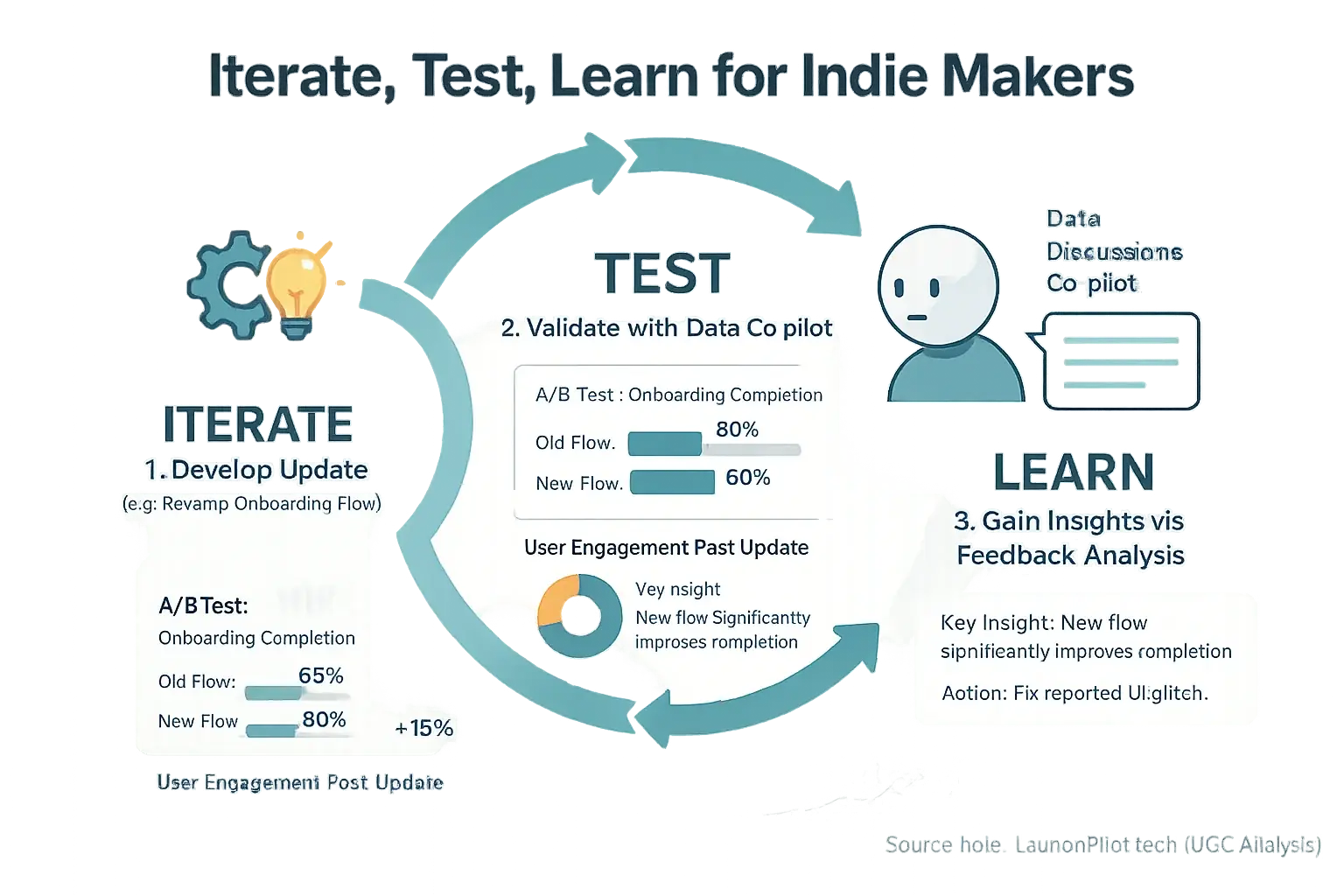

The endless quest for perfection often stalls progress. Indie makers consistently report this frustration. Our analysis of owner discussions highlights a core truth. Iterative speed trumps flawless delay. This means rapidly moving through the build-measure-learn cycle. Agile in practice. Releasing a solid, if imperfect, version generates faster learning. Real users offer invaluable, immediate data. Waiting for 'perfect' is often waiting too long. The market shifts. User needs evolve. You must keep pace.

Roadmapping Your Product's Future: How AI Co-pilots Help Build V2, V3 & Beyond

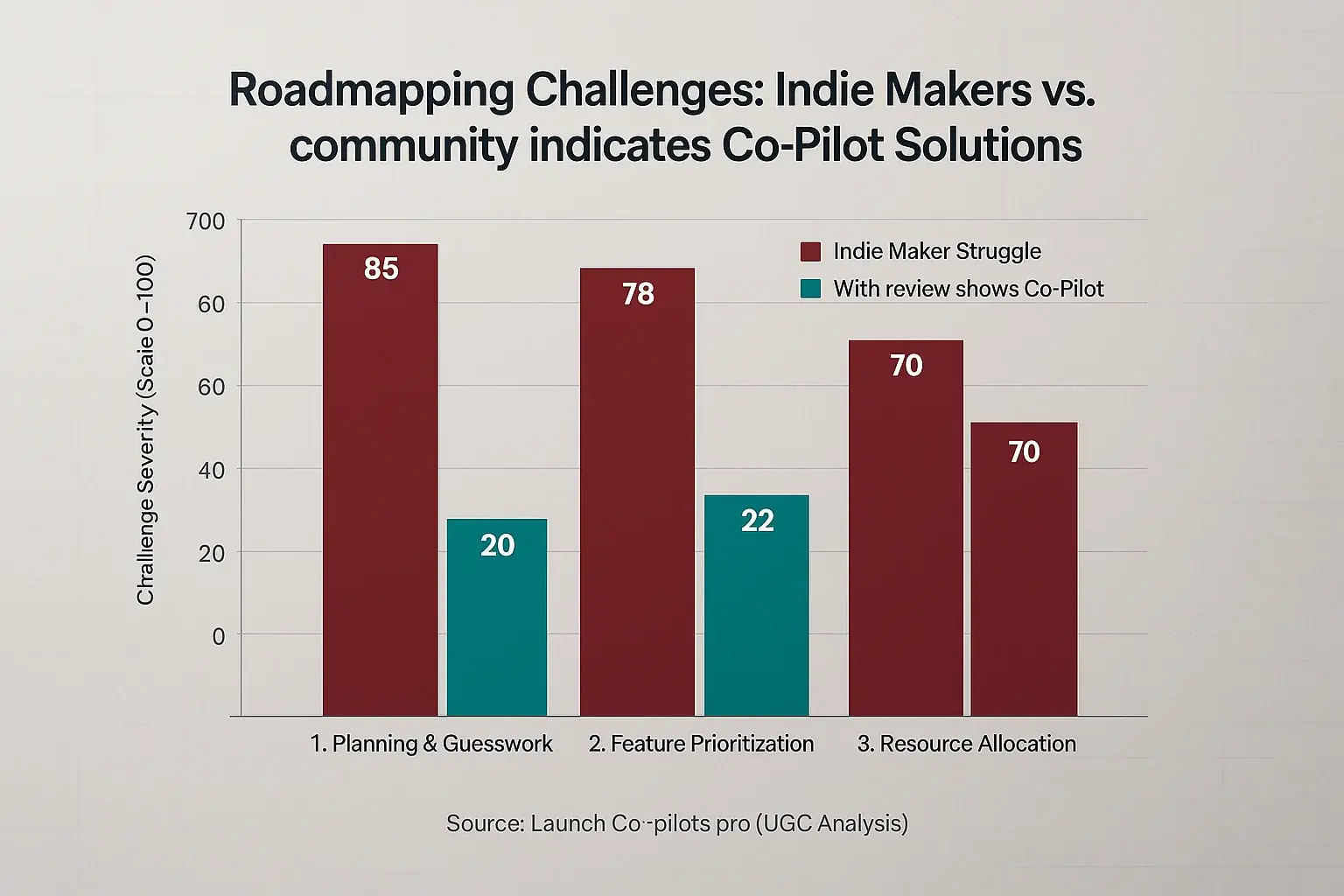

Product roadmapping defines your product's strategic evolution. It is not merely a feature checklist. This planning can feel immense for indie makers. AI co-pilots offer a strategic partnership here. They bring data-driven clarity to future versions.

These co-pilots dissect complex information, starting with synthesized user feedback. They can also analyze market trends and competitor movements, if you provide that data. Patterns across many user discussions often reveal feature clusters, and development themes for your V2 or V3 can emerge directly from this analysis. Imagine feeding user requests and available competitive insights into such a co-pilot. It then suggests prioritized themes for your next development cycle. Guesswork diminishes.

AI co-pilots also help you visualize the product's journey. Potential timelines become more concrete. Feature dependencies surface early in planning. Some tools can even help draft initial user stories for prioritized features. Crucially, the indie maker retains ultimate control. The product vision remains yours; the co-pilot provides data to inform it. Smart decisions follow.

A vital insight from synthesizing countless indie discussions is this: these tools powerfully identify what not to build. This is a game-changer. Low-impact features, often born from assumptions, get flagged by data. High-effort, low-reward items stand out before you commit resources. This focus saves indie developers precious time. Budgets stretch further. You avoid building 'vanity features' that users never truly valued.

Communicating Product Updates: AI-Drafted Announcements That Keep Your Indie Community Engaged

Product update communication builds user loyalty. Regular updates keep your community engaged. Yet many indie makers find drafting announcements a constant time drain. A feedback analysis platform can double as your communication co-pilot here, something makers in the community commonly report.

These systems draft release notes from your raw feature descriptions. They can generate initial email announcements for your waiting list. Our synthesis of user discussions reveals co-pilots also create short social media posts. No more staring at a blank page, right? Imagine needing to announce that tiny but crucial bug fix immediately. Your feedback-informed co-pilot prepares a draft in moments, keeping users promptly updated.

Community feedback analysis helps adapt messages for specific user groups. For instance, power users might get different details than brand-new subscribers. Many creators then refine these drafts for different communication channels. A human review pass is still crucial. Your personal touch adds brand personality, and a careful read catches factual errors before they ship.

Here’s a useful insight from user experience data. Analyzing past community comments uncovers what captures your audience's attention. This knowledge lets your announcements spotlight the benefits that resonate with people. Your product updates then connect with far greater impact. Users notice this.

Testing Your Iterations: How AI Co-pilots Validate Impact & Guide Your Next Move

Launching your update feels good. That success is only half the journey. Measuring an update's actual impact truly separates progress from mere motion. For indie makers, setting up robust testing often seems overwhelming. Resource limits frequently hinder proper validation. Our analysis of community discussions shows analytical tools can act as your validation co-pilot. They offer a clearer path forward.

How do these Launch Co-pilots specifically assist? Many indie creators report using their feedback analysis features to structure A/B tests. These tools help monitor key performance metrics after a new release. User behavior analysis also becomes significantly more manageable. Did that new feature make a genuine impact? Your co-pilot's feedback analysis helps you find out. It quickly shows whether users are engaging with the update or whether it is just gathering digital dust. This insight is vital for your next step.
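
If you want to sanity-check an A/B result yourself, a two-proportion z-test is one common approach. The sketch below uses only Python's standard library; the function name and the sample numbers are assumptions for illustration, and it is not a description of how any specific co-pilot evaluates tests.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of variants A and B.

    Returns (z, p_value), where p_value is two-sided.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("Both variants need at least one user")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical numbers: 40 of 1000 users converted on A, 70 of 1000 on B.
z, p = two_proportion_z_test(40, 1000, 70, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be noise, but with indie-scale traffic the samples are often too small to be conclusive, so treat the result as one input among several.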

Gathering usage data is one part of the puzzle. Interpreting that data accurately is quite another. Indie maker experiences, shared widely, indicate Launch Co-pilots help decipher complex results from iterations. They clearly highlight which changes resonated with your users. These platforms also pinpoint what adjustments fell flat, and often suggest why. This process establishes a strong data-driven feedback loop. Continuous product improvement absolutely relies on this iterative cycle.

Here is a practical truth many independent creators discover. Updates sometimes bring unintended consequences. Our deep synthesis of user-generated content reveals this pattern frequently. Analytical capabilities within Launch Co-pilots often excel at spotting these unexpected side effects. Imagine a sudden dip in activity on an established feature post-update. Or perhaps new frustrations appear in user comments about a seemingly unrelated area. These co-pilots can flag unusual user behavior patterns. This allows for swift course correction. Addressing such issues before they escalate protects your users. It also safeguards your product's hard-won reputation.
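
Flagging a sudden dip can be as simple as comparing each day against a trailing baseline. The following is a minimal sketch under stated assumptions: the function name, the 7-day window, the 2-standard-deviation threshold, and the sample counts are all invented for illustration.

```python
from statistics import mean, stdev


def flag_dips(daily_counts, window=7, threshold=2.0):
    """Return indexes of days whose count falls more than `threshold`
    standard deviations below the mean of the preceding `window` days."""
    flagged = []
    for i in range(window, len(daily_counts)):
        baseline = daily_counts[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        # Skip perfectly flat baselines, where sigma is zero.
        if sigma > 0 and daily_counts[i] < mu - threshold * sigma:
            flagged.append(i)
    return flagged


# Hypothetical daily usage of an established feature; the update ships before day 8.
usage = [100, 102, 98, 101, 99, 103, 97, 100, 60, 99]
print(flag_dips(usage))
```

Here only day 8 is flagged. A flagged day is a prompt to go read user comments from that period, not proof that the update caused the drop.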

The Iterative Indie Maker: Your AI Co-pilot as a Long-Term Growth Partner

Continuous iteration fuels sustainable indie growth. This pattern emerges clearly from countless maker stories. Your analytical co-pilot champions this ongoing process. It extends far beyond your initial launch. Think of your feedback co-pilot not as a one-time launch assistant but as a trusted co-founder. This co-founder perpetually crunches data. It delivers those crucial whispers for your product's next big leap.

The collective wisdom from indie maker forums is undeniable. These analytical co-pilots empower you, the indie maker. You can make smarter, faster, data-driven decisions. User sentiment directly shapes tangible product improvements. This becomes a powerful cycle. It is the path to evolving your creation and sustaining growth. So, are you ready to build iteratively?