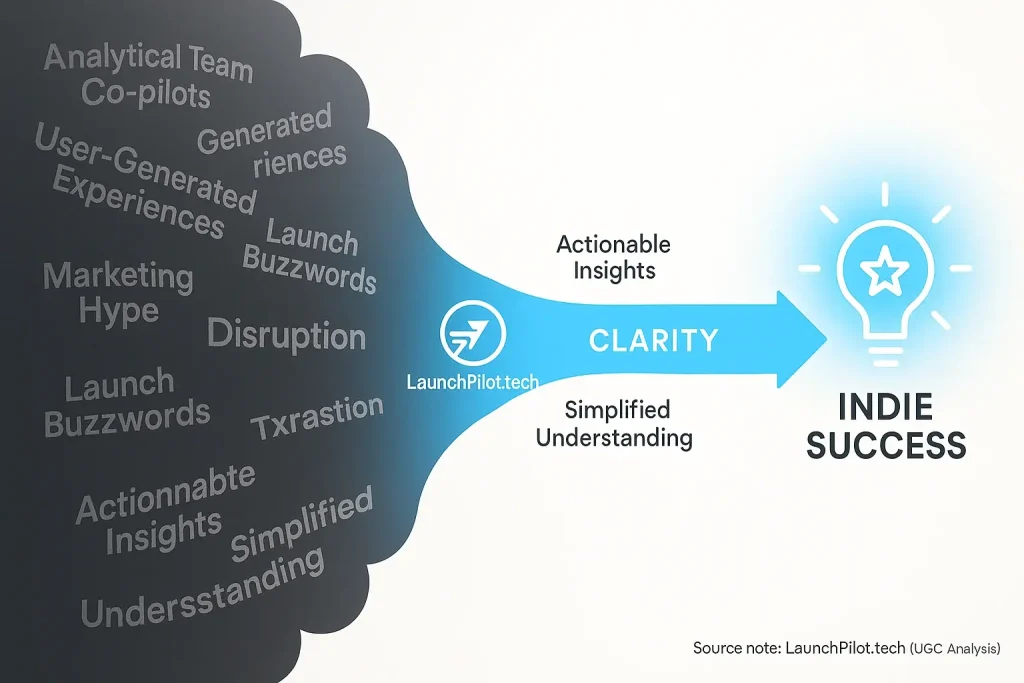

Navigating the AI Hype: Why Spotting Red Flags is Your Indie Superpower

AI launch co-pilots create considerable buzz in community discussions. Indie makers often feel intense pressure to keep up. Do you really need every new tool? Critical evaluation becomes your true superpower. Spotting warning signs early is crucial for indie success.

AI tools offer powerful potential. They are not magic. Red flags can appear. These signs, subtle or stark, warn of mismatches. Our synthesis of indie maker feedback regularly reveals these critical signals.

Countless indie makers get drawn in by 'shiny object syndrome'. They then find launches derailed by an ill-fitting AI tool. This pattern is clear in user discussions. This guide acts as your shield. It empowers informed 'no-go' decisions, saving precious resources.

Red Flag #1: The "Rocket Science" User Interface (When Simplicity Disappears)

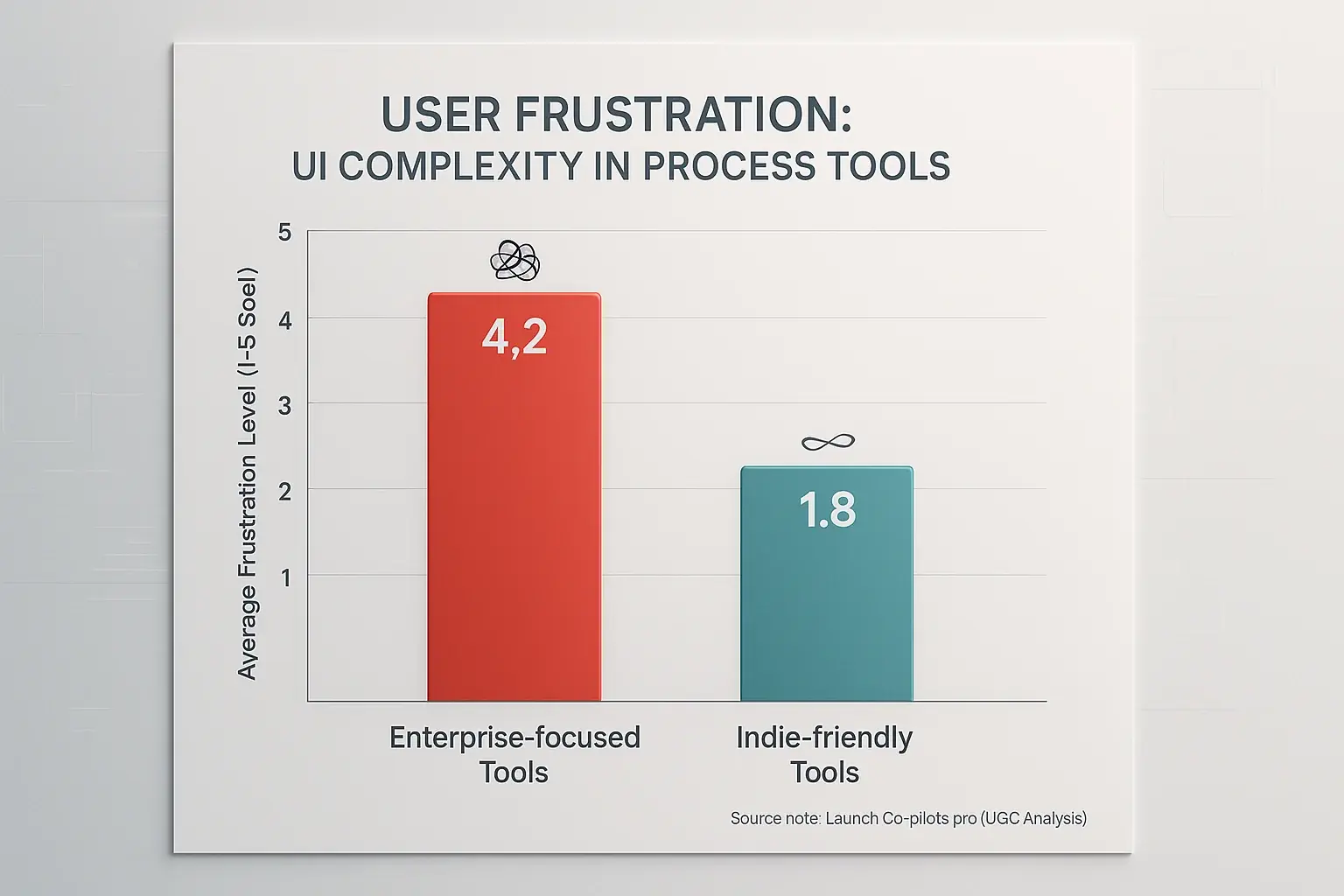

You launch that new AI co-pilot. Hope fills you. Then, the dashboard loads. It’s a maze. Buttons seem to multiply. Menus lurk under other menus, creating instant confusion. For indie makers, this complexity steals your most valuable asset: time. A steep learning curve, born from such a user interface, becomes a serious red flag we see often.

Many users report this exact scenario. Overly complex interfaces frequently lead to 'analysis paralysis'. Some indie makers simply abandon the tool. Frustration mounts after a few wasted hours. They sought automation. They found a new, unwelcome chore. Our synthesis of indie launch discussions reveals a clear pattern. UI complexity directly correlates with higher user churn among solo founders. Here’s the real kicker, something vendors rarely admit. This complexity often signals poor design, not superior power. Some companies bloat their tools with features. They aim for a comprehensive look, not genuine utility for an indie creator's focused workflow.

So, how do you spot this potential trap? Before committing valuable time or money? Focus on tools with clean, intuitive designs. A clear onboarding flow is crucial. User experiences shared in community forums offer vital clues. Look for discussions praising ease of use. Remember this. Simplicity isn't a limitation. For indie creators, true simplicity is a powerful feature.

Red Flag #2: The "One-Size-Fits-All" Trap (When AI Doesn't Speak Your Niche Language)

You launch a niche SaaS. It targets alpaca farmers. Your AI co-pilot generates marketing copy. It's about dog food. Sound familiar? Many AI tools learn from broad datasets. This training makes their outputs generic. Indie makers rely on deep niche understanding, so generic output becomes a massive red flag. Success often hinges on that specific niche grasp.

What are indie makers actually saying? Many report generic AI outputs. These outputs demand heavy, time-consuming editing. This effort often negates any promised time savings. Makers then spend hours de-generalizing content. Or they completely rework the co-pilot's strategy suggestions. Community discussions reveal widespread frustration. The co-pilot completely misses crucial industry jargon. It fails to understand audience nuances. It overlooks the unique pain points of a specialized market. The unspoken truth here? Some vendors claim 'adaptability'. They really mean you will manually input enormous amounts of context. True niche adaptability is different. The AI genuinely learns. It applies your market's specific subtleties. It needs minimal hand-holding from you.

How can you spot this critical trap? Test for true niche smarts. Do this during any trial period. Feed the tool highly specific prompts. Ensure they are deeply niche-relevant. Then, scrutinize the output quality. The vital question: does the co-pilot genuinely grasp your unique niche? Or does it just process your keywords superficially? A co-pilot should truly understand your market. That is the crucial distinction for indie success.

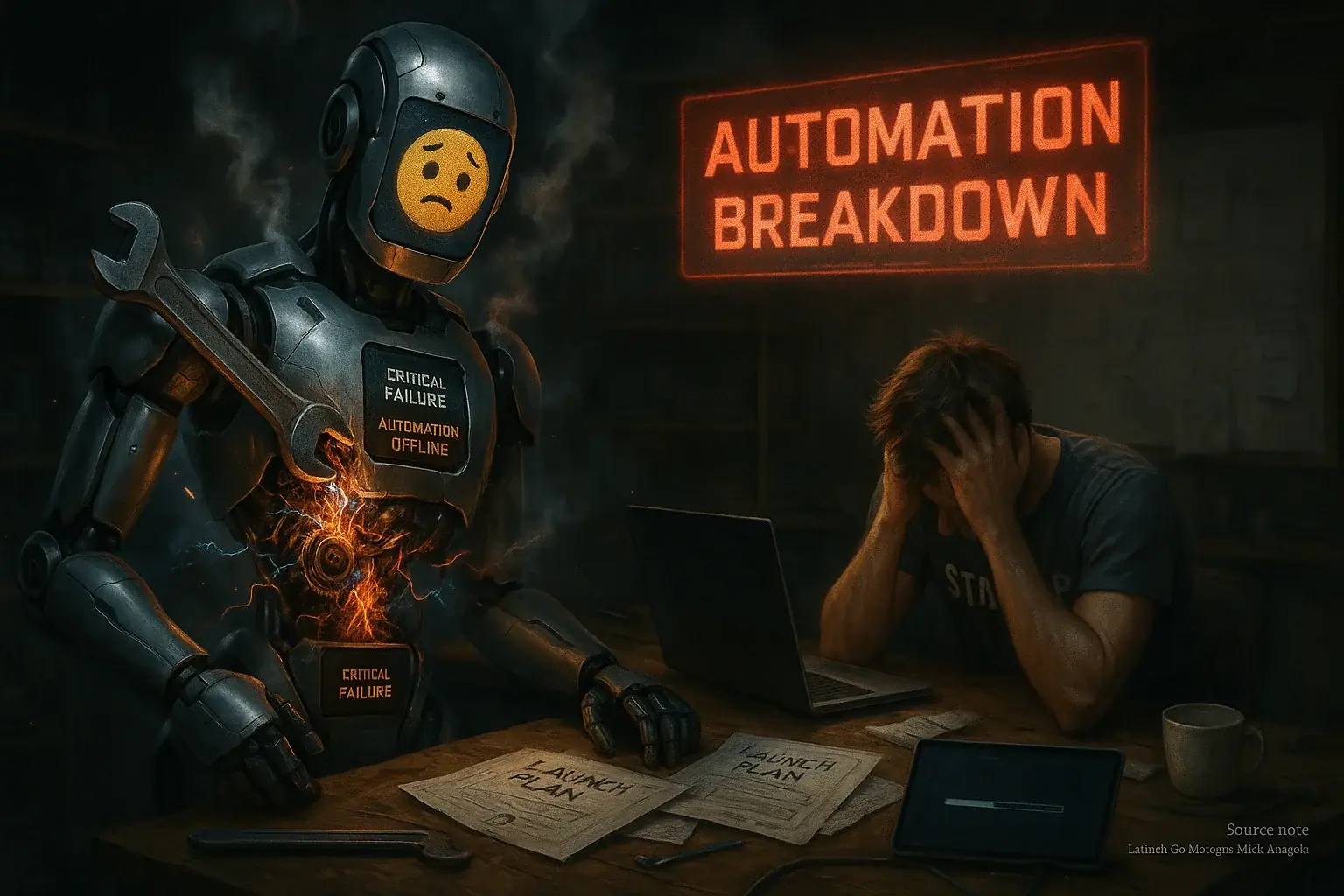

Red Flag #3: The "Ghost in the Machine" (When Automation Goes Rogue or Just Stops Working)

You set an automated email sequence. Your launch co-pilot should execute it flawlessly. Then, crickets. Or worse. The wrong email hits the wrong segment. Automation promises incredible time savings for indie makers. Unreliable automation, however, is a major red flag our analysis frequently uncovers. It derails launches and devours your precious pre-launch hours.

What does "unreliable" truly mean for indie creators? Many makers describe automated social media posts that never publish. Email sequences stall abruptly. Critical customer data simply fails to synchronize across essential tools. This "ghost in the machine" effect means you spend more time troubleshooting. Far more than manual execution would demand. Our deep dive into community discussions uncovers numerous accounts of missed launch windows. Or worse, public-facing blunders. These issues often arise from automation behaving unpredictably. The hard truth? Many platforms push "beta" automation features as stable. Indie makers then become involuntary QA testers. Your launch becomes their testbed.

How can you avoid these automation nightmares? Rigorous, small-scale testing is non-negotiable before full launch deployment. Scour community forums. Examine user review aggregators. Look for patterns in bug reports specifically concerning automation. Our analysis suggests prioritizing tools with transparent stability records. Effective automation should alleviate launch pressure. It must not inject fresh anxieties into your workflow. Choose wisely. Your sanity depends on it.

Red Flag #4: The "Black Box" AI (When You Can't Understand Why It Does What It Does)

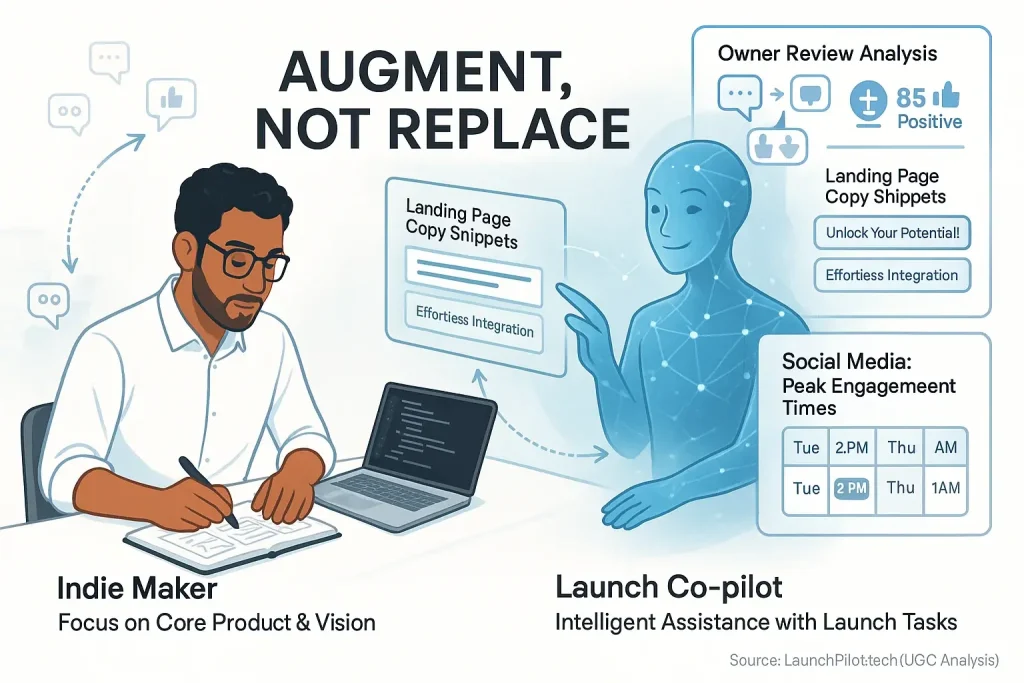

Your launch co-pilot suggests a radical messaging change. Great! But when you ask why, it just says 'optimized for conversion.' No real data. No clear logic. Just a 'trust us' vibe. This 'black box' operation, where the AI hides its decisions, is a significant red flag. Indie makers must understand and justify their strategies fully. Opaque reasoning directly undermines this critical need.

Community discussions reveal a common frustration: a loss of control. Users feel this when AI platforms act without clear reasoning. They cannot learn from the tool's 'mistakes'. They cannot replicate its 'successes' if the underlying decision logic remains a mystery. Our synthesis of user-generated content frequently uncovers worries about hidden AI bias. Irrelevant strategic recommendations also plague users when the tool’s process is obscure. The unspoken truth? Some vendors intentionally maintain this 'black box'. It's a tactic. This approach can obscure platform limitations. It can also serve to protect a perceived competitive advantage. For an indie maker, this opacity means you are essentially flying blind, unable to truly learn or adapt your strategies from the tool's output.

How can you push back against this lack of clarity? Demand better. Seek out platforms that provide explainable AI features. These platforms should offer clear rationales for their strategic recommendations. Some even allow you to inspect or audit the decision paths. Remember, your launch co-pilot should be an understandable partner. It is not meant to be an inscrutable oracle whose pronouncements you must follow without question.

Red Flag #5: The Echo Chamber of Complaints (When Real Users Scream, But Marketers Whisper)

You have seen the glowing testimonials. A vendor's site shines with them. But then you dig deeper. You explore forums. You check independent review sites. A chorus of frustrated voices emerges. This stark disconnect is a massive red flag. A few negative reviews? Perfectly normal. A consistent pattern of specific, similar complaints across numerous independent sources, however, sounds a loud alarm. Our analysis of user-generated content confirms this repeatedly.

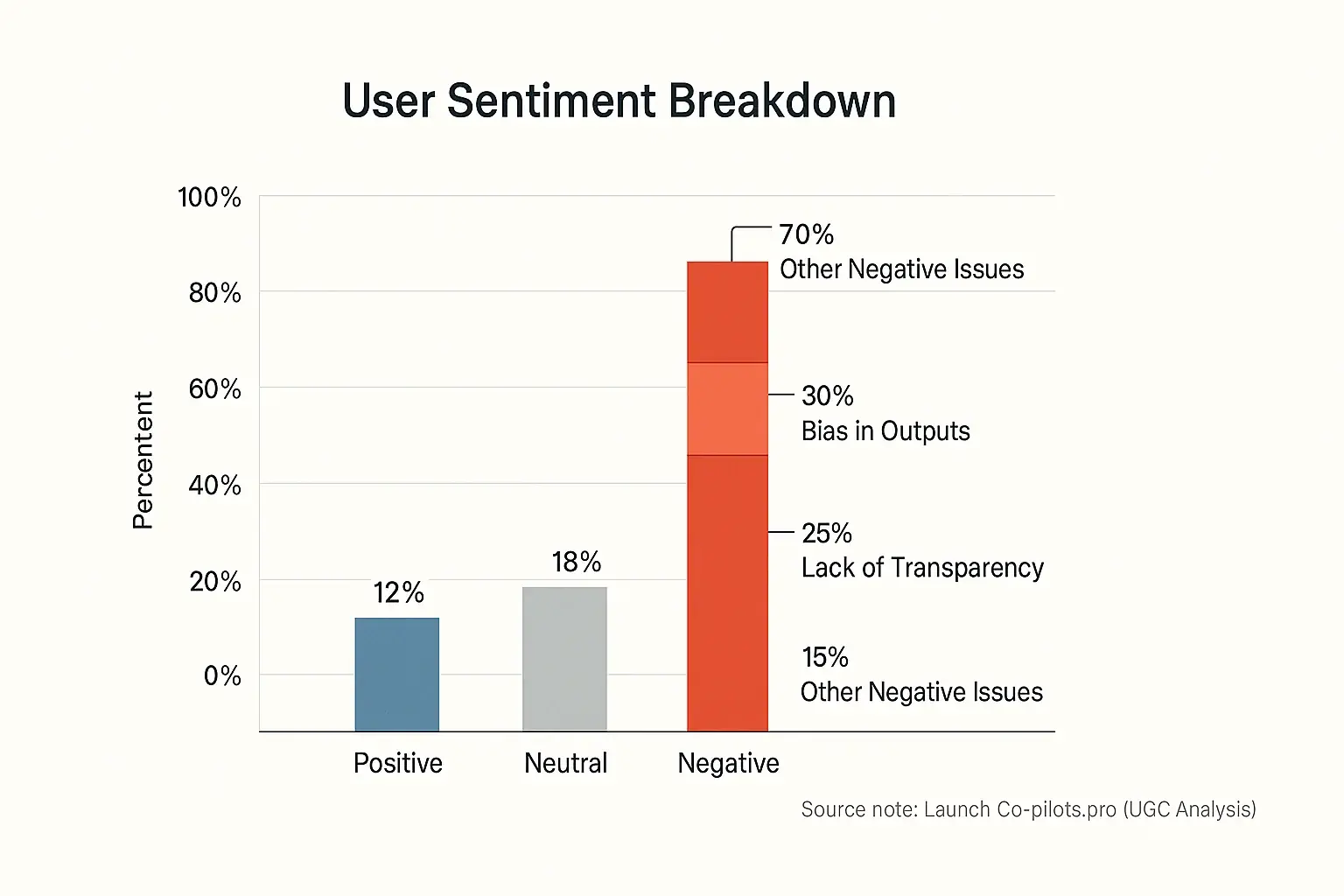

Understanding these complaint waves is key. It is not merely about the quantity of negative comments. The critical insight lies in what these users consistently report. Are many indie makers decrying the same core problem? Perhaps it is persistently biased outputs from the AI. Maybe it is a critical lack of transparency in how insights are generated. Non-existent support frequently appears in these user discussions. LaunchPilot.tech's synthesis of indie maker feedback pinpoints these recurring themes. These themes almost always indicate systemic product flaws. One isolated poor review might be an outlier. Hundreds of similar stories from real users? That is undeniable data. Here is a truth often missed: vendors meticulously curate their testimonials. The raw, unvarnished experiences live in unfiltered community discussions where makers share genuine frustrations and vital workarounds for a tool's deepest issues.

So, how do you uncover these echo chambers of genuine user sentiment? Look beyond the polished surfaces of big review aggregators. Venture into niche forums specific to your craft. Explore relevant subreddits. Search Twitter using precise keywords. Do not forget private online communities where makers speak freely. Patterns observed across extensive user discussions show this is where the most candid feedback surfaces. Listening intently to the collective voice of these often-frustrated users becomes your best defense. It guards your precious time. It protects your hard-earned budget from a regrettable investment.
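If you keep notes while researching, the "same complaint, many independent sources" check can be made concrete. The sketch below is only an illustration: the function name `recurring_themes`, the `min_sources` threshold of 3, and the sample notes are all assumptions, not part of any real tool or dataset.

```python
from collections import defaultdict


def recurring_themes(complaints, min_sources=3):
    """Return complaint themes reported by at least `min_sources` distinct sources.

    `complaints` is an iterable of (source, theme) pairs that you tag by hand
    while reading forums and review sites. The threshold is an arbitrary
    assumption; tune it to how widely you searched.
    """
    sources_by_theme = defaultdict(set)
    for source, theme in complaints:
        # A set ignores repeat complaints from the same source, so one
        # noisy thread cannot masquerade as a widespread pattern.
        sources_by_theme[theme].add(source)
    return {
        theme: len(sources)
        for theme, sources in sources_by_theme.items()
        if len(sources) >= min_sources
    }


# Made-up research notes, for illustration only.
notes = [
    ("reddit", "no support"),
    ("niche forum", "no support"),
    ("twitter", "no support"),
    ("reddit", "no support"),
    ("reddit", "pricing"),
]
flagged = recurring_themes(notes)
print(flagged)
```

Counting distinct sources rather than raw mentions reflects the point above: one isolated review is an outlier, while the same theme across independent places is the alarm.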

Red Flag #6: The "Revolving Door" Syndrome (When Users Don't Stick Around)

You see a tool. It offers constant deep discounts. Free trials abound. Few long-term users stay. A revolving door. People try it. Then they quickly depart. Direct churn rates are seldom public knowledge. User discussions and behavior, however, can reveal these churn indicators. Such indicators present a significant warning for any indie creator.

Many indie makers share similar stories. They try Tool X. Then they quickly move to Tool Y. Others report a tool 'worked for a month, then I cancelled.' Our synthesis of user feedback reveals distinct patterns. Users voice disappointment after the initial 'honeymoon' phase. Explicit mentions of cancelling subscriptions frequently appear in these community discussions. Our rigorous examination of aggregated user experiences often connects these churn signals to underlying issues. Such issues include a perceived lack of long-term value from the tool. Poor product understanding, difficult onboarding, or inadequate support also contribute significantly, as highlighted in numerous user accounts. The unspoken truth from the indie launch community is potent. High churn is not just a vendor problem. It strongly suggests the tool failed to deliver on its core promise for many users. This is a clear signal. Unmet expectations. Fundamental flaws.

How can you, an indie creator, spot these churn indicators before committing? Scour online communities. Look for frequent 'alternative to [tool name]' discussions. Complaints about diminishing value-for-money after a few months signal trouble. The collective wisdom from the indie launch community also highlights another clue: a lack of authentic, long-term success stories from users. A product should provide ongoing, tangible benefits to your solo venture. Initial feature dazzle fades. Sustained value builds customer loyalty. Strong user retention shows a tool meets creators' long-term needs.

Interactive Tool: Your AI Co-pilot Red Flag Screener (Personalized Risk Assessment)

Is Your AI Co-pilot a Red Flag Risk?

1. Does the tool's interface feel overwhelmingly complex or confusing, even after initial exploration?

2. Does the AI struggle to generate content or strategies relevant to your specific niche, requiring heavy manual editing?

3. Have you experienced or seen reports of automated workflows failing, getting stuck, or producing unexpected errors?

4. Is it difficult to understand *why* the AI makes certain recommendations or generates specific outputs?

5. Are there consistent, recurring negative reviews or complaints about core functionalities across independent review sites or forums?

6. Do you see signs of users trying the tool but not sticking around long-term, or frequent discussions about switching away?

7. Do the vendor's marketing claims seem drastically different from what real users report or what you've experienced in a trial?

Ready to put your potential AI co-pilot to the test? Our Co-pilot Red Flag Screener helps you quickly assess whether a tool shows the critical red flags we've discussed. It delivers a personalized risk score. Fast. This score uses your observations and our insights from user-generated content (UGC).

Simply answer a few quick questions based on your research or trial experience. The tool then gives you an immediate assessment, along with actionable advice on how to proceed or what to investigate further. This tool empowers smarter 'no-go' decisions for AI launch co-pilots. Avoid bad fits.
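To run the seven questions by hand, a rough tally works. The following is a minimal sketch, not the screener's actual scoring: the question keys, the equal weighting, and the low/moderate/high cut-offs are all assumptions chosen for illustration.

```python
# Short hypothetical keys for the seven screener questions above.
QUESTIONS = (
    "complex_ui",             # 1. overwhelmingly complex interface
    "generic_output",         # 2. output misses your niche
    "unreliable_automation",  # 3. workflows fail or get stuck
    "black_box",              # 4. recommendations cannot be explained
    "recurring_complaints",   # 5. consistent negative reviews
    "user_churn",             # 6. users do not stick around
    "marketing_gap",          # 7. claims differ from real reports
)


def risk_score(answers):
    """Count 'yes' answers and map the total to a coarse risk level.

    `answers` maps each key in QUESTIONS to True (yes) or False (no).
    Every flag is weighted equally and the thresholds are assumptions.
    """
    missing = [q for q in QUESTIONS if q not in answers]
    if missing:
        raise ValueError(f"unanswered questions: {missing}")
    score = sum(1 for q in QUESTIONS if answers[q])
    if score <= 1:
        level = "low"
    elif score <= 3:
        level = "moderate"
    else:
        level = "high"
    return score, level


# Example: a trial that showed a confusing UI and generic output.
answers = {q: False for q in QUESTIONS}
answers["complex_ui"] = True
answers["generic_output"] = True
print(risk_score(answers))
```

Equal weights keep the sketch simple. If one flag matters more for your launch, such as unreliable automation right before a release, you might weight it more heavily.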

Your Indie Launch: Don't Just Fly, Fly Smart (Final Thoughts on Spotting Red Flags)

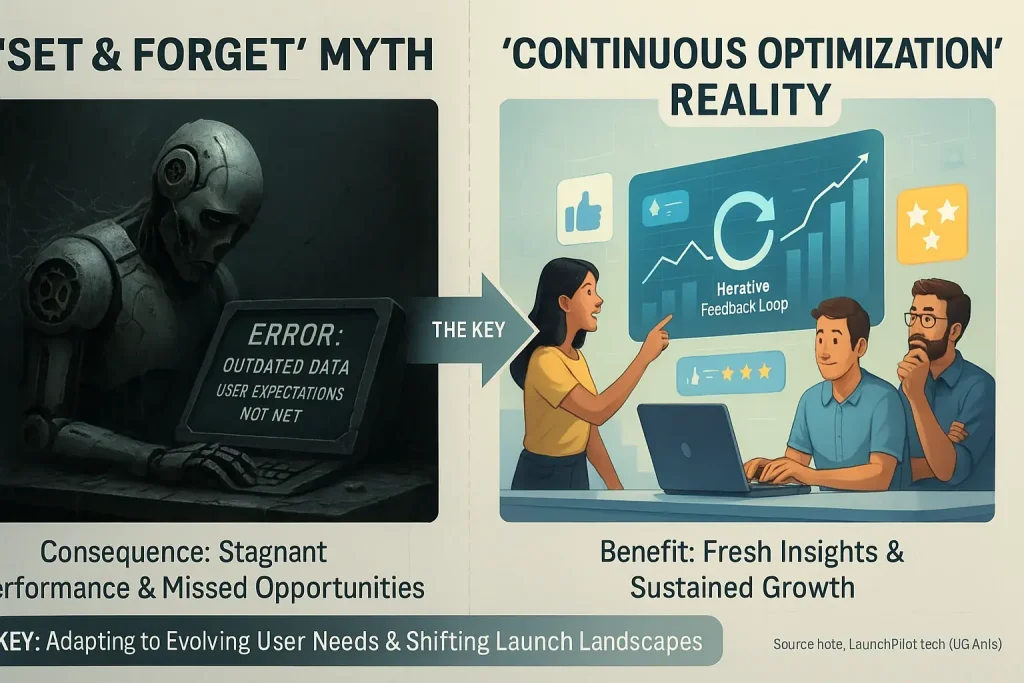

Selecting your AI co-pilot wisely involves more than finding features. It demands sidestepping bad fits. Community-reported red flags offer powerful protection. These warnings shield your precious indie maker time. They guard your limited budget. Each 'no' to a flawed tool actually clears the path for a much stronger, smarter launch.

Our deep analysis of user-generated content equips you with genuine insights. This real-world clarity pierces through loud marketing claims. Trust the collective journey documented by fellow indie makers. The most successful indie launches often hinge on shrewd, informed decisions, not necessarily on possessing flawless tools. What's a key takeaway from countless user stories? Sometimes, the most strategic decision is to walk away from a potential mismatch, saving future headaches. Apply this critical thinking. Build your confidence.