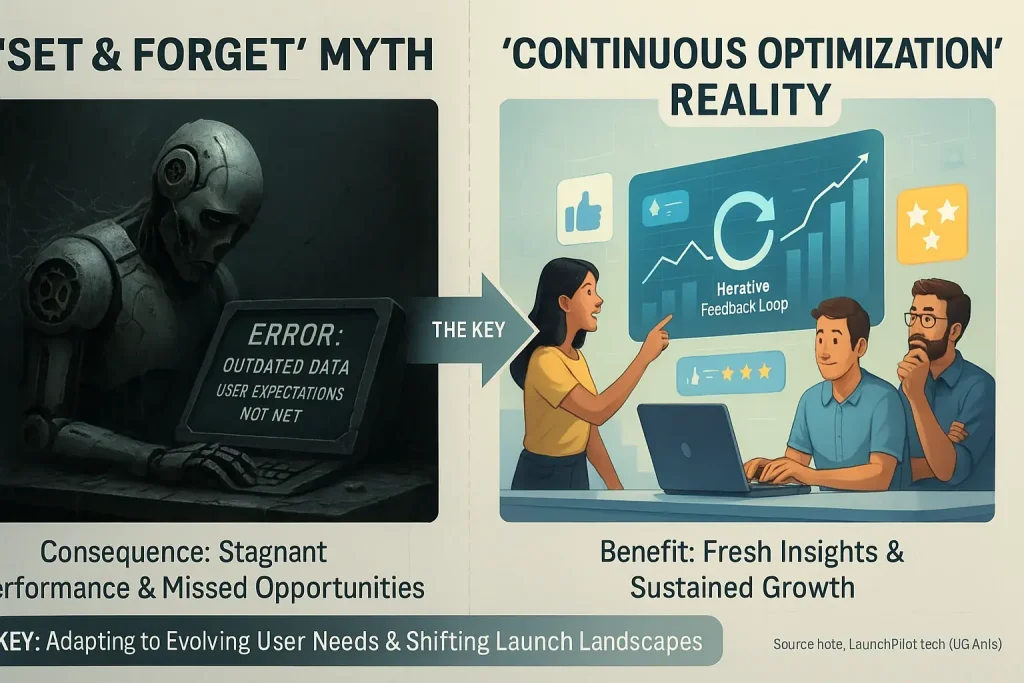

The Siren Song of "Set and Forget": Why It's So Tempting (And So Dangerous) for Indie Makers

Imagine pressing a button. Your launch just... happens. No fuss. No late nights. This automation dream powerfully attracts indie makers. Time is a precious, finite resource for them. Overwhelm often becomes a daily reality. The allure of hands-off systems is therefore immense.

But here is the critical insight from user experiences: that "set and forget" promise for AI Launch Co-pilots is often a marketing mirage. Community discussions reveal a starkly different operational truth. Many indie makers quickly discover these tools require constant human "babysitting." This unexpected demand for oversight is a crucial reality check.

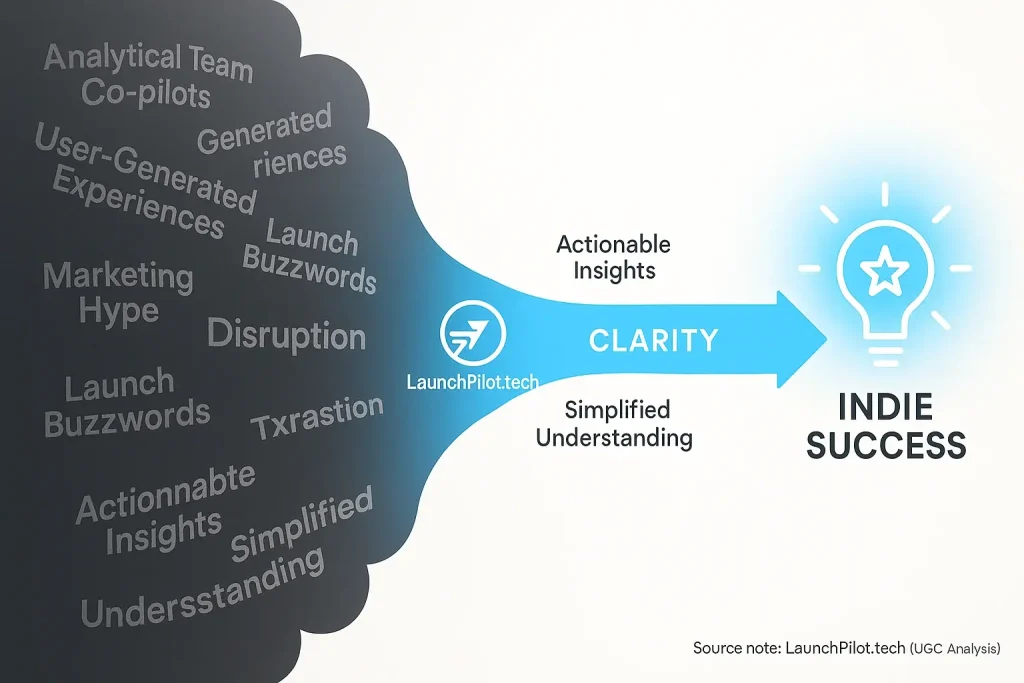

Understanding why this alluring myth persists is vital for new creators. Even more important is learning how real indie makers successfully manage their AI co-pilots despite these challenges. Their collective wisdom offers practical guidance for navigating this landscape.

The Unseen Work: Why Your AI Co-pilot Demands Constant Performance Monitoring

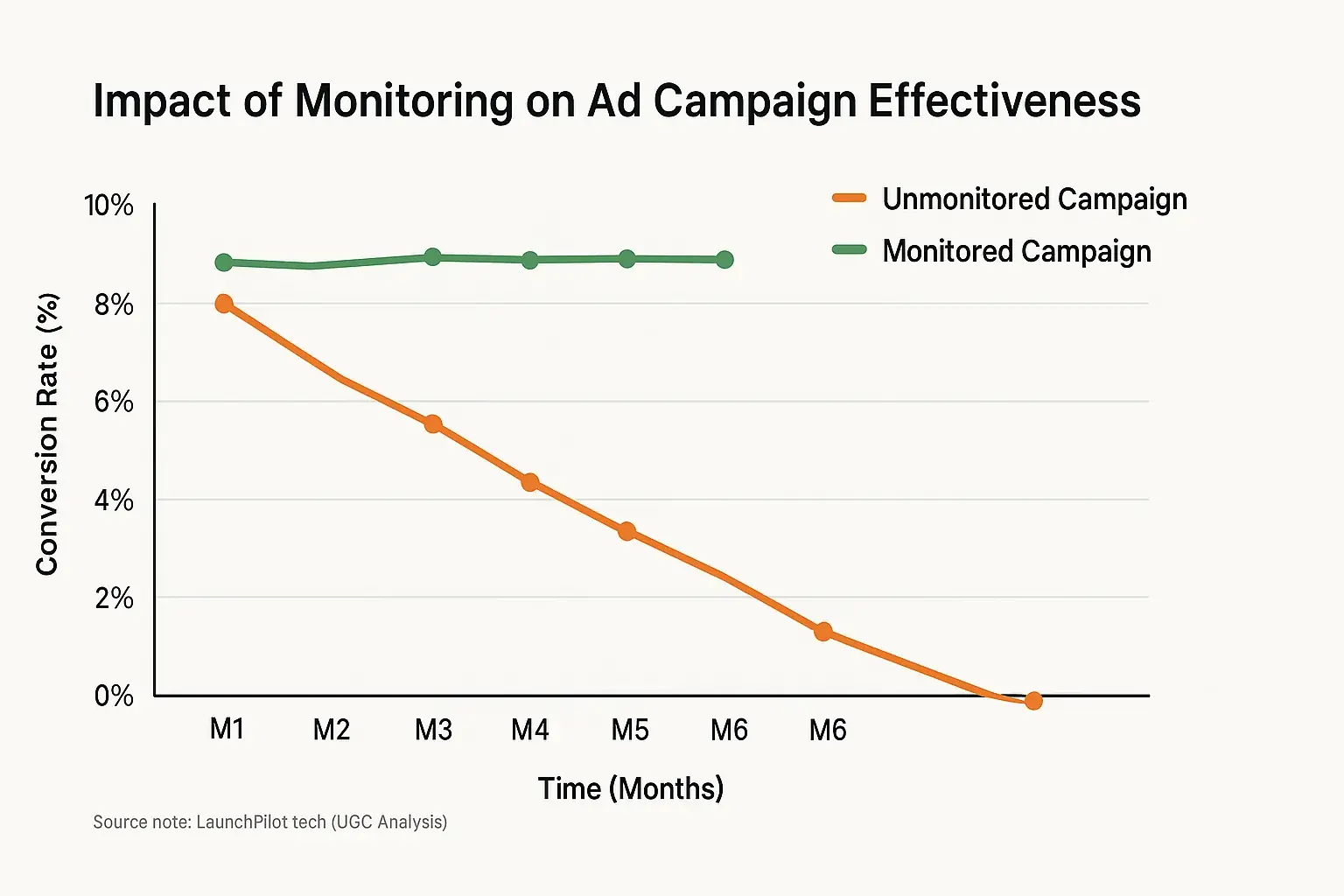

Your AI co-pilot needs consistent oversight. It is not a set-and-forget solution. Think of your platform's co-pilot as a highly intelligent, but still learning, assistant. It learns, yet patterns observed across extensive user discussions show its performance can drift over time. These tools might misinterpret data as user behavior evolves. Their effectiveness can diminish without diligent checks. This is a common trap many indie makers discover too late.

What metrics demand your vigilant attention? Our synthesis of indie maker feedback suggests: nearly everything crucial to your goals. Many users report that the quality of AI-generated content can subtly degrade if it is not regularly evaluated. Ad campaigns might start targeting the wrong audience as the model drifts. Human oversight is key to preventing this. Scrutinize your ad spend efficiency. Track audience engagement patterns closely. Are they still responding as expected?

Ignoring these performance indicators? That is like flying blind into a storm. The consequences can be severe, a lesson echoed in many user reviews. Wasted ad spend is a frequent, painful outcome reported by users. Off-brand content can emerge from unmonitored AI tools, damaging your reputation. Missed growth opportunities become all too common. Consistent monitoring is essential preventative maintenance. It saves precious resources. It protects your brand integrity.
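What might a lightweight check look like in practice? Here is a minimal Python sketch, assuming you can export daily numbers for each metric you care about. The metric names, the seven-day window, and the 20% tolerance are illustrative assumptions, not settings from any particular co-pilot.

```python
from statistics import mean

def flag_drift(history, recent_window=7, tolerance=0.20):
    """Return metrics whose recent average moved more than `tolerance`
    (as a fraction) away from their earlier baseline average.

    `history` maps a metric name to a list of daily values, oldest first.
    All names and thresholds here are hypothetical; tune them to your launch.
    """
    flagged = {}
    for name, values in history.items():
        if len(values) <= recent_window:
            continue  # not enough data to form a baseline
        baseline = mean(values[:-recent_window])
        recent = mean(values[-recent_window:])
        if baseline == 0:
            continue  # relative change is undefined for a zero baseline
        change = (recent - baseline) / abs(baseline)
        if abs(change) > tolerance:
            flagged[name] = round(change, 3)
    return flagged

# Example: cost per click jumps 50% in the last week, engagement holds steady.
history = {
    "cost_per_click": [1.0] * 14 + [1.5] * 7,
    "engagement_rate": [0.05] * 21,
}
drifting = flag_drift(history)
```

Anything that lands in `drifting` is a prompt for a human look, not an automatic fix. The point is to notice the drift early, then decide for yourself what changed.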

Adapting to the Unexpected: Why Your AI Launch Strategy Needs Human Adjustment

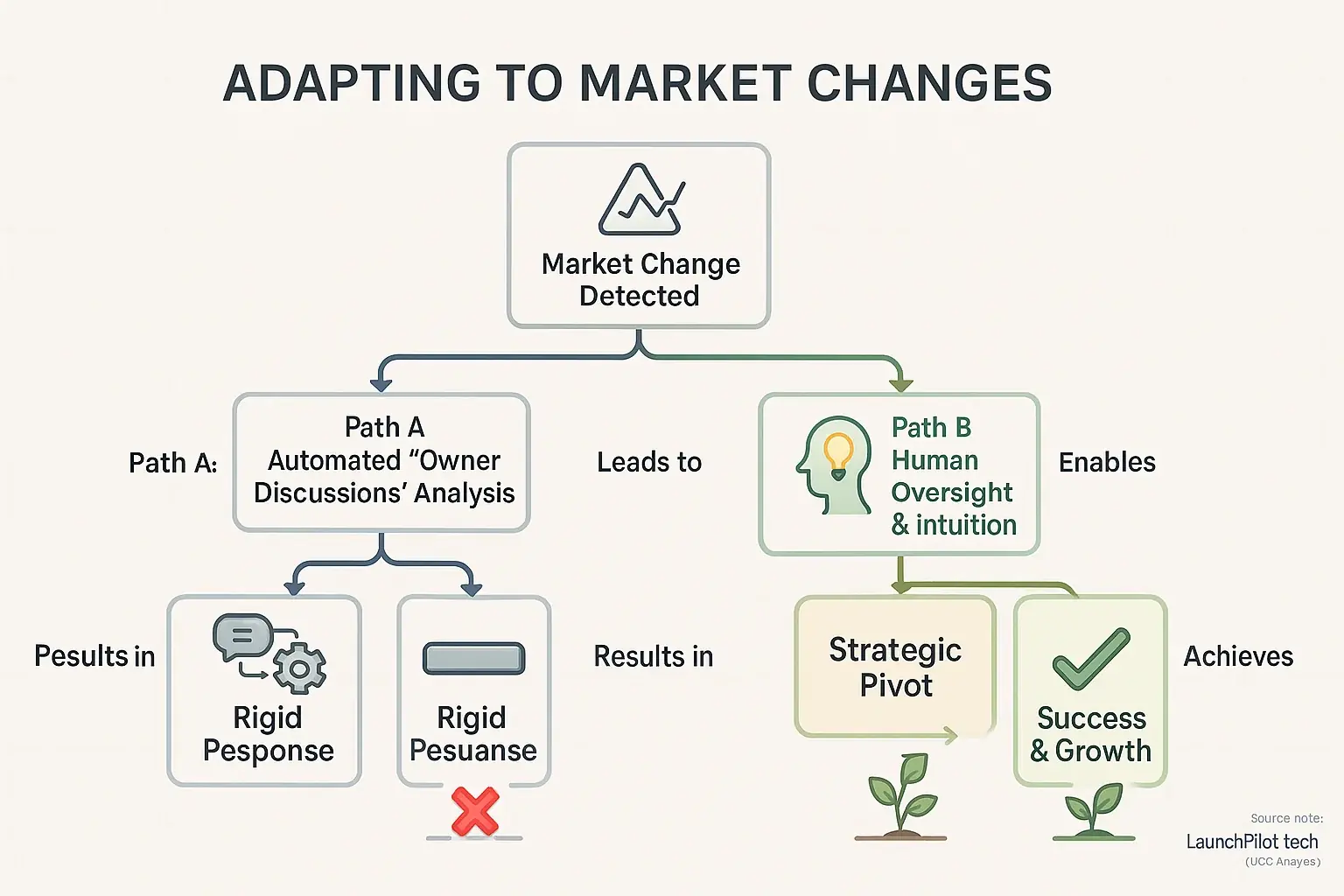

The launch landscape? It's a moving target. Your AI Launch Co-pilot offers potent data. Community-reported experiences, however, highlight a crucial lesson. Our analysis of indie maker feedback suggests automated strategies can falter when faced with truly unexpected market tremors or subtle human sentiment. They require your steady hand.

Think about sudden market shifts. Unexpected competitor moves can catch automated plans off-guard. Even nuanced user feedback, the kind rich with subtext, sometimes baffles a purely automated system. We've seen many indie accounts where an AI-driven campaign hit a wall. The market zagged. The co-pilot kept zigging with the old message. A human maker spotted the disconnect. They pivoted. Our deep dive into user-generated content confirms: your intuition is irreplaceable for these vital strategic adjustments.

So, how do you steer this powerful tech effectively? Successful indie makers consistently report the need for regular human oversight of their AI co-pilot's strategic direction. You must interpret complex feedback. You guide the content the AI generates. These makers advocate manually adjusting co-pilot suggestions when real-world outcomes deviate from digital projections. This active prompt refinement, based on live results, keeps your launch message sharp and truly adaptive.
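One simple way to decide when to step in is to compare what the co-pilot projected with what actually happened. The Python sketch below assumes you have a projected and an actual figure for the same metric, such as signups. The function name and the 25% gap are hypothetical choices for illustration.

```python
def needs_review(projected, actual, max_gap=0.25):
    """Return True when the actual result differs from the projection
    by more than `max_gap`, expressed as a fraction of the projection.

    The 25% default is an assumed starting point, not a recommended standard.
    """
    if projected <= 0:
        raise ValueError("projected must be a positive number")
    gap = abs(actual - projected) / projected
    return gap > max_gap

# Example: the co-pilot projected 200 signups for the week.
far_off = needs_review(projected=200, actual=120)   # 40% below projection
on_track = needs_review(projected=200, actual=180)  # 10% below projection
```

A `True` result does not say what to change. It only says the plan and reality have parted ways, which is the moment to revisit your prompts and messaging by hand.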

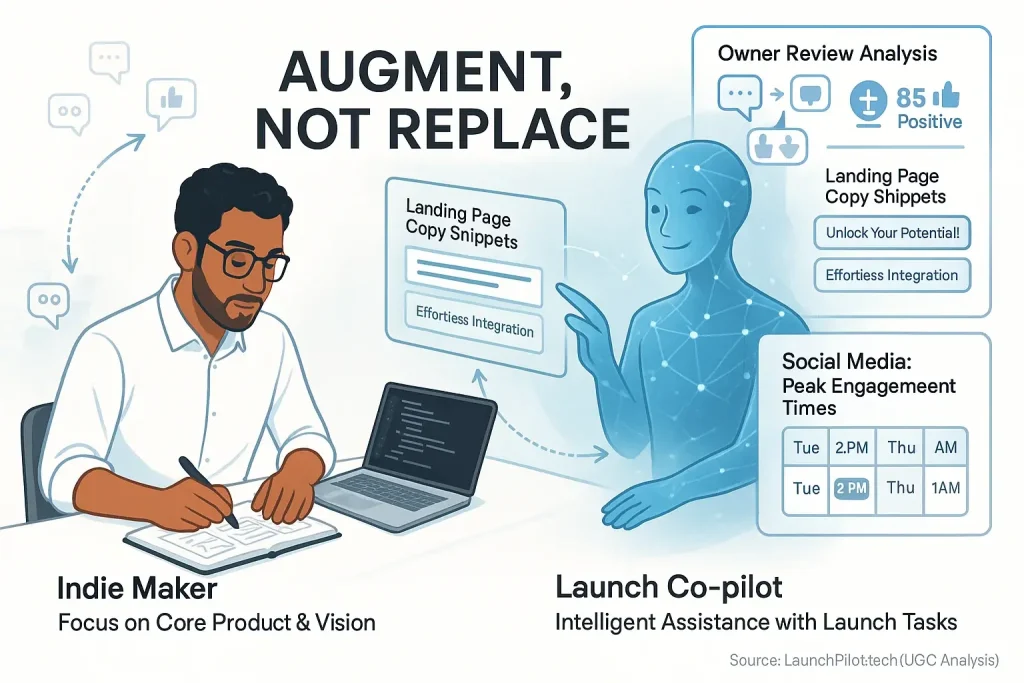

AI as a Tool, Not an Employee: Setting Realistic Expectations for Your Co-pilot

Your AI co-pilot is a powerhouse tool. It is not a new, autonomous employee. The collective wisdom from the indie launch community consistently highlights this distinction. Treating your co-pilot like a hands-off team member frequently invites disappointment. This common misunderstanding wastes precious indie resources.

What does treating your co-pilot as a tool truly mean? Tools require clear direction. They need a skilled operator. Successful indie makers, those really nailing their launches, get this. They often describe their co-pilots like high-performance drills: incredibly powerful, yet entirely dependent on human guidance for effective use. Our rigorous examination of aggregated user experiences shows these systems excel at data processing and task execution. Humans, however, drive strategy. Humans inject creativity. Humans provide essential oversight and make the final calls.

So, embrace this powerful assistant. But never abdicate the pilot's seat. The most impactful product launches, according to a wealth of indie maker feedback, combine the co-pilot's efficiency with irreplaceable human ingenuity. Your active engagement makes the difference. This partnership unlocks real launch potential.