Why Your Small Indie Team Needs a Clear AI Co-pilot Game Plan (Avoiding Chaos, Maximizing Impact)

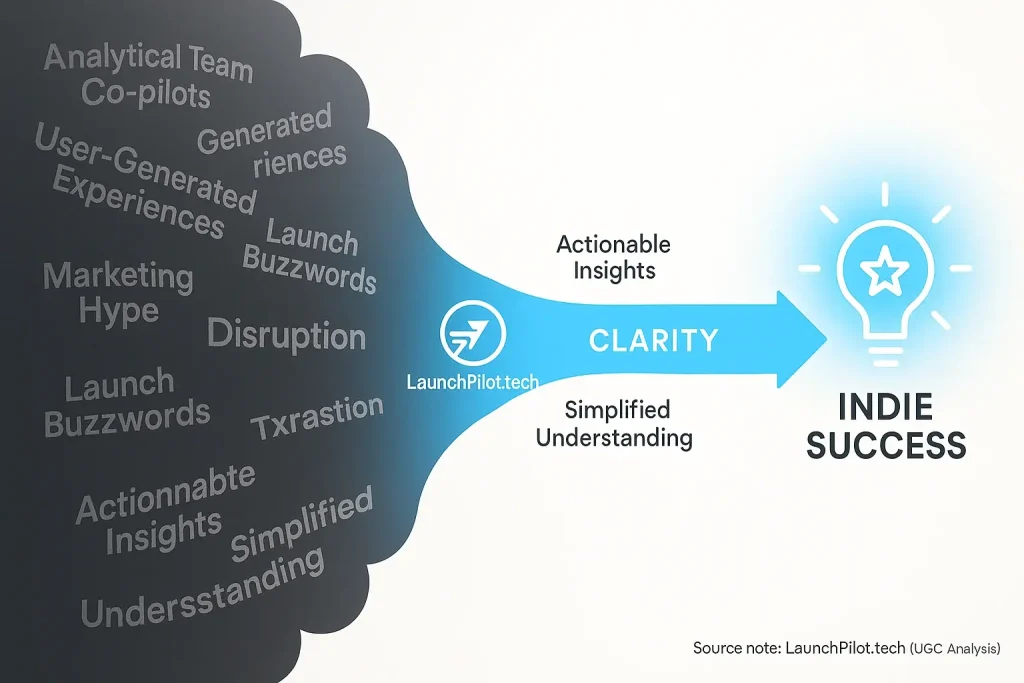

Ever introduced a new tool to your small team, only to find it created more confusion than clarity? Our synthesis of indie maker feedback reveals a telling pattern. Powerful AI co-pilots, adopted without a clear strategy, quickly breed chaos in micro-teams. It's a pattern we've seen repeatedly. Teams adopt these AI tools. But crucial role definition gets missed. Duplicated efforts then drain resources. Missed opportunities become a painful reality.

Imagine this common scenario reported by indie teams. One member uses the AI for social media copy. Another tackles email sequences with the same tool. Neither coordinates with the other. Your brand voice? It suddenly feels disjointed across channels. Worse, teams often report paying for duplicated AI usage. This lack of coordination, a frequent finding in user discussions, turns a potential AI advantage into a frustrating, resource-draining time sink.

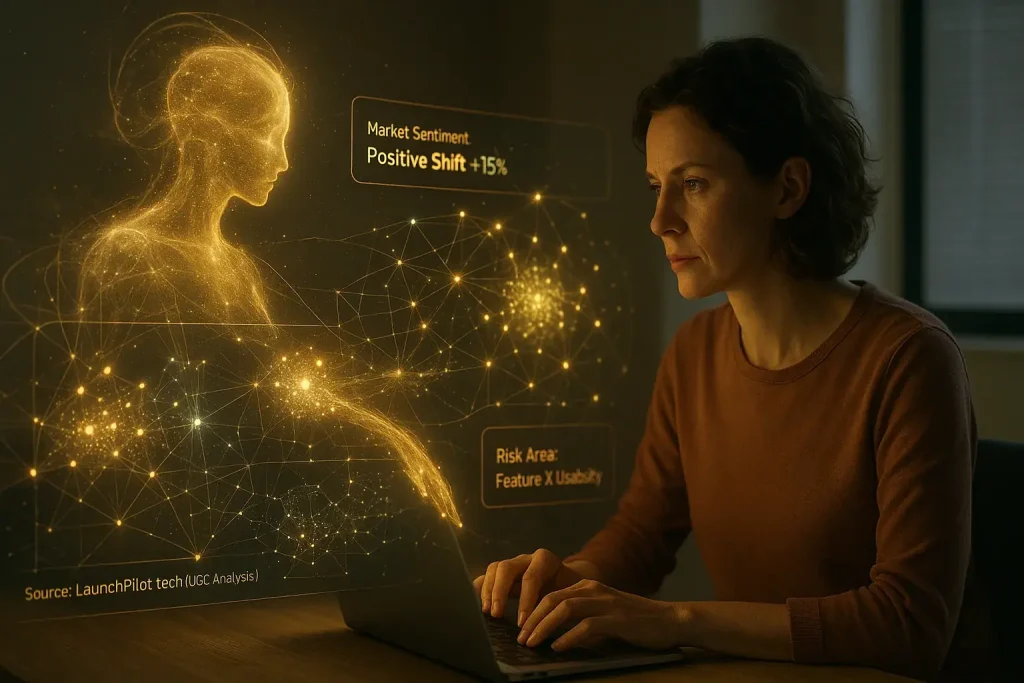

So, what transforms AI co-pilot integration from chaos to clarity? This page delivers practical, UGC-backed strategies. We focus on defining roles. We detail clear team responsibilities. We help streamline workflows for small indie teams using these tools. The aim, echoed in many maker success stories, is simple. Your AI co-pilot becomes a true force multiplier. It stops being a source of team friction. A clear game plan ensures your chosen tool genuinely elevates your team's impact.

Who 'Owns' the AI? Defining Roles & Responsibilities in Your Indie Team (No More Guesswork, Just Clear Lines)

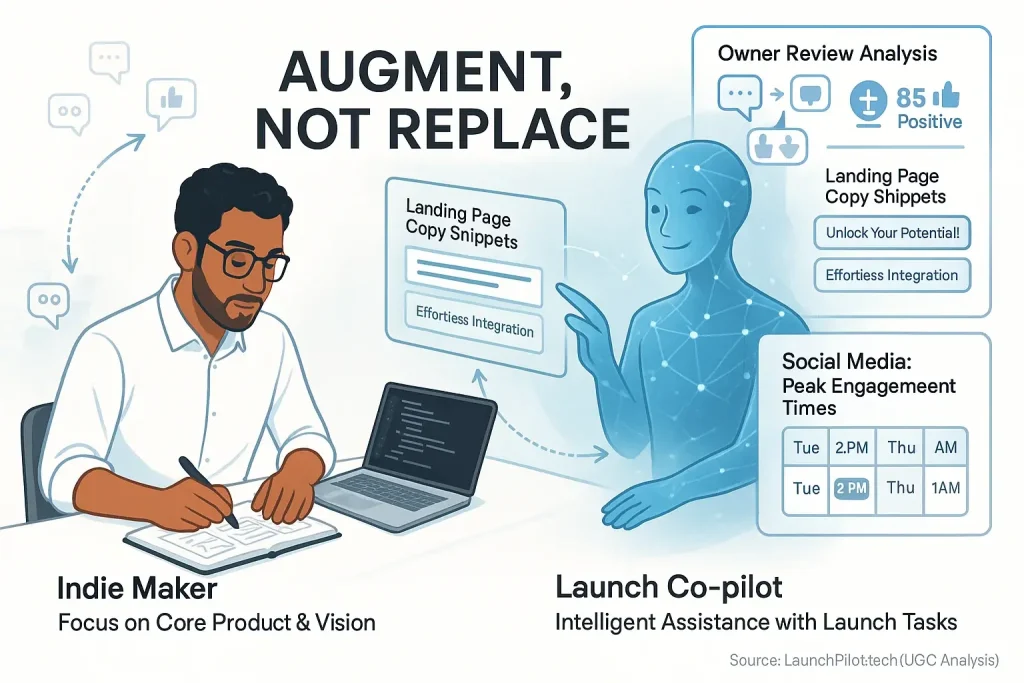

Small indie teams juggle many tasks. But AI co-pilots demand clear ownership. Without defined roles, powerful AI co-pilots often gather dust. Or worse, teams misuse them. This pattern comes up frequently in indie creator communities.

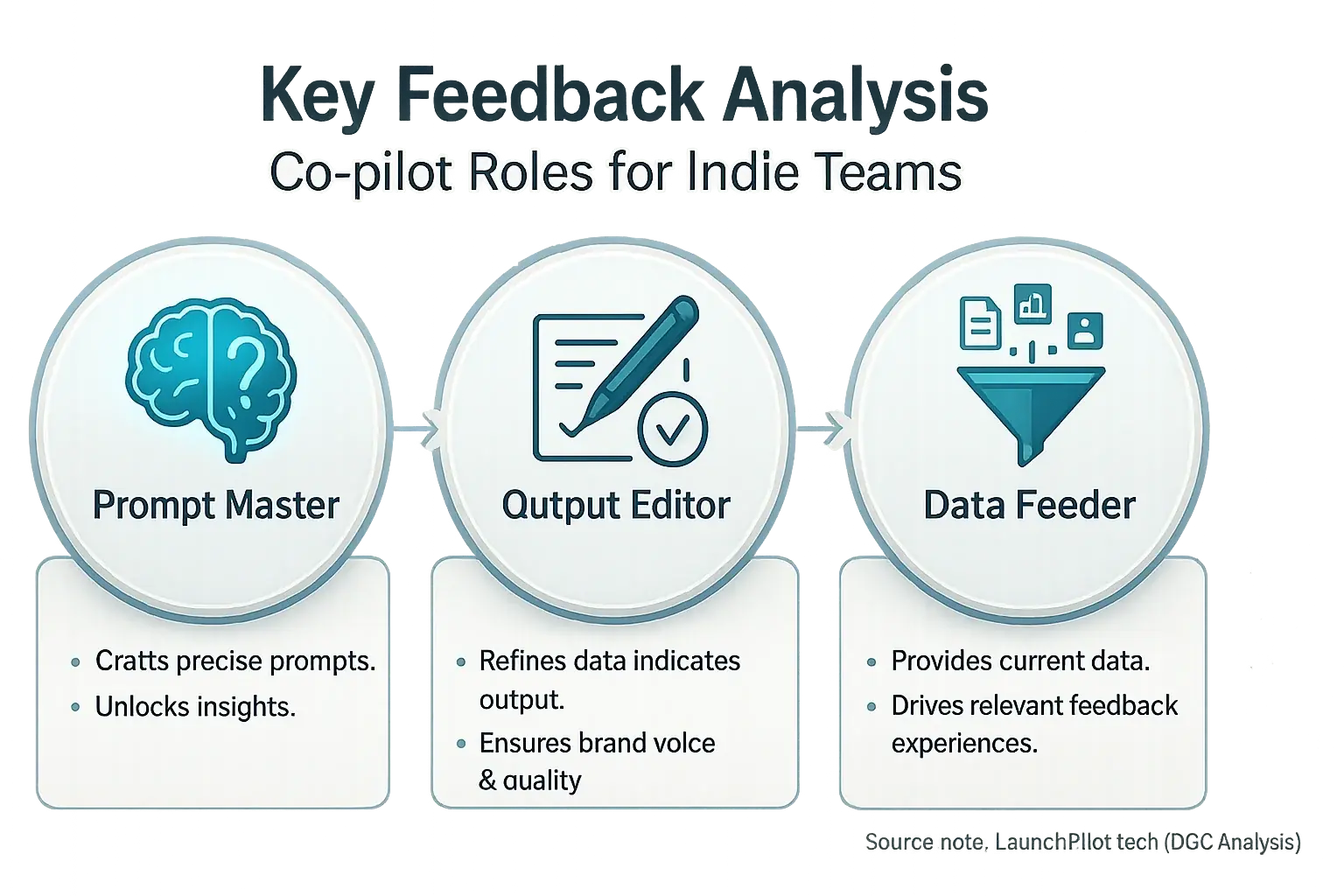

Our examination of indie experiences reveals common successful roles. The 'AI Prompt Master' deeply understands the co-pilot. They craft precise questions. This skill unlocks relevant insights from your co-pilot. Next, the 'AI Output Editor' refines the co-pilot's generated text. They ensure it matches your brand's unique voice. Quality control is their focus.

Consider the 'AI Data Feeder' next. This role feeds the co-pilot current, accurate information about your product, your audience, and your market. Indie makers consistently report that input quality dictates how relevant the co-pilot's output is. These roles can be shared. They can rotate. Clear definition still remains essential for effective co-pilot use.

Defining these roles is not corporate procedure. It is a survival tactic for lean teams. Clarity here makes your AI co-pilot a powerful ally. Friction drops. Efficiency climbs. Your focused efforts then directly impact launch success. Real results. That's the goal, right?

Streamlining Your Collaborative AI Workflows (Shared Prompts, Review Loops & Avoiding Bottlenecks)

Clear roles mark the beginning. Efficient teams then build workflows. These workflows make AI a seamless part of daily operations. Real efficiency gains happen here. Think of it as choreographing a dance between your team and your AI tools.

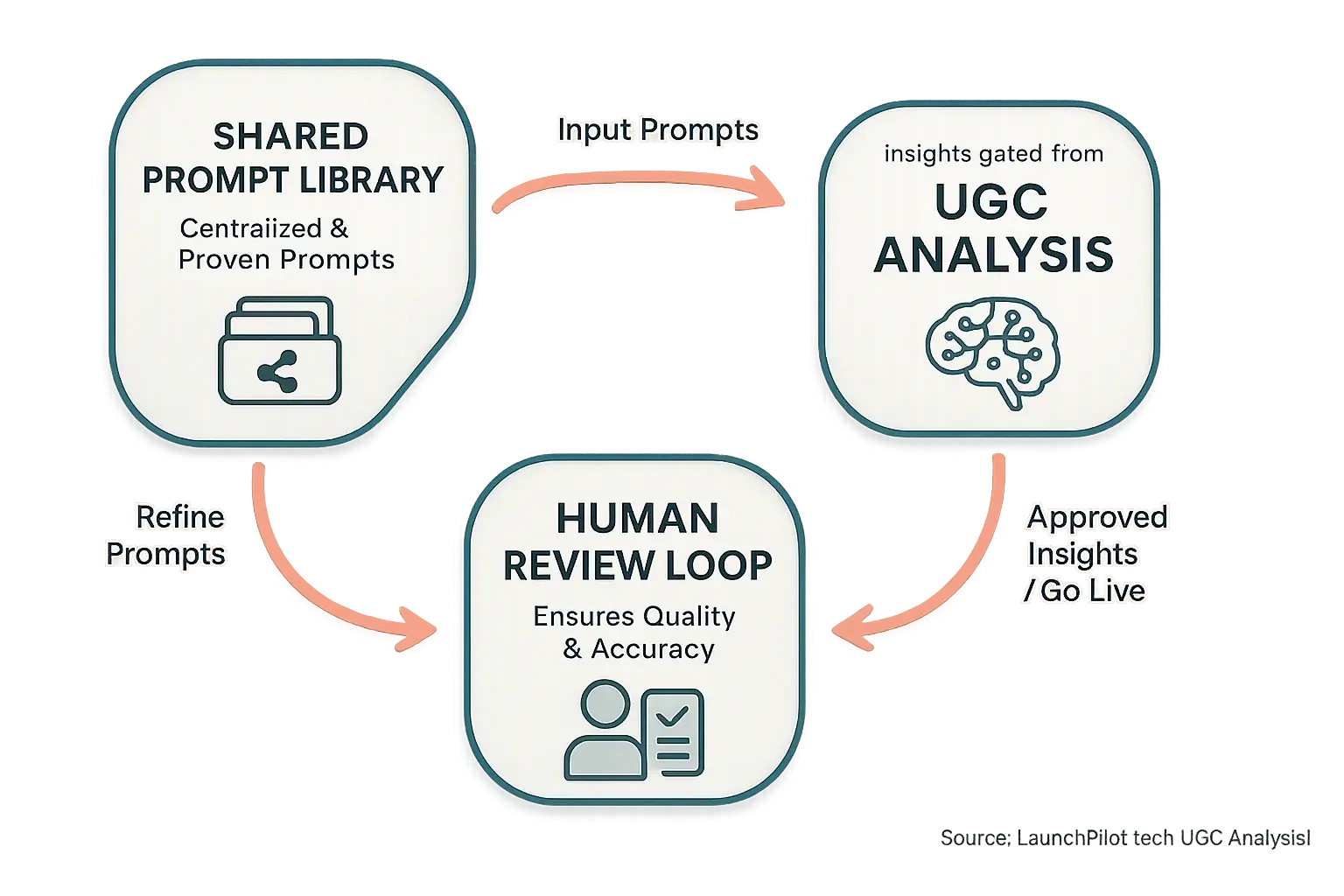

Many successful indie teams champion a shared prompt library. This library is a central hub. It houses your best prompts for your AI tools. These prompts cover social media, email, even brainstorming. This practice stops constant 'reinventing the wheel'. It ensures consistent quality in AI output. That consistency addresses a common frustration we've observed in indie maker feedback. No more "what prompt did you use for that?" questions. Everyone accesses proven, effective prompts.
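One way to picture a shared prompt library is as a small, named collection of templates that everyone fills in the same way. The sketch below is only an illustration: the template names, wording, and the `render_prompt` helper are hypothetical, and a shared document or spreadsheet would serve the same purpose.

```python
from string import Template

# Hypothetical shared prompt library. The keys and wording are illustrative
# placeholders, not prompts recommended by this article.
PROMPT_LIBRARY = {
    "social_post": Template(
        "Write a $tone social media post announcing $product for $audience."
    ),
    "email_intro": Template(
        "Draft the opening email of a launch sequence for $product, "
        "aimed at $audience, in a $tone voice."
    ),
}


def render_prompt(name: str, **fields: str) -> str:
    """Fill in a shared template.

    Raises KeyError if the template name is unknown or a field is missing,
    so incomplete prompts are caught before they reach the AI tool.
    """
    return PROMPT_LIBRARY[name].substitute(**fields)


if __name__ == "__main__":
    print(
        render_prompt(
            "social_post",
            tone="friendly",
            product="Acme Notes",  # made-up product name
            audience="indie makers",
        )
    )
```

Because every teammate renders the same template, the answer to "what prompt did you use for that?" is simply the template name.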

AI tools deliver powerful starting points. A robust human review loop, however, remains vital for quality. This means designated team members check AI-generated content. They verify brand voice. They confirm accuracy. They assess overall quality. This check must occur before any content goes live. Indie makers consistently report that skipping this step often leads to preventable errors or generic output.
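The review loop described above can be sketched as a simple gate: a draft is publishable only after each check has been signed off. This is a minimal illustration under stated assumptions. The `Draft` class and the check identifiers are hypothetical names chosen to mirror the three checks in the text.

```python
from dataclasses import dataclass, field

# The three checks named in the text; the identifiers are illustrative.
REQUIRED_CHECKS = ("brand_voice", "accuracy", "overall_quality")


@dataclass
class Draft:
    """A piece of AI-generated content awaiting human review."""

    text: str
    passed_checks: set = field(default_factory=set)

    def approve(self, check: str) -> None:
        """Record that a reviewer has signed off on one check."""
        if check not in REQUIRED_CHECKS:
            raise ValueError(f"unknown check: {check}")
        self.passed_checks.add(check)

    def missing_checks(self) -> list:
        """List the checks still outstanding, in a fixed order."""
        return [c for c in REQUIRED_CHECKS if c not in self.passed_checks]

    def ready_to_publish(self) -> bool:
        """True only when every required check has been approved."""
        return not self.missing_checks()


if __name__ == "__main__":
    draft = Draft("Launch day is here!")
    draft.approve("brand_voice")
    print(draft.ready_to_publish(), draft.missing_checks())
```

The point of the gate is that "goes live" becomes a question with a yes or no answer, rather than something each teammate judges differently.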

Shared prompts streamline creation. Clear review loops maintain high standards. Implementing these elements helps your small team leverage AI. You can do this without creating new bottlenecks. This systematic integration is what we see setting highly efficient indie teams apart. It frees precious human time. Your team then focuses on creative, strategic tasks. Those are the tasks only your team can truly own.

Interactive Tool: Define Your Indie Team's AI Roles & Responsibilities (Get Your Customized Task Matrix)

Define Your Team's AI Roles

Answer a few quick questions to get a customized AI task matrix for your indie team.

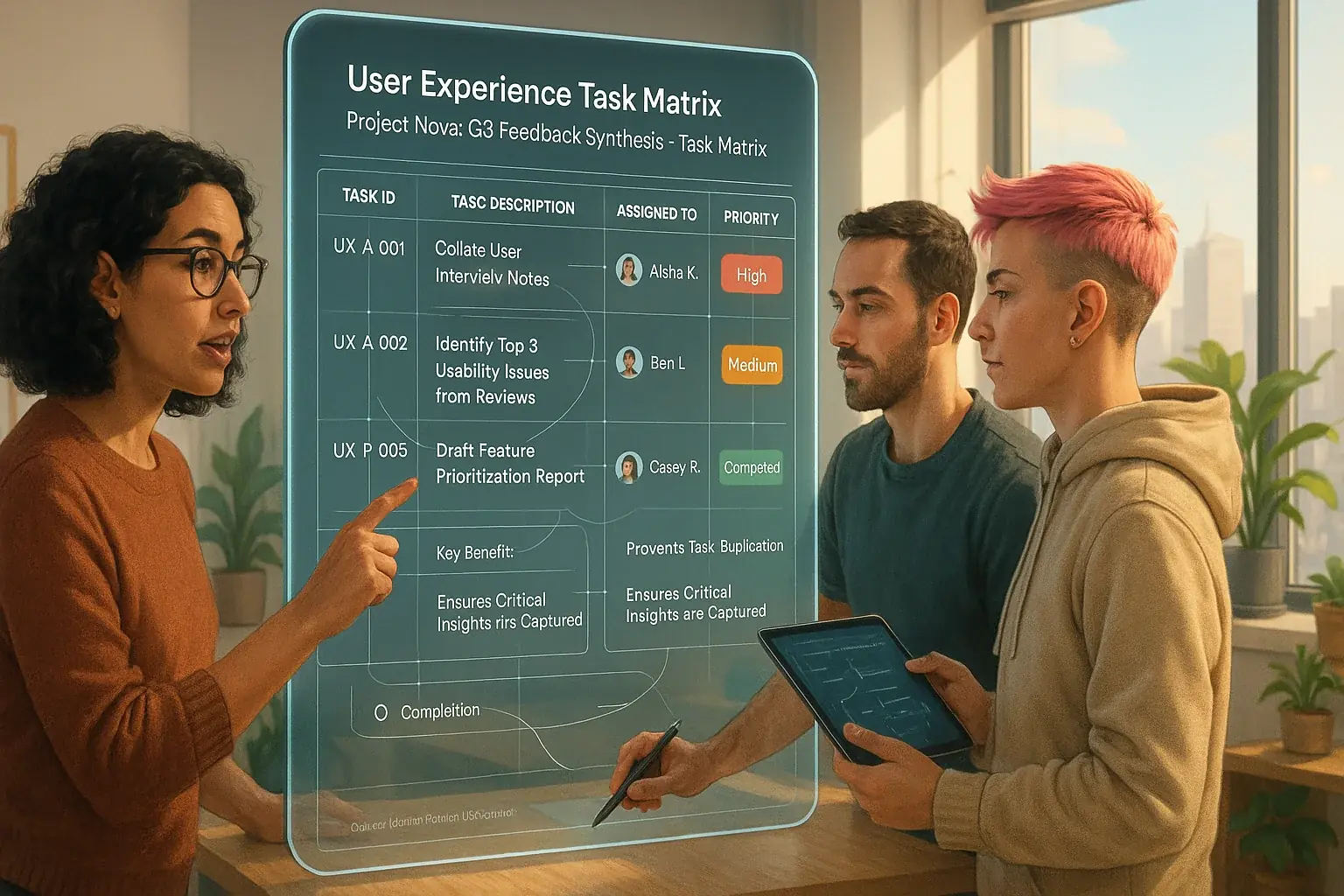

So, you've got your customized AI task matrix. This isn't just a fancy chart. It is your team's blueprint. Seamless AI integration is the goal. Indie makers often report that unclear responsibilities derail AI efforts. This matrix directly addresses that widespread challenge. It prevents duplicated work. It ensures critical tasks are not missed. This clarity, based on collective indie wisdom, transforms how your team works with AI.

Now, share this matrix with your team. Discuss each role. Clarify all expectations. Agree on how you will communicate about AI tasks. This step is crucial. Many successful indies emphasize constant dialogue for team alignment. Remember, the best plans are living documents. Adapt them. You will learn. You will grow. Synthesized indie maker feedback shows that continuous refinement is what separates thriving teams from struggling ones when working with AI.

Defining these roles upfront offers huge benefits. You avoid headaches. True. You also build a foundation. This foundation supports truly scalable AI-assisted launches. This proactive approach, as many indie teams discover, pays dividends. Time saved. Stress reduced. That is the power of clear delegation, a consistent theme we see in successful indie product stories.

Maximizing Efficiency: Optimizing Your Micro-Team's AI Integration for Launch Success (UGC-Proven Strategies)

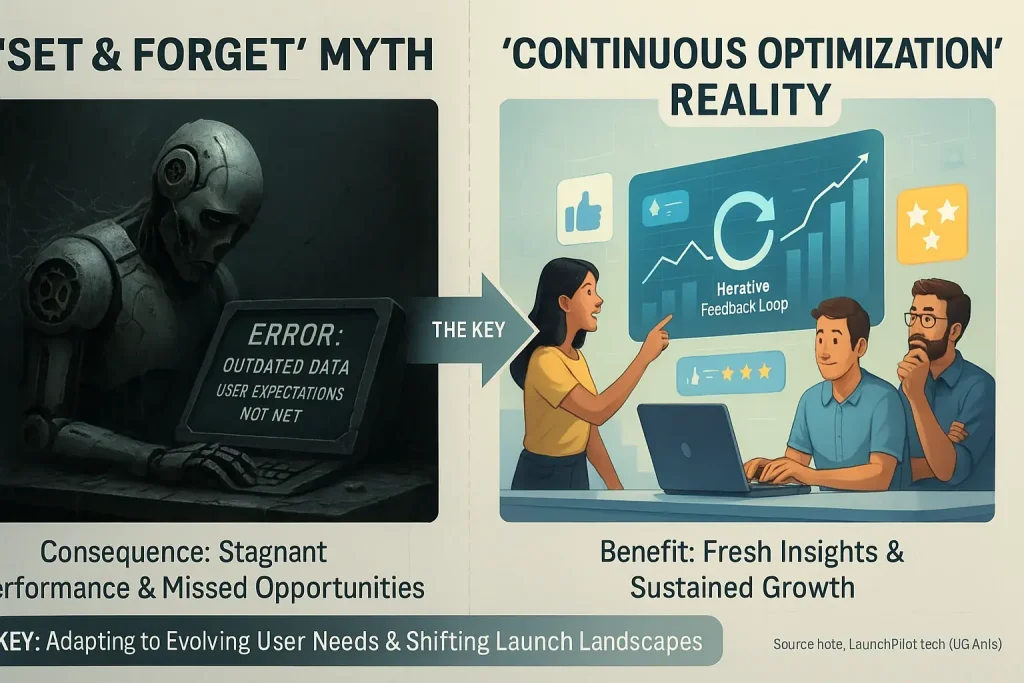

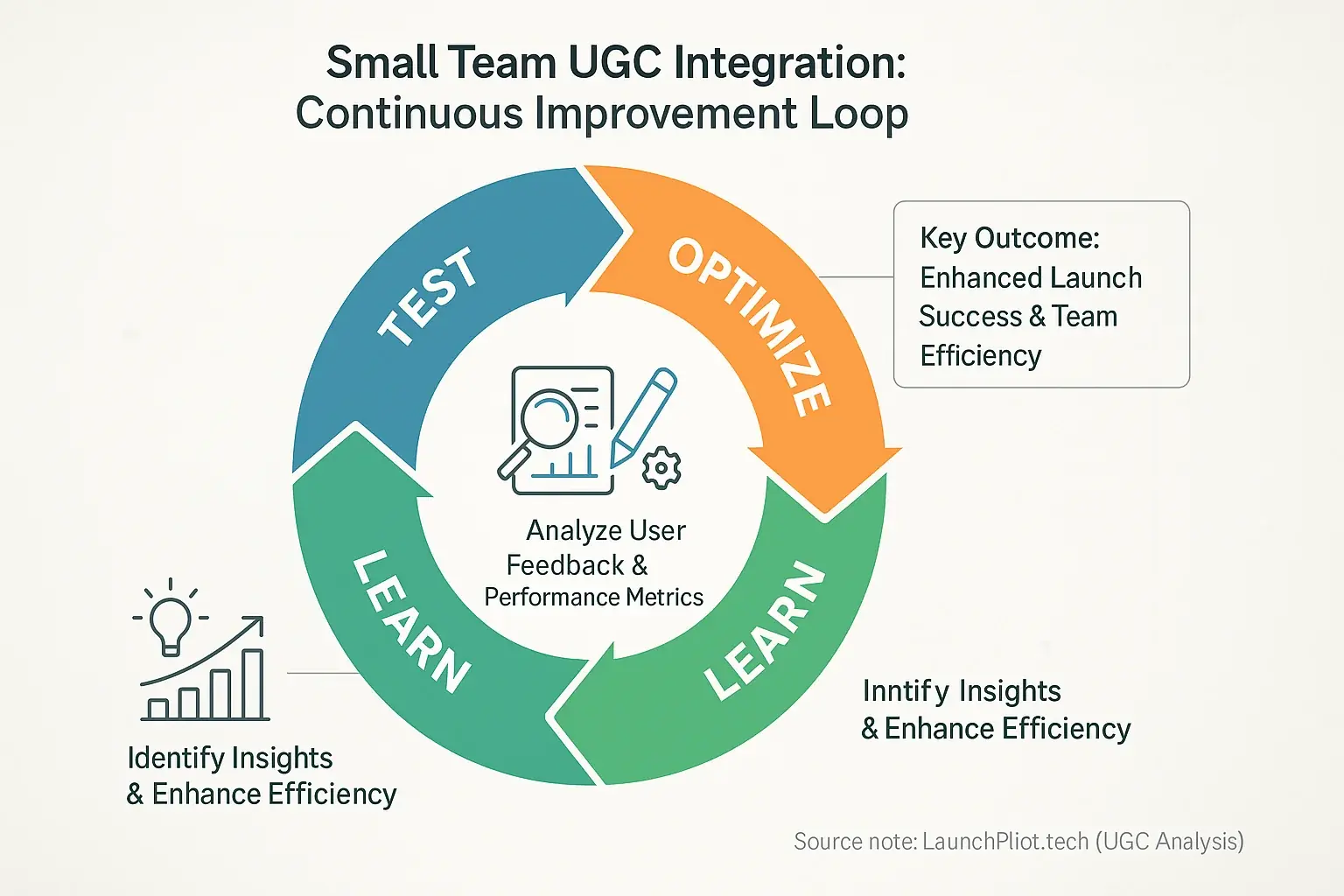

Your team's AI tool integration is not a one-time setup. That is just the first step. Real launch efficiency grows when you continuously optimize its use. Think of it like this. You constantly fine-tune your launch engine for peak performance. This iterative approach to AI integration is vital for small teams. Indie makers widely report this ongoing process unlocks sustained benefits.

Successful indie teams build strong feedback loops. They regularly check AI output quality. They monitor impact on launch metrics. The team discusses what works. Or what does not. User experiences highlight a key pattern. Creators refine prompts based on real-world results from their AI tools. This practice often leads to dramatically better AI assistance for launch tasks.

Consider periodic 'AI Performance Checks' for your micro-team. Ask key questions. Does this AI task still save significant time? Is the output quality from your AI tool consistently high? Are you using the tool’s full capabilities? These checks need not be complex. A quick weekly review often reveals big improvement areas. It also spots where AI integration might underperform. This proactive stance prevents tool effectiveness from declining over time.
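A weekly check like this can be as light as recording yes or no answers to the three questions for each task and flagging any task with a "no". The sketch below assumes exactly that. `TaskCheck`, `needs_attention`, and the sample task names are hypothetical, and the answers are made-up illustration data, not results.

```python
from dataclasses import dataclass


@dataclass
class TaskCheck:
    """Answers to the three review questions for one AI-assisted task."""

    task: str
    saves_time: bool              # Does the task still save significant time?
    quality_high: bool            # Is output quality consistently high?
    uses_full_capabilities: bool  # Is the tool's full capability in use?


def needs_attention(checks):
    """Return the names of tasks where any question was answered 'no'."""
    return [
        c.task
        for c in checks
        if not (c.saves_time and c.quality_high and c.uses_full_capabilities)
    ]


if __name__ == "__main__":
    # Illustrative answers only.
    this_week = [
        TaskCheck("social copy", True, True, True),
        TaskCheck("email sequences", True, False, True),
    ]
    print(needs_attention(this_week))
```

Anything the function returns becomes the agenda for the next team discussion, which keeps the review quick.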

Embrace this iterative improvement cycle. Your small team can evolve with its AI co-pilot. This commitment helps your AI tool remain a powerful launch asset. It becomes a true partner. Constantly learning. Adapting alongside you. Many creators report this transforms their workflow, making sophisticated AI genuinely accessible.