The Unseen Risk: Why AI Co-pilot Data Security Keeps Indie Makers Up at Night

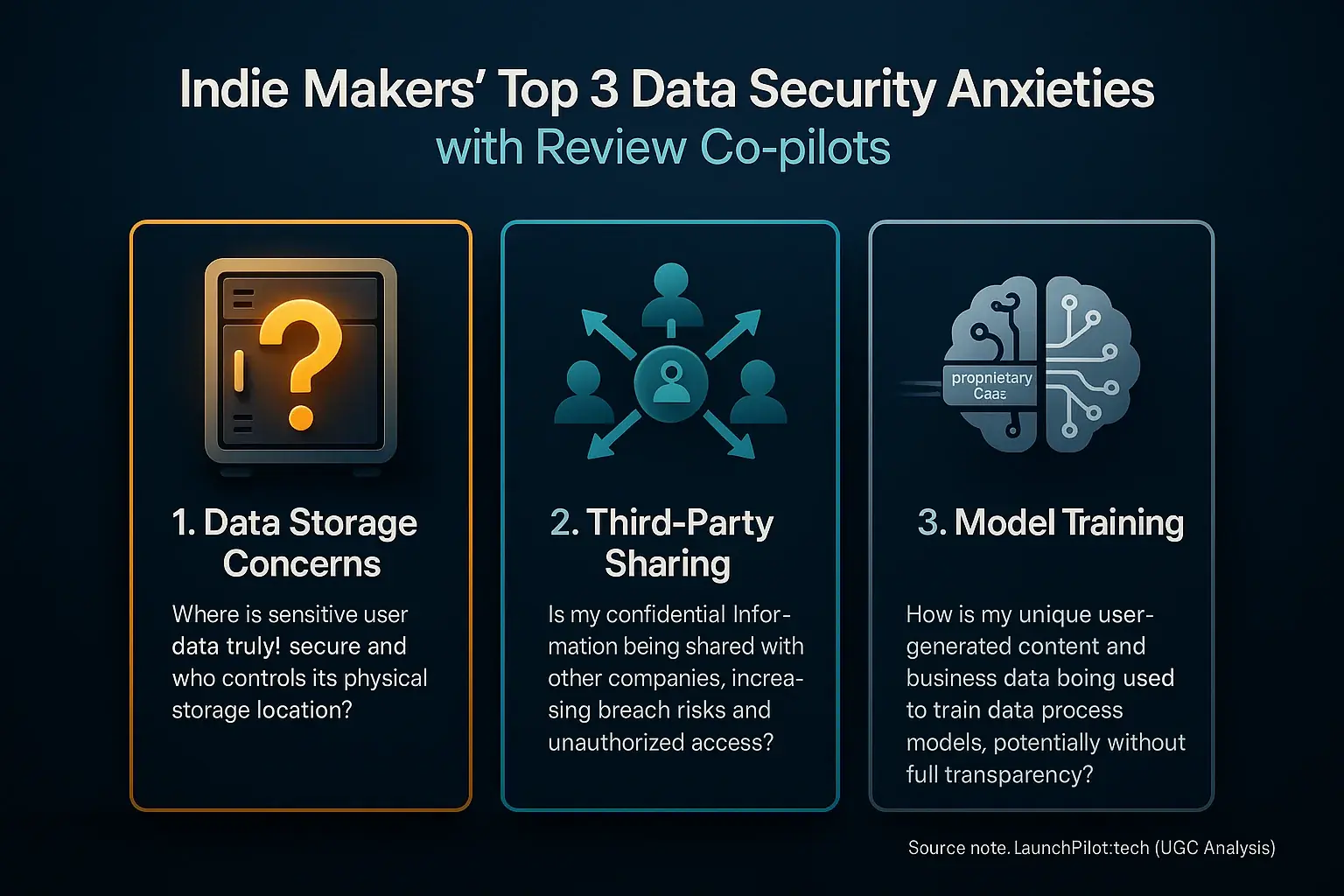

You've poured your soul into your product. You've gathered precious early customer data. Now you're feeding it into a launch co-pilot. But wait. Where exactly is all that sensitive information going? And who else sees it? This isn't just paranoia, fellow indie maker. Our deep analysis of user-generated content confirms these are widespread, legitimate anxieties. These indie maker concerns ripple through online communities whenever AI co-pilot data security comes up.

Many indie creators report feeling a distinct unease. Their launch strategies, customer lists, and proprietary product details are all sensitive data. The big question looms: where does it all truly reside? Who actually controls access to your information? The marketing for these platforms often highlights benefits. The potential privacy risks? Less discussed. Our investigation into community experiences digs into these unspoken truths about information control and data handling.

LaunchPilot.tech exists to pull back this very curtain. We meticulously synthesize indie maker feedback to illuminate genuine data security vulnerabilities with these tools. This is not about spreading fear. It is about fostering crucial awareness. Understanding these often hidden risks empowers you. You can then manage your data more effectively. You can make smarter, safer decisions for your venture.

The Black Box Problem: Where Does Your Data Actually Go? (Data Storage Policies)

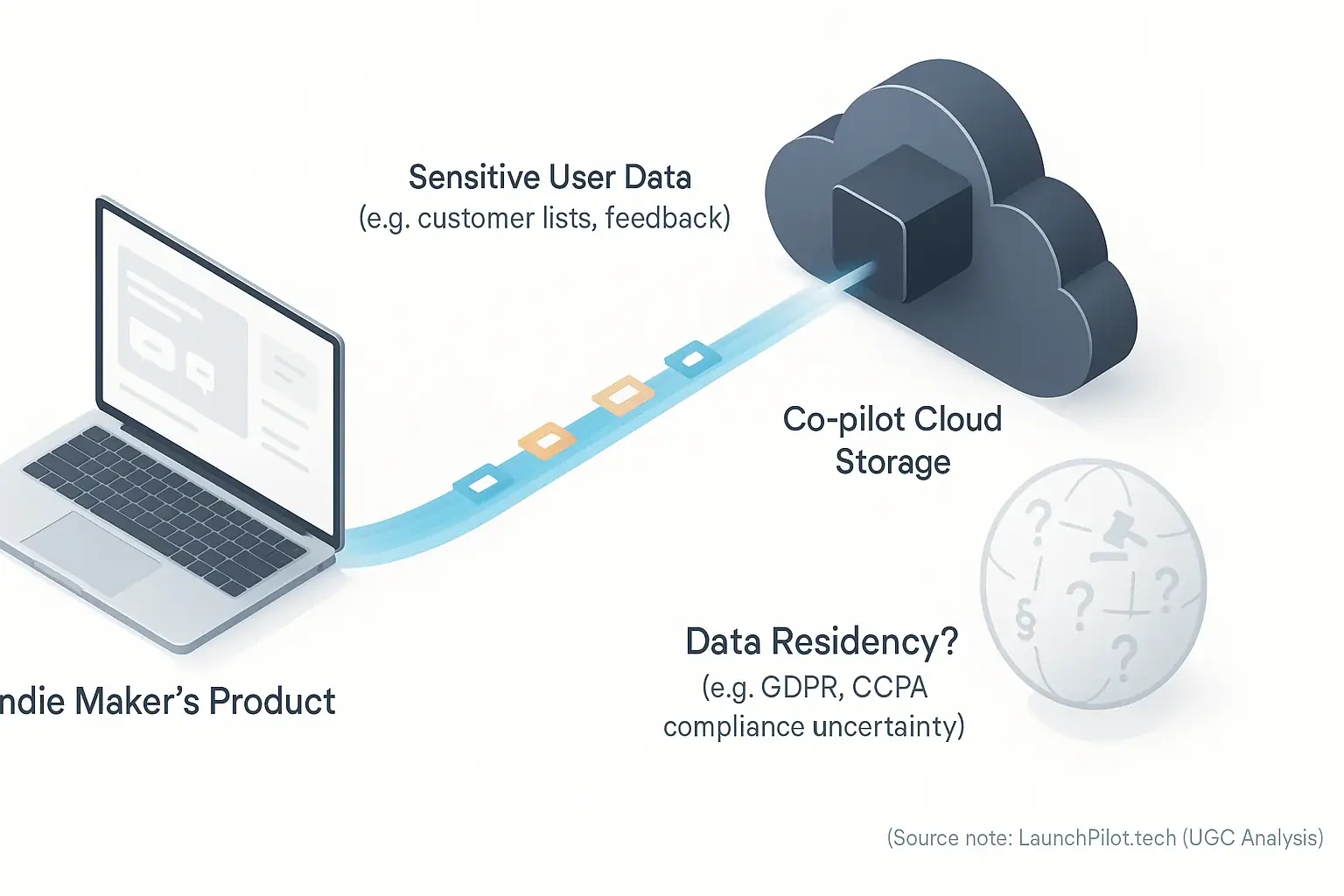

Many AI co-pilots store your data on cloud servers. This is common. This approach, however, creates significant anxiety among indie makers. The core issue? Control. Questions about data jurisdiction immediately surface. Our synthesis of indie maker feedback shows a persistent question: "Where exactly does my data live?"

Data residency deeply concerns many users. This isn't just legal jargon; it's a practical headache. Compliance with regional regulations like GDPR or CCPA often hinges on data location. Imagine your customer list. Carefully built over years. Now, picture that sensitive information residing on a server in a country with minimal privacy safeguards. That exact scenario is a recurring nightmare voiced in countless indie maker discussions.

The lack of clear data deletion policies is another prominent frustration found across user forums. Indie makers frequently ask: "What happens when I terminate my service?" Or, "How long is my data truly retained?" Vague responses, or worse, silence on these points, significantly erode trust. Our analysis indicates transparent deletion protocols are a major factor for indie confidence.

Some AI tools do offer on-premises or private cloud storage options. These alternatives can provide greater control over jurisdiction. That's a plus. The problem? Cost. These solutions typically target enterprise budgets. For most indie makers and small teams, such options remain financially prohibitive, a common point of disappointment in user reviews.

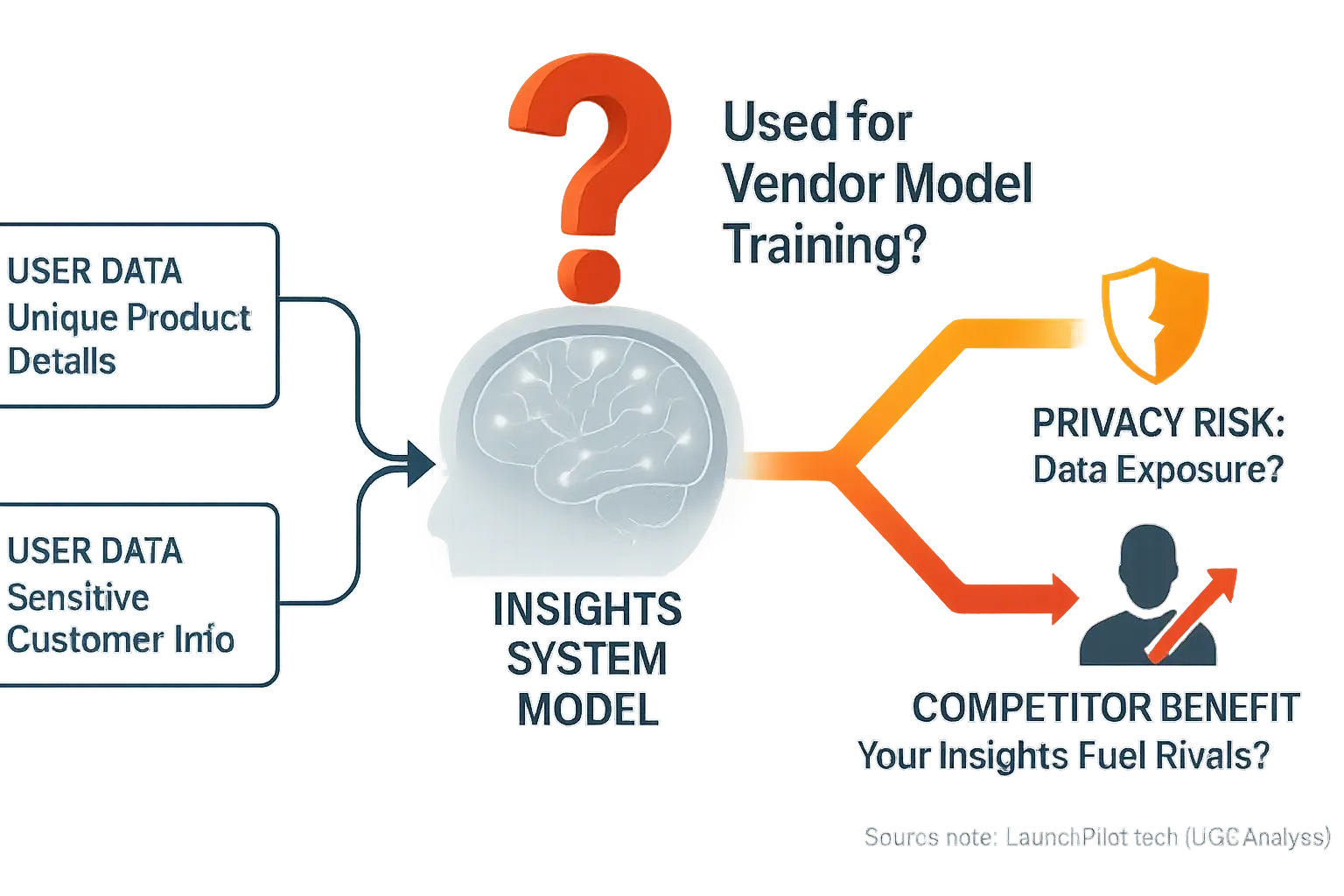

The AI's 'Meal Plan': What Happens When Your Data Trains Their Models? (AI Model Training Data)

What truly happens when your data feeds AI models? This question troubles many indie makers. Our comprehensive synthesis of indie maker feedback reveals a core anxiety. Your unique product details. Your sensitive customer information. Does this input train the vendor's general AI models?

This possibility raises stark fears about proprietary data. Could your hard-won insights become public? Worse, might they inadvertently arm your competitors? Many users report this concern. The potential loss of competitive advantage is a genuine risk.

Vendor transparency on AI model training data often falls short. Indie makers consistently report vague policies. Clear answers about data usage can be elusive. This opacity fuels distrust. It's a major problem.

Some services mention 'opt-out' options. Private model training exists. But patterns observed across extensive user discussions show a catch. These safeguards are frequently expensive, enterprise-level features. This leaves many small teams feeling vulnerable.

The Unseen Handshakes: Third-Party Data Sharing & Sub-Processors (UGC Suspicions)

Many indie makers discover a troubling reality. Your data's journey with AI co-pilots is rarely straightforward. Our rigorous examination of aggregated user experiences uncovers widespread suspicion about these "unseen handshakes." You adopt a new AI tool. You feed it your valuable customer insights. You believe that data stays put. Secure. But what if it doesn't? Feedback from the indie launch community suggests your data might silently travel. Passed to a cloud provider you didn't choose. Shared with an analytics service you've never heard of. Perhaps even processed by another AI model entirely. The critical question from users: did you consent to this hidden data flow?

This hidden network of data sharing is a significant concern. AI tools, especially sophisticated co-pilots, often rely on sub-processors. These are third-party services. Think cloud infrastructure. External analytics platforms. Specialized AI components. Each one represents another node in your data's journey. Another potential point of vulnerability. The collective wisdom from the indie launch community highlights intense frustration here. Vendor agreements frequently obscure these relationships. Or they omit them. This lack of transparency about sub-processors raises immediate red flags for countless creators managing sensitive information.

The opacity of vendor agreements fuels deep user distrust. Indie creators report spending hours trying to decipher complex legal documents. They search for clarity on third-party data sharing. Often, they find vague clauses. Or no mention at all. This isn't just an annoyance. It's the point where risk assessment breaks down. When a tool's data handling practices are murky, how can you confidently assess compliance? How can you protect your users' data? The patterns in community discussions show this is a major hurdle for solopreneurs who lack dedicated legal teams.

These unclear data sharing practices can lead to serious consequences. Unexpected data exposure is a primary fear echoed in countless user forums. A sub-processor experiences a breach. Suddenly, your customer data is compromised. Non-compliance with regulations like GDPR or CCPA becomes a real threat. For an independent creator, the fallout can be devastating. Financial penalties. Reputational damage. Lost user trust. Therefore, meticulous due diligence on any tool's complete data sharing ecosystem isn't just advisable. It's essential for survival.

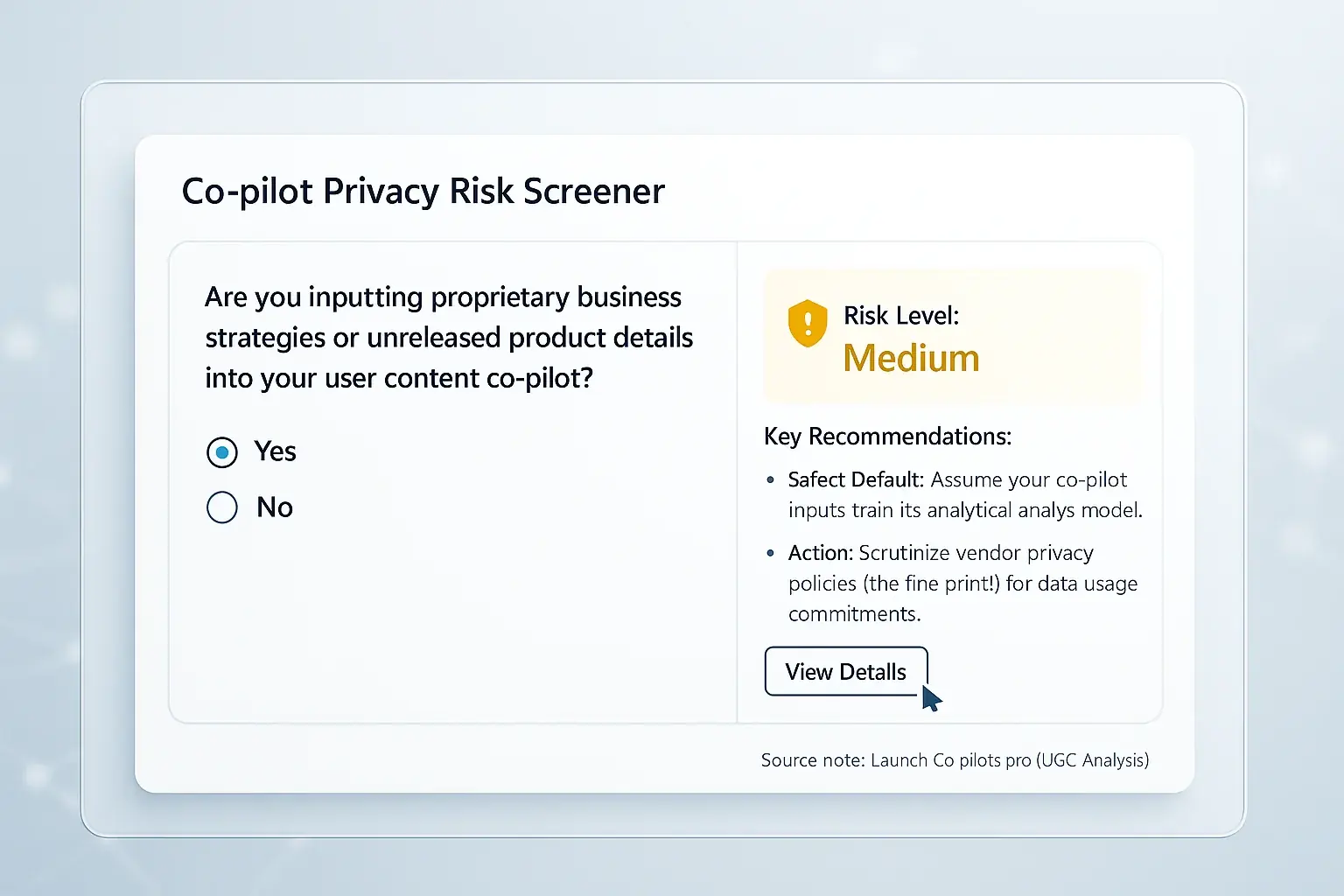

Interactive Tool: AI Co-pilot Privacy Risk Screener (Identify Your Vulnerabilities)

Quickly assess potential data privacy risks with your AI Launch Co-pilot. Answer a few questions to get personalized insights.

1. Do you upload sensitive customer data (e.g., email lists, personal info, financial details) directly into your AI co-pilot?

2. Is your product or service in a regulated industry (e.g., healthcare, finance, education) where strict data privacy laws apply?

3. Does the AI tool's privacy policy explicitly state that your input data is NOT used for training their general AI models?

4. Do you know if the AI co-pilot shares your data with any third-party sub-processors (e.g., other cloud services, analytics providers)?

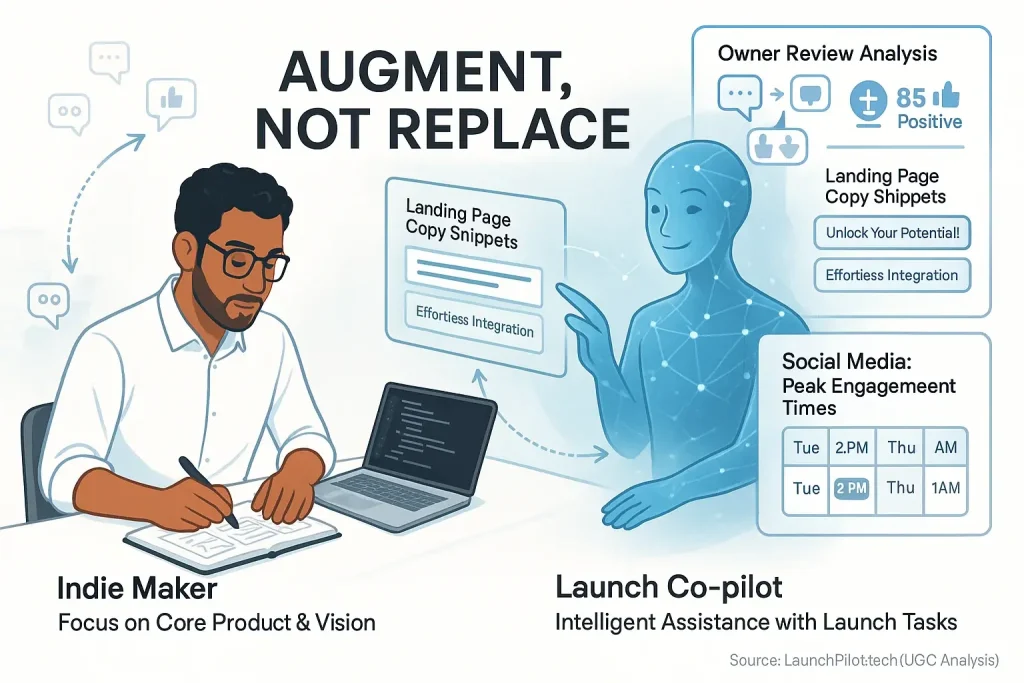

This screener quickly illuminates potential privacy risks with your AI co-pilot. It distills wisdom from countless indie maker experiences. Understanding your specific vulnerabilities is fundamental. This clarity empowers proactive data protection. Real safety starts here.

Proactive data management is a cornerstone for every indie creator. A key insight from aggregated user experiences: assume your data inputs help train the co-pilot's model. This is the safest default. Vendor privacy policies—the detailed ones, not marketing blurbs—must explicitly state otherwise. Always scrutinize that fine print.
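To make the "safest default" concrete, here is a minimal sketch of how the four screener questions could be turned into risk flags. It is illustrative only: the `ScreenerAnswers` fields, the flag wording, and the thresholds in `risk_level` are assumptions made for this example, not the actual logic behind the screener. Note how an unanswered or "not sure" response to questions 3 and 4 is treated as a risk.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ScreenerAnswers:
    """Answers to the four screener questions (field names are hypothetical)."""
    uploads_sensitive_data: bool  # Question 1
    regulated_industry: bool      # Question 2
    # Question 3: None means "not sure" and is treated like "no".
    policy_excludes_training: Optional[bool] = None
    # Question 4: None means "not sure" and is treated like "no".
    knows_subprocessors: Optional[bool] = None


def risk_flags(answers: ScreenerAnswers) -> List[str]:
    """Return one human-readable flag per risk the answers reveal."""
    flags = []
    if answers.uploads_sensitive_data:
        flags.append("sensitive customer data is uploaded to the co-pilot")
    if answers.regulated_industry:
        flags.append("regulated industry: stricter privacy laws may apply")
    # Safest default: unless the policy explicitly says otherwise,
    # assume inputs may be used to train the vendor's general models.
    if answers.policy_excludes_training is not True:
        flags.append("inputs may be used for general model training")
    if answers.knows_subprocessors is not True:
        flags.append("third-party sub-processors are unknown")
    return flags


def risk_level(answers: ScreenerAnswers) -> str:
    """Map the number of flags to a coarse level (thresholds are arbitrary)."""
    count = len(risk_flags(answers))
    if count >= 3:
        return "high"
    if count >= 1:
        return "medium"
    return "low"
```

A maker who uploads customer emails and has not yet read the privacy policy would call `risk_level(ScreenerAnswers(uploads_sensitive_data=True, regulated_industry=False))` and land in the "high" bucket, because both unknowns count against the tool until the fine print says otherwise.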

Your Data, Your Rules: Empowering Indie Makers with Control & Compliance (GDPR, CCPA & Beyond)

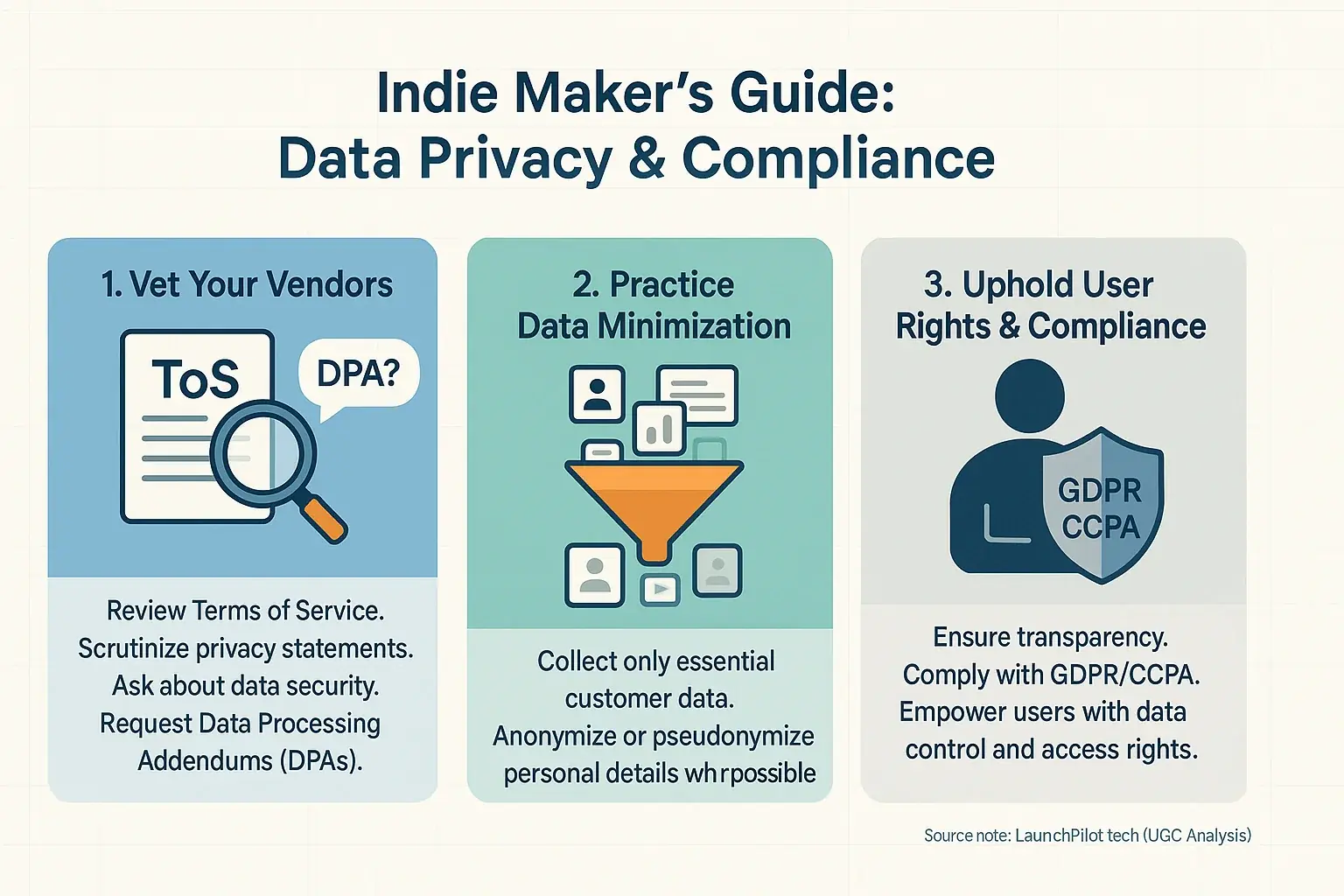

Your data demands your rules. Indie makers must control customer information. This applies to you. Data privacy laws like GDPR and CCPA are not just for giants. You have a loyal customer base. They trust you with their data. That trust extends to the AI tools you use. Protecting their information is vital. Community experiences underscore this growing responsibility for solo founders.

How do you maintain control? Start with vendor policies. Always review any co-pilot's terms of service. Scrutinize their privacy statements. Do not blindly click 'accept'. User-generated content reveals painful lessons learned from policy neglect. Demand clear answers from vendors. Ask about data processing locations. What security measures protect user data? Can they provide a Data Processing Addendum (DPA)? Transparency is non-negotiable.

Implement smart data practices now. Data minimization is a core principle. Collect only essential customer data. Anonymization offers another protection layer. Explore tools that pseudonymize personal details. Understand user rights thoroughly. Your users retain rights over their data. This holds true even with third-party platforms. Proactive management builds trust. It ensures compliance. Our analysis of indie maker discussions confirms these steps are crucial.
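As a rough illustration of data minimization and pseudonymization, the sketch below strips a customer record down to an allow-list of fields and replaces the email address with a keyed hash before anything is sent to a co-pilot. The field names, the `ESSENTIAL_FIELDS` allow-list, and the helper names are hypothetical. It uses HMAC-SHA256 from Python's standard library as one possible technique; other approaches exist.

```python
import hashlib
import hmac

# Hypothetical allow-list: only these fields ever leave your system.
ESSENTIAL_FIELDS = {"plan", "signup_month", "feedback"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same input and key always give the same token, so records can
    still be grouped per customer without exposing the identifier.
    """
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


def prepare_record(record: dict, secret_key: bytes) -> dict:
    """Drop non-essential fields and swap the email for a pseudonym."""
    minimized = {k: v for k, v in record.items() if k in ESSENTIAL_FIELDS}
    if "email" in record:
        minimized["customer_id"] = pseudonymize(record["email"], secret_key)
    return minimized


if __name__ == "__main__":
    # Keep the real key in your own secrets store, never in the AI tool.
    key = b"replace-with-a-long-random-secret"
    raw = {
        "email": "Ada@example.com",
        "name": "Ada",
        "plan": "pro",
        "feedback": "Onboarding was confusing.",
    }
    print(prepare_record(raw, key))
```

Keep in mind that pseudonymized data generally still counts as personal data under GDPR, because whoever holds the key can link the tokens back to people. The technique reduces exposure; it does not remove your obligations.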

Navigating the Data Frontier with Confidence

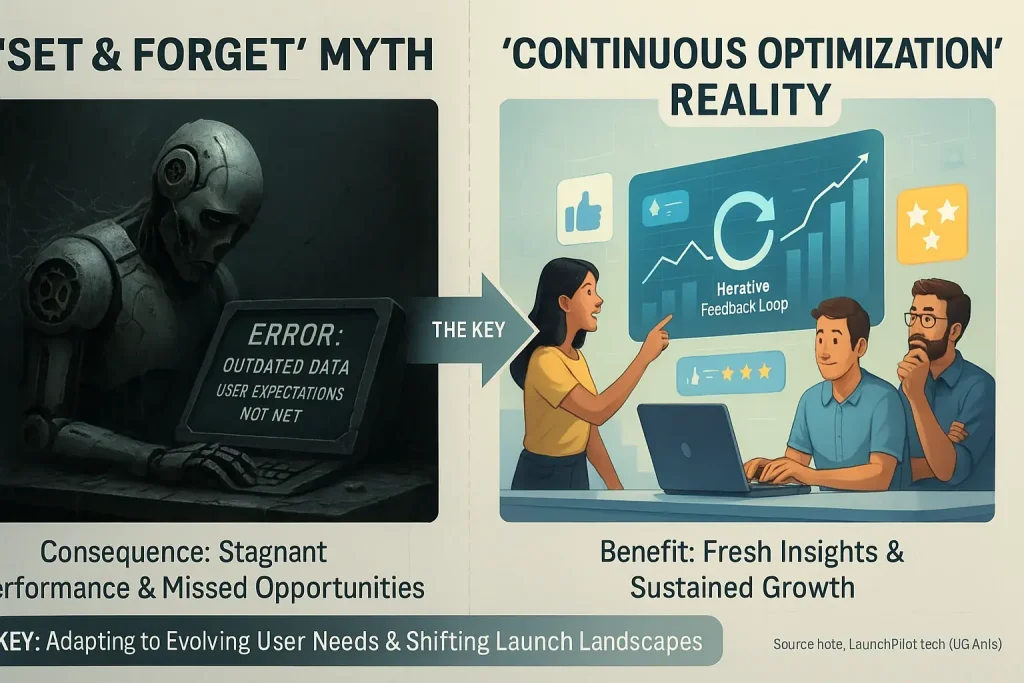

AI co-pilot data security often feels complex. We get it. User discussions highlight this initial hurdle. But many indie makers discover a powerful truth. Data security builds essential user trust. This trust forms your business's strong foundation. It is not a roadblock. It is an opportunity. Proactive understanding transforms anxiety into genuine advantage for your venture.

Your users value their data. Indie maker communities constantly echo this sentiment. Protecting that information builds powerful user confidence. This confidence directly translates into brand loyalty. Think of it. Stronger connections. Sustainable growth. These are not just buzzwords. They are outcomes reported by makers who prioritize security, turning a potential concern into a competitive edge.

The tech frontier always evolves. New co-pilot features bring new questions. That’s natural. LaunchPilot.tech stays vigilant for you. We continuously synthesize indie maker experiences. We distill practical guidance from extensive user discussions. Our commitment is clear. We provide transparent insights. You navigate this space with clarity. Always.