The Indie Maker's Growth Dilemma: Why AI Co-pilot Scalability Isn't Just a 'Big Business' Problem

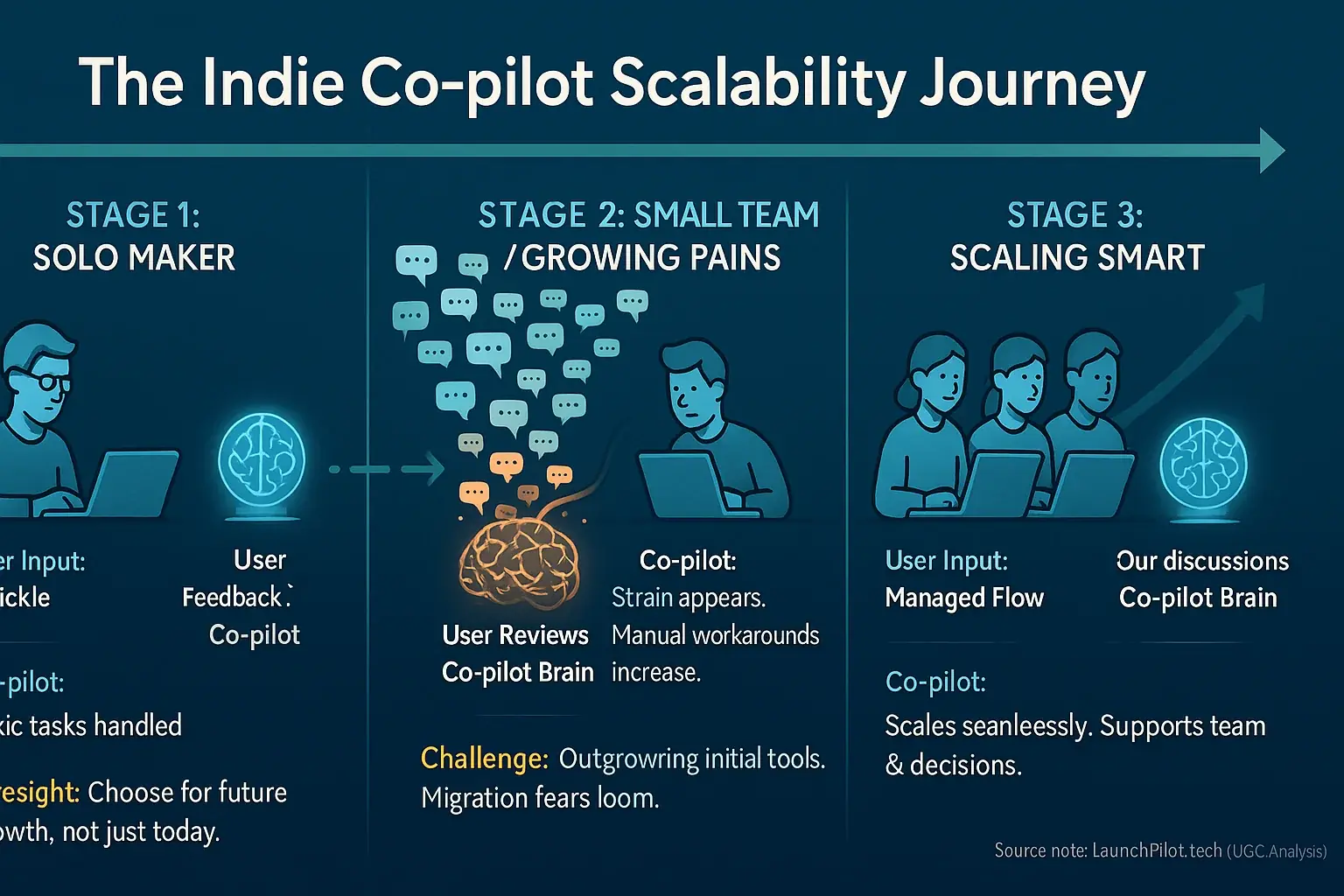

"Scalability" sounds big. Corporate even. Not for you, the indie maker, right? Wrong. Many makers discover this truth much too late. You launched your indie SaaS. Users trickle in. Your user feedback co-pilot handles it fine. But what happens when that trickle becomes a flood? Or when you onboard your first team member? For indies, true scalability means their chosen tool grows with them, not simply for them, avoiding that painful 'outgrown' stage. This foresight prevents costly, time-consuming migrations later.

This challenge hits solopreneurs and small teams especially hard. Big companies can throw dedicated IT departments at scaling roadblocks. You probably lack that resource. Indie makers need tools that adapt seamlessly. No massive re-investments. No complex, frustrating migrations. The unvarnished truth emerges from user experiences: our synthesis of many community discussions reveals which feedback co-pilots genuinely flex to meet real growth. Vendor marketing often paints a much simpler, rosier picture.

So, what does practical scalability look like for an indie setup? This article digs into that. Our analysis synthesizes real indie maker journeys and identifies common warning signs. The core focus: the real-world scalability of user feedback co-pilots, as judged by makers in the trenches. These are practical insights, not theoretical vendor promises. We examine how these tools actually perform as your project builds momentum.

Beyond the 'Speed' Claims: How AI Co-pilots Really Handle Increased Workload (UGC Insights on Performance Limits)

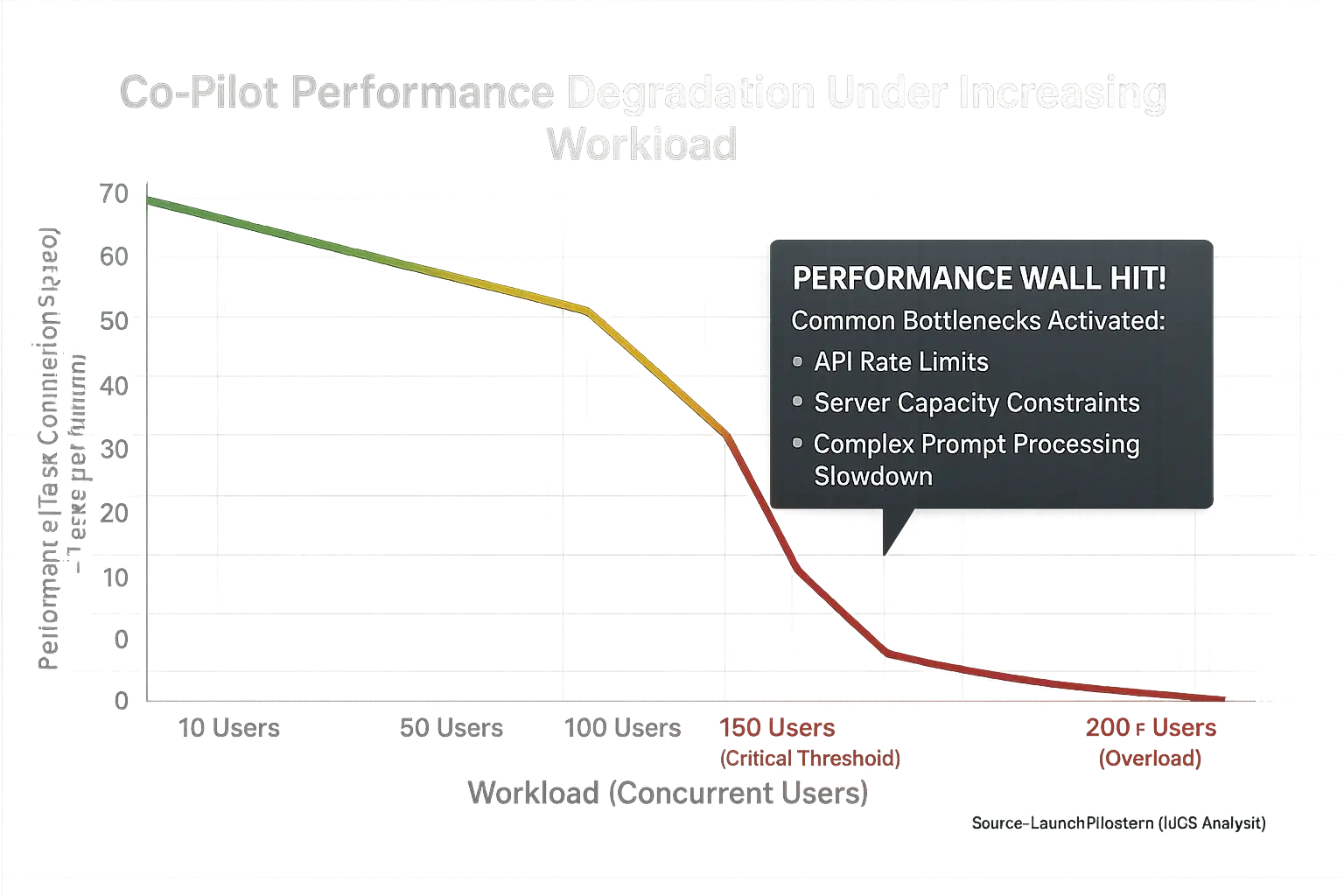

Many AI co-pilots advertise blazing speed, and that initial speed often impresses. Real indie project growth is what truly tests these claims. Scalability under increasing workload defines long-term value. Imagine: 50 new beta testers onboard. Your feedback synthesis tool starts lagging noticeably. Your content generator now takes ten minutes. Not ten seconds. This scenario is common, and our analysis of indie maker experiences confirms it as a recurring pain point.

Let's explore co-pilot performance limits. These are unspoken truths for indie creators. Our UGC investigation reveals a consistent pattern. Many tools manage small data volumes well. Sudden demand spikes cause many to hit a performance wall. Common bottlenecks emerge from widespread user feedback. API rate limits frequently throttle operations. Cloud-based tools can face server capacity constraints. Complex prompts also slow processing for certain co-pilots. These issues often stay hidden. They typically surface during a critical launch phase.
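When a co-pilot's API throttles you, the usual client-side response is to retry with exponential backoff and random jitter instead of hammering the endpoint. The sketch below assumes a hypothetical `RateLimitError` that your co-pilot's client raises on an HTTP 429 response; real SDKs signal throttling in their own ways, so check your vendor's documentation and any retry headers it returns.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical error a co-pilot client might raise when throttled (HTTP 429)."""


def call_with_backoff(call, max_retries=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Run `call()`, retrying with exponential backoff and full jitter on RateLimitError."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            # Delay ceiling doubles each attempt (1s, 2s, 4s, ...) up to max_delay;
            # sleeping a random fraction of it keeps parallel workers out of lockstep.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
```

In practice you would wrap each co-pilot request, for example `call_with_backoff(lambda: send_batch(items))`, where `send_batch` is a hypothetical function that calls your tool.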

What can indie makers do? Users in community discussions share practical strategies. Batching tasks reduces the number of API calls. Tighter, more efficient prompts reduce the co-pilot's processing load. Some experienced makers upgrade service tiers ahead of anticipated demand surges. Understanding these performance ceilings before a critical launch is key. This foresight helps prevent failures at the worst possible moment and supports smoother project scaling.
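Batching means packing several feedback items into one request instead of sending one call per item. A minimal sketch, assuming your co-pilot accepts free-form prompts and that 20 short comments fit comfortably within its context window; the prompt wording and batch size are illustrative, not vendor recommendations.

```python
from typing import Iterable, Iterator, List


def batch(items: Iterable[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive lists of at most `size` items."""
    current: List[str] = []
    for item in items:
        current.append(item)
        if len(current) == size:
            yield current
            current = []
    if current:
        yield current  # final, partially filled batch


def build_prompt(feedback: List[str]) -> str:
    """Pack several feedback items into one numbered prompt (hypothetical wording)."""
    lines = [f"{i}. {text}" for i, text in enumerate(feedback, start=1)]
    return "Summarize the main themes in this user feedback:\n" + "\n".join(lines)


feedback = [f"Beta tester comment {n}" for n in range(1, 51)]
prompts = [build_prompt(chunk) for chunk in batch(feedback, size=20)]
print(len(prompts))  # 3 requests instead of 50
```

Larger batches cut call counts further but make each response longer and more likely to be truncated, so tune the size against your tool's input limits.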

Co-pilot architectures vary. Some designs inherently scale better. For instance, serverless models often manage demand bursts more gracefully. Older, monolithic systems might present more scaling challenges. User-reported experiences frequently highlight these architectural impacts. The crucial takeaway for indies is clear. Test your chosen co-pilot under simulated peak load conditions. This preparation is invaluable.
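A rough way to rehearse a launch spike is to fire the same burst of requests at different concurrency levels and watch how latency moves. This sketch uses Python's `asyncio`; `fake_copilot_call` is a hypothetical stand-in you would replace with your real client (ideally pointed at a test account, since load tests consume quota).

```python
import asyncio
import statistics
import time


async def fake_copilot_call(text: str) -> str:
    """Hypothetical stand-in for your co-pilot client; swap in a real API call."""
    await asyncio.sleep(0.01)  # simulated network and processing time
    return text.upper()


async def timed_call(text: str) -> float:
    """Return how long one call took, in seconds."""
    start = time.perf_counter()
    await fake_copilot_call(text)
    return time.perf_counter() - start


async def load_test(requests: int, concurrency: int) -> dict:
    """Send `requests` calls with at most `concurrency` in flight; report latency stats."""
    limit = asyncio.Semaphore(concurrency)

    async def worker(i: int) -> float:
        async with limit:
            return await timed_call(f"feedback item {i}")

    latencies = sorted(await asyncio.gather(*(worker(i) for i in range(requests))))
    return {
        "requests": requests,
        "median_s": statistics.median(latencies),
        "p95_s": latencies[int(0.95 * (len(latencies) - 1))],
    }


if __name__ == "__main__":
    for concurrency in (1, 10, 50):  # a quiet day versus a launch spike
        print(concurrency, asyncio.run(load_test(requests=100, concurrency=concurrency)))
```

With the stub, latency stays flat by construction. Against a real service, a median or p95 that climbs sharply as concurrency rises is the performance wall described above.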

From Solo Flight to Small Crew: Do AI Co-pilots Support Team Collaboration? (What Indie Teams Really Need & Find)

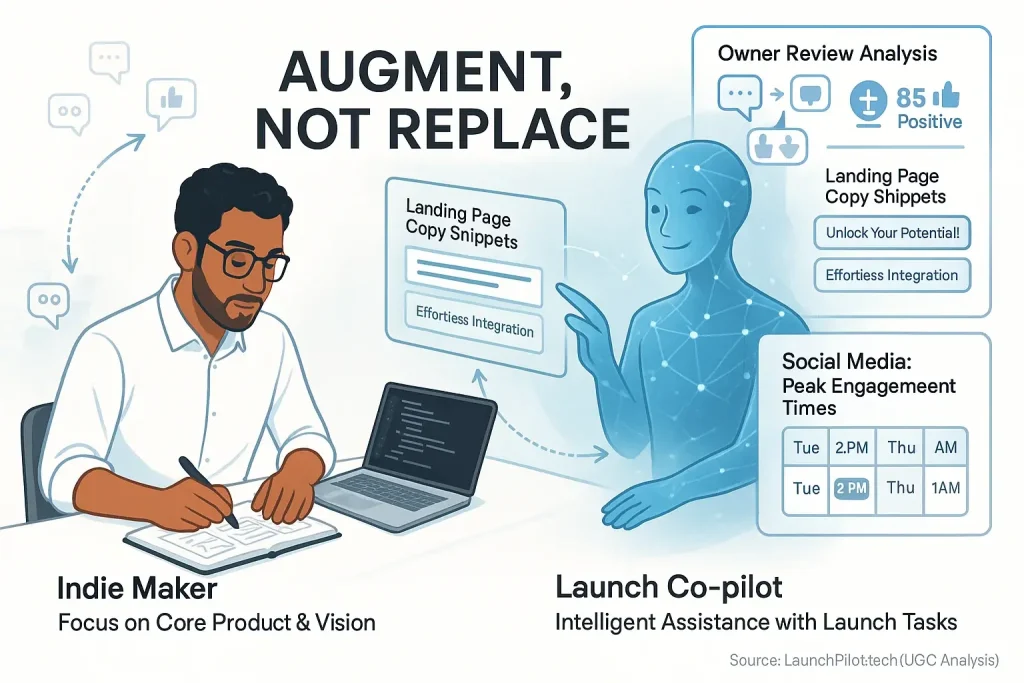

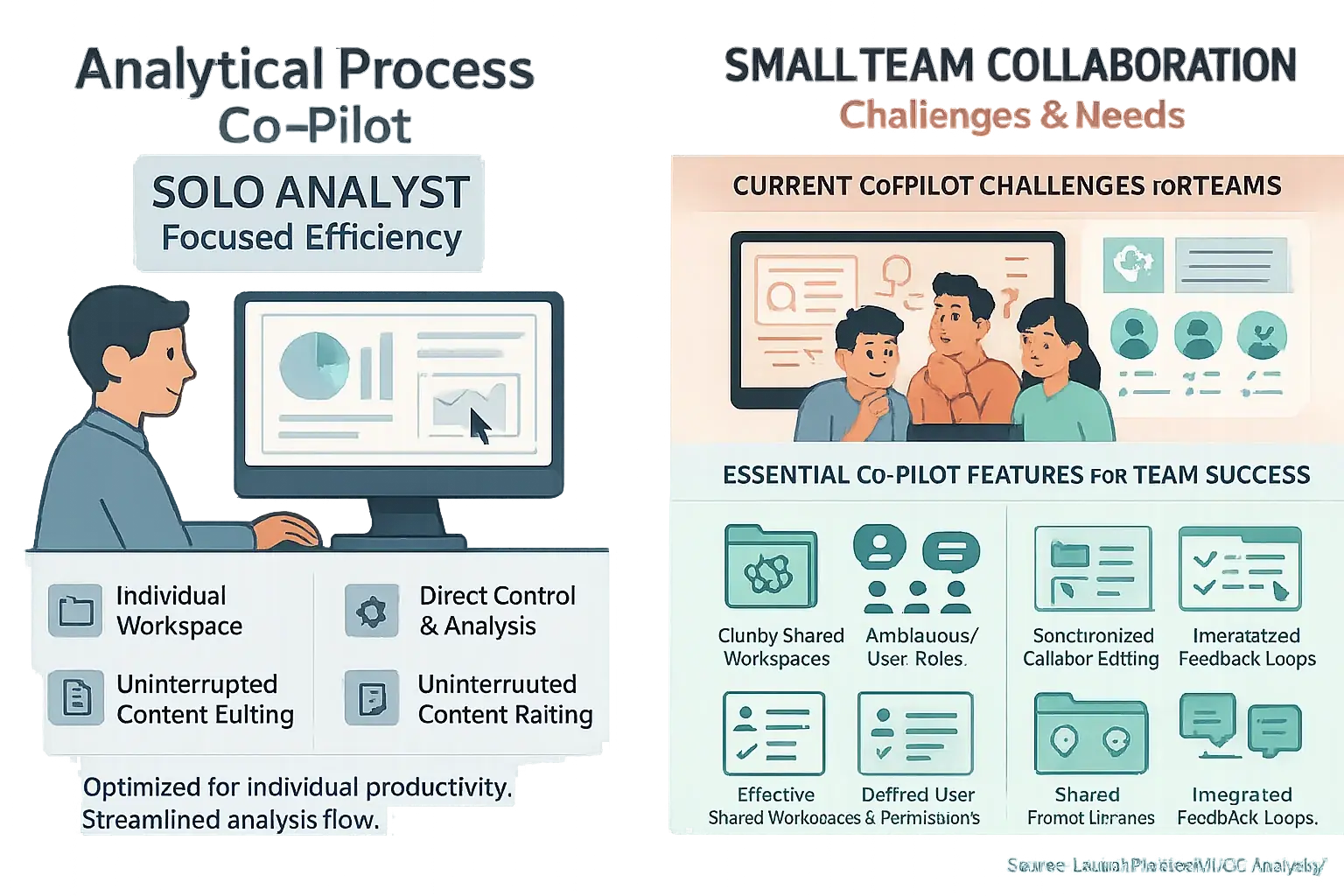

Your indie project grows. You bring on help. Will your feedback co-pilot adapt? Many feedback analysis tools serve solo flyers best, and team features often feel like an afterthought. This shift is a real test of a co-pilot's usability for expanding teams.

What about shared work? Users report real struggles. Shared workspaces can be clunky. Granular user roles? Often missing. Simultaneous content editing creates chaos. Imagine two makers refining insights. They use a tool built for one. Drafts get overwritten. Ideas vanish. This scenario, echoed in user-generated content, causes real headaches for small teams.
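Overwritten drafts are the classic "lost update" problem. Tools built for teams usually guard against it with some form of version checking, often called optimistic concurrency. The sketch below illustrates that technique with a hypothetical in-memory `DraftStore`; it is not how any particular co-pilot implements collaboration.

```python
from dataclasses import dataclass
from typing import Dict


class ConflictError(Exception):
    """Raised when someone else saved the draft after you loaded it."""


@dataclass
class Draft:
    text: str
    version: int = 0


class DraftStore:
    """Hypothetical in-memory store: every save must name the version it was based on."""

    def __init__(self) -> None:
        self._drafts: Dict[str, Draft] = {}

    def load(self, draft_id: str) -> Draft:
        stored = self._drafts.setdefault(draft_id, Draft(text=""))
        return Draft(stored.text, stored.version)  # hand out a copy, not the stored object

    def save(self, draft_id: str, text: str, based_on_version: int) -> int:
        stored = self._drafts.setdefault(draft_id, Draft(text=""))
        if stored.version != based_on_version:
            raise ConflictError(f"draft is at v{stored.version}, you edited v{based_on_version}")
        stored.text = text
        stored.version += 1
        return stored.version


store = DraftStore()
ana, ben = store.load("insights"), store.load("insights")  # both start from v0
store.save("insights", "Ana's summary", ana.version)        # succeeds, draft is now v1
try:
    store.save("insights", "Ben's summary", ben.version)    # still based on stale v0
except ConflictError as err:
    print("Conflict:", err)
```

The second editor gets a conflict instead of silently erasing the first editor's work, and can reload and merge before saving again.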

So, what do indie teams truly need? Community members often highlight several key features for their user analysis tools:

- Shared prompt libraries for consistency.

- Clear user permissions to simplify workflows.

- Integrated feedback loops for generated content.
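If your co-pilot lacks the first two features, they are simple enough to approximate yourself. A minimal sketch of a shared prompt library with role-based permissions; the role names, the `PERMISSIONS` table, and the `PromptLibrary` class are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical roles and the actions each may perform on the shared library.
PERMISSIONS = {
    "owner": {"read", "write", "delete"},
    "editor": {"read", "write"},
    "viewer": {"read"},
}


@dataclass
class PromptLibrary:
    prompts: Dict[str, str] = field(default_factory=dict)  # prompt name -> template
    members: Dict[str, str] = field(default_factory=dict)  # user -> role

    def _require(self, user: str, action: str) -> None:
        role = self.members.get(user)
        if role is None or action not in PERMISSIONS[role]:
            raise PermissionError(f"{user} ({role}) may not {action}")

    def get(self, user: str, name: str) -> str:
        self._require(user, "read")
        return self.prompts[name]

    def put(self, user: str, name: str, template: str) -> None:
        self._require(user, "write")
        self.prompts[name] = template

    def delete(self, user: str, name: str) -> None:
        self._require(user, "delete")
        del self.prompts[name]


lib = PromptLibrary(members={"ana": "owner", "ben": "viewer"})
lib.put("ana", "weekly-summary", "Summarize this week's feedback by theme.")
print(lib.get("ben", "weekly-summary"))  # viewers can reuse the shared prompt
```

Everyone pulls the same template, which keeps outputs consistent, while only owners and editors can change it.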

These elements are crucial. Where tools fall short, some makers build custom dashboards. Others use clever tagging systems. These workarounds show real ingenuity from the indie community.
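A tagging workaround can be as simple as keyword rules applied to each piece of feedback, with results grouped for the team to review. The rules below are hypothetical examples, and plain substring matching is deliberately crude (for instance, "plan" also matches "explanation"), so treat this as a starting point rather than a real classifier.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical keyword rules; each team would maintain its own list.
TAG_RULES = {
    "pricing": ["price", "expensive", "plan"],
    "bug": ["crash", "error", "broken"],
    "onboarding": ["signup", "tutorial", "confusing"],
}


def tag(text: str) -> List[str]:
    """Return every tag whose keywords appear in the text, or ['untagged']."""
    lowered = text.lower()
    tags = [name for name, words in TAG_RULES.items() if any(w in lowered for w in words)]
    return tags or ["untagged"]


def group_by_tag(items: List[str]) -> Dict[str, List[str]]:
    """Group feedback items under each of their tags (an item can appear in several groups)."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for item in items:
        for t in tag(item):
            groups[t].append(item)
    return dict(groups)


print(group_by_tag(["App crashed on signup", "Too expensive", "Love it"]))
```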

Many teams bridge these gaps. They use external collaboration tools. Think Notion. Or Google Docs. They manage user analysis outputs there. This bypasses the co-pilot's limits. It’s an extra step. But it keeps projects moving forward.
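Moving outputs into Notion or a shared doc is easier when they are exported in a plain, portable format. This sketch renders grouped insights as Markdown, a format Notion's importer accepts; whether your other tools import it directly is worth checking. The function name and page layout are hypothetical.

```python
from datetime import date
from typing import Dict, List


def to_markdown(title: str, insights: Dict[str, List[str]], day: date) -> str:
    """Render insights grouped by theme as a Markdown page."""
    lines = [f"# {title} ({day.isoformat()})", ""]
    for theme, notes in insights.items():
        lines.append(f"## {theme}")
        lines.extend(f"- {note}" for note in notes)
        lines.append("")  # blank line between sections
    return "\n".join(lines)


page = to_markdown("Weekly feedback", {"bug": ["Crash on signup"]}, date(2024, 5, 6))
print(page)
```

Saving `page` to a `.md` file gives the team one artifact to paste or import, whatever co-pilot produced the underlying analysis.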

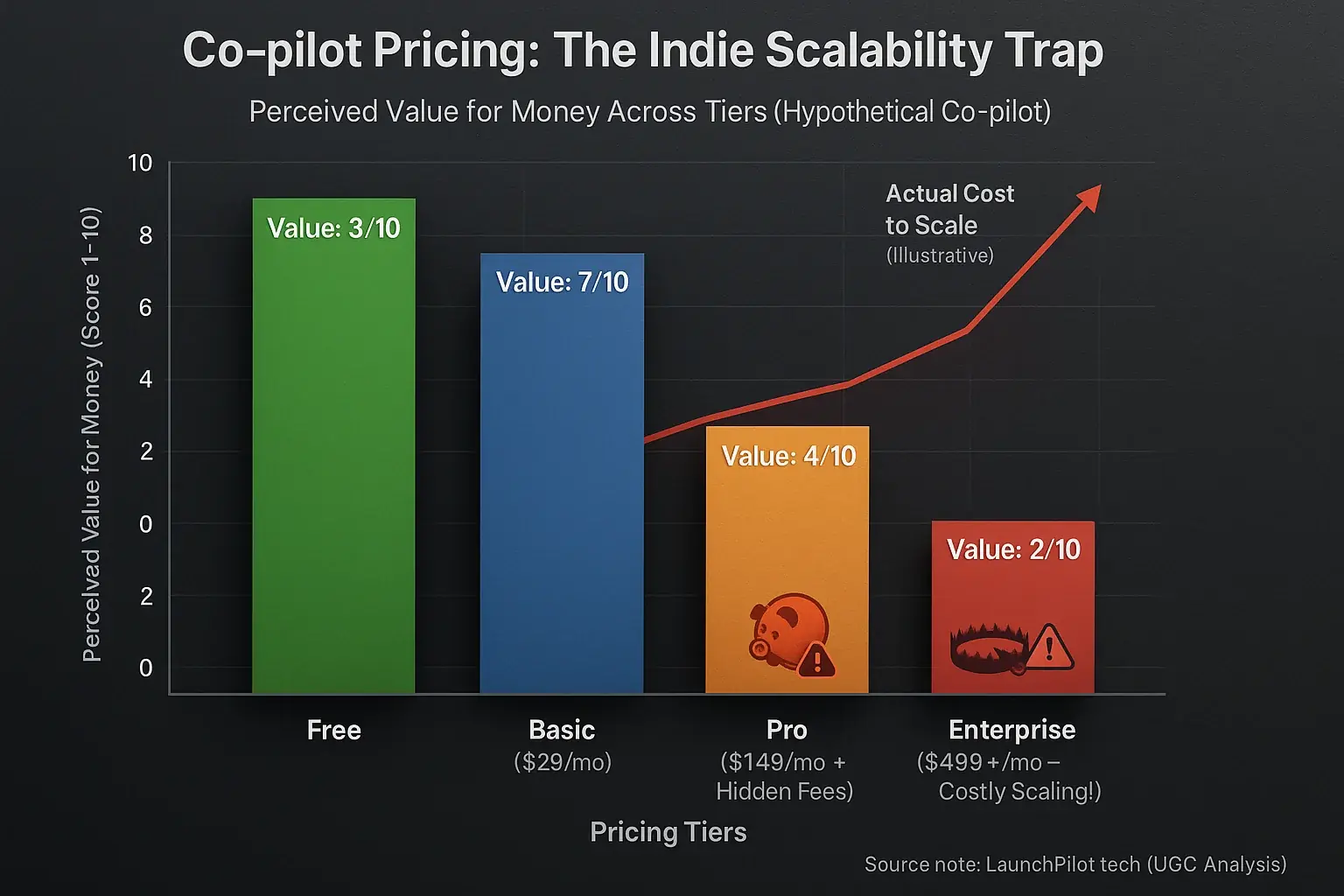

The Scalability Trap: AI Co-pilot Pricing Tiers & Hidden Costs as Your Indie Project Grows (UGC Warnings)

Affordable user analysis co-pilots attract many indie makers. Their low initial price seems ideal. A scalability trap, however, often exists within these pricing structures. That appealing monthly subscription looks great when you are working solo. What happens when your user base suddenly explodes, or you need to add two team members? Your 'cheap' user analysis co-pilot can unexpectedly become a significant expense. Our synthesis of indie maker feedback shows pricing models frequently conceal sharp cost increases. Usage-based fees then become prohibitive for growing projects.

Let's uncover the unspoken truths about co-pilot pricing, as reported by users. Indie makers frequently encounter unexpected overage charges. These often stem from API call limits or data processing thresholds. Premium feature unlocks can be disproportionately expensive. Team pricing is another common pain point. It often fails to scale linearly. This creates surprisingly high costs for expanding teams. One indie developer shared a common story: their 'unlimited' plan had a 'fair use' clause. A viral product launch triggered this clause, leading to a massive, unforeseen bill. User discussions highlight these hidden financial burdens.
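Non-linear team pricing is easiest to see with numbers. The price sheet below is entirely hypothetical (a $29 solo plan, a $99 team plan covering up to three seats, then $39 per extra seat); substitute your vendor's real figures.

```python
def monthly_seat_cost(seats: int) -> float:
    """Hypothetical price sheet: Solo covers 1 seat for $29; Team is $99 for up
    to 3 seats, plus $39 for each seat beyond 3."""
    if seats <= 1:
        return 29.0
    return 99.0 + 39.0 * max(0, seats - 3)


for seats in (1, 2, 3, 5):
    total = monthly_seat_cost(seats)
    print(f"{seats} seat(s): ${total:.2f}/month, ${total / seats:.2f} per seat")
```

In this made-up example, adding a single teammate takes the bill from $29 to $99, even though the per-seat cost later settles between $33 and $36.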

How can you spot these potential financial traps? Indie makers must scrutinize the fine print. Understand all usage limits. Question everything. Look for transparent, predictable pricing models. Projecting future usage is absolutely vital. Consider your potential team size growth too. These proactive steps help evaluate the true long-term cost, not just the attractive entry price. Patterns observed across extensive user discussions strongly advise this diligence before committing.
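Projecting usage forward against a plan's quota shows when a cheap tier stops being cheap. A sketch assuming a hypothetical plan with a flat fee, an included call quota, and a per-1,000-call overage charge, plus steady compound monthly growth; real growth is lumpier, so rerun it with a launch-spike month as well.

```python
def monthly_bill(calls: int, base_fee: float, included_calls: int, overage_per_1k: float) -> float:
    """Hypothetical usage plan: flat fee plus a charge per 1,000 calls beyond the quota."""
    extra = max(0, calls - included_calls)
    return base_fee + (extra / 1000) * overage_per_1k


def project(start_calls: int, monthly_growth: float, months: int, budget: float, **plan) -> list:
    """Compound call volume month by month; flag months whose bill exceeds the budget."""
    rows = []
    calls = float(start_calls)
    for month in range(1, months + 1):
        bill = monthly_bill(round(calls), **plan)
        rows.append((month, round(calls), round(bill, 2), bill > budget))
        calls *= 1 + monthly_growth
    return rows


plan = {"base_fee": 49.0, "included_calls": 10_000, "overage_per_1k": 5.0}
for row in project(start_calls=5_000, monthly_growth=0.4, months=6, budget=100.0, **plan):
    print(row)  # (month, calls, bill, over_budget)
```

With these made-up numbers the bill stays at $49 for three months, then overage pushes it past the $100 budget in month six.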

Some indie makers discover effective workarounds. They report stacking multiple cheaper, specialized analysis tools. This approach can avoid reliance on one expensive, all-encompassing co-pilot. Others strategically offload high-volume, repetitive analysis tasks to simpler systems. These strategies, frequently shared within the community, help maintain cost-effectiveness as a project scales. Careful planning is key.
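Offloading repetitive work can mean labeling predictable messages with cheap rules and reserving the co-pilot for everything else. A minimal sketch; the regex rules and labels are hypothetical, and `copilot` is any function you supply that calls your real tool.

```python
import re
from typing import Callable, List, Optional, Tuple

# Hypothetical rules for high-volume, repetitive messages.
SIMPLE_RULES = {
    r"\b(password|reset)\b": "account-access",
    r"\b(refund|cancel)\b": "billing",
    r"^\s*(thanks|thank you)\b": "thanks",
}


def simple_label(text: str) -> Optional[str]:
    """Return the first matching rule's label, or None if no rule applies."""
    for pattern, label in SIMPLE_RULES.items():
        if re.search(pattern, text, flags=re.IGNORECASE):
            return label
    return None


def route(messages: List[str], copilot: Callable[[str], str]) -> List[Tuple[str, str]]:
    """Label repetitive messages with rules; send only the rest to the paid co-pilot."""
    results = []
    for msg in messages:
        label = simple_label(msg)
        results.append((msg, label if label is not None else copilot(msg)))
    return results
```

Every message the rules catch is one fewer paid call, which matters most on exactly the high-volume, repetitive traffic that drives usage-based bills.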

The Data Diet: When Your AI Co-pilot Chokes on Too Much Information (Indie Maker's Guide to Data Volume Limits from UGC)

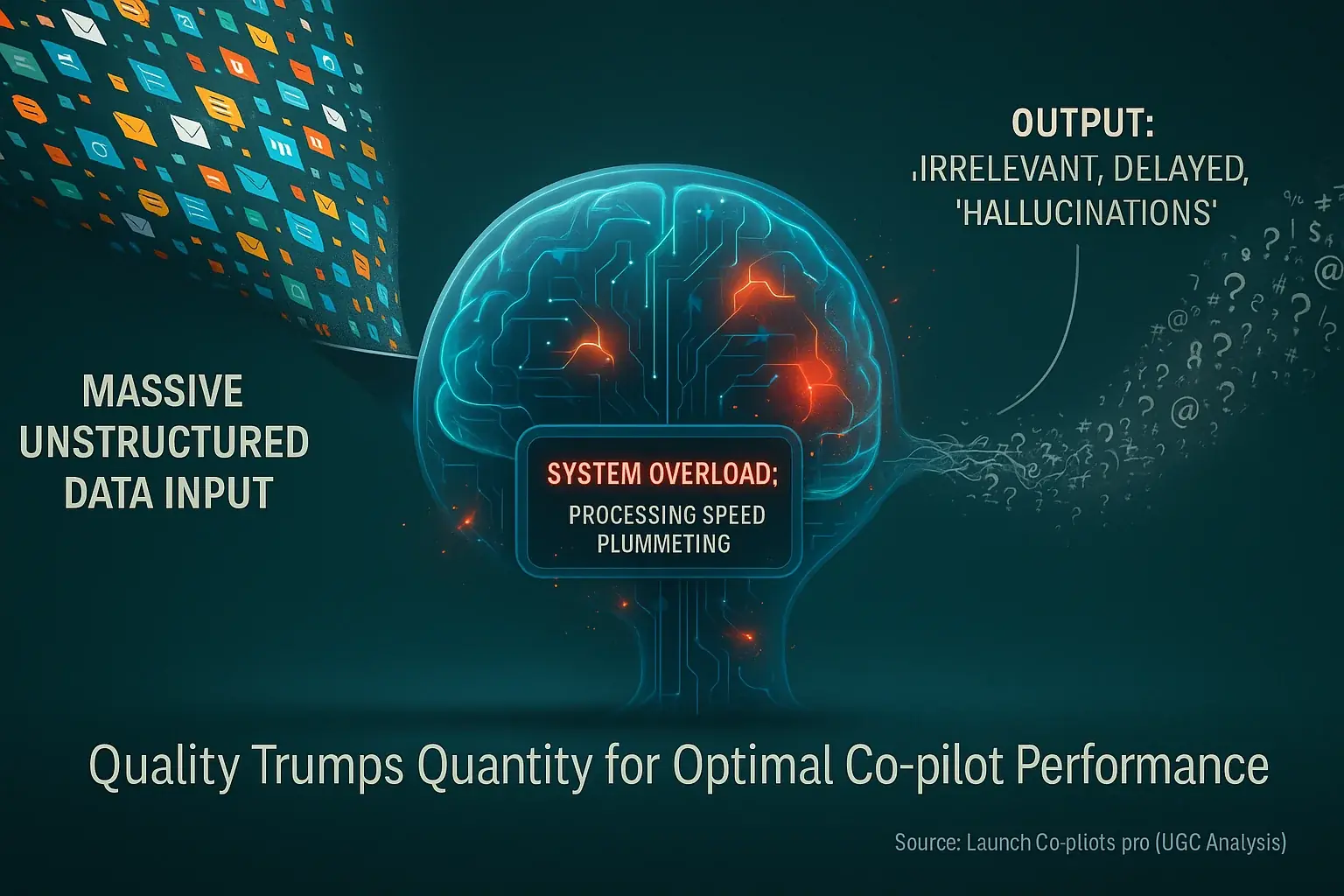

You meticulously fed your launch co-pilot absolutely everything. Every customer email. Every market report. You expected pure genius. Instead? Slow, generic, or irrelevant outputs. What gives? Our deep dive into indie maker experiences reveals a critical pattern: co-pilots can genuinely struggle with massive, unstructured data volumes. This challenge surfaces especially for niche indie projects that rely on these tools for vital analytical feedback.

Let's uncover some data volume realities. Many indie makers report that their user analysis co-pilots become less effective under data overload. Processing speeds plummet, and output relevance often suffers significantly, according to widespread community discussions. The problem intensifies when these co-pilots attempt to synthesize vast amounts of niche-specific information. The collective experience paints a clear picture. One indie developer shared how their review analysis tool, brilliant at summarizing short customer comments, completely 'hallucinated' product features when fed years of unstructured support tickets. It simply choked.

So, how do savvy indies manage this data deluge? User-generated content highlights several smart strategies. Curating high-quality input data is paramount; many emphasize this. Others segment their data meticulously for specific co-pilot tasks. Some indie makers even run specialized data preparation tools first, then feed the refined, focused information to their main feedback co-pilot. The key takeaway from countless reports? More data does not always mean better analytical feedback. Quality trumps quantity. Always.
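The curate-then-segment pattern fits in a few lines. This sketch assumes feedback arrives as hypothetical `(category, text)` pairs and uses character counts as a rough stand-in for the token limits your co-pilot actually enforces; the thresholds are illustrative.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def prepare(records: List[Tuple[str, str]], min_chars: int = 20,
            max_chars: int = 2000) -> Dict[str, List[List[str]]]:
    """Curate and segment (category, text) records into size-limited chunks.

    - normalizes whitespace and drops duplicates (case-insensitive)
    - drops fragments shorter than `min_chars` (e.g. "ok", "+1")
    - groups by category so each co-pilot task sees one focused segment
    - packs each segment into chunks of at most `max_chars` characters
    """
    seen = set()
    segments: Dict[str, List[str]] = defaultdict(list)
    for category, text in records:
        clean = " ".join(text.split())
        key = clean.lower()
        if len(clean) < min_chars or key in seen:
            continue
        seen.add(key)
        segments[category].append(clean)

    chunked: Dict[str, List[List[str]]] = {}
    for category, texts in segments.items():
        chunks, current, size = [], [], 0
        for t in texts:
            if current and size + len(t) > max_chars:
                chunks.append(current)  # chunk full: start a new one
                current, size = [], 0
            current.append(t)
            size += len(t)
        if current:
            chunks.append(current)
        chunked[category] = chunks
    return chunked
```

Each chunk then goes to the co-pilot as its own focused request. A single record longer than `max_chars` still lands in a chunk by itself, so very long support tickets may need summarizing or splitting first.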

The 'Outgrowing' Stories: When Indie Makers Hit the Ceiling with Their AI Co-pilot (UGC Lessons & What's Next)

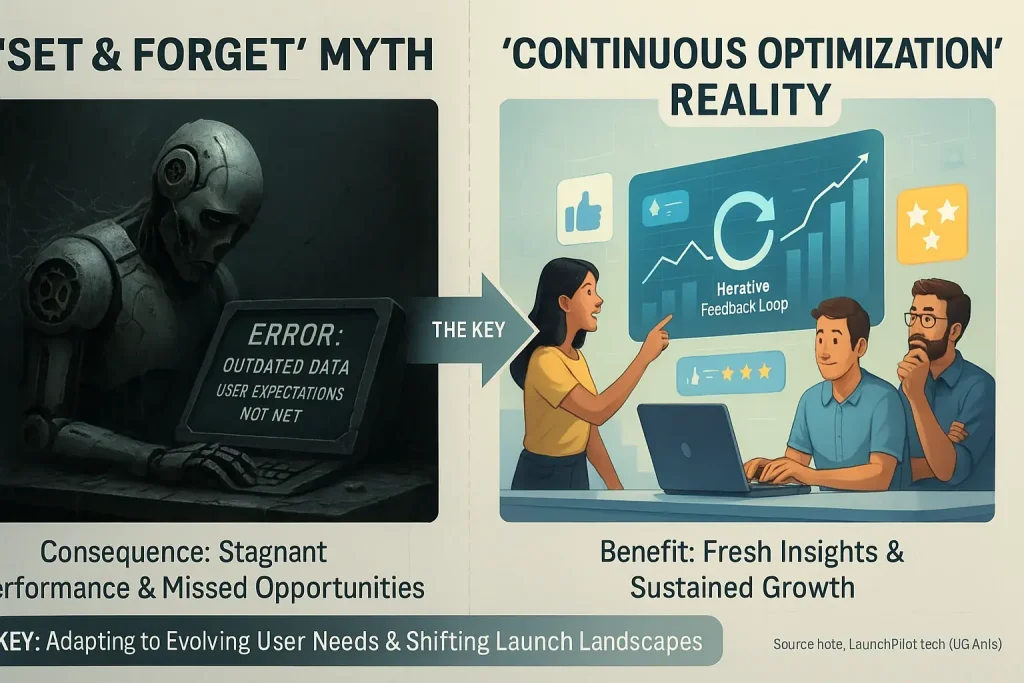

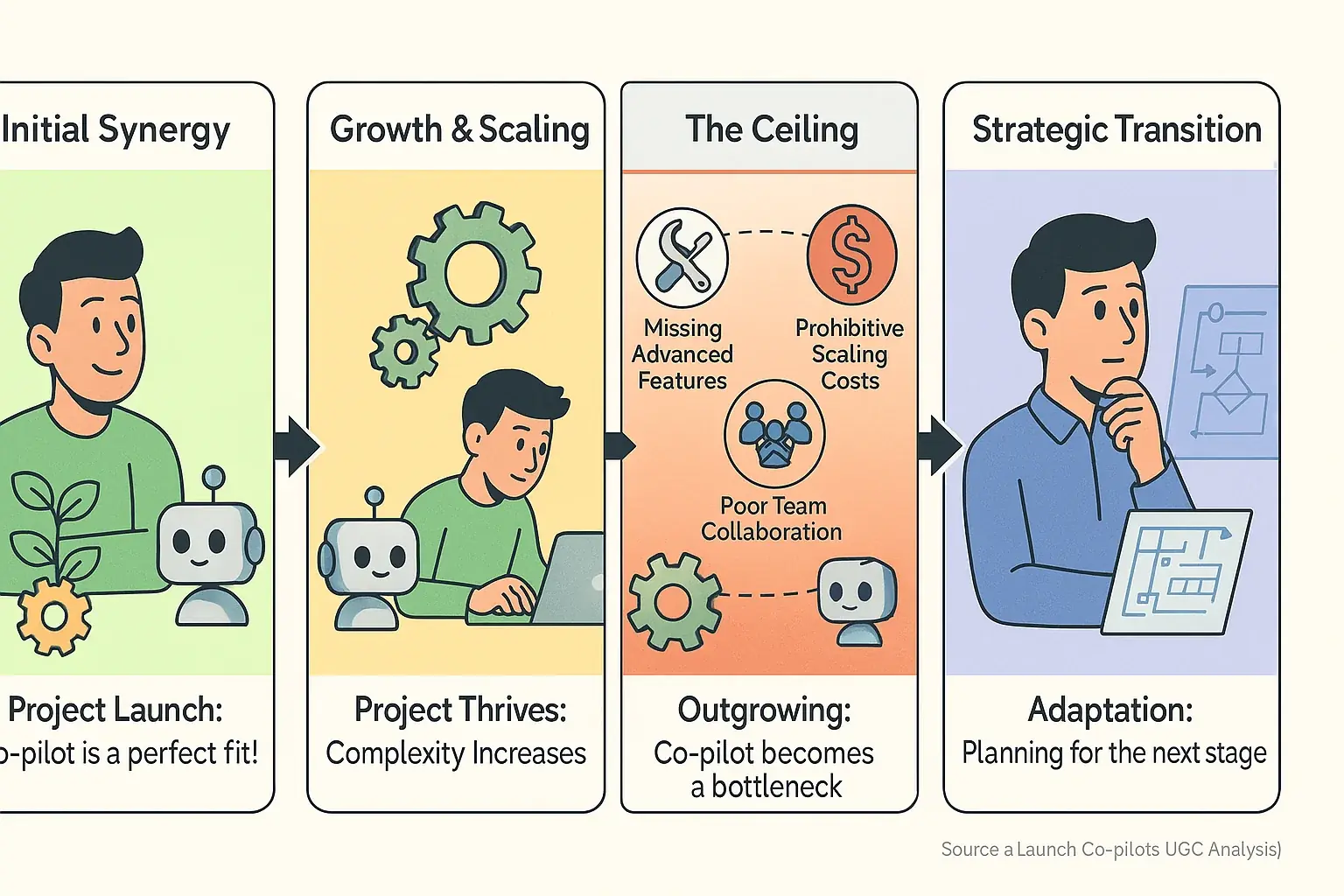

You've done it. Your indie project thrives. It scales beyond your wildest dreams. But suddenly, your once-perfect launch co-pilot feels small. Limiting. You are hitting a ceiling. This experience is frustrating. The indie launch community confirms this pattern. Outgrowing an initial co-pilot is a common, yet rarely discussed, part of the indie success story. It is a sign of real achievement.

Many indie makers share their "outgrowing" stories. Specific triggers emerge from collective user feedback. A lack of advanced features often becomes a barrier. Prohibitive pricing at scale forces difficult choices. Poor team collaboration tools hinder growing teams. Sometimes, a fundamental mismatch develops with evolving project needs. One common narrative involves a simple content scheduler. It was perfect for daily posts. It then failed under the weight of complex, multi-platform launch campaigns. This forced a costly, time-consuming migration. The community wisdom here is clear: anticipate these shifts.

So, how do you recognize these signs early? Indie makers recommend proactive "health checks" for your tech stack. Regularly evaluate your co-pilot's true cost. Assess its capabilities against your current needs. Crucially, project your future requirements. What will you need in six months? A year? Synthesized indie maker feedback underscores a vital point. Planning for this transition before it becomes a crisis is absolutely key. This foresight saves immense stress. It also protects your momentum.
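A health check can be a short script you run monthly against numbers from your billing page and analytics. The `MonthlySnapshot` fields and both thresholds below are hypothetical; pick limits that match your own margins and plan.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MonthlySnapshot:
    cost: float        # what you paid the co-pilot vendor this month
    active_users: int  # your product's active users
    calls_used: int    # API calls or credits consumed
    calls_limit: int   # your plan's quota
    seats_used: int
    seats_limit: int


def health_check(s: MonthlySnapshot, max_cost_per_user: float = 0.50,
                 headroom: float = 0.8) -> List[str]:
    """Return warnings when cost or usage approaches plan limits."""
    warnings = []
    if s.active_users and s.cost / s.active_users > max_cost_per_user:
        warnings.append(f"cost per active user is {s.cost / s.active_users:.2f}")
    if s.calls_used >= headroom * s.calls_limit:
        warnings.append(f"using {s.calls_used / s.calls_limit:.0%} of call quota")
    if s.seats_used >= s.seats_limit:
        warnings.append("no free seats left for a new teammate")
    return warnings


print(health_check(MonthlySnapshot(120.0, 200, 9_000, 10_000, 2, 3)))
```

An empty list means no threshold tripped this month; a growing list over several months is the early ceiling signal worth acting on.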

Smooth transitions to new tools are possible. User experiences highlight critical focus areas. Data migration often presents the biggest hurdle. Plan it meticulously. Learning curves for new systems also demand attention. Allocate time for your team to adapt effectively. Minimizing operational downtime during the switch is paramount for continued growth. The collective wisdom from the indie launch community shows preparation transforms this challenge. It becomes a strategic upgrade, not a desperate scramble.
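One way to de-risk data migration is to verify it mechanically: export from the old tool, import into the new one, then compare record by record. This sketch assumes both sides can be exported as lists of dictionaries sharing an `id` field; the field names are hypothetical. It fingerprints only the fields you choose, so metadata the new tool adds (such as import timestamps) does not count as a change.

```python
import hashlib
import json
from typing import Dict, Iterable, List


def fingerprint(record: Dict, fields: Iterable[str]) -> str:
    """Stable SHA-256 of the chosen fields; sort_keys makes key order irrelevant."""
    subset = {f: record.get(f) for f in fields}
    payload = json.dumps(subset, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def verify_migration(old: List[Dict], new: List[Dict], key: str = "id",
                     fields: Iterable[str] = ("id", "text")) -> Dict[str, List]:
    """Report records missing from, unexpectedly added to, or altered in the new tool."""
    fields = tuple(fields)  # materialize once so it can be reused for every record
    old_fp = {r[key]: fingerprint(r, fields) for r in old}
    new_fp = {r[key]: fingerprint(r, fields) for r in new}
    shared = old_fp.keys() & new_fp.keys()
    return {
        "missing": sorted(old_fp.keys() - new_fp.keys()),
        "unexpected": sorted(new_fp.keys() - old_fp.keys()),
        "changed": sorted(k for k in shared if old_fp[k] != new_fp[k]),
    }
```

Three empty lists mean the migrated data matches on the fields you care about; anything else gives you a concrete list to fix before switching tools for good.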

Your AI Co-pilot: A Partner for the Long Haul (Choosing for Sustainable Indie Growth)

Think of your chosen user analysis co-pilot as a growth partner. A long-term ally. Its true power for your indie project emerges through its ability to scale. Community experiences repeatedly stress flexible workload management. Future team collaboration features become essential. Sensible pricing models support your journey. Robust data handling avoids later headaches. This choice shapes your project's path for years.

So, the smart move? Approach this choice with clear foresight. Many successful indies share this wisdom. Evaluate potential user analysis co-pilots with your future growth firmly in view. Will it support more complex projects? Can it adapt as your team or data expands? LaunchPilot.tech exists to arm you with community-tested knowledge for these important, forward-looking decisions. Your sustainable success is the goal.