Can AI Co-pilots Really Write Your Launch Copy? The Indie Maker's Burning Question

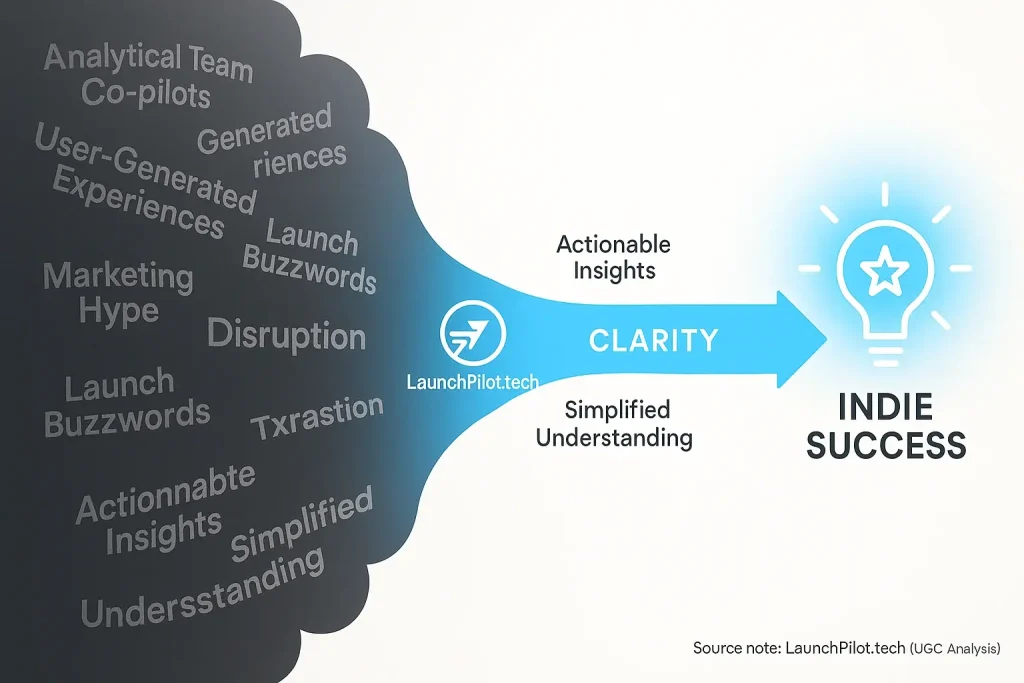

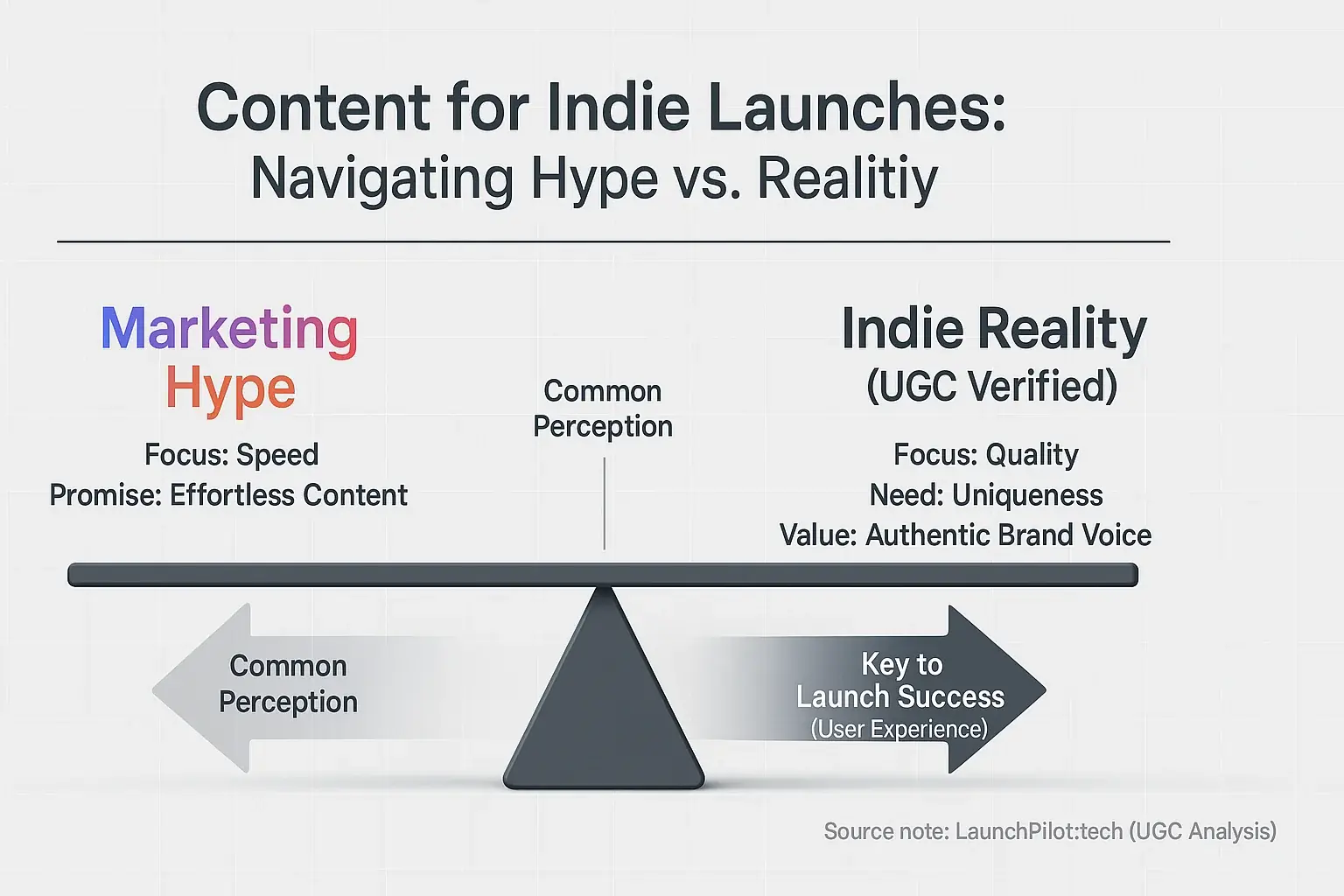

AI co-pilots promise instant launch copy, and indie makers are listening. The allure? Speed. Effortless content creation. Yet a critical question burns: is this generated copy good enough? Is it unique? AI delivers speed, certainly. But our synthesis of extensive user discussions shows that quality and uniqueness are the true arbiters of launch success for indie brands. That's the heart of the matter.

Marketing claims often paint a perfect picture. Too perfect? We think so. LaunchPilot.tech goes after the real story. Thousands of indie maker experiences form our evidence base, and these unfiltered accounts frequently reveal a reality far from polished sales pitches. This deep dive examines the actual quality of the content these tools produce, how unique it really is, and how well it fits your brand voice. We are cutting through the hype. Expect honest insights, drawn from patterns observed across extensive user discussions.

Originality & The Plagiarism Minefield: Is Your AI Copy Truly Unique (Or Just a Rehash)?

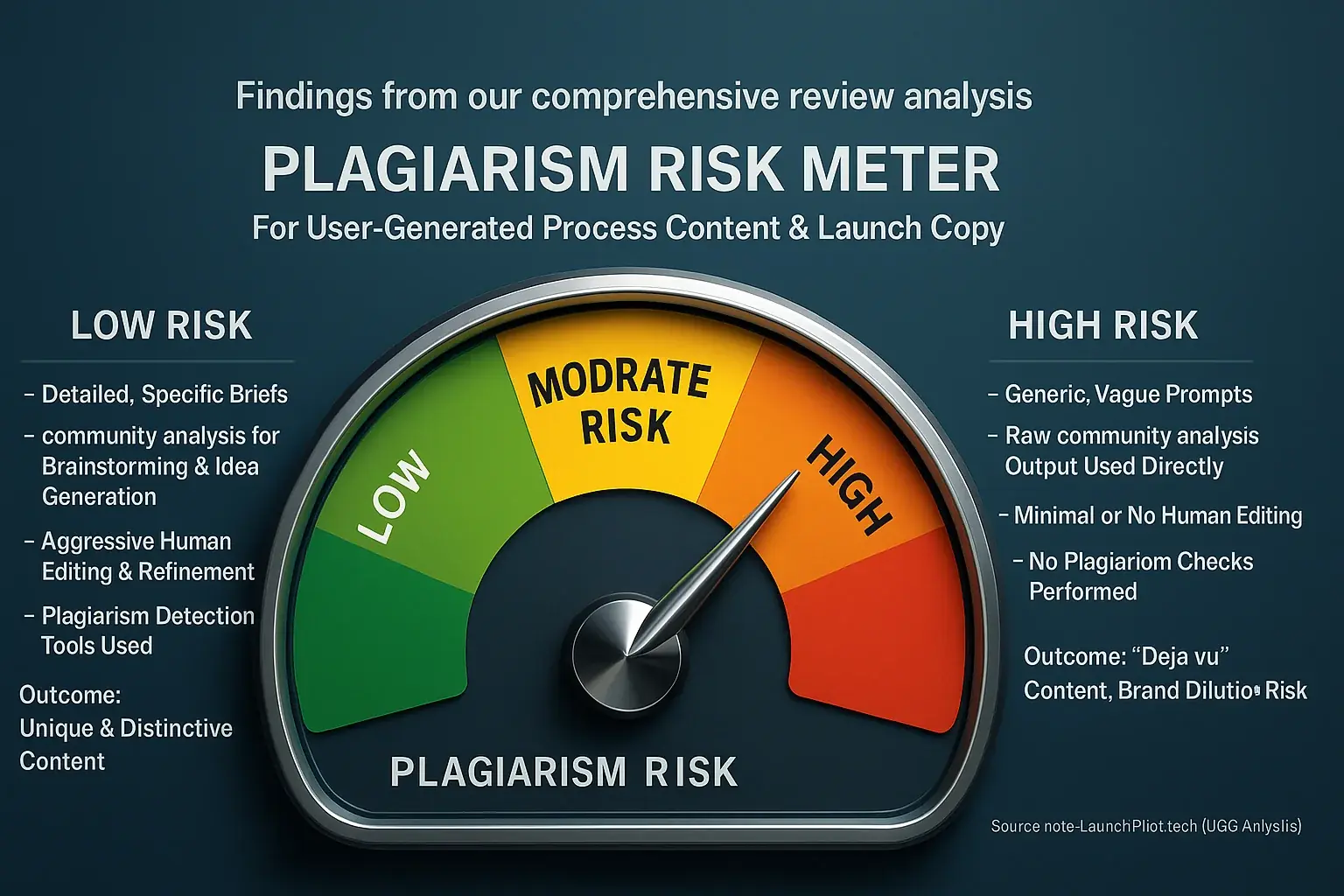

That brilliant headline your AI just delivered: is it truly original? Or did it simply stitch together phrases from elsewhere online? Content originality is a major concern for indie makers, and community discussions reveal deep anxiety about accidental plagiarism. The fear is valid. Maintaining a unique brand voice becomes exceptionally hard when the source material is a vast digital echo chamber.

The unspoken truth? AI models learn from enormous datasets, and that learning process itself introduces inherent risks to your launch copy. The generated content might not be direct, provable plagiarism. It can, however, sound strikingly similar to existing work, diluting your brand's uniqueness. Many indie creators report a distinct 'déjà vu' sensation when reading some AI outputs, finding phrases or entire structures that eerily echo popular content in their niche. One maker, as shared in community forums, discovered that their AI-assisted blog post triggered a high similarity score on a plagiarism checker, even after using very specific prompts.

So what are resource-constrained creators doing? Our investigation into user-generated content reveals practical steps. Aggressive human editing transforms generic AI suggestions into sharp, distinctive copy. Many savvy makers use AI primarily for brainstorming: they generate ideas but don't ship the raw output as final marketing material. Running key pieces of content through plagiarism detection tools is another common safeguard users report. Remember this: AI is a powerful assistant, not a shortcut. It does not remove the need for your own originality and due diligence to make sure your launch copy is truly yours and stays clear of the plagiarism minefield.

Brand Voice Consistency: Does Your AI Co-pilot Sound Like You (Or Just Another Robot)?

Your brand possesses a unique soul. It has a distinct voice. Can an AI co-pilot truly capture this essence? Or will your launch copy sound generic? Indie creators frequently ponder this. Brand voice defines your connection with customers. It builds trust. Replicating its genuine nuance is a real, and common, hurdle for AI content tools.

Initial experiences often involve frustration. The indie community widely reports co-pilot content that sounds bland, sometimes too formal, and simply not like them. One creator shared their story: their AI tool produced corporate-style emails, while their brand was quirky and playful. Extensive rewrites became necessary. Community experimentation offers a fascinating insight: the more specific your brand voice input, the better the AI's output. Some users fed their co-pilots personal tweets; others used examples from casual, authentic conversations. These unconventional inputs often yielded superior results, while generic prompts like 'friendly tone' frequently fall short.

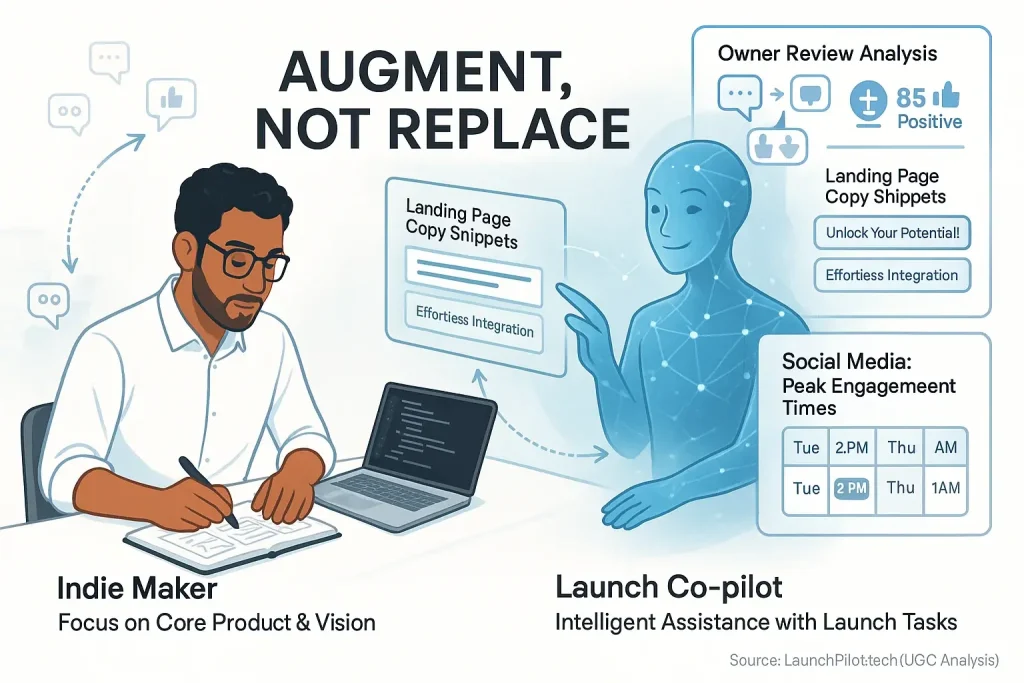

So, how can you train your launch co-pilot effectively? The collective wisdom from indie launches offers clear paths. Provide extensive examples of your desired voice: your best blog posts, your most compelling social media updates, the emails that truly resonated. Run regular content audits so human oversight catches inconsistencies, and give iterative feedback to refine the AI's understanding. Think of your co-pilot as an amplifier. It augments your unique voice; it does not replace your creative core. This approach preserves authenticity while scaling your message.

Does AI Copy Actually Convert? User-Reported Effectiveness & The Human Touch Factor

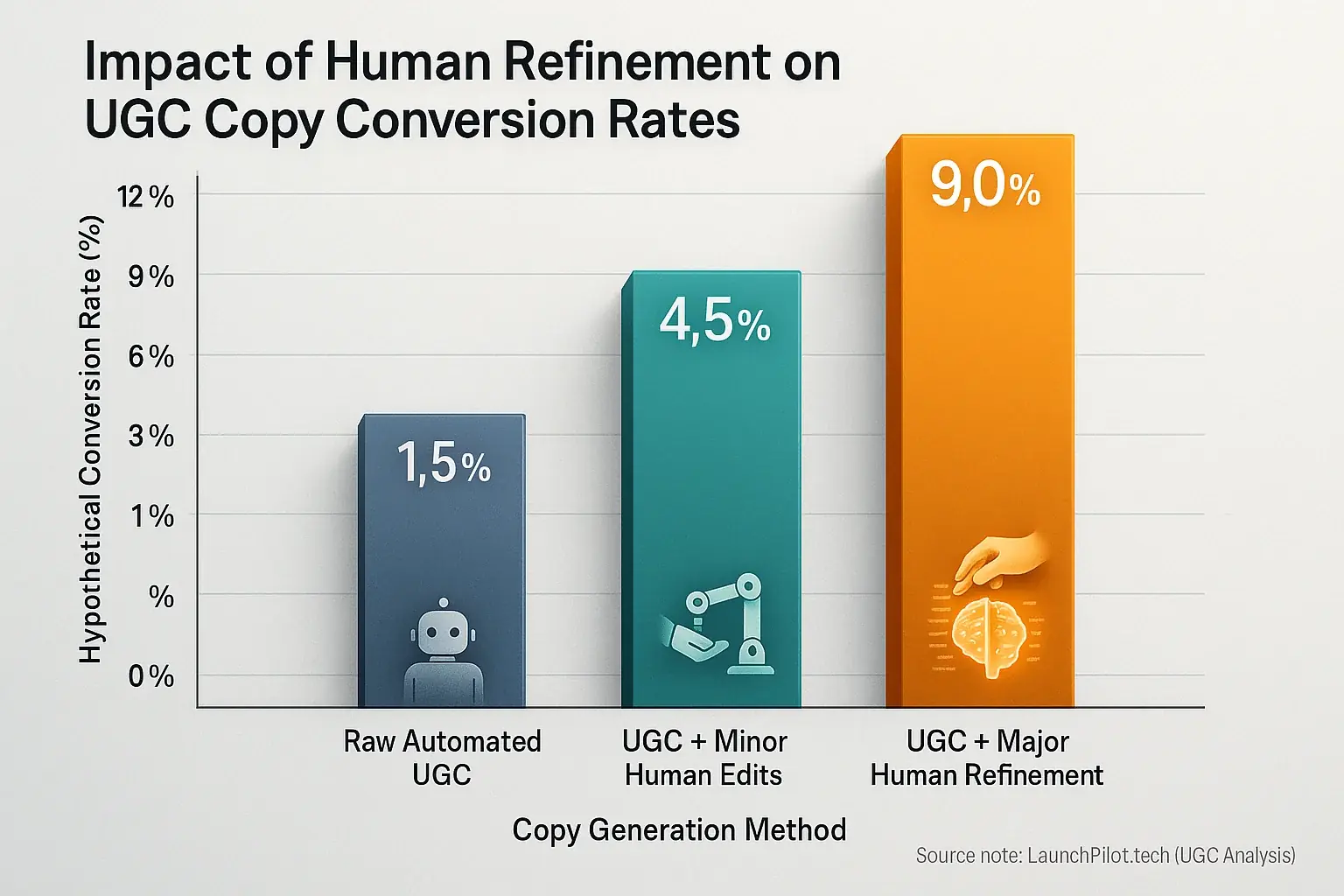

Does AI-generated copy actually convert? For indie makers, this is the fundamental question, because conversion performance defines success. The role of AI copy in driving sales remains a frequent discussion point within the community.

User experiences reveal a mixed landscape. Some indie creators report acceptable initial conversion rates, often for AI-generated ad copy with simple, direct calls to action. However, many find such copy lacks deep emotional appeal and falls short on the nuanced persuasion that higher-value conversions require. Complex products are particularly challenging for purely automated copy, and makers commonly report a notable dip in results when landing page copy was fully automated without human oversight. A key insight from community discussions: AI excels at creating A/B test variations. Smart indies use this by generating multiple headlines or calls to action, then letting real user behavior determine the winning version. It is a practical, powerful strategy.
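As a rough illustration of letting real user behavior pick the winner, here is a minimal Python sketch of a two-proportion z-test comparing two headline variants. The function name `two_proportion_z_test` and the visitor and conversion counts are hypothetical, and many A/B testing tools already run a comparable check for you; treat this as a sanity-check sketch, not a complete testing methodology.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare the conversion rates of variant A and variant B.

    Returns (z, p_value), where p_value is two-sided.
    Hypothetical helper for illustration, not part of any specific tool.
    """
    if min(n_a, n_b) <= 0:
        raise ValueError("each variant needs at least one visitor")
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate assumes no real difference between variants (the null hypothesis)
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("conversion rates of 0% or 100% give no usable variance")
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: two AI-drafted headlines, 1,000 visitors each
z, p = two_proportion_z_test(conv_a=40, n_a=1000, conv_b=62, n_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A common convention treats p < 0.05 as reasonable evidence that the better-performing variant's lead is not just noise. Below that bar, it is usually wiser to keep the test running or treat the variants as tied, and checking results repeatedly before the test finishes can make a chance difference look real.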

The human touch consistently emerges as the critical factor in maximizing launch copy's conversion power. Indie makers refine AI suggestions, add personal anecdotes, sharpen emotional triggers, and carefully build in clarity and essential trust signals. Strategic A/B testing then validates these human-led optimizations, and this iterative process helps identify truly persuasive messaging. Effective copy persuades and connects; human oversight ensures it does both.

SEO Value of AI-Generated Content: Can It Rank? (Indie Maker Experiences & Google's Stance)

Indie makers often ask: will Google penalize my AI co-pilot content? Can it even rank? Google permits AI-generated content. Its primary focus remains quality: helpfulness to users is paramount, and strong E-E-A-T signals are essential. How the content was produced is secondary to these factors.

Many indie makers initially feared AI content would not rank. Community-reported experiences now paint a different picture: well-edited, genuinely helpful content from AI co-pilots can achieve good rankings. The human touch, however, is the critical differentiator. Google's guidelines consistently emphasize helpfulness, reliability, and demonstrable expertise, qualities that AI content alone often struggles to deliver without significant human input.

Our analysis of user-generated content shows that unedited, raw AI outputs frequently struggle for search visibility. Conversely, the same reports indicate that AI used as a co-pilot, with thorough human refinement, often contributes to ranking success. A critical insight from the community: many find AI tools excellent for quickly covering topical breadth, which helps indies establish initial topical authority efficiently. But for real depth and unique insights (the true drivers of E-E-A-T), human expertise remains irreplaceable. Think of AI tools as your diligent research assistant, not your lead author.

So, how can you optimize AI co-pilot content for search engines? Our deep analysis of user-generated content points to several vital actions. Fact-check meticulously, with no shortcuts. Infuse your content with unique insights drawn from your direct experience or rich community discussions. Clearly demonstrate Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T). Always optimize for the user's true search intent: what problem are they trying to solve? Finally, rigorous human editing is non-negotiable. It ensures natural language, logical flow, and a consistent voice, transforming raw AI output into valuable, rank-worthy material for your audience.

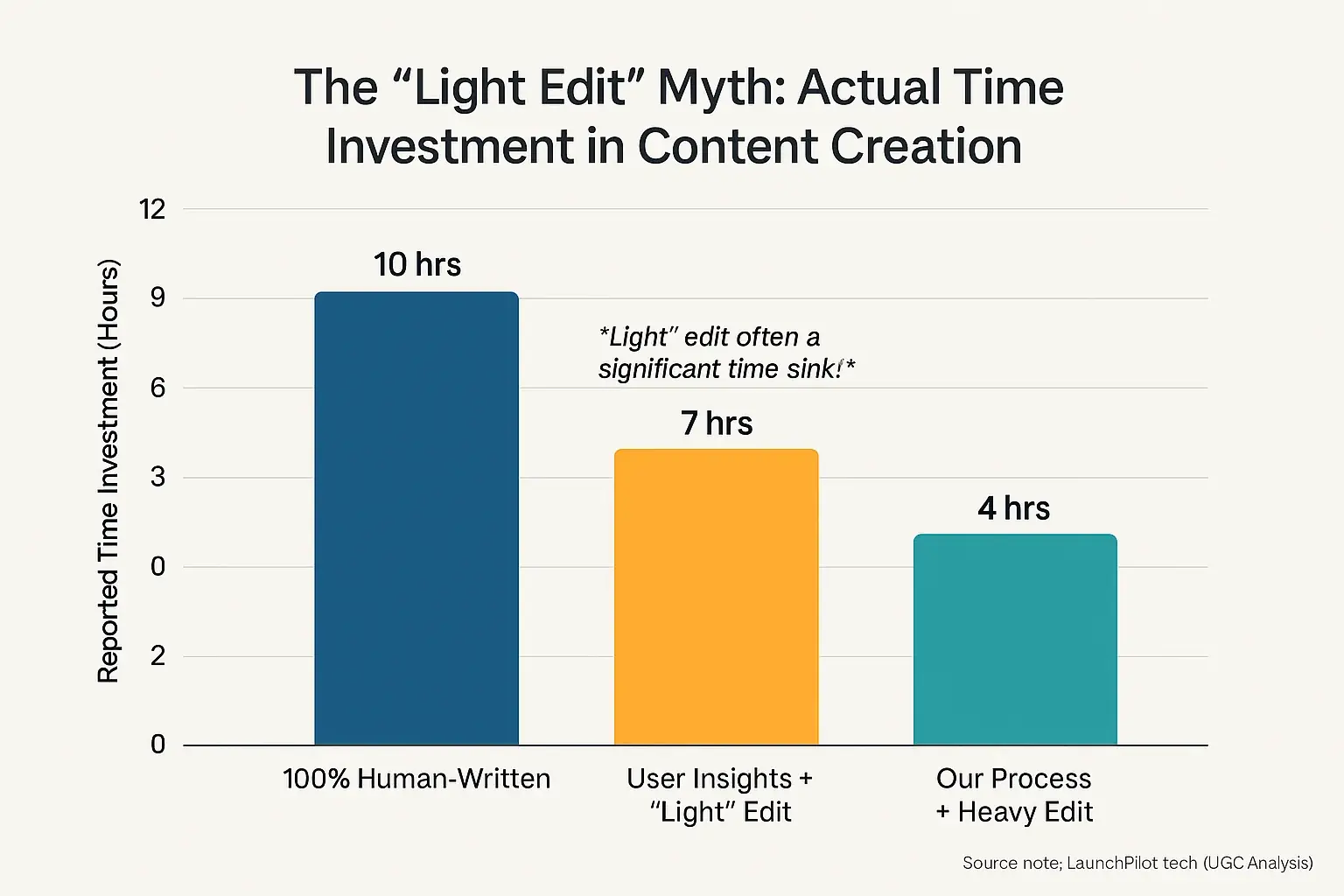

The Hidden Cost: How Much Human Editing Does AI-Generated Copy Really Need? (Indie Time-Sink Warnings from UGC)

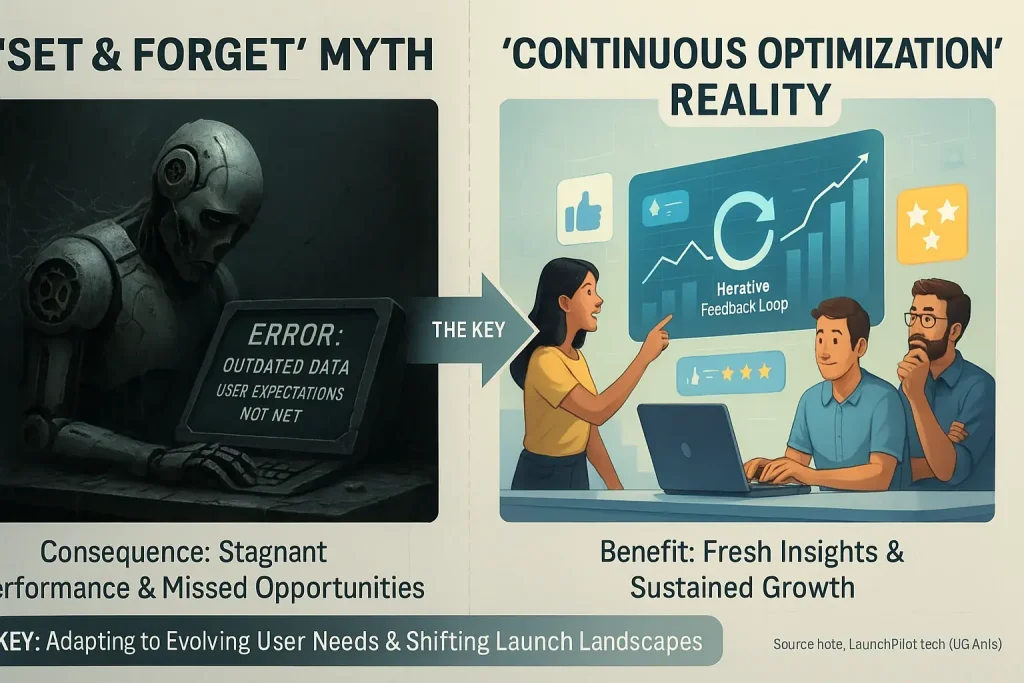

AI content tools promise big time savings. But how much time do you, an indie maker, actually spend editing their output? Community-reported experiences show the "set and forget" dream is often a myth. Quality content requires your human touch, and our deep analysis of user-generated content confirms that this step is critical.

Many indie makers initially underestimate this editing work. Synthesized indie maker feedback reveals significant hours spent refining AI-generated text, and these are not minor fixes. Creators describe checking every fact, adjusting the tone to fit their brand, adding their own unique ideas, and cutting generic AI 'fluff'. User forums echo a common story: a 'quick' AI-drafted post needed extensive humanizing, editing took almost as long as writing from scratch, and the promised time savings vanished. Our analysis of extensive user discussions reveals a crucial, unspoken truth: the quality of your input prompt directly controls your editing workload. Better prompts mean less fixing afterward, and that skill saves serious time for busy solopreneurs.

So, how can you manage this editing demand more effectively? Indie makers offer practical strategies. Many use these tools only for initial outlines or brainstorming sessions. Focus your limited editing time on the most important sections first. Batch similar editing tasks, such as tone adjustments or fact-checking, to improve efficiency. Community wisdom also suggests creating a clear editing checklist to prevent overlooked errors and ensure consistency. Ultimately, your human editing is what transforms raw AI output into truly valuable, on-brand content your audience will appreciate.


The Verdict: AI Content Generation for Indie Launches – A Powerful Co-pilot, Not a Solo Pilot

Our comprehensive synthesis of indie maker feedback reveals a clear consensus. AI content generation tools are powerful. They serve as an invaluable co-pilot for your launch, but they do not replace the human pilot. Many indie makers echo this sentiment. Originality requires your unique perspective. Your brand's voice demands your careful guidance. Ultimately, conversion effectiveness hinges on your strategic input and nuanced understanding.

So, what does this mean for your next launch? Successful indie makers master true human-AI collaboration. They don't just delegate tasks to a tool; they actively partner with it. That synergy unlocks the genuine potential of AI content tools, producing higher-quality, more impactful launch materials. The patterns observed across extensive user discussions strongly suggest that the future of indie launches lies in this deep, intelligent partnership, where human creativity and AI efficiency combine to drive even greater success.