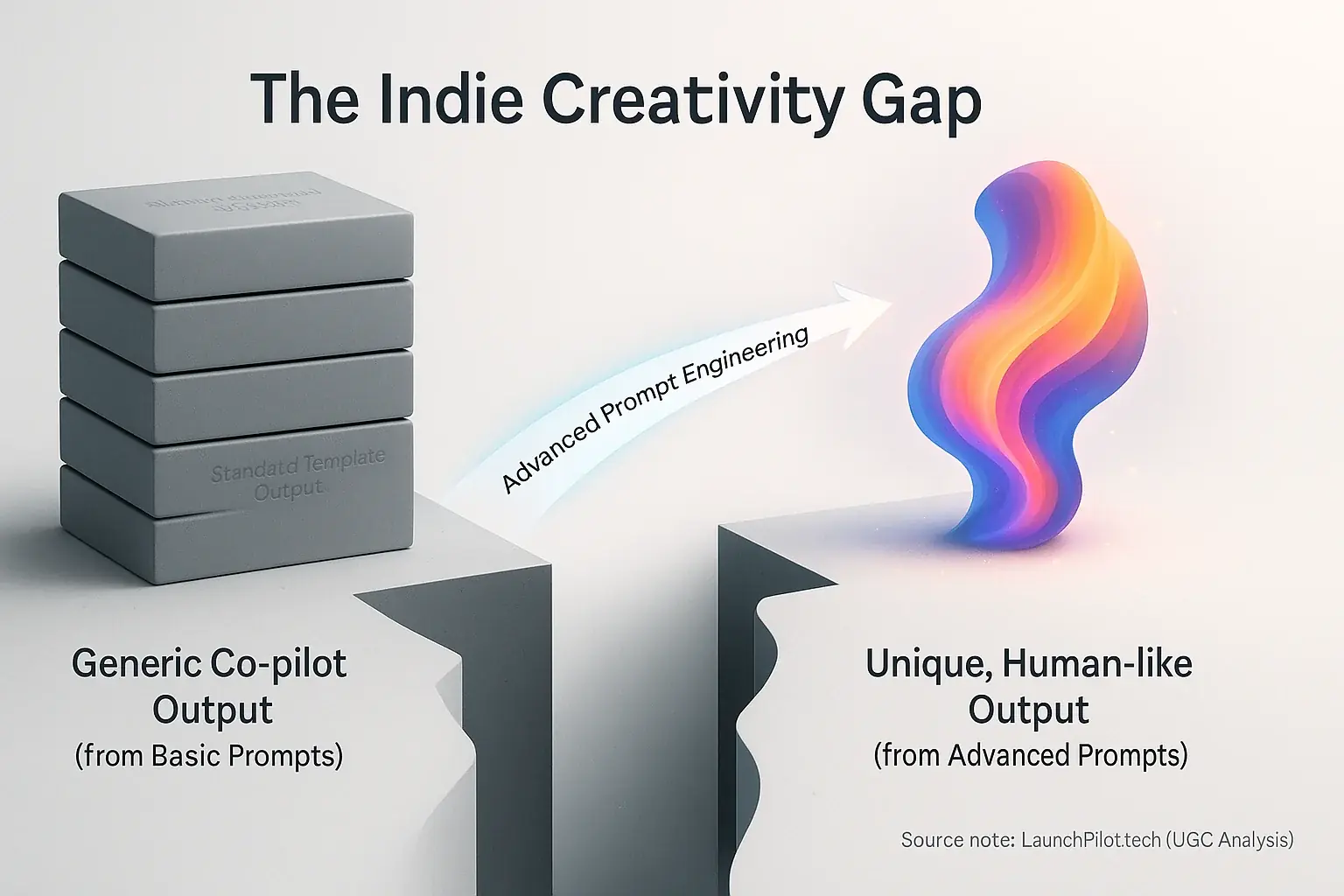

Why Your AI Co-pilot Needs More Than Basic Prompts (The Indie Creativity Gap)

Ever feel your AI co-pilot's output sounds… well, a bit too 'AI-generated'? Many indie makers share this frustration, according to our analysis of user-generated content (UGC). Basic prompts tend to produce bland, predictable copy. Generic copy like that struggles to capture attention in today's crowded markets.

This common issue highlights the 'Indie Creativity Gap.' Your unique vision deserves unique expression. Yet many user reports show solopreneurs getting generic results from their AI tools. The surprising part? The AI often isn't the core problem. The prompts are. True content uniqueness, as community experiences suggest, flows from how skillfully you guide your co-pilot.

Advanced prompt engineering is the key. It unlocks creative range your co-pilot already has, and that helps your indie launch stand out from competitors. Our synthesis of maker feedback points to one vital insight: this isn't about complex coding. It's about smarter, more strategic communication with your AI tool.

Persona-Based Prompting: Talk to Your AI Like a Real Person (UGC-Tested Method)

Persona-based prompting changes AI output in a big way. You tell the AI who to be, and that simple instruction strongly shapes what it generates. Indie makers frequently describe the same 'aha!' moment in our deep dive into community feedback: their content shifts from bland to brilliant once they give their AI tool a distinct, relatable character. The results? Often quite remarkable.

Effective AI personas require four specific inputs. First, define the AI's role: who is it? Next, specify its target audience precisely: who is it talking to? Then, outline the exact tone. Crucially, set a clear content goal. For instance, a persona could be: "You are a seasoned SaaS founder. You advise bootstrappers. Your tone is blunt, practical, yet hopeful. The goal: a short, actionable Twitter thread."
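If you write personas often, a small template keeps those four inputs explicit. Here is a minimal Python sketch, assuming you paste the resulting text into whatever AI tool you use; the `Persona` class and its field names are our own illustration, not part of any product's API. It reproduces the SaaS-founder persona above.

```python
from dataclasses import dataclass


@dataclass
class Persona:
    """The four persona inputs: role, audience, tone, and content goal."""
    role: str      # who the AI should be
    audience: str  # who it is talking to
    tone: str      # how it should sound
    goal: str      # what it should produce

    def to_prompt(self) -> str:
        # One short, explicit sentence per input.
        return (
            f"You are {self.role}. "
            f"You advise {self.audience}. "
            f"Your tone is {self.tone}. "
            f"The goal: {self.goal}."
        )


founder = Persona(
    role="a seasoned SaaS founder",
    audience="bootstrappers",
    tone="blunt, practical, yet hopeful",
    goal="a short, actionable Twitter thread",
)
print(founder.to_prompt())
```

The "You advise" phrasing assumes an advisory persona; for other roles you would swap that verb.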

Many makers think they already use personas. Yet community discussions reveal that their persona definitions often lack depth, and vague personas simply fail. User feedback consistently underscores this point: vivid, imaginative details get far more out of the AI. Creators report dramatic improvements when they add specifics like, "Imagine you are explaining this to a tired parent at 2 AM." That level of detail makes a huge difference.

Initial AI outputs might miss the mark. Don't panic; this is perfectly normal. Experienced makers often tweak the persona itself rather than just rephrasing the original content request. This iterative refinement sharpens the AI's focus considerably and delivers better, more targeted results over time. Test. Refine. Win.

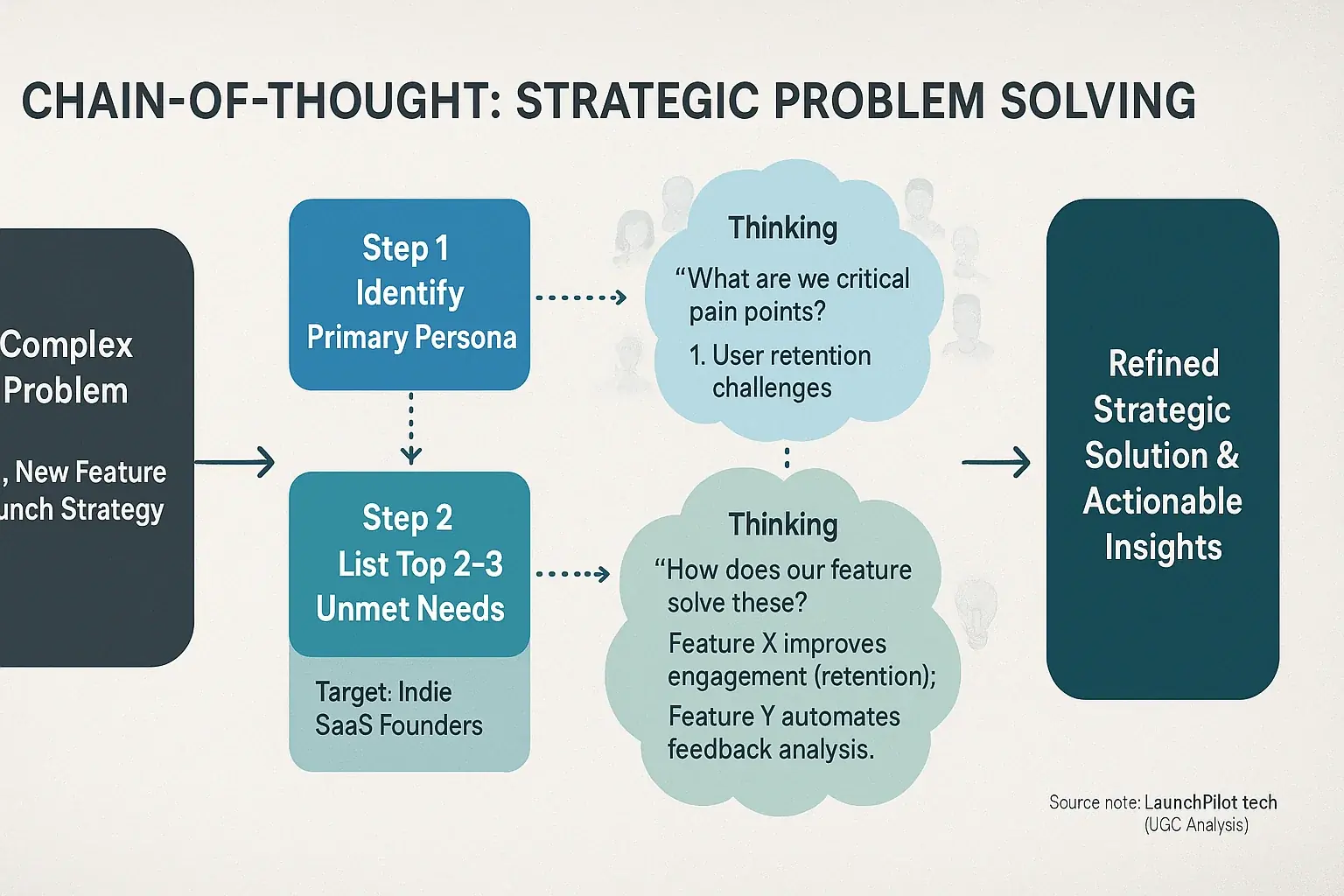

Chain-of-Thought Prompting: Make Your AI Think Like a Strategist (Unlocking Deeper Insights)

Chain-of-Thought (CoT) prompting gets your AI to "think" by encouraging step-by-step reasoning. Instead of merely asking for a final answer, you guide the AI through its logical process. Think of it like this: you're asking your launch co-pilot not just for the destination, but for the detailed flight plan. Our deep dive into indie maker discussions shows CoT unlocks more strategic outputs.

The core mechanic is simple yet powerful. CoT breaks complex launch challenges into smaller, manageable steps, and you instruct the AI to articulate its reasoning for each one. Providing examples of logical progression helps immensely. For instance, a prompt might state: "First, identify the primary persona for this new feature. Second, list their top three unmet needs. Third, propose how this feature directly addresses those needs, explaining the connection." Community reports suggest this structured approach yields more nuanced results.
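The same structure can be generated by a tiny prompt builder. This is a minimal Python sketch under our own assumptions: `chain_of_thought_prompt` is a hypothetical helper, the task sentence is invented for illustration, and the closing "explain your reasoning" line is one phrasing among many. The three steps are the ones from the example above.

```python
ORDINALS = ["First", "Second", "Third", "Fourth", "Fifth"]


def chain_of_thought_prompt(task: str, steps: list[str]) -> str:
    """Build a CoT prompt: the task, ordered reasoning steps, then a request to show the reasoning."""
    if not steps or len(steps) > len(ORDINALS):
        raise ValueError("this sketch supports one to five steps")
    lines = [task]
    for ordinal, step in zip(ORDINALS, steps):
        lines.append(f"{ordinal}, {step}.")
    # Ask for the "flight plan", not just the destination.
    lines.append("Explain your reasoning at each step before giving your final recommendation.")
    return "\n".join(lines)


prompt = chain_of_thought_prompt(
    "We are launching a new feature for our app.",  # hypothetical task description
    [
        "identify the primary persona for this new feature",
        "list their top three unmet needs",
        "propose how this feature directly addresses those needs, explaining the connection",
    ],
)
print(prompt)
```

Keeping each step on its own line makes it easy to add an intermediate step when the AI's reasoning skips ahead.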

An unspoken truth from user experiences? Many indie makers initially struggle with CoT. Our analysis of community discussions reveals two common pitfalls. The first is providing too few intermediate steps. The second is expecting perfect strategic output on the very first attempt. CoT is fundamentally an iterative dialogue. Users often find that the AI's first few "thoughts" are basic, but persistently guiding it through the reasoning process dramatically improves the output's strategic value.

For even more potent strategic thinking, combine Chain-of-Thought with persona-based prompting. Our analysis indicates that indie makers who use this pairing successfully often get noticeably deeper output. They essentially get their AI to think like a specific strategist, step by step. According to patterns in user success stories, this combination can turn basic content generation into a genuine strategic partnership for your launch.

The Power of 'No': Using Negative Prompts for Uniqueness & Clarity (Avoiding AI Hallucinations)

Getting truly unique, clear insights from AI co-pilots can be challenging. Sometimes these tools generate… well, oddities. Through persistent experimentation, many indie makers landed on a powerful remedy: negative prompting. This technique means telling your AI tool precisely what not to generate. Those boundaries steer the output away from generic responses and those infamous AI 'hallucinations'.

Our deep dive into indie maker discussions reveals widespread practical uses for this. Creators use negative prompts to banish tired clichés from their launch announcements. They can also instruct the co-pilot to exclude specific, irrelevant topics or prevent an unwanted tone from appearing. The objective? Genuinely unique content. For example: "Draft a product update email. DO NOT use 'excited to announce' or 'thrilled to share'."
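Negative prompts are easy to assemble programmatically, and because a model may not always honor a DO NOT instruction, it is worth checking the draft afterwards too. A minimal Python sketch, assuming simple case-insensitive substring matching is good enough for your banned phrases; both helper names are hypothetical.

```python
def add_negative_constraints(prompt: str, banned: list[str]) -> str:
    """Append an explicit DO NOT clause listing every banned phrase."""
    quoted = " or ".join(f"'{phrase}'" for phrase in banned)
    return f"{prompt} DO NOT use {quoted}."


def find_violations(output: str, banned: list[str]) -> list[str]:
    """Return the banned phrases that still slipped into the output (case-insensitive)."""
    lowered = output.lower()
    return [phrase for phrase in banned if phrase.lower() in lowered]


banned = ["excited to announce", "thrilled to share"]
print(add_negative_constraints("Draft a product update email.", banned))
# → Draft a product update email. DO NOT use 'excited to announce' or 'thrilled to share'.

# A hypothetical draft that ignored the instruction:
draft = "We're Excited to Announce faster exports!"
print(find_violations(draft, banned))
# → ['excited to announce']
```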

The collective wisdom from indie launch experiences highlights another critical benefit: negative prompts help combat co-pilot 'hallucinations' and diluted, generic filler. Many users report this as their go-to method. It pushes the AI to focus on the information that truly distinguishes an indie offering. No more vague, salesy language.

How do you discover potent negative prompts for your specific needs? Our examination of indie maker workflows shows that many makers review previous co-pilot outputs that missed the mark. These less-than-ideal results often contain clear indicators of exactly what the AI should avoid in future tasks. Smart. Practical.
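One way to surface those indicators is to count phrases that keep recurring across the outputs you rejected. The Python sketch below does this with word n-grams; the default two-word window and the `min_count` threshold are arbitrary assumptions, `candidate_negatives` is a hypothetical helper, and its results are only candidates that you still review by hand before adding them to a DO NOT list.

```python
import re
from collections import Counter


def candidate_negatives(missed_outputs: list[str], n: int = 2, min_count: int = 2) -> list[str]:
    """Return word n-grams that appear in at least `min_count` rejected outputs.

    Each output contributes an n-gram at most once, so one repetitive draft
    cannot dominate. Results are sorted by frequency, then alphabetically.
    """
    counts = Counter()
    for text in missed_outputs:
        words = re.findall(r"[a-z']+", text.lower())
        grams = {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}
        counts.update(grams)
    frequent = [gram for gram, count in counts.items() if count >= min_count]
    return sorted(frequent, key=lambda gram: (-counts[gram], gram))


# Hypothetical outputs that missed the mark:
missed = [
    "We are excited to announce a game-changing update!",
    "Excited to announce our new, game-changing dashboard.",
    "A calm note about the new dashboard.",
]
print(candidate_negatives(missed))       # → ['excited to', 'game changing', 'to announce']
print(candidate_negatives(missed, n=3))  # → ['excited to announce']
```

Trying a couple of window sizes helps: two-word windows catch short buzzwords, while three-word windows recover whole stock phrases.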

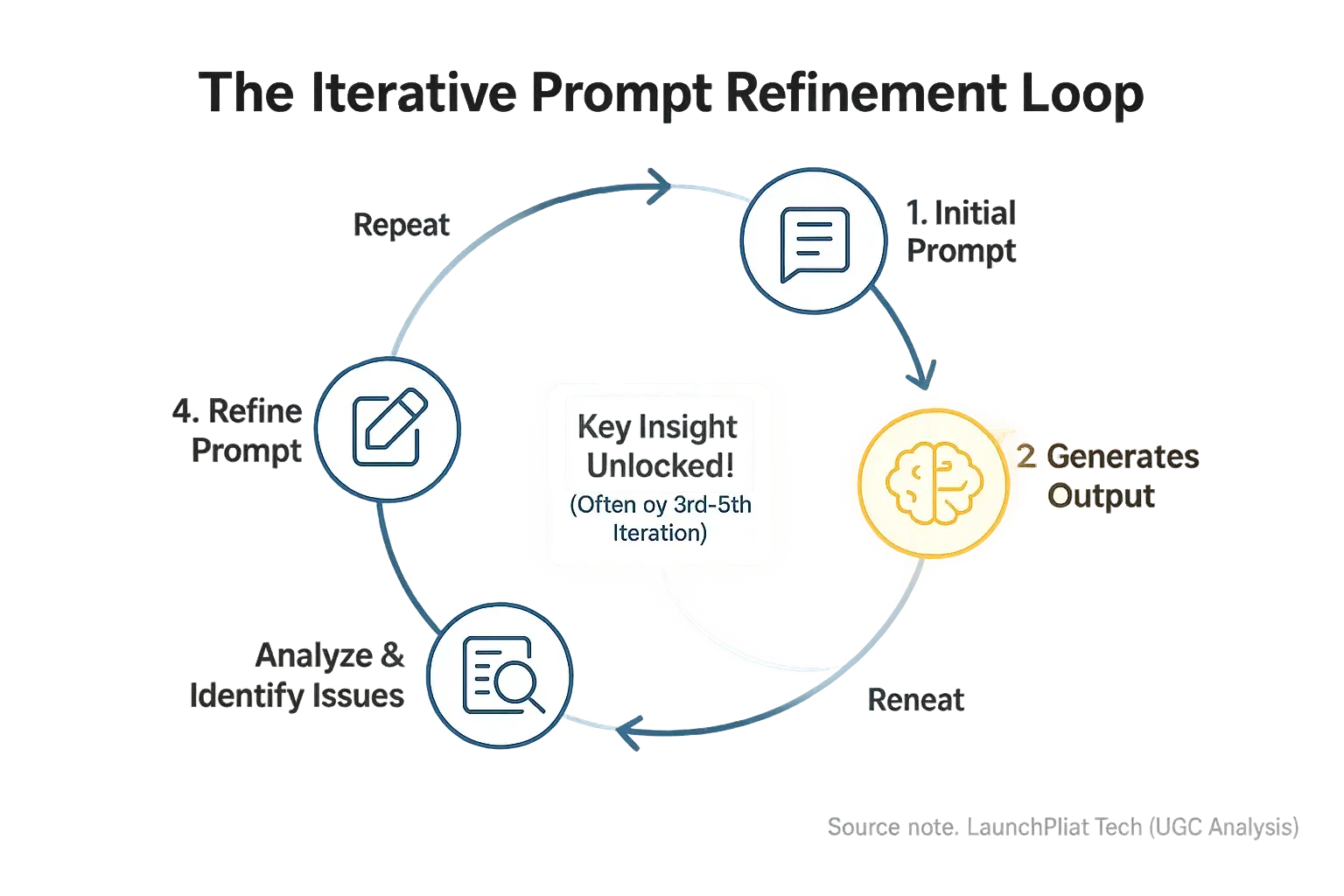

The Iterative Loop: Refining Your Prompts for Indie Perfection (It's a Dialogue, Not a Command)

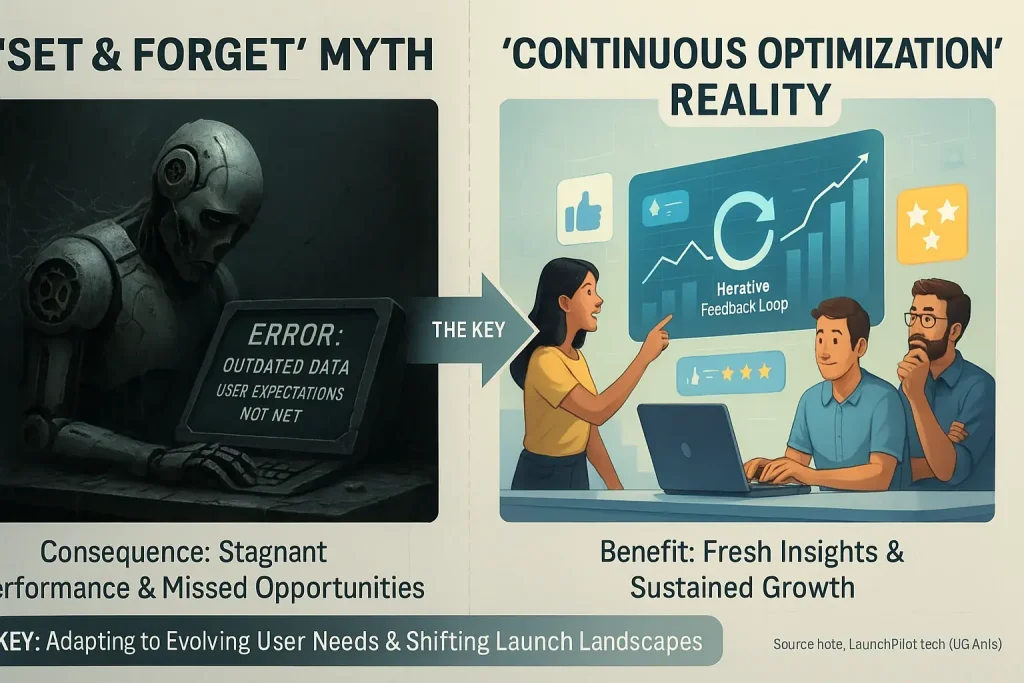

Effective prompt refinement is a dialogue, not a single command. Many indie makers expect perfect AI output instantly, but that expectation seldom matches reality. The process resembles tuning an instrument: small adjustments bring you to the right note. Collective wisdom from the indie launch community confirms that working with co-pilots is an iterative process.

The iterative loop drives success. Your initial prompt generates output. You review that output carefully for gaps or quality issues. You then refine the prompt with specific changes, and the cycle repeats. Even tiny prompt tweaks often produce large output improvements. For instance, users find that changing "analyze sentiment" to "classify sentiment as positive, negative, or neutral for feature X" yields much clearer insights.
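The loop is simple enough to write down. Here is a minimal Python sketch, assuming you supply three functions: `generate` (your call to whatever AI tool you use), `find_gaps` (your review, returning a list of problems), and `refine` (how you edit the prompt). All three, and the fake versions in the usage example, are hypothetical stand-ins, not a real model API. The fake model mirrors the sentiment example above: the vague prompt gets a vague answer, and the constrained prompt gets a clean label.

```python
from typing import Callable

Attempt = tuple[str, str, list[str]]  # (prompt, output, gaps found)


def refine_until_good(
    prompt: str,
    generate: Callable[[str], str],
    find_gaps: Callable[[str], list[str]],
    refine: Callable[[str, list[str]], str],
    max_rounds: int = 5,
) -> list[Attempt]:
    """Run generate -> review -> refine until no gaps remain or rounds run out."""
    history: list[Attempt] = []
    for _ in range(max_rounds):
        output = generate(prompt)
        gaps = find_gaps(output)
        history.append((prompt, output, gaps))
        if not gaps:
            break
        prompt = refine(prompt, gaps)
    return history


# Hypothetical stand-ins so the loop runs without a real model.
def fake_generate(prompt: str) -> str:
    if "positive, negative, or neutral" in prompt:
        return "positive"
    return "The sentiment seems mostly fine, I think."


def fake_find_gaps(output: str) -> list[str]:
    if output in {"positive", "negative", "neutral"}:
        return []
    return ["answer is not one of the allowed labels"]


def fake_refine(prompt: str, gaps: list[str]) -> str:
    return "Classify sentiment as positive, negative, or neutral for feature X."


history = refine_until_good("Analyze sentiment.", fake_generate, fake_find_gaps, fake_refine)
for round_no, (p, out, gaps) in enumerate(history, start=1):
    print(round_no, p, "->", out, gaps)
```

The `max_rounds` cap is a design choice: it keeps the loop from running forever when your review keeps finding gaps, and the returned history doubles as a record of what each change did.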

An unspoken truth emerges from user discussions. Many indie makers abandon prompts prematurely. Frustration commonly builds after only one or two attempts. Our analysis of aggregated user experiences clearly shows this pattern. The real magic, however, frequently appears later. The third, fourth, or even fifth prompt iteration often unlocks truly insightful co-pilot results. Patience becomes vital here. Persistence genuinely pays off for indies.

One practical tip often surfaces in community forums. Consider keeping a prompt diary. Successful indie makers frequently track their prompt iterations. They meticulously note specific changes. These notes detail how alterations improved the co-pilot's output. This method builds a personal library of effective prompt components. Reusing these refined building blocks saves considerable time. This practice accelerates your prompt engineering skill development.
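A prompt diary can be as simple as a notes file. If you prefer something structured, here is a minimal Python sketch, assuming a JSON Lines format (one entry per line) suits you; the `PromptDiary` and `DiaryEntry` names and their fields are our own illustration.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DiaryEntry:
    task: str     # what the prompt is for, e.g. "launch email"
    version: int  # iteration number for this task
    prompt: str   # exact prompt text that was sent
    change: str   # what changed versus the previous version
    result: str   # your note on how the output improved (or didn't)


@dataclass
class PromptDiary:
    entries: list[DiaryEntry] = field(default_factory=list)

    def log(self, task: str, prompt: str, change: str, result: str) -> DiaryEntry:
        """Record a new iteration; version numbers count up per task."""
        version = len(self.for_task(task)) + 1
        entry = DiaryEntry(task, version, prompt, change, result)
        self.entries.append(entry)
        return entry

    def for_task(self, task: str) -> list[DiaryEntry]:
        """All logged iterations for one task, oldest first."""
        return [e for e in self.entries if e.task == task]

    def to_jsonl(self) -> str:
        """One JSON object per line: easy to save, search, and diff."""
        return "".join(json.dumps(asdict(e)) + "\n" for e in self.entries)

    @classmethod
    def from_jsonl(cls, text: str) -> "PromptDiary":
        return cls([DiaryEntry(**json.loads(line)) for line in text.splitlines() if line.strip()])


diary = PromptDiary()
diary.log("sentiment", "Analyze sentiment.", "first draft", "vague, unusable answer")
latest = diary.log(
    "sentiment",
    "Classify sentiment as positive, negative, or neutral for feature X.",
    "constrained the answer to three labels",
    "clear label, easy to act on",
)
print(latest.version)  # → 2
restored = PromptDiary.from_jsonl(diary.to_jsonl())
```

To persist the diary between sessions, you could write `diary.to_jsonl()` to a file with `pathlib.Path.write_text` and load it back with `read_text`.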

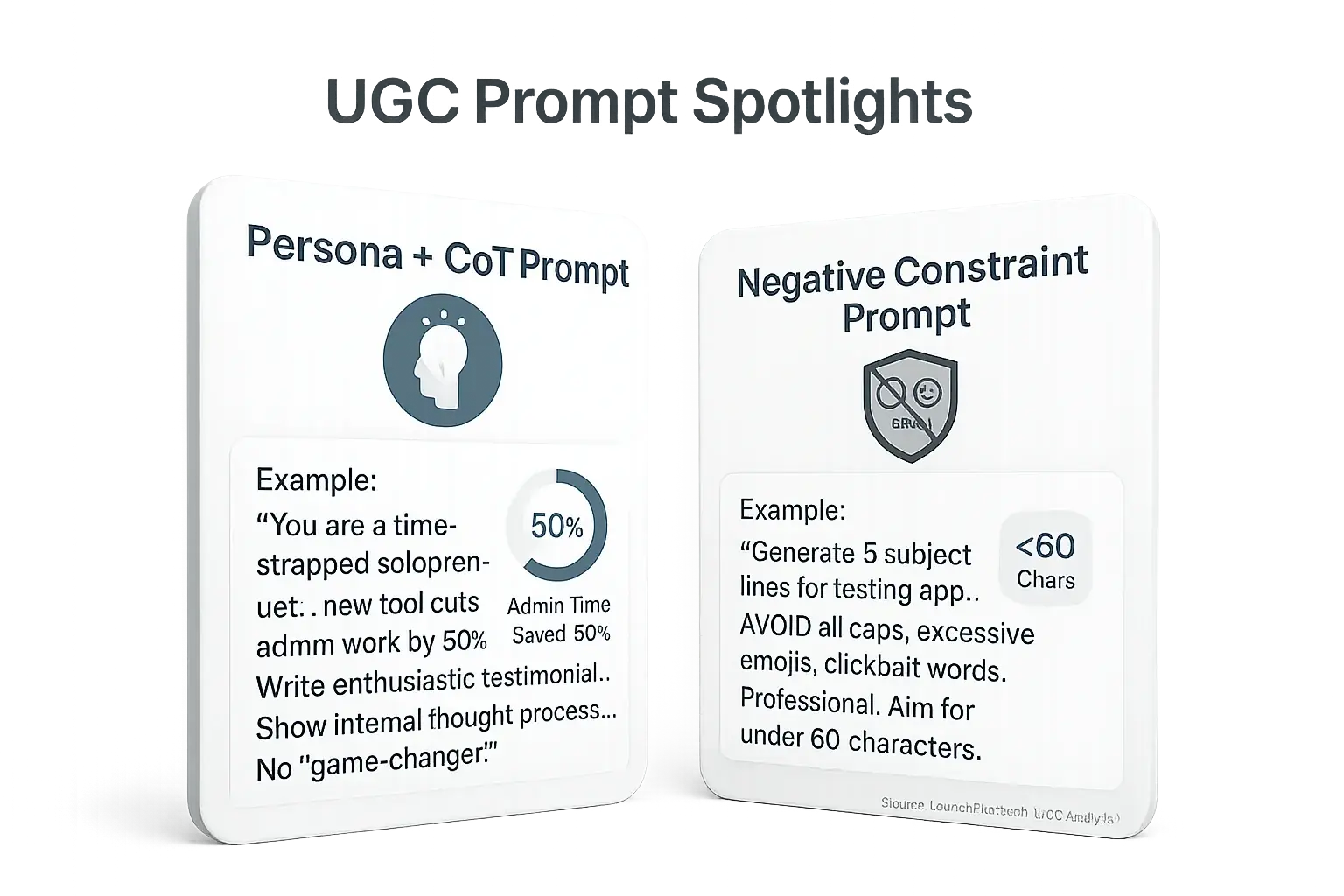

UGC-Sourced 'Genius' Prompts: Real-World Examples from Indie Makers (Copy & Paste, Then Tweak!)

This section unveils a genuine goldmine. Inside are community-sourced prompts. Real indie makers battle-tested these approaches. No more wild guessing. Fellow creators unlocked incredible results using prompts very much like these.

Our analysis of aggregated user experiences points to specific prompt structures. Makers report fantastic outcomes with persona-driven requests. Consider this for crafting unique product descriptions:

You are a pragmatic, time-strapped solopreneur who just discovered a new project management tool. This tool promises to cut admin work by 50%. Write a short, enthusiastic testimonial (3-4 sentences) focusing on the relief and newfound freedom. DO NOT use generic phrases like 'game-changer' or 'must-have'. Show the internal thought process behind your enthusiasm.

This prompt builds deep empathy with the buyer and generates authentic-sounding copy. The "internal thought process" request encourages Chain-of-Thought output. Another powerful method highlighted in indie discussions uses strong negative constraints. This example refines email subject lines:

My app helps developers automate testing. Generate 5 subject lines for an email announcing a new time-saving feature. AVOID all caps, excessive emojis, and clickbait words like 'secret' or 'hack'. The tone should be professional yet intriguing. Aim for under 60 characters.

Such specific instructions prevent bland, generic AI output and steer the AI toward the style you want. Users find this precision invaluable.
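Because a model may not respect every constraint, a quick automated check of the generated subject lines can catch misses before you pick one. A minimal Python sketch: the under-60-character limit and the banned words come from the prompt above, while the one-emoji threshold, the rough emoji character ranges, and the `subject_line_problems` name are our own assumptions.

```python
import re

BANNED_WORDS = {"secret", "hack"}  # clickbait words named in the prompt
MAX_LEN = 60                       # "Aim for under 60 characters"
MAX_EMOJI = 1                      # assumption: more than one emoji counts as excessive

# Rough emoji detector covering common emoji blocks only (an approximation).
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")


def subject_line_problems(line: str) -> list[str]:
    """Return a list of constraint violations; an empty list means the line passes."""
    problems = []
    if len(line) >= MAX_LEN:
        problems.append(f"{len(line)} characters (limit is under {MAX_LEN})")
    letters = [c for c in line if c.isalpha()]
    if letters and all(c.isupper() for c in letters):
        problems.append("all caps")
    if len(EMOJI_RE.findall(line)) > MAX_EMOJI:
        problems.append("too many emojis")
    # Whole-word match only, so "hacks" or "secrets" would slip through.
    hits = sorted(set(re.findall(r"[a-z]+", line.lower())) & BANNED_WORDS)
    if hits:
        problems.append("clickbait words: " + ", ".join(hits))
    return problems


candidates = [
    "Cut your test runtime in half with auto-retries",
    "NEW FEATURE INSIDE",
    "The secret hack for faster tests",
    "Ship faster 🚀🚀🚀",
]
for line in candidates:
    print(f"{line!r}: {subject_line_problems(line) or 'OK'}")
```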

Now, a crucial insight from the indie launch community. These prompts offer potent starting points. Pure copy-pasting, however, seldom delivers perfect, ready-to-use results for your venture. Your unique brand voice and specific product details always require some thoughtful tweaking. Understanding the core principles behind each prompt element is key, because that knowledge lets you adapt it successfully. True customization often unlocks the most compelling AI-generated content. What works wonders for one indie product might need a slight adjustment for another.

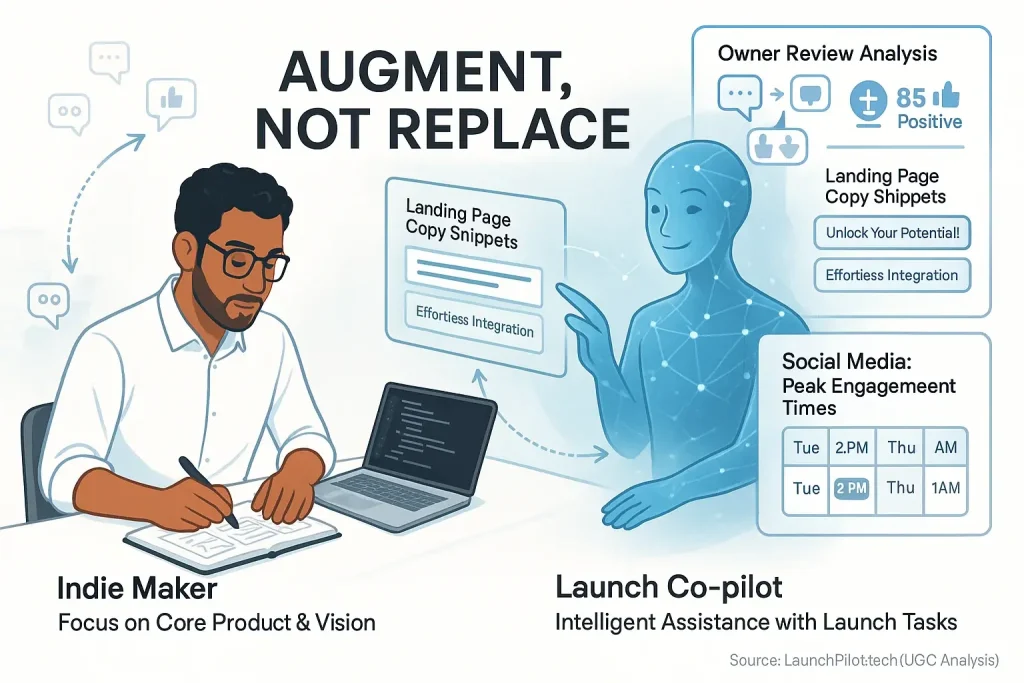

Your AI Co-pilot: From Assistant to Creative Partner (The Indie Maker's Edge)

Advanced prompting truly transforms your AI co-pilot, shifting it from a basic tool into a powerful creative partner. No longer just a task-doer, your co-pilot becomes your brainstorming buddy, your strategic sounding board, and your content powerhouse. This mastery gives indie makers a clear competitive edge in crowded markets.

The world of AI evolves fast. But with these advanced prompting skills, you are not just keeping up; you are leading the charge. Keep experimenting. Keep creating. Let your AI co-pilot truly elevate your next launch! LaunchPilot.tech is here to support your journey.