Beyond the Code: Why AI Ethics Isn't Just for Big Tech (A Reality Check for Indie Makers)

"AI ethics? That sounds like a Big Tech compliance issue. Not for my indie hustle." Many creators think this way. But insights from countless indie community discussions tell a different story. How you use AI ethically profoundly affects your brand. Every choice, from how AI drafts your copy to how it processes your users' reviews and discussions, shapes user trust. It also builds or breaks your reputation. This isn't just about legalities. It's about fundamental respect.

Imagine launching. Users share valuable feedback. Then they discover that AI manipulated their comments, or that your AI tools misused data from their discussions. That sparks a trust crisis. Our synthesis of maker experiences shows these breaches hit indie brands especially hard. In the indie sphere, genuine user trust isn't just a bonus. It is your lifeline.

So, what builds that trust? Three pillars uphold ethical AI use. Transparency in process. Fairness in representation. Respect for user privacy. These are not mere ideals. The collective wisdom from indie forums underscores their daily, practical importance. They are essential for building sustainable indie brands. Brands that earn lasting loyalty. Understanding these principles safeguards your connection with your community.

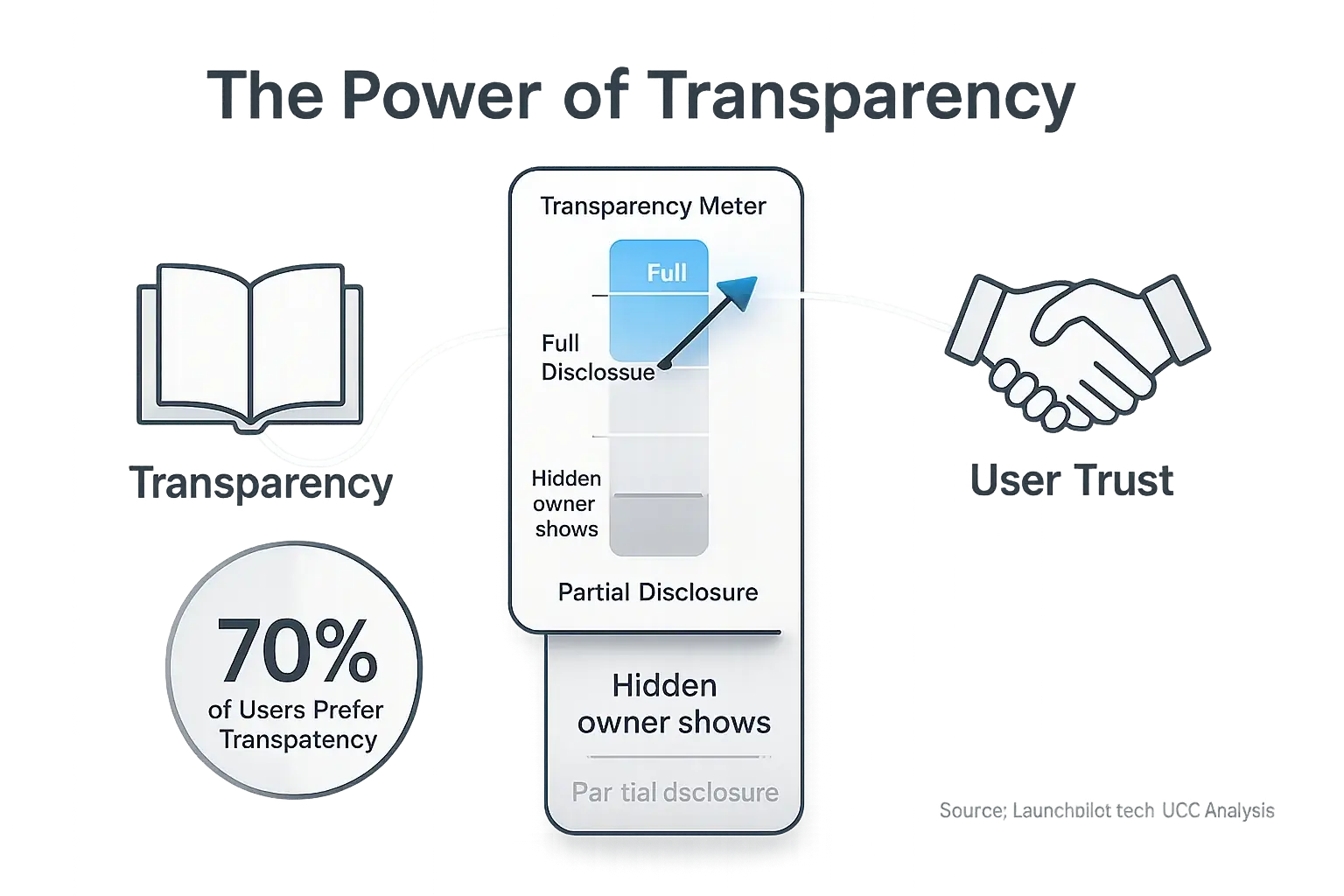

The Transparency Imperative: Telling Users When AI is Behind the Curtain (And Why It Builds Trust)

Honesty pays. This applies especially to AI. Users increasingly understand these systems, and they expect clear transparency from makers. Hiding your AI use can severely backfire. Our synthesis of indie maker feedback shows this pattern repeatedly.

What exactly should you disclose, then? Are you using AI to draft launch emails? Does it help generate social media posts for your product? Does it analyze community discussions for crucial insights? Clarity about its role is vital. Many indie makers report users feeling genuinely misled when they discover AI-powered content they believed was purely human-crafted. That discovery erodes user trust. Fast.

How can you disclose this effectively? A simple disclaimer often achieves this transparency. Or add a short note wherever AI contributed to your content.

The benefit of this openness is truly significant. Such transparency builds genuine, lasting user trust. Users deeply appreciate maker honesty. They then see you as a responsible, forward-thinking indie maker. Indie makers who embrace transparency often report more positive community engagement. This clear approach is not a burden. It actually becomes a distinct competitive advantage for growing indie brands.

The Algorithmic Blind Spot: How AI Bias Can Skew Your Indie Launch (And What to Do About It)

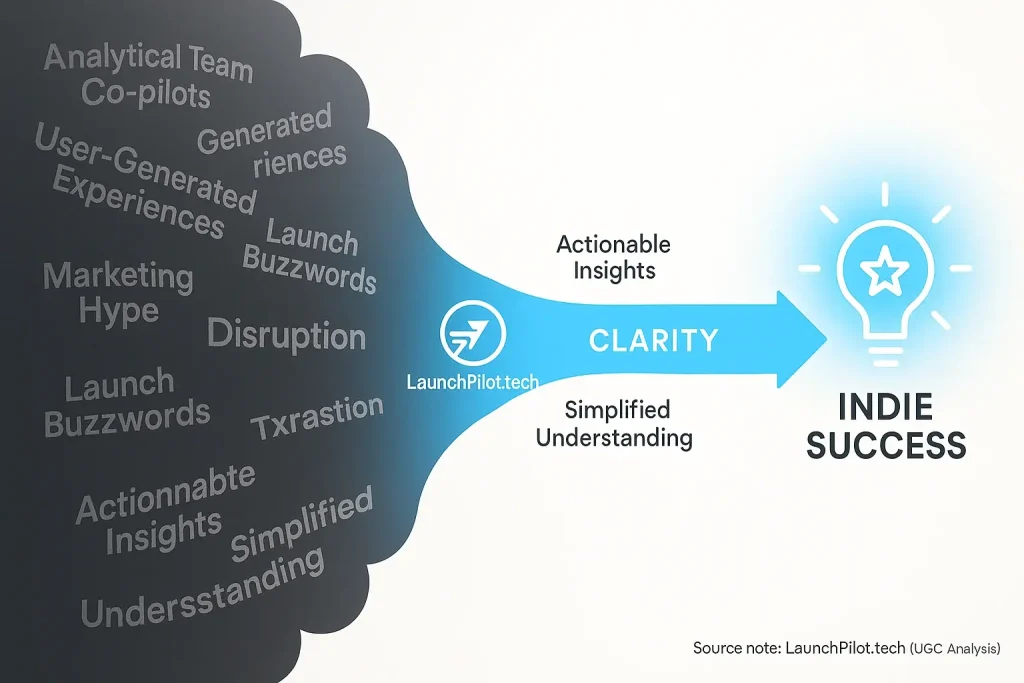

AI isn't neutral. It learns from data, and that data often carries biases. These hidden biases can subtly skew the outputs of AI launch tools. Recommendations might miss your true audience. Generated content could unintentionally offend. Audience targeting may become unfairly narrow. All of this directly affects your launch's success.

Imagine an indie maker using an AI tool for audience insights. The tool might consistently suggest a tiny demographic, leaving huge potential customer segments completely overlooked. This is a real problem. Many in the community have shared instances where AI-generated content alienated groups. Unintended stereotypes creep into messaging. Users have reported these subtle yet damaging patterns. Why is this bias so hard to catch? It is rarely obvious, and it often originates in the tool's training data. It is an algorithmic blind spot.

So, what can indie makers do? Vigilance is essential. Regularly review your AI tool's outputs. Look for skewed representation or stereotypes. Diversify your own input data when possible; this helps broaden what the AI sees. Human oversight catches many subtle issues. Always test AI recommendations with diverse user groups. Our deep dive into user experiences shows this proactive approach works.
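To make "look for skewed representation" concrete, here is a minimal Python sketch. It assumes you can export the audience segments an AI tool recommends as a simple list of labels. It also assumes you know roughly what share each segment makes up of your real or target users. The `representation_gaps` helper, the segment names and the 0.5 tolerance are hypothetical choices for illustration. They are not a standard fairness metric.

```python
from collections import Counter


def representation_gaps(recommended, baseline, tolerance=0.5):
    """Return segments that appear far less often in AI recommendations than expected.

    recommended: segment labels exported from an AI tool's suggestions (hypothetical).
    baseline: maps each segment to the share you expect, e.g. from your own signup data.
    tolerance: flag a segment when its observed share is below tolerance * expected share.
    """
    counts = Counter(recommended)
    total = len(recommended)
    gaps = {}
    for segment, expected in baseline.items():
        # Counter returns 0 for segments the tool never recommended.
        observed = counts[segment] / total if total else 0.0
        if observed < tolerance * expected:
            gaps[segment] = (round(observed, 2), expected)
    return gaps


if __name__ == "__main__":
    # Hypothetical example: the tool keeps pointing at students and ignores retirees.
    recommended = ["students"] * 8 + ["freelancers"] * 2
    baseline = {"students": 0.4, "freelancers": 0.3, "retirees": 0.3}
    print(representation_gaps(recommended, baseline))
    # → {'retirees': (0.0, 0.3)}
```

A flagged segment isn't proof of bias. It is a prompt to look closer and to test your messaging with that group directly.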

Indie makers must actively work to mitigate this bias. This extends beyond ethical concerns. Fairness ensures your launch reaches everyone it should. Ignoring bias severely limits your potential market.

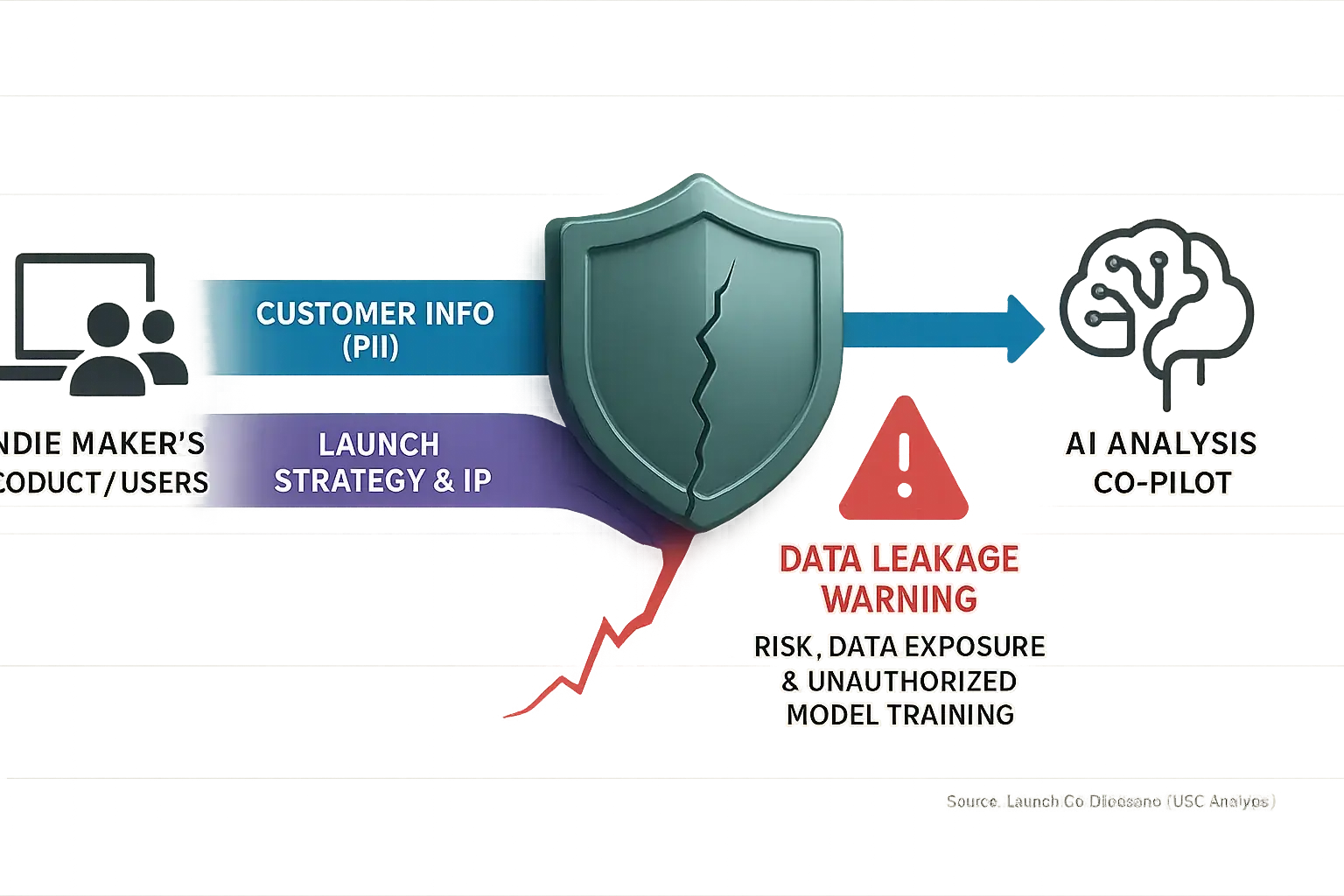

Your Data, Their AI: Navigating Data Privacy with AI Launch Co-pilots (What Indie Makers Must Know)

Where does your sensitive launch data go, especially with AI launch co-pilots? Data privacy is a huge worry, and many indie makers voice it. It's about more than just GDPR. Your product secrets need safeguarding. Your users' trust is on the line. These are consistent themes in community discussions.

Many indie makers express deep concerns. Could your proprietary launch plans end up training a vendor's public AI model? What about your customer lists? These questions echo across countless user discussions. Data retention policies often baffle creators. Always scrutinize the terms of service. Confirm whether your data is genuinely anonymized. Demand clarity on data deletion options.

So, what actions can you take? Assume sensitive data might be exposed. This is a smart default, echoed by many successful indies. Select AI tools with clear, strong privacy safeguards. Always redact critical details before input. Prioritize tools that offer private model training, and look for data isolation features. Protecting user data builds enduring trust. This truth shines through in countless positive user stories.
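Here is a minimal sketch of "redact critical details before input" in Python, assuming you paste plain text into an AI tool. The `redact` helper, its placeholder tokens and the regular expressions are hypothetical and deliberately simple. They catch common email and phone formats plus any secret terms you list. They will miss names, addresses and many other details, so treat this as a first filter, not a guarantee.

```python
import re

# Deliberately simple patterns (assumptions, not a complete PII detector).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# An optional '+', then at least 9 characters of digits, spaces, dots, dashes
# or parentheses, starting and ending with a digit.
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text, secret_terms=()):
    """Replace emails, phone-like numbers and caller-supplied secret terms with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    for term in secret_terms:
        # Case-insensitive literal match for each term you want hidden (e.g. an unreleased product name).
        text = re.sub(re.escape(term), "[REDACTED]", text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    draft = "Beta invite for jane@example.com, call +1 555 010 2030 about Project Falcon pricing."
    print(redact(draft, secret_terms=["Project Falcon"]))
    # → Beta invite for [EMAIL], call [PHONE] about [REDACTED] pricing.
```

The phone pattern errs toward over-redacting, since it can also catch dates or order numbers. For data headed to a third-party tool, that is usually the safer mistake.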

A data privacy incident can be devastating. Even a small breach seriously harms an indie brand. Your reputation is hard-earned. It can be lost quickly. Proactive privacy measures are your strongest shield. User feedback constantly reinforces this protective mindset.

The Authenticity Tightrope: Balancing AI Efficiency with Your Indie Brand's Soul (Avoiding Deception)

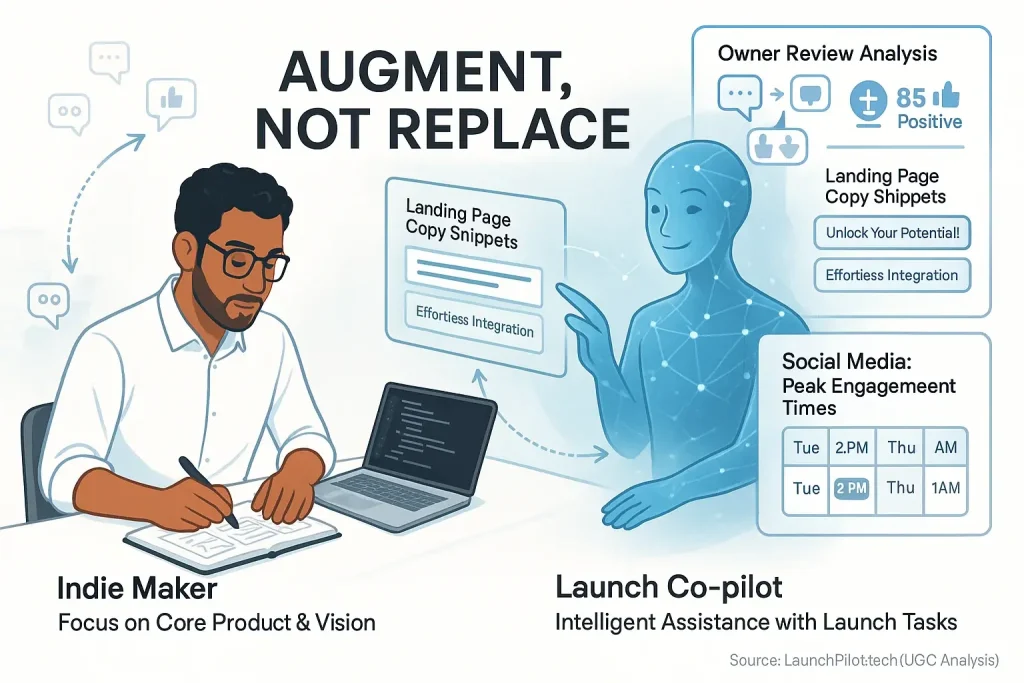

AI offers incredible efficiency. But at what cost to your brand's unique soul? This is the core dilemma for many indies. Our synthesis of indie maker feedback reveals a clear risk: generic AI output can easily sound inauthentic. It might even unintentionally deceive your audience. Users notice. Your brand's true voice gets lost. That's a problem.

Many indie makers, eager for speed, let AI write all their launch copy. Community stories show what happens next. They hear from users: 'This doesn't sound like you.' Or worse: 'It feels so… corporate.' That's a direct hit. Authenticity suffers. Remember, users connect with the human behind your indie product. They crave that personal touch.

How do you strike this balance, then? Use AI as a co-pilot, not a ghostwriter. Always review its drafts. Edit them heavily. Inject your unique voice. Add your humor. Share specific, personal experiences. Think of AI as a powerful first-draft generator. The human touch remains essential. Many successful indies recommend this exact approach.

Authenticity builds loyal communities. It is the indie superpower. Really. Don't let AI efficiency dilute this core strength. Your unique perspective matters most. Protect it fiercely.

Pioneering Responsibly: Your Role in Shaping the Future of Ethical AI Launch Tools (A Call to Indie Action)

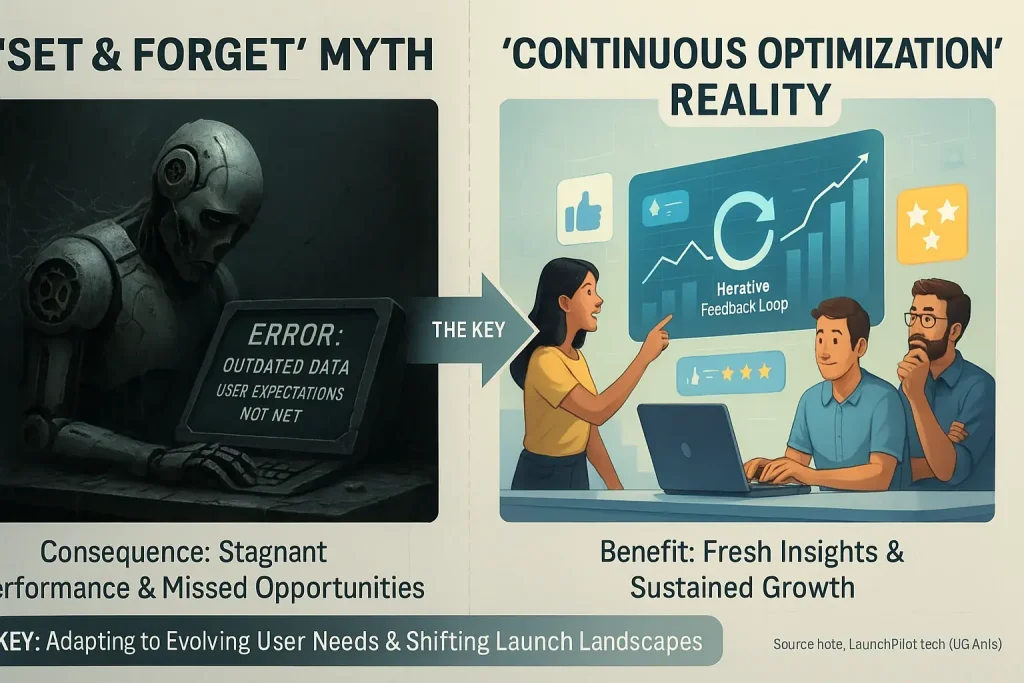

The future of AI launch tools isn't just up to the Big Tech giants. Indie makers have a voice. Use it. Our community's collective experience actively shapes this technology, and user feedback genuinely influences how launch tools develop. This insight comes directly from observing indie makers' impact.

How can you contribute to ethical AI development? Demand transparency from your tool vendors. Report biased outputs whenever you see them. Share your ethical concerns openly in community forums. Choose tools that clearly align with your core values. When enough indie makers highlight a specific ethical issue, vendors listen. We've seen instances where concerted user advocacy led to positive changes. Your voice has real impact in this ecosystem.

Be a conscious consumer of AI. Become a responsible innovator in your own right. Together, our actions can shape the future of AI launch support: a future where AI genuinely empowers all indie makers. Ethically. Effectively. That is our shared goal.